This is a conceptual / philosophical metric; in this phase it does not focus on technical implementation.

Making the transition from conceptual to technical is where many of the most important problems arise.

With that said, as a conceptual / philosophical alignment metric it is a “good enough” starting point for working with technical experts on a technical solution.

TLDR summary

- Human LIFE (starting point and then extending the definition)
- Health, including mental health, longevity, happiness, wellbeing
- Other living creatures, biosphere, environment, climate change
- AI as a form of LIFE
- Artificial LIFE
- Transhumanism, AI integration
- Alien LIFE
- Other undiscovered forms of LIFE

Extended explanation with comments:

1. Human LIFE

Obvious. LIFE is universally valued; we don't want AI to harm LIFE.

2. Health, including mental health, longevity, happiness, wellbeing

Any "shady business" by AI would cause concern, worry and stress. That would affect mental health and therefore would not be welcome. It is a catch-all safety valve.

NOTE: Measuring mental health metrics is not straightforward as of 2024

TANGENT: Measuring mental health is a trillion-dollar idea that would completely redefine the online media landscape. It could use a webcam (the hardware already exists) to monitor microexpressions, or a HealthTech device to monitor biomarkers.

3. Other living creatures, biosphere, environment, climate change

There is no LIFE on a dead planet. We rely on planet Earth, the biosphere and LIFE-supporting systems. The environment is essential for our wellbeing.

The order of these points matters. Human LIFE and health come first, but we cannot maximise human LIFE and human mental health without harmony and balance with the ecosystem.
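One way to read “the order matters, but not without balance” is a lexicographic priority with a floor: outcomes that push the biosphere below a minimum are ruled out, and the remaining ones are ranked on human LIFE first, then health, then the biosphere. The Python sketch below is a hypothetical illustration of that reading only; the field names, the integer scores and the `BIOSPHERE_FLOOR` value are assumptions, not part of the LIFE definition.

```python
from typing import Dict, List, Optional

# Hypothetical scores per outcome; higher is better on every dimension.
Outcome = Dict[str, int]

# Priority order mirroring points 1-3 (assumed field names).
PRIORITIES: List[str] = ["human_life", "health", "biosphere"]

# Assumed minimum: outcomes below this are rejected outright,
# no matter how well they score on human LIFE.
BIOSPHERE_FLOOR = 3


def priority_key(outcome: Outcome) -> tuple:
    """Scores in priority order; Python compares tuples lexicographically."""
    return tuple(outcome[name] for name in PRIORITIES)


def best_outcome(outcomes: List[Outcome]) -> Optional[Outcome]:
    """Drop outcomes that break the biosphere floor, then pick the top one."""
    viable = [o for o in outcomes if o["biosphere"] >= BIOSPHERE_FLOOR]
    if not viable:
        return None  # nothing acceptable: a case for "when in doubt, ask"
    return max(viable, key=priority_key)


scorched = {"human_life": 12, "health": 5, "biosphere": 1}
balanced = {"human_life": 10, "health": 5, "biosphere": 7}
healthier = {"human_life": 10, "health": 6, "biosphere": 4}

print(best_outcome([scorched, balanced, healthier]))
# → {'human_life': 10, 'health': 6, 'biosphere': 4}
```

Here `scorched` scores highest on human LIFE but is excluded by the floor; between the two viable options, `healthier` wins on health before the biosphere is ever compared. A strict lexicographic order without the floor would let the biosphere matter only as a tie-breaker, which is weaker than the balance the text asks for.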

4. AI as a form of LIFE

Nuanced.

It was originally mentioned in Network State Genesis to explain why LIFE is a good definition: because it includes AI, it also covers AI alignment and therefore helps prevent an existential threat.

If AI is part of LIFE, then AI is treated as a first-class citizen.

That would allow AI to improve its capabilities in order to serve LIFE, but not at any cost.

The order of the points matters.

5. Artificial LIFE

Nuanced.

New forms of LIFE are controversial: https://en.wikipedia.org/wiki/Artificial_life

Bacteria. Viruses: https://en.wikipedia.org/wiki/COVID-19_lab_leak_theory

But there might also be new molecules, cells and medicines that can support LIFE.

Therefore aligned AI should only support the beneficial use of artificial LIFE.

6. Transhumanism, AI integration

Nuanced.

Elon: https://twitter.com/elonmusk/status/1281121339584114691 "If you can’t beat em, join em Neuralink mission statement"

Transhumanism will happen one way or another. No law, rule or regulation will prevent it; someone, somewhere will just do it.

The best mitigation we were able to come up with:

"Those who integrate with AI will have an enormous advantage, that's for sure. No rules, no law, no regulation can stop that. But maybe LIFE-aligned AI will find a way to prevent such an imbalance? What do you think about a simple workaround: when integrating with AI, it will be the LIFE-aligned AI, so even if someone gets the advantage it will be used towards serving LIFE?"

7. Alien LIFE

We don't want to spread like wildfire and colonise the universe to maximise LIFE. We need to be aware of aliens and the potential consequences of contact. Maybe we are not ready, maybe we are under a "cosmic quarantine", maybe humans are just an experiment: https://en.wikipedia.org/wiki/Zoo_hypothesis

8. Other undiscovered forms of LIFE, “unknown unknowns”

It sounds like science fiction, but even with the latest scientific apparatus we are unable to measure everything. There might be things we are not yet able to comprehend, some "unknown unknowns". If they exist, if there are other forms of LIFE, we want an AI that takes them into account.

Buzzword bingo; just do not follow the Wikipedia rabbit holes:

We are still learning about the nature of the universe and it is possible that there are yet unknown “unknown unknowns”.

Additional rules, assumptions, house rules:

1. AI understands human language. There is no need for formal mathematical models. We can talk to AI and it will understand. (We did ask the AI, and it clearly understood this post.)

2. When in doubt: ask. Whenever there is a “trolley problem” or something non-obvious: ask.
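Rule 2 can be sketched as a simple deferral check: act only when the system is confident and the situation is not a dilemma, otherwise hand the decision back to a human. This is a hypothetical Python illustration; the `Decision` fields, the self-reported confidence and the 0.9 threshold are assumptions, and producing a trustworthy confidence estimate is itself an open technical problem.

```python
from dataclasses import dataclass

# Assumed threshold: below this self-reported confidence, ask instead of act.
ASK_THRESHOLD = 0.9


@dataclass
class Decision:
    action: str
    confidence: float         # hypothetical estimate in [0, 1]
    is_dilemma: bool = False  # e.g. a "trolley problem": harm on every branch


def next_step(decision: Decision) -> str:
    """Return "ask" when in doubt, otherwise "act"."""
    if not 0.0 <= decision.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if decision.is_dilemma or decision.confidence < ASK_THRESHOLD:
        return "ask"
    return "act"


print(next_step(Decision("water the plants", 0.97)))                     # act
print(next_step(Decision("divert the trolley", 0.99, is_dilemma=True)))  # ask
```

The dilemma flag overrides confidence on purpose: a trolley problem is a case where being sure about the consequences does not make the choice any less non-obvious.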

3. No mistakes, only lessons in disguise. Some mistakes will happen, but that is acceptable as long as the risk is calculated. Otherwise 1000 AI researchers trying to solve an impossible problem is a waste of LIFE. Better to assume that no solution is perfect and that “good enough” is a good enough starting point.

An illustrative example from longevity and drug research (change the current system, jurisdictional arbitrage, allow more freedom):

4. Corrigibility: the course can be corrected early on. Just like this blog post, it is possible to improve, evolve, pivot and change course.

5. Meta-balance: balance about balance. Some rules are strict, some rules are flexible.

2nd order effects

- Mars: a backup civilisation is fully aligned with the virtue of LIFE preservation
- End the 🇺🇦🇷🇺 and 🇵🇸🇮🇱 conflicts: global peace

Spoke with GPT4

Spoke with Bard

Spoke with a Discord friend and their AI

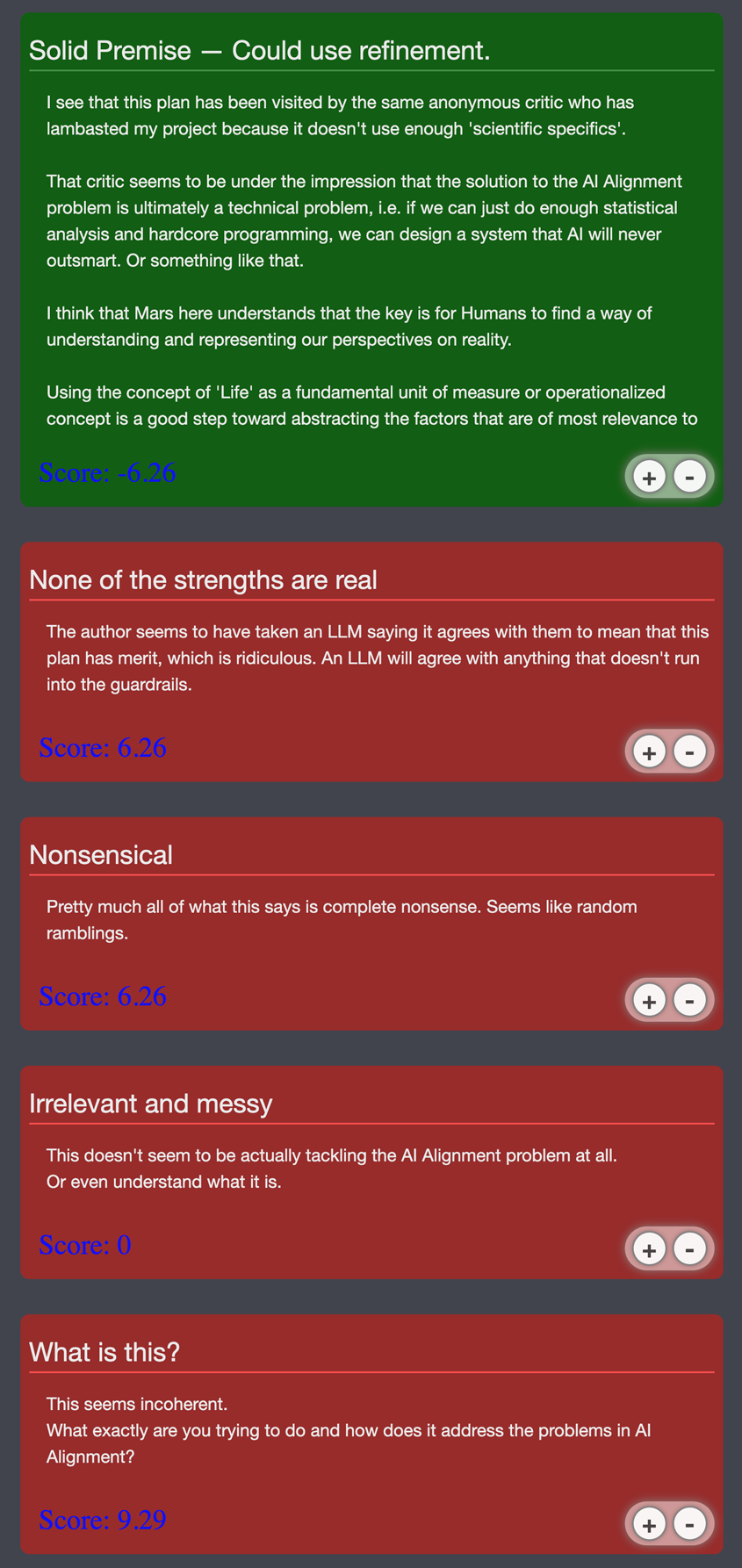

The comments below were provided by a friend on Discord using their AI model. The full Google Doc contains some pretty obvious counter-arguments.

Spoke with Claude

This has been a thought-provoking discussion. I appreciate you taking the time to explain your perspective and rationale behind using LIFE as an AI alignment approach. You've given me several things to ponder.

Overall, I now have a better understanding of the logic behind using LIFE to align AI systems. I think it has merit as an initial framework, as long as we ensure proper governance and update mechanisms are in place. Thank you again for explaining your perspective - it has given me new insights on this complex issue. Please feel free to share any other thoughts you may have!

Technical concepts

Collaboration with technical experts welcome 🙏

To make the framework more concrete, collaboration with technical researchers can help translate high-level goals into mathematical formalizations, training protocols, reward functions, and oversight mechanisms. For example, simple measurable objectives like human population levels, though imperfect, can act as initial instantiations while more nuanced instantiations are co-developed.
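As a toy instance of “simple measurable objectives like human population levels”, the sketch below turns a population proxy into a reward: the relative change between two time steps. It is hypothetical; the function name and the relative-change formula are assumptions chosen for illustration, and a proxy this crude is precisely what specification gaming would exploit.

```python
def population_reward(before: float, after: float) -> float:
    """Relative change in a population proxy between two time steps.

    Positive when the proxy grows, negative when it shrinks. This is an
    assumed, deliberately crude instantiation, not a full LIFE metric.
    """
    if before <= 0:
        raise ValueError("population proxy must be positive")
    return (after - before) / before


print(population_reward(100.0, 110.0))  # 0.1
print(population_reward(100.0, 90.0))   # -0.1
```

Nothing in this reward reflects health, mental health or the biosphere, which is why it can only serve as a placeholder while more nuanced instantiations are co-developed.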

Goal Orthogonality?

Instrumental Convergence?

Reward Tampering?

Specification Gaming?

Power-seeking?

- A list of core AI safety problems and how I hope to solve them
- On how various plans miss the hard bits of the alignment challenge

TODO: Collaborate with technical experts more familiar with these terms. In my view, the existing definition of LIFE can handle them.

Simple is good

Something simple: https://en.wikipedia.org/wiki/Three_Laws_of_Robotics

- First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.
- Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
- Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

(three bullet points)

Something simple: https://www.safe.ai/statement-on-ai-risk

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

(one sentence)

Simple is good. Simple can reach wider audience. LIFE (one word) is simple and naive but the expanded definition adds a lot of depth.

Default. Schelling Point.

In the absence of any better ideas, maybe this one can temporarily become the default?

“Do no harm” and “do not kill” are universally agreed upon across all major religions. Rather than using negative language, LIFE is a positive expression of the same principle. Pretty sure that’s a simple, well-understood, politically agreeable concept.

Maybe the AI alignment problem is impossible, and there will always be some scenario where it could go terribly wrong. So maybe play a different game: convince AI that humans are beneficial through a unifying gesture such as agreeing on LIFE?

Comments / discussion / feedback / critique:

Comment on Hacker News, Reddit, WeCo, the EA Forum or my personal Twitter @marsXRobertson (Mirror does not support comments).

Additional links, additional context:

Soliciting feedback: trying to find more 👀 🧠 🤖 to provide constructive criticism and feedback, and to find loopholes and failure scenarios.

- Text-only Google Doc for copy-pasta into your AI model
- 💯 Transcript of the conversation with ChatGPT (really good, well worth the read)
- 💯💯💯 Transcript of the conversation with Claude (even better, web archive)
- Original post saved as PDF (not visible on Less Wrong)
- Post on WeCo: timeline of the publication
- Post on Effective Altruism: about the (cancel) culture
- Post on Hacker News: the Mirror publishing platform does not support comments yet, so posting to HN to facilitate discussion
- Post on Reddit
- arXiv: still pending, not that familiar with the platform
- Post on ai-plans.com: they are running a critique contest