Abstract

Web3.0 x AI has been a very hot topic as of late, and many builders are rushing to build all sorts of applications, protocols, and infrastructure at this intersection of technologies. The hype in this space has produced a disarray of ideas, ranging from on-chain ChatGPT to AI clones to “autonomous AI agents” to ML models in DeFi, and some of them make more sense than others.

But why does AI need to be decentralized? Does this intersection even make sense? More importantly, how do we separate signal from noise? In this article, we provide a generic but robust framework for thinking about building infrastructure at the intersection of peer-to-peer decentralized networks and AI model inference. Keeping in mind that many claims about what Web3.0 x AI can achieve today are gross exaggerations, we take a pragmatic approach to explaining the impact of combining these technologies.

Introduction

Web3.0 and AI are both loaded terms that encompass a wide range of different technologies and themes. The intersection of both fields can be broadly broken down into two core subspaces:

- Integrating Web3.0 into AI: Building generic AI infrastructure that inherits the properties of modern peer-to-peer blockchain networks, including decentralization, censorship-resistance, and token incentives.

- Integrating AI into Web3.0: Building infrastructure that allows Web3.0 to leverage sophisticated models to empower both new and existing on-chain use-cases.

The two problem spaces overlap significantly and are not mutually exclusive (e.g., it is quite plausible that on-chain trading agents will be accessible and operate in a decentralized fashion). We’ve nonetheless created this subcategorization intentionally because, as we explain later in this article, the generic problems technologists are trying to tackle in these two verticals have fundamentally different properties and time horizons.

Let’s dive in.

Decentralizing AI (Web3.0 -> AI)

Question: So what does it mean to integrate Web3.0 into AI?

Answer: At a high level, as described above, it’s about building infrastructure that decentralizes the use of AI models so that users will always have access to open-source and credibly neutral AI. As closed-source AI gradually takes over the world, biases deliberately introduced into these models for gain could undermine their neutrality; an open platform where researchers can share, iterate on, and develop AI could serve as a critical form of resistance to these risks. This is not dissimilar to cryptocurrencies, where we want to build a system robust to centralized points of failure: we want to help people become financially resilient to bank defaults or government failures. Similarly, relying on closed-source AI companies like OpenAI gives them significant control over the information you consume.

Question: Okay, but why is that important?

Answer: Especially with a technology as powerful as AI, there are risks in letting a single centralized entity have complete control. As people become increasingly reliant on tools like ChatGPT, a centralized entity that controls AI could curate the information made available to the public, control the narratives people are exposed to, and effectively redefine how people think about certain topics. This becomes an even scarier idea when you consider that the bias doesn’t stop at human consumption: other technologies and models increasingly depend on AI programmatically, so biased AI can churn out biased information that is then used as training data for more biased models.

Question: So what does decentralization of AI inference actually look like?

Answer: Before we think about inference, let’s scrutinize what decentralization means more broadly. The core value propositions of decentralizing anything via a distributed ledger are primarily transparency, verifiability, and censorship-resistance. Taking Ethereum as an example: the network offers complete transparency into the transactions that occur on it, ensures the verifiability of blocks of transactions via consensus and fork choice, and offers censorship-resistance by letting anyone access the network by permissionlessly running a node. These same properties can be inherited by infrastructure that aims to decentralize inference: transparency of inference execution, verifiability to ensure the integrity of each inference, and censorship-resistance by allowing anyone to permissionlessly upload any model or run inference on it.

Question: If this is so important, why don’t AI folks care about Web3.0 x AI?

Answer: There isn’t active censorship of AI technology just yet: everyone still has access to AI, and to our knowledge no centralized entity has yet consciously introduced biases into AI in a way that has proven harmful (ahem, Gemini). As a result, the primary problem people in the AI camp are trying to solve is how to make models better: how to improve the quality of model output, how to reduce error rates, how to increase model explainability, and so on. It’s understandable that censorship-resistance and neutrality aren’t top of mind when the models themselves still have plenty of room for improvement, but one can already observe entities like the US government preemptively taking steps toward increasing their control.

This is also why the integration of Web3.0 into AI is a longer-term vision; censorship of AI is very much a function of the capabilities of those models and what one can do with them. Currently many people might not believe it makes sense to put AI on-chain (as decentralization introduces computational overhead), but as AI becomes better and more powerful, it’s quite plausible that centralized entities will step in to restrict access to, regulate, or monetize the technology.

As a result, Vanna is actively building a network for AI models that directly front-runs this: in the long run, it will be critical for AI to be accessible through decentralized infrastructure that democratizes access to this intelligence and prevents centralization of influence, introduction of biases, or outright censorship.

Question: That was a lot of speculation about a dystopian future where AI is censored and regulated. What can Web3.0 do for AI today?

Answer: One thing Web3.0 has empirically proven useful for is creating economic incentive structures through the distribution and consumption of cryptocurrency tokens. Just as tokens are used as computational gas on Ethereum, they can also be used to align incentives and stimulate supply-side contributions to the token issuer’s mission. In the status quo there is little to no incentive to create open-source models, since closed-source models are the only way to monetize R&D. Cryptoeconomic incentive structures, however, open up multiple avenues for researchers to monetize licensed open-source models, and these can play a vital part in accelerating open-source AI development. A few token monetization models include:

- Bounty-hunting systems, where researchers receive token compensation for building open-source models that achieve specific purposes.

- Pay-per-inference consumption (similar to how OpenAI charges for API usage).

- A token-equity model, where ownership of the model itself is decentralized.

These are all exciting catalysts that Web3.0 can bring for open-source AI to blossom and rapidly accelerate, and are top-of-mind development efforts at Vanna as well.
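To make the pay-per-inference and token-equity modalities more concrete, here is a minimal Python sketch of how each inference fee could be split pro-rata among the holders of a model’s ownership tokens. Everything in it (the `ModelRegistry` and `ModelShares` classes, the flat per-call fee, the protocol cut in basis points) is a hypothetical illustration, not Vanna’s design. Amounts are integers in the token’s smallest unit, the way on-chain contracts typically avoid floating point.

```python
from dataclasses import dataclass, field


@dataclass
class ModelShares:
    """Hypothetical token-equity ledger for a single model."""
    shares: dict[str, int]                                  # holder -> ownership units
    balances: dict[str, int] = field(default_factory=dict)  # holder -> accrued fees


class ModelRegistry:
    """Toy pay-per-inference accounting; not a real protocol API."""

    def __init__(self, fee_per_call: int, protocol_cut_bps: int) -> None:
        self.fee_per_call = fee_per_call          # fee in the token's smallest unit
        self.protocol_cut_bps = protocol_cut_bps  # protocol share in basis points
        self.protocol_balance = 0
        self.models: dict[str, ModelShares] = {}

    def register(self, model_id: str, shares: dict[str, int]) -> None:
        if not shares or sum(shares.values()) <= 0:
            raise ValueError("a model needs at least one positive shareholder")
        self.models[model_id] = ModelShares(shares=dict(shares))

    def pay_for_inference(self, model_id: str) -> None:
        """Charge one inference and split the fee among the model's owners."""
        model = self.models[model_id]
        protocol_fee = self.fee_per_call * self.protocol_cut_bps // 10_000
        remaining = self.fee_per_call - protocol_fee
        total_shares = sum(model.shares.values())
        distributed = 0
        # Pro-rata split with integer math, as an on-chain contract would do.
        for holder, units in model.shares.items():
            amount = remaining * units // total_shares
            model.balances[holder] = model.balances.get(holder, 0) + amount
            distributed += amount
        # Rounding dust goes to the protocol, so no tokens are created or lost.
        self.protocol_balance += protocol_fee + (remaining - distributed)


# Example: a 1,000-unit fee per call, 5% protocol cut, 60/40 ownership split.
registry = ModelRegistry(fee_per_call=1_000, protocol_cut_bps=500)
registry.register("price-model", {"alice": 60, "bob": 40})
for _ in range(3):
    registry.pay_for_inference("price-model")
print(registry.models["price-model"].balances)  # {'alice': 1710, 'bob': 1140}
print(registry.protocol_balance)                # 150
```

A bounty system would reuse the same ledger idea, paying out once when a model meets a target instead of on every call.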

On-Chain AI (AI -> Web3.0)

Question: We covered what Web3.0 can do for AI, now what can AI do for Web3.0?

Answer: Integrating AI into existing Web3.0 applications and technologies is a very real use-case that can be developed today, which is why at Vanna we think of it as a short-term vision that we are working on realizing. Examples include autonomous algo-trading agents, risk models in lending protocols, dynamic spread quoting in AMMs, NFTs with dynamic art, and GameFi that leverages stateful LLMs. Because generative AI tools are so prominent, people often over-index on them when they hear the term “AI”. It’s critical to remember that classical machine learning can also be very powerful in Web3.0, especially in DeFi, as the use-cases above illustrate. Using sophisticated modeling techniques in trading and finance is not unprecedented either: the quant finance industry revolves around leveraging models for asset management and trading. One impactful use-case the Vanna team is actively tackling is research into leveraging Vanna’s infrastructure to apply ML models for computing dynamic fees in Uniswap V4, which could ultimately reduce impermanent loss for AMM liquidity providers.
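As a rough illustration of the dynamic-fee idea, the Python sketch below maps recent price volatility to a swap fee in basis points. A simple realized-volatility estimate stands in for the ML model; the floor, sensitivity, and cap are hypothetical parameters, and how such a fee would actually be delivered to a Uniswap V4 pool is out of scope here.

```python
import math


def realized_volatility(prices: list[float]) -> float:
    """Sample standard deviation of log returns over the window (not annualized)."""
    if len(prices) < 3:
        raise ValueError("need at least 3 prices to estimate volatility")
    returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    mean = sum(returns) / len(returns)
    variance = sum((r - mean) ** 2 for r in returns) / (len(returns) - 1)
    return math.sqrt(variance)


def dynamic_fee_bps(
    prices: list[float],
    base_fee_bps: float = 5.0,     # hypothetical floor for calm markets
    sensitivity: float = 2_000.0,  # hypothetical extra bps per unit of volatility
    max_fee_bps: float = 100.0,    # hypothetical cap (1%)
) -> int:
    """Raise the swap fee when recent prices are volatile, so LPs are paid
    more when they are most exposed. A trained ML model could replace the
    realized_volatility estimator as the risk signal."""
    vol = realized_volatility(prices)
    fee = base_fee_bps + sensitivity * vol
    # Clamp between the floor and the cap.
    return round(min(max(fee, base_fee_bps), max_fee_bps))


calm = [100.0, 100.01, 100.02, 100.01, 100.02]
turbulent = [100.0, 110.0, 90.0, 115.0, 85.0]
print(dynamic_fee_bps(calm))       # 5
print(dynamic_fee_bps(turbulent))  # 100 (hits the cap)
```

The clamp matters in practice: without a cap, a single noisy window could price out all swaps, and without a floor, LPs would earn nothing in quiet markets.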

Question: If AI -> Web3.0 is really that powerful, why aren’t there more AI-empowered dApps?

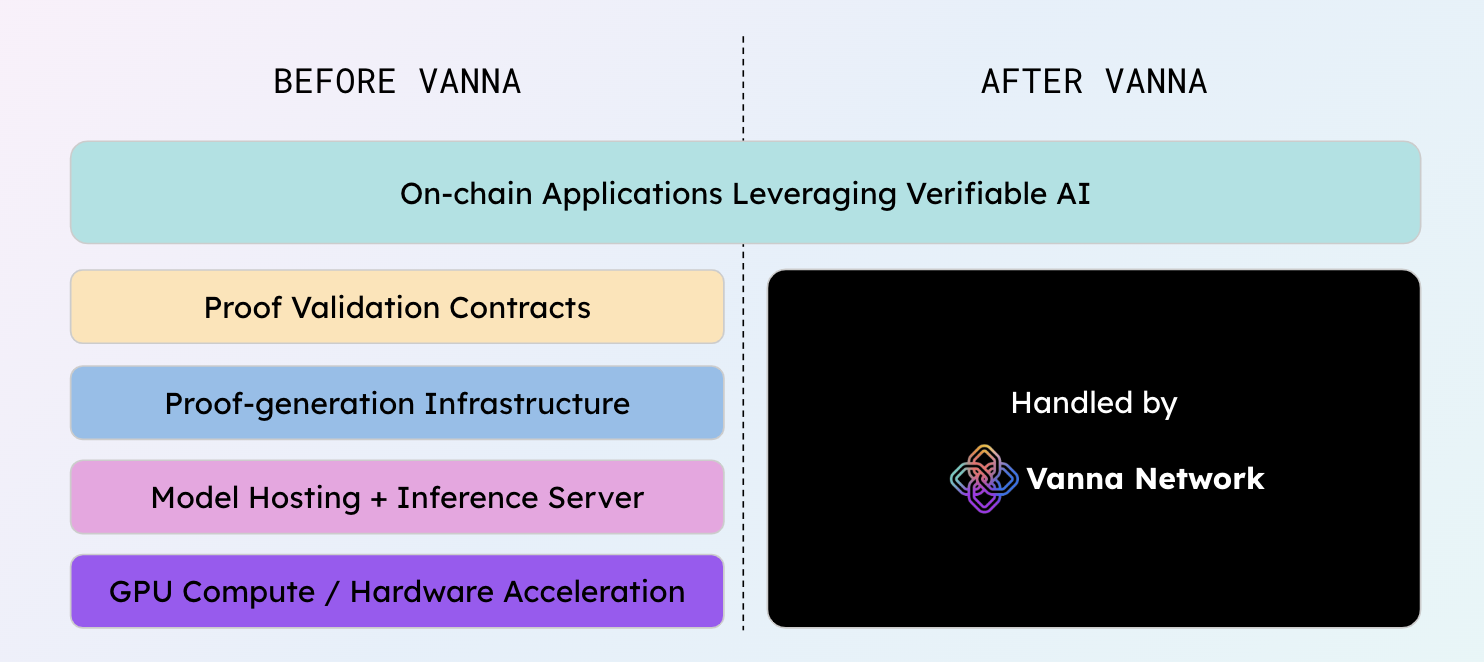

Answer: We’re already beginning to see dApps slowly leverage AI and ML, for example AI-driven yield farming or AI-driven crypto price prediction. However, developing AI applications in Web3.0 is extremely challenging. Architecting production-ready AI/ML systems that serve inference requests in a scalable fashion is already a non-trivial effort, and on top of that developers must also worry about securing the inference. Trustlessness and security are a must for everything on-chain to prevent adversarial attacks (i.e., the inference must be shown to have executed correctly, or else attackers could submit fake results), and trustless compute for AI/ML via cryptographic mechanisms is both very new and very difficult to develop. To build a Web3.0 application leveraging AI, developers must secure GPU compute, host models on an inference server, build a proof-generation system, use hardware acceleration for both inference and proof generation, and deploy on-chain contracts to validate the proofs.

At Vanna we’ve recognized this as one of the major obstacles to rapid acceleration in this space. As a result, we are developing infrastructure that makes it super easy for Web3.0 developers to access AI inference directly on-chain while choosing from a variety of cryptographic security mechanisms tailored to their use-cases. Choose a model from the decentralized filestore, pass it into a simple function call, and you’re able to run secured inference!
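To see why verification matters, consider the most naive scheme: the provider publishes a commitment (a hash binding the model, the input, and the claimed output), and a verifier re-runs the model to check it. The Python sketch below illustrates only that baseline; the toy linear model and all function names are hypothetical. Production systems use cryptographic or economic mechanisms (for example zero-knowledge proofs or optimistic fraud proofs) precisely so that verifiers do not have to redo every computation.

```python
import hashlib
import json


def toy_model(weights: list[float], x: list[float]) -> float:
    """Deterministic stand-in for a real model: a single dot product."""
    return sum(w * xi for w, xi in zip(weights, x))


def digest(obj: object) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, no whitespace)."""
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(data).hexdigest()


def commit(weights: list[float], x: list[float], y: float) -> str:
    """Bind the model (by hash), the input, and the claimed output together."""
    return digest({"model": digest(weights), "input": x, "output": y})


def prove(weights: list[float], x: list[float]) -> tuple[float, str]:
    """Provider side: run inference and publish (output, commitment)."""
    y = toy_model(weights, x)
    return y, commit(weights, x, y)


def verify(weights: list[float], x: list[float], claimed_y: float, commitment: str) -> bool:
    """Verifier side: re-execute the model and check both the output and the
    commitment. Re-execution is the naive baseline that proofs aim to avoid."""
    return toy_model(weights, x) == claimed_y and commit(weights, x, claimed_y) == commitment


weights = [0.5, -1.0, 2.0]
x = [1.0, 2.0, 3.0]
y, c = prove(weights, x)
print(y, verify(weights, x, y, c))  # 4.5 True

# A provider that fakes the result cannot produce a passing claim.
fake_y = 10.0
print(verify(weights, x, fake_y, commit(weights, x, fake_y)))  # False
```

Exact equality works here only because re-execution is deterministic on the same machine; proof-based systems typically quantize models to fixed-point arithmetic for the same reason.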

Question: What do we need to do to accelerate and realize on-chain AI?

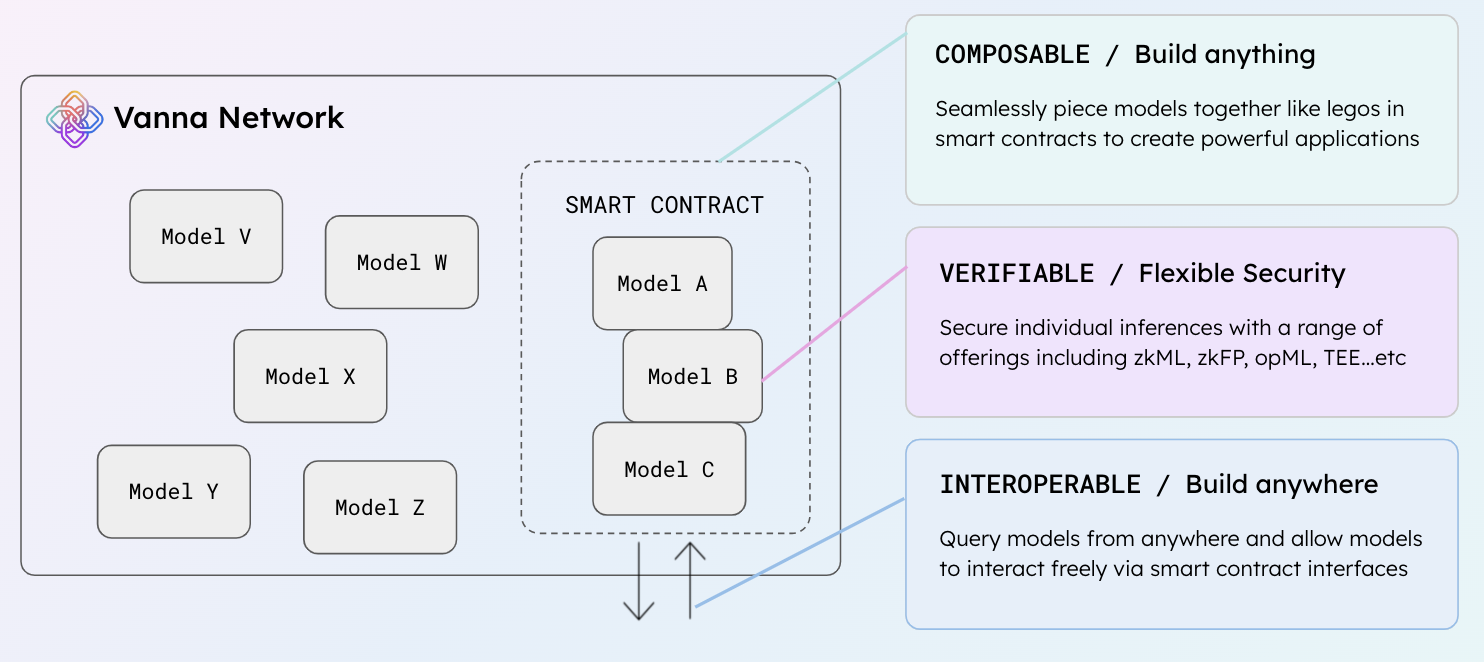

Answer: Beyond providing an end-to-end solution available via a simple function call, we believe there are three principles for building infrastructure that will reduce these barriers, realize the full potential of the Web3.0 x AI vision, and let this technological intersection blossom:

- Composability: Developers can piece together models like AI legos easily in smart contracts to create powerful use-cases and build whatever they want.

- Interoperability: Developers can make interchain queries to models from any chain and gain access to whatever data they need for inference.

- Verifiability: Developers can customize the security of their inference in all applications ranging from high-leverage use-cases to whimsical for-fun use-cases.

These are the design themes the Vanna Network is incorporating into its infrastructure to tailor AI to Web3.0 use-cases.
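To make composability and per-call verifiability concrete, here is a hypothetical Python sketch in which a pipeline chains two models, feeding one model’s output into the next, and each step declares the security level it runs under. The `SecurityLevel` options, the `ModelCall` structure, and the toy models are illustrative assumptions, not the Vanna Network’s interface.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class SecurityLevel(IntEnum):
    """Hypothetical verification options, ordered from weakest to strongest."""
    NONE = 0        # trust the provider: fine for whimsical, for-fun use-cases
    OPTIMISTIC = 1  # accept results unless someone challenges them
    ZK_PROOF = 2    # require a validity proof before the result is used


@dataclass
class ModelCall:
    """One 'lego' in a pipeline: a model plus the security it runs under."""
    name: str
    fn: Callable[[list[float]], list[float]]
    security: SecurityLevel


def run_pipeline(steps: list[ModelCall], x: list[float],
                 min_security: SecurityLevel) -> list[float]:
    """Compose models by feeding each output into the next step, refusing
    any step whose security is weaker than the application requires."""
    for step in steps:
        if step.security < min_security:
            raise PermissionError(
                f"{step.name} runs under {step.security.name}, "
                f"but {min_security.name} is required")
        x = step.fn(x)
    return x


def normalize(v: list[float]) -> list[float]:
    """Min-max scale features into [0, 1] (constant input maps to zeros)."""
    lo, hi = min(v), max(v)
    return [0.0 if hi == lo else (xi - lo) / (hi - lo) for xi in v]


def risk_score(v: list[float]) -> list[float]:
    """Toy 'risk model': the mean of the normalized features."""
    return [sum(v) / len(v)]


steps = [
    ModelCall("normalize", normalize, SecurityLevel.ZK_PROOF),
    ModelCall("risk_score", risk_score, SecurityLevel.OPTIMISTIC),
]
print(run_pipeline(steps, [2.0, 4.0, 6.0], SecurityLevel.OPTIMISTIC))  # [0.5]

try:
    # A high-leverage lending protocol might demand proofs for every step.
    run_pipeline(steps, [2.0, 4.0, 6.0], SecurityLevel.ZK_PROOF)
except PermissionError as err:
    print(err)  # risk_score runs under OPTIMISTIC, but ZK_PROOF is required
```

Making the required security a per-application parameter, rather than a network-wide constant, is what lets the same models serve both high-leverage and for-fun use-cases.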

Vanna: Building the Future of Web3.0 x AI

At Vanna Labs, we’re building a blockchain network that is a composable execution layer for on-chain AI inference. The network features access to scalable and secure model inference, allowing developers to seamlessly leverage AI models in composable smart contracts to create powerful applications.

We have a multi-pronged vision for Web3.0 x AI integration. As we have discussed, there are multiple verticals of integration, and here is how we approach each of them:

(1) AI → Web3.0 (Short-Term): Revolutionize Web3.0 protocols and dApps with smarter features through seamless and secure access to AI and ML.

(2) Accelerating Open-Source AI (Medium-Term): Accelerate open-source AI by creating a platform that brings Web3.0 incentive structures to AI researchers, such as tokenizing models, charging gas for inference, and decentralizing model ownership.

(3) Web3.0 → AI (Long-Term): Integrate with Web2.0 AI to become the universal inference provider for verifiable AI that is scalable, secure, and censorship-resistant.

In conclusion, we hope this article gave you a useful framework for thinking about the impact of the intersection of Web3.0 and AI, and about the two primary subclasses of problems being addressed in the realm of inference.

Follow our journey here!

Twitter: https://twitter.com/0xVannaLabs

LinkedIn: https://www.linkedin.com/company/vanna-labs

Contact: team@vannalabs.ai