DANNY is a decentralized vector database for building vector search applications, built by FirstBatch and powered by Warp Contracts. It offers a robust set of tools for developing applications such as recommendation systems and semantic search. In this article, we cover the foundations of DANNY and how to use it effectively in your projects.

Foundations

Why is Vector Search important?

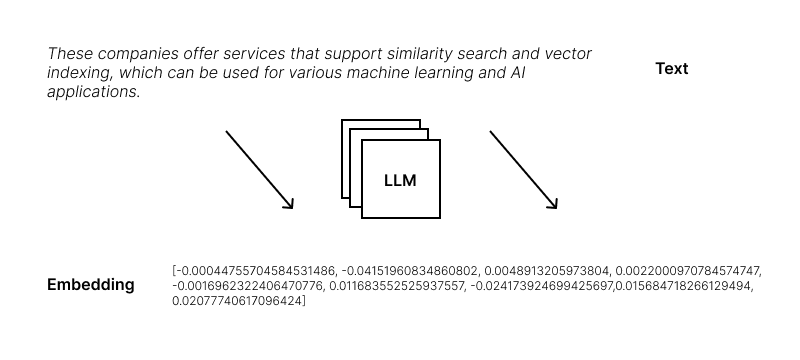

Embeddings provide an efficient and compact representation of semantic, complex data. By transforming high-dimensional data, such as text, images, or audio, into lower-dimensional vectors, embeddings enable faster and more accurate processing, similarity search, and pattern recognition. This transformation allows machine learning models to understand and capture the underlying structure, relationships, and semantic information within the data.

OpenAI recently released its newest model, GPT-4, a general-purpose LLM with vast capabilities. Besides abilities like chat and completion, OpenAI provides an API that produces embeddings from any given text with the text-embedding-ada-002 model. Hugging Face hosts many Sentence Transformers models with functionality similar to text-embedding-ada-002.

These models convert input text into a single vector, with the promise that the output vector is an n-dimensional representation of the text's semantics.

Pre-trained models like VGG, ConvNet, and Inception provide the same functionality for images, outputting n-dimensional vectors that represent intrinsic features of the image. Embeddings are not limited to images or text, however: one can generate embeddings for arbitrary data, including video, audio, tabular data, EEG, etc.

What about vector search?

Representing images, videos, books, comments, and articles as vectors that encode their context enables operations such as cosine similarity or Euclidean distance between them. This means software can search through a dataset of vectors to find patterns and similarities. Finding the most similar (closest) vector under a chosen metric (cosine similarity, Euclidean distance, etc.) is at the heart of building AI applications.

At large scale, however, computing metrics such as cosine similarity or Euclidean distance against every vector in the dataset becomes costly or infeasible.
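To make the two metrics and the cost of exhaustive search concrete, here is a minimal sketch in plain JavaScript. The function names and the toy data are illustrative assumptions, not part of DANNY.

```javascript
// Dot product of two equal-length numeric arrays.
function dot(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += a[i] * b[i];
  return sum;
}

// Cosine similarity: 1 for the same direction, 0 for orthogonal vectors.
function cosineSimilarity(a, b) {
  return dot(a, b) / (Math.sqrt(dot(a, a)) * Math.sqrt(dot(b, b)));
}

// Euclidean distance: straight-line distance between two points.
function euclideanDistance(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// Brute-force k-nearest neighbours: scores every stored vector,
// so each query costs O(n * d) for n vectors of dimension d.
function bruteForceKnn(query, items, k) {
  return items
    .map((item) => ({ id: item.id, score: cosineSimilarity(query, item.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Toy dataset (made up for illustration).
const items = [
  { id: "a", vector: [1, 0] },
  { id: "b", vector: [0, 1] },
  { id: "c", vector: [1, 1] },
];
const nearest = bruteForceKnn([2, 0.1], items, 2);
console.log(nearest.map((r) => r.id)); // ids ranked by similarity: "a", then "c"
```

Because every query touches every vector, this approach stops scaling once the dataset grows to millions of high-dimensional embeddings, which is the gap that indexing fills.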

What is a Vector Database?

Vector databases use the power of indexing to make large-scale similarity search feasible for applications. A vector database is a fully managed, no-frills solution for storing, indexing, and searching across a massive dataset of unstructured data that leverages the power of embeddings from machine learning models (source: Zilliz). Projects like Milvus, Qdrant, Pinecone, and Weaviate provide substantial functionality on top of the vector search mechanism itself. Their users get CRUD operations, high scalability, and the ability to append to an existing index.

Why make it decentralized?

Decentralized databases operating on smart contracts offer enhanced security, improved data integrity, increased trust and transparency, and greater control and ownership for users. They also reduce reliance on intermediaries.

Because DANNY runs on Warp, its computations are transparent and verifiable, which makes it a significant step toward transparent and secure AI applications.

What can you build with DANNY?

DANNY offers powerful tools for developing various applications, including recommendation systems and semantic search functionalities. By utilizing Warp Contracts, apps can deliver semantic search and/or personalized suggestions for products, content, or venues tailored to individual preferences to enhance the user experience.

DANNY also has the potential to remove duplicates from large datasets and to detect anomalies, creating new possibilities for a DAO's action space, such as fighting DeFi fraud and Sybil identities. This means you can build transparent and decentralized algorithms such as:

- Semantic search
- Recommendation
- Deduplication
- Fraud detection

...and many more, with DANNY!

Using DANNY

To start using DANNY, first create your dataset, then create an index from that dataset, and finally deploy your contract. Here is a walkthrough:

Creating Your Dataset

At its core, a dataset is metadata plus embeddings. There are many models that create embeddings for various kinds of input data, including the ones mentioned above. Here is a good read on embeddings.

To start building DANNY models, you can use static CSV files with "##" as the delimiter. Each row contains four fields: (id, metadata, timestamp, vector). Here is a sample row with a post id, a string of metadata, an integer Unix timestamp, and a 20-dimensional vector.

0x013ed6-0x0f##Lenster##1674667708##-0.00044755704584531486, -0.04151960834860802, 0.0048913205973804, 0.0022000970784574747, -0.0016962322406470776, 0.011683552525937557, -0.024173924699425697, 0.03176078572869301, -0.005960546433925629, 0.048019785434007645, 0.04324935004115105, -0.001319467555731535, 0.018381614238023758, -0.024039890617132187, 0.014896255917847157, 0.02003282494843006, -0.023054126650094986, 0.00384587817825377, 0.015684718266129494, 0.02077740617096424
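As a quick way to sanity-check rows before indexing, the sketch below parses one "##"-delimited line into its four fields. The `parseRow` helper is a hypothetical name, not part of DANNY, and it assumes the metadata field never contains "##" itself. The example row uses a shortened three-value vector for readability.

```javascript
// Parse one "##"-delimited row into { id, metadata, timestamp, vector }.
// Hypothetical helper for illustration; DANNY's own loader may differ.
function parseRow(line) {
  const parts = line.split("##");
  if (parts.length !== 4) {
    throw new Error(`expected 4 fields, got ${parts.length}`);
  }
  const [id, metadata, timestamp, vectorText] = parts;

  // The vector is a comma-separated list of floats.
  const vector = vectorText.split(",").map((x) => {
    const t = x.trim();
    return t === "" ? NaN : Number(t);
  });
  if (vector.some(Number.isNaN)) {
    throw new Error("vector contains a non-numeric value");
  }
  return { id, metadata, timestamp: Number(timestamp), vector };
}

// Shortened example row (3 dimensions instead of 20).
const row = parseRow("0x013ed6-0x0f##Lenster##1674667708##-0.5, 0.25, 1");
console.log(row.id, row.metadata, row.timestamp, row.vector.length);
```

Checking that every row yields a vector of the same length before training can catch malformed lines early.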

Creating Index

Index creation builds a DANNY model from a dataset.

DANNY is built on Warp Contracts' powerful WASM feature, which eases the process of writing smart contracts that handle intense computational tasks with complex data structures. For this reason, both the indexing code and the contract core are written in Rust. Creating an index is quite straightforward.

Running the following command inside the DANNY/indexing repo creates one or more JSON files that are ready to be deployed inside your contract:

cargo run --package indexing --bin train -- --dimensions 1024 --num-trees 5 --path lens_dataset

Sharding & Deployment

DANNY automatically splits the model into shards if the data size is above a certain threshold. These "shards" contain fragments of a larger DANNY model and are uploaded to the Arweave network. However, one may instead upload shards to Google Cloud or WeTransfer to create a custom pipeline.

Models are fetched and written into KV state by default on contract deployment. Thanks to the flexibility of KV storage, there is in theory no size limit for DANNY models. We were able to run ~10 GB models with practical query times of about 2 seconds.
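The idea of size-based sharding can be sketched as a greedy packing of model entries into chunks that stay under a byte budget. This is only an illustration: the `shardEntries` name, the entry shape, and the 30-byte budget are assumptions, and DANNY's actual threshold and shard format are not described here.

```javascript
// Greedily pack [key, value] entries into shards whose serialized
// size stays under maxBytes. Hypothetical sketch, not DANNY's code.
function shardEntries(entries, maxBytes) {
  const encoder = new TextEncoder();
  const shards = [];
  let current = [];
  let currentBytes = 0;

  for (const entry of entries) {
    const size = encoder.encode(JSON.stringify(entry)).length;
    // Start a new shard when adding this entry would exceed the budget.
    if (current.length > 0 && currentBytes + size > maxBytes) {
      shards.push(current);
      current = [];
      currentBytes = 0;
    }
    current.push(entry);
    currentBytes += size;
  }
  if (current.length > 0) shards.push(current);
  return shards;
}

// Toy entries: each serializes to 13 bytes, so two fit in a 30-byte shard.
const entries = [
  ["n0", "aaaa"],
  ["n1", "bbbb"],
  ["n2", "cccc"],
];
const shards = shardEntries(entries, 30);
console.log(shards.length); // 2
```

Each resulting shard could then be serialized to its own JSON file and uploaded separately.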

A DANNY contract consists of three main components:

- Contract
- Key-Value State
- WASM (Rust Code)

All ANN capabilities are implemented in the DANNY WASM module, which shares the KV state with the contract itself. Users query DANNY by interacting with the contract through ViewState, and these queries incur no gas fees. The KV state holds the DANNY model, allowing fast retrieval of nodes. A single query calls get() about 100 times for medium-sized (~5-10 GB) models, yielding response times of about 2.5 seconds with cached state.
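One plausible way to picture the get() count above is a tree walk in which every visited node is fetched from the KV state by key. The sketch below uses a `Map` as a stand-in for the KV state; the key names, the node layout, and the `kvGet` and `findLeaf` helpers are all hypothetical and do not reflect DANNY's actual storage format.

```javascript
// A Map stands in for the contract's key-value state.
// Internal nodes hold a splitting hyperplane; leaves hold item ids.
const kv = new Map([
  ["root", { normal: [1, 0], offset: 0, left: "n1", right: "n2" }],
  ["n1", { ids: ["a", "b"] }],
  ["n2", { normal: [0, 1], offset: 0.5, left: "n3", right: "n4" }],
  ["n3", { ids: ["c"] }],
  ["n4", { ids: ["d", "e"] }],
]);

// Count lookups so the access pattern is visible.
let getCalls = 0;
function kvGet(key) {
  getCalls += 1;
  return kv.get(key);
}

// Walk from the root to a leaf: one get() per visited node.
function findLeaf(query) {
  let node = kvGet("root");
  while (!node.ids) {
    // Signed side of the query relative to the node's hyperplane.
    let side = -node.offset;
    for (let i = 0; i < query.length; i++) side += node.normal[i] * query[i];
    node = kvGet(side < 0 ? node.left : node.right);
  }
  return node.ids;
}

const leafIds = findLeaf([0.3, 0.9]);
console.log(leafIds, getCalls); // [ 'd', 'e' ] 3
```

With several trees and deeper trees, the number of lookups grows roughly with the number of trees times the tree depth, which is one way a query could reach on the order of 100 get() calls.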

Once the model has been loaded to the contract, Similarity Search can be performed by querying the contract.

Querying

DANNY's inference capabilities allow for efficient and transparent Similarity Search on the Arweave network. These capabilities enable developers to run queries for vector search, returning the most relevant results based on the input parameters. With DANNY, you can build powerful applications that require semantic search, personalized recommendations, and more.

To query the deployed model, developers interact with the smart contract through ViewState interactions that contain the search parameters. The smart contract then processes the query, searches the model, and returns the results in a transparent and verifiable manner. The results can therefore be trusted, because they are produced by a decentralized system that is designed to resist tampering.

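import { knn } from 'danny'

// Initialize the DANNY contract.
// `InferenceContract`, `contractTxId`, `warp`, and `wallet` are assumed to be defined elsewhere in your project.
const inferenceContract = new InferenceContract(contractTxId, warp).connect(wallet);

// Get the 100 closest vectors to the query vector `vec`
await knn(inferenceContract, vec, 100, 550)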

In summary, DANNY's querying capabilities provide an efficient and secure solution for performing similarity search tasks in decentralized applications. With its easy-to-use interface and powerful inference features, DANNY is an ideal choice for developers looking to create next-generation applications powered by vector search.

Current State

Developers can use DANNY to create vector databases (train) from their custom datasets and run vector search queries through Warp contracts. Currently, DANNY implements the ANNOY search algorithm for vector search. DANNY does not yet fully support CRUD operations as a database: to add new vectors, developers must re-train on the entire corpus.
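For readers unfamiliar with Annoy-style indexes, here is a simplified sketch of the idea: build several trees by recursively splitting the data with random hyperplanes, then answer a query by collecting the leaf it falls into in each tree and ranking those candidates exactly. This is not DANNY's Rust implementation; all names, the leaf size, and the toy data are assumptions, and the sketch omits the priority-queue search that Annoy uses to explore more than one leaf per tree.

```javascript
// Small deterministic PRNG (LCG) so the sketch is reproducible.
function makeRng(seed) {
  let state = seed >>> 0;
  return () => {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296;
  };
}

// Signed side of v relative to the hyperplane halfway between p and q.
function side(v, p, q) {
  let s = 0;
  for (let i = 0; i < v.length; i++) {
    s += (v[i] - (p[i] + q[i]) / 2) * (p[i] - q[i]);
  }
  return s;
}

// Recursively split items with random hyperplanes until leaves are small.
function buildTree(items, leafSize, rng) {
  if (items.length <= leafSize) return { leaf: items.map((it) => it.id) };
  const p = items[Math.floor(rng() * items.length)].vector;
  const q = items[Math.floor(rng() * items.length)].vector;
  const left = items.filter((it) => side(it.vector, p, q) < 0);
  const right = items.filter((it) => side(it.vector, p, q) >= 0);
  // Degenerate split (for example p equals q): keep everything in one leaf.
  if (left.length === 0 || right.length === 0) {
    return { leaf: items.map((it) => it.id) };
  }
  return {
    p,
    q,
    left: buildTree(left, leafSize, rng),
    right: buildTree(right, leafSize, rng),
  };
}

// Descend one tree to the leaf on the query's side of each hyperplane.
function searchTree(tree, query) {
  let node = tree;
  while (!node.leaf) {
    node = side(query, node.p, node.q) < 0 ? node.left : node.right;
  }
  return node.leaf;
}

// Union the candidates from all trees, then rank them by exact distance.
function annSearch(trees, itemsById, query, k) {
  const candidates = new Set();
  for (const tree of trees) {
    for (const id of searchTree(tree, query)) candidates.add(id);
  }
  const dist = (a, b) => Math.sqrt(a.reduce((s, x, i) => s + (x - b[i]) ** 2, 0));
  return [...candidates]
    .map((id) => ({ id, distance: dist(itemsById.get(id), query) }))
    .sort((x, y) => x.distance - y.distance)
    .slice(0, k);
}

// Toy dataset: two clusters of 2-D points (made up for illustration).
const items = [
  { id: "p0", vector: [0, 0] }, { id: "p1", vector: [1, 0] },
  { id: "p2", vector: [0, 1] }, { id: "p3", vector: [1, 1] },
  { id: "p4", vector: [5, 5] }, { id: "p5", vector: [6, 5] },
  { id: "p6", vector: [5, 6] }, { id: "p7", vector: [6, 6] },
];
const rng = makeRng(42);
const trees = Array.from({ length: 5 }, () => buildTree(items, 2, rng));
const itemsById = new Map(items.map((it) => [it.id, it.vector]));
const results = annSearch(trees, itemsById, [6, 5], 3);
console.log(results[0]); // the exact match p5 at distance 0
```

Because the trees are built once over a fixed set of points, adding a vector means rebuilding them, which matches the re-training requirement described above. More trees generally improve recall at the cost of a larger index.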

The library contains source code for contracts, workers, and utilities that allow training, deployment, and inference of DANNY models.

Future Work

DANNY is open to public contributions. At FirstBatch, we will continue developing DANNY into a full-featured vector database with multiple indexing algorithms.

- New indexing methods
  - Adding support for Hierarchical Navigable Small Worlds (HNSW)
  - Adding support for Locality Sensitive Hashing (LSH)
- Distributed training: Creating DANNY models should also be possible in a distributed manner for larger datasets, moving transparency a step further.
- DANNY Node Release: DANNY Node will provide gRPC-wrapped decentralized scalability tools for DANNY, making it a scalable service with competitive features.
- CRUD operations: Instead of requiring retraining, models should allow vectors to be appended or removed.

All code is published as open source on GitHub. Feel free to contribute!

You are welcome to join our new Discord channel for discussions and questions!

Here is Gravitates, a personalized reader app that uses DANNY to deliver personalized content from articles, news, blogs, and crypto socials!