“Solana pushes Layer1 scaling to the extreme, sacrificing capability to reach the TPS ceiling of non-sharded chains. However, its repeated outages seem to foreshadow the end of trading capability/security for efficiency.”

Author: Web3er Liu, CatcherVC

Technical Advisor: Liu Yang, author of Embedded System Security

Abstract

- Solana scaling is mainly based on three major aspects: efficient use of network bandwidth, reducing the frequency of inter-node communication and speeding up node computing. These measures directly shorten the time for block generation and consensus communication, but also reduce system capability (security).

- Solana discloses the list of Leaders in advance, providing a single trusted data source that reduces consensus communication overhead. But this may introduce security risks such as bribery and targeted attacks.

- Solana treats consensus communications (voting messages) as transactions: over 70% of its reported TPS consists of consensus messages, and only around 500-1,000 TPS comes from user transactions.

- Solana's Gulf Stream mechanism replaces the global transaction pool, which speeds up transaction processing but makes spam filtering less efficient, potentially bringing Leaders down.

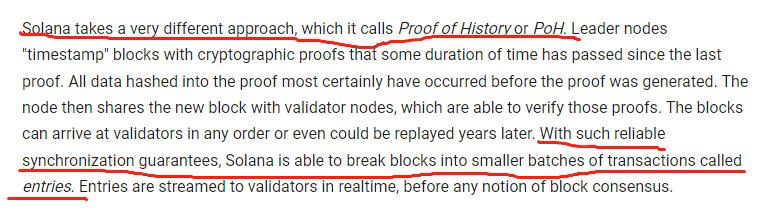

- Solana's Leader nodes publish transaction sequences rather than real blocks. Combined with the Turbine propagation protocol, transaction sequences can be sliced and distributed to different nodes, making data synchronization extremely fast.

- POH (Proof Of History) is essentially a timing and counting method that stamps transaction events with serial numbers to generate transaction sequences. Through the transaction sequence, the Leader effectively publishes a clock that is consistent across the network, so ledger progress and time stay consistent across nodes within a very short time window.

- On Solana, 132 nodes hold 67% of the stake, and 25 of them hold 33%, forming what is essentially an “oligarchy” or “Roman Senate”. Collusion among these 25 nodes would be enough to throw the network into chaos.

- Solana demands high-end node hardware, trading heavy equipment costs for vertical scaling. The entities running Solana nodes are mostly whales, institutions, or enterprises, which is not in line with the vision of decentralization.

- In summary, Solana pushes Layer1 scaling to the extreme with advanced node equipment and a revolutionary consensus mechanism and data transmission protocol, essentially reaching the TPS ceiling attainable by non-sharded chains. However, its repeated outages have already foreshadowed the end of sacrificing capability/security for efficiency.

Introduction

2021 was a turning point for blockchain and crypto. As concepts like Web3 became more and more popular, the public chain world saw its strongest traffic growth ever. Against this backdrop, Ethereum became the titan of the Web3 world thanks to its full decentralization and security, but efficiency became its “Achilles heel”. At only about 10 transactions per second, Ethereum pales in comparison with VISA, whose TPS easily exceeds 1,000, and falls far short of its grand vision of a “world-class decentralized application platform”.

In response, Solana, Avalanche, Fantom, Near, and other new public chains built around scaling briefly became the main players in Web3, attracting a huge flood of capital. Take Solana, the leading public chain dubbed the “Ethereum killer”: its market value soared 170-fold in 2021, and its influence at one point even surpassed older public chains like Polkadot and Cardano, making it a credible competitor to Ethereum.

But the boom did not last long. On September 14, 2021, Solana suffered its first outage, which lasted 17 hours and was caused by performance failures, and the price of SOL quickly dropped 15%. In January 2022, Solana went down again for 30 hours, which triggered extensive discussion. Later, in May, Solana went down twice, and once more in early June. According to Solana's official statement, its mainnet has experienced at least 8 episodes of performance degradation or downtime.

Amid these problems, critics led by Ethereum supporters took turns questioning Solana, some even dubbing it “SQLana” (SQL is a language for managing centralized databases), and a flood of comments and analyses followed. To this day, the debate about Solana's real usability never seems to stop, attracting countless curious observers. Out of interest in and concern for mainstream public chains, in this article CatcherVC gives a concise explanation of Solana's scaling mechanism and explores some of the reasons for its downtime.

Solana System Architecture, Consensus Mechanism, and Block Transfer Process

The efficiency of a public chain mainly refers to its ability to process transactions, that is, its TPS (transactions processed per second). TPS is influenced by block generation speed and block capacity, and it in turn affects transaction fees and user activity. From EOS, popular in 2018, to Optimism, which recently launched its token, almost no scaling solution can get around the most crucial element: “accelerating block generation”.

To generate blocks quicker, it is often necessary to “do something” in the block generation process, and Solana is no exception. Solana scaling is mainly based on three major aspects: efficient use of network bandwidth, reducing the frequency of inter-node communication and speeding up node computing. Solana's founder, Anatoly Yakovenko, and his teammates have carefully crafted every detail to improve efficiency as much as possible at the cost of system capability (security). They basically reached the real TPS limit of a non-sharded public chain and finally achieved innovation “at a cost”.

Compared with other POS public chains, Solana's biggest innovation lies in its unique consensus protocol and node communication method, which build on POS and PBFT (Practical Byzantine Fault Tolerance). It introduces its novel POH (Proof of History) to advance the blockchain ledger, creating its own distinctive consensus system.

Solana's consensus protocol is presented in a similar way to Cardano's earliest Ouroboros algorithm, which contains two major time units: Epoch and Slot. Each Slot is about 0.4 to 0.8 seconds, which is equivalent to the time interval of a block. Each Epoch cycle contains 432,000 Slots (blocks), which are 2 to 4 days long.
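As a quick sanity check on these figures, the sketch below multiplies the slot count by the two ends of the stated slot-time range. The 432,000 slots and the 0.4 s and 0.8 s bounds come from the text; the function name is ours.

```python
SLOTS_PER_EPOCH = 432_000  # Slots per Epoch, as stated in the text


def epoch_days(slot_seconds: float, slots: int = SLOTS_PER_EPOCH) -> float:
    """Length of one Epoch in days for a given Slot time in seconds."""
    return slots * slot_seconds / 86_400  # 86,400 seconds per day


for slot_seconds in (0.4, 0.8):
    print(f"{slot_seconds} s Slots -> {epoch_days(slot_seconds):.1f} days per Epoch")
```

The two bounds give exactly the 2-day and 4-day Epoch lengths quoted above.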

In Solana's system architecture, the most important roles fall into two categories: Leader and Validator. Both are full nodes that stake SOL tokens. The only difference is that the Leader role passes between full nodes from Slot to Slot (a Slot being one block generation cycle), and the full nodes not currently acting as Leader serve as Validators.

At the beginning of each new Epoch, the Solana network selects Leaders based on the stake weights of each node and forms a rotating list of Leaders to decide on Leaders at different times in the future. During the entire Epoch (2-4 days), Leaders are rotated by the order specified in the list, and the Leader node is changed every 4 Slots.
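The idea of a stake-weighted, publicly computable rotation list in which each selected node leads for 4 consecutive Slots can be sketched as follows. This is a simplified illustration, not Solana's actual implementation: seeding Python's `random.Random` with the Epoch number, the function name, and the validator names and stakes are all assumptions made for the example. What matters is that any node holding the same stake table and seed derives the same list, which is exactly what makes the schedule public.

```python
import random

SLOTS_PER_LEADER = 4  # the Leader changes every 4 Slots


def leader_schedule(stakes: dict[str, int], epoch: int, num_slots: int) -> list[str]:
    """Return the Leader of each Slot in an Epoch (hypothetical simplified scheme)."""
    rng = random.Random(epoch)  # deterministic seed: every node computes the same list
    names = sorted(stakes)  # fixed ordering so the result is reproducible
    weights = [stakes[n] for n in names]
    schedule: list[str] = []
    while len(schedule) < num_slots:
        # Pick a Leader with probability proportional to its stake...
        leader = rng.choices(names, weights=weights, k=1)[0]
        # ...and give it 4 consecutive Slots.
        schedule.extend([leader] * SLOTS_PER_LEADER)
    return schedule[:num_slots]


stakes = {"validator-a": 600, "validator-b": 300, "validator-c": 100}  # hypothetical
schedule = leader_schedule(stakes, epoch=7, num_slots=16)
print(schedule)
```

Calling the function again with the same inputs returns the identical list, so anyone can know in advance who will lead each Slot.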

By disclosing the future Leaders in advance, the Solana network essentially obtains a definite and trusted source of new block data, providing great convenience to the consensus process.

A Glance at Solana Block Generation Process

To understand Solana's scaling mechanism more clearly, we can start with the logic of block generation and have a look at the general structure of Solana.

1. After a user initiates a transaction, it will be forwarded directly by the client to Leaders, or first received by ordinary nodes and then immediately forwarded to Leaders.

2. The Leader receives all pending transactions in the network and executes them while ordering the transaction instructions into transaction sequences (similar to a block). Periodically, the Leader sends the ordered transactions to the Validators.

3. Each Validator executes the transactions in the order given by the transaction sequence (block), producing the corresponding state (executing a transaction changes the node's state, for example, changing the balances of some accounts).

4. After every N transaction sequences sent, the Leader discloses its local state, and each Validator compares it with its own state and casts an affirmative or negative vote. This step is similar to the “checkpoint” in Ethereum 2.0 and other POS public chains.

5. If the Leader collects positive votes from nodes with 2/3 of the stake weights of the whole network within the specified time, the previously released transaction sequence and state can be finalized and the “checkpoint” is passed, which is equivalent to the finality of the block.

6. Generally speaking, Validators that give a positive vote and Leaders share the same transaction execution state and post-transaction state, and in this way, the data will be synchronized.

7. Leaders switch every 4 Slots, which means each Leader holds the network's “supreme power” for about 1.6 to 3.2 seconds.
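Steps 3 to 5 can be sketched in a few lines: a Validator replays the Leader's ordered transactions against its own copy of the account state, votes yes only if the resulting state digest matches the one the Leader disclosed, and the Leader counts stake-weighted yes votes against the 2/3 threshold. The toy transfer format, the JSON-plus-SHA256 state digest, and all names and stake values are assumptions for illustration, not Solana's data structures.

```python
import hashlib
import json
from fractions import Fraction


def apply_transfers(balances: dict[str, int], txs: list[tuple[str, str, int]]) -> dict[str, int]:
    """Execute transfers (sender, receiver, amount) strictly in the given order."""
    state = dict(balances)
    for sender, receiver, amount in txs:
        if state.get(sender, 0) >= amount:  # skip transfers with insufficient balance
            state[sender] -= amount
            state[receiver] = state.get(receiver, 0) + amount
    return state


def state_digest(state: dict[str, int]) -> str:
    """Hash of the account state, used to compare Leader and Validator results."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def is_finalized(votes: dict[str, bool], stakes: dict[str, int]) -> bool:
    """True if yes-votes come from at least 2/3 of the total stake."""
    total = sum(stakes.values())
    yes = sum(stakes[v] for v, ok in votes.items() if ok)
    return Fraction(yes, total) >= Fraction(2, 3)


genesis = {"alice": 10, "bob": 0}
sequence = [("alice", "bob", 4), ("bob", "carol", 3)]  # order fixed by the Leader
leader_digest = state_digest(apply_transfers(genesis, sequence))

stakes = {"v1": 50, "v2": 30, "v3": 20}  # hypothetical stake weights
votes = {}
for validator in stakes:
    local = apply_transfers(genesis, sequence)  # each Validator replays independently
    votes[validator] = state_digest(local) == leader_digest
votes["v3"] = False  # suppose one Validator disagrees or misses the deadline
print(is_finalized(votes, stakes))  # v1 + v2 hold 80% of the stake -> True
```

Replaying the same transactions in a different order can produce a different state, which is why Validators must follow the Leader's ordering exactly.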

Solana's Scaling Mechanism Explained

On the surface, Solana's block generation logic seems to be generally similar to other public chains using POS mechanism and they all include a process of generating blocks and voting on blocks. However, if we take a look at each step, we will see that Solana is very different from other public chains, and this is the root cause of its high TPS and low capability.

- The most important point: Solana discloses the Leader of each Slot in advance, which greatly reduces the workload of the consensus process. Other POS public chains lack a single, trusted block producer known in advance, so their consensus communication is far less efficient. Their communication complexity is often several orders of magnitude higher than Solana's, and this is the TPS bottleneck for most public chains.

- Take the mainstream POS consensus protocols and PBFT algorithms as examples: most of them use the same time units and role divisions as Solana, with concepts similar to Epoch, Slot, Leader, Validator, and Vote under different names and parameters. The biggest difference is that most of them prioritize security (capability) and do not disclose the list of Leaders in advance.

For example, Cardano also generates a Leader rotation list in advance but keeps it private. Each selected Leader knows only when it should produce blocks, not when other Leaders will. This makes Leader nodes unpredictable to the outside world.

Without a public Leader list, nodes “distrust each other” and “go their own way”. When a node claims to be the legitimate Leader, the others will not simply trust it and will ask it to present proof; generating, spreading, and verifying that proof wastes bandwidth and adds workload (it may even involve zero-knowledge techniques). Solana avoids this trouble by disclosing the Leader of each Slot.
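For contrast with a public schedule, here is a toy version of a private, stake-weighted slot lottery in the spirit of Ouroboros Praos. It is a simplification under stated assumptions, not Cardano's implementation. Real Praos uses a VRF, whose output others can verify with the node's public key, whereas the HMAC-SHA256 below is only a stand-in that cannot be publicly verified. The win threshold 1 - (1 - f)^α follows Praos's leader-election rule, with α the node's relative stake and f an active-slot coefficient, assumed here to be 0.05. The function name and key are hypothetical. Without the secret key, an outsider cannot compute which Slots a node will lead, which is why a node claiming to be Leader must attach a proof.

```python
import hashlib
import hmac


def is_slot_leader(secret_key: bytes, slot: int, relative_stake: float, f: float = 0.05) -> bool:
    """Private lottery: only the key holder can evaluate it (HMAC stands in for a VRF)."""
    digest = hmac.new(secret_key, slot.to_bytes(8, "big"), hashlib.sha256).digest()
    draw = int.from_bytes(digest, "big") / 2**256  # pseudo-random value in [0, 1)
    threshold = 1 - (1 - f) ** relative_stake  # more stake -> higher chance to win
    return draw < threshold


key = b"hypothetical-secret-key-of-node-a"
won = [s for s in range(1_000) if is_slot_leader(key, s, relative_stake=0.2)]
print(len(won), won[:5])  # which Slots this node leads; unknown to others in advance
```

A node with zero stake never wins, and the result for a given key and Slot is always the same, so the holder can later prove its claim (with a real VRF, anyone could check that proof).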

More importantly, in most POS consensus protocols and PBFT-type algorithms, votes on a new block (which needs affirmative votes from 2/3 of the network to be finalized) are usually sent and collected node to node through a “Gossip protocol”, a kind of viral, random diffusion. This essentially requires communication between every pair of nodes, which is more complex and time-consuming than Solana's consensus protocol.

In PBFT algorithms such as Tendermint, a single Validator has to collect individual votes from at least 2/3 of the nodes in the network. If the whole network has N nodes, each node receives at least (2/3)N votes, so the network as a whole generates at least (2/3)N² messages. Because this grows with the square of N, the time spent on consensus soars as the number of nodes increases.

In response, Solana and Avalanche change how nodes communicate when collecting votes, in order to reduce time complexity. In layman's terms, the Leader aggregates all the votes issued by Validators, packages them together (writing them into the transaction sequence), and pushes them to the network at once.

As a result, nodes no longer need to exchange votes one by one through the “gossip protocol”, and communication complexity drops to the order of N, or even log N, which greatly shortens block generation time and significantly improves TPS.
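The difference in scale can be made concrete with a rough count. The sketch below compares the all-to-all estimate from the text, where each of N nodes receives about (2/3)N votes, with a simple Leader-aggregation pattern in which each Validator sends one vote to the Leader and the Leader sends one bundle back to each node. The aggregation model and function names are our simplifying assumptions; real propagation adds relay hops on top of this.

```python
import math


def all_to_all_messages(n: int) -> int:
    """Votes received network-wide when every node collects votes from 2/3 of the nodes."""
    return n * math.ceil(2 * n / 3)


def leader_aggregated_messages(n: int) -> int:
    """Each Validator sends one vote to the Leader; the Leader sends one bundle to each."""
    return 2 * (n - 1)


for n in (100, 1_000, 2_000):
    print(n, all_to_all_messages(n), leader_aggregated_messages(n))
```

Doubling N from 1,000 to 2,000 roughly quadruples the all-to-all count (667,000 to 2,668,000) but only doubles the aggregated count (1,998 to 3,998).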

At present, Solana's block generation cycle is basically the same as a Slot, 0.4 to 0.8 seconds, which is even 4 times as fast as Avalanche. (A block shown on the Solana block explorer is essentially the transaction sequence posted by the Leader within one Slot.)

However, this also brings another problem: having the Leader publish the nodes' votes within the transaction sequence (block) takes up block space. In Solana's setup, Leader essentially treats consensus voting as a transaction event, and the transaction sequence it publishes contains the node voting, which is the main component of Solana TPS (typically more than 70%).

According to statistics from the Solana block explorer, its actual TPS hovers around 2,000-3,000, more than 70% of which are consensus voting messages that do not involve ordinary users; the actual TPS from user transactions is about 500-1,000. That is an order of magnitude higher than BSC, Polygon, EOS, and other high-performance public chains, but still short of the tens of thousands of TPS officially advertised.

Meanwhile, if Solana continues to enhance decentralization in the future and allows more nodes to participate in consensus voting (currently there are nearly 2000 Validators), the transaction sequence released by the Leader will definitely contain more voting messages, which will continue to compress the TPS space for user transactions. This means that it is hard for Solana to achieve higher TPS without sharding.
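The split can be checked with simple arithmetic, and the same arithmetic shows why more voters squeeze user throughput. The first part uses only the figures quoted above (2,000 to 3,000 total TPS, at least 70% of it votes). The second part is a hypothetical model: it assumes a fixed total capacity and vote traffic that grows in proportion to the number of voting Validators. That is a simplification for illustration, not a measured relationship, and the per-Validator vote rate is an invented parameter.

```python
def user_tps(total_tps: float, vote_share: float) -> float:
    """TPS left for user transactions once consensus votes are subtracted."""
    return total_tps * (1 - vote_share)


# Figures quoted in the text: 2,000-3,000 total TPS, at least 70% of it votes.
for total in (2_000, 3_000):
    print(total, round(user_tps(total, 0.70)))  # at most 600 and 900 user TPS


def user_tps_with_validators(capacity: float, validators: int, votes_per_validator: float) -> float:
    """Hypothetical model: capacity is fixed and each Validator adds a constant vote load."""
    return max(0.0, capacity - validators * votes_per_validator)


# Hypothetical parameters: 3,000 TPS capacity, 1 vote per Validator per second.
for validators in (2_000, 2_500, 3_000):
    print(validators, user_tps_with_validators(3_000, validators, 1.0))
```

Under this toy model, every additional voting Validator removes a fixed slice of user throughput, which is the squeeze the paragraph describes.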

To a certain extent, Solana's capacity of 500-1,000 user transactions per second has already reached the ceiling for non-sharded public chains. Among chains with many nodes, no sharding, and smart contract support, new public chains will find it hard to surpass Solana's TPS unless they adopt a “committee” model, where only a small number of nodes participate in consensus, or degenerate into a centralized server. As long as many nodes participate in consensus, achieving a higher “verifiable TPS” than Solana will be difficult.

It is particularly noteworthy that, in this respect, Solana's consensus protocol is not fundamentally different from the original Tendermint algorithm: the list of Leaders for each Epoch (2-4 days) is made public in advance, so Leaders are not unpredictable. Anyone can predict who the Leader will be at a given time, which raises many potential security/usability problems.

For example, Leaders are vulnerable to premeditated DDoS attacks, which raises their failure rate; if several Leaders fail in a row, the network is prone to downtime; and users can bribe upcoming Leaders in advance.

Gulf Stream and Network Downtime

Solana's public Leader list serves an even more important purpose: together with its novel Gulf Stream mechanism, it speeds up transaction processing across the network.

After a user initiates a transaction, the client program usually forwards it directly to a designated Leader, or ordinary nodes receive it first and quickly pass it on to Leaders. This lets Leaders receive transaction requests as early as possible and respond faster. This is the Gulf Stream mechanism, and it is one of the main causes of Solana's downtime.

This way of submitting transactions is very different from other public chains: Gulf Stream replaces the “global transaction pool” of Bitcoin and Ethereum, so ordinary nodes do not run large transaction pools. After a node receives a user's pending transaction, it simply hands it to the Leaders and does not send it on to other nodes. This significantly improves efficiency. However, without a transaction pool, ordinary nodes cannot efficiently intercept spam transactions, which can easily bring the Leader node down.
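Because the schedule is public, a forwarding node can decide where to send a transaction with a simple lookup instead of gossiping it to its peers. The sketch below assumes the Leader schedule is available as a list indexed by Slot. Forwarding to the current Leader plus the next few distinct Leaders is our illustrative policy, and `targets_for` is a hypothetical name, not a Solana API.

```python
def targets_for(schedule: list[str], current_slot: int, lookahead_slots: int = 8) -> list[str]:
    """Distinct Leaders from the current Slot through the next `lookahead_slots` Slots."""
    targets: list[str] = []
    end = min(len(schedule), current_slot + lookahead_slots + 1)
    for slot in range(current_slot, end):
        if schedule[slot] not in targets:  # keep order, skip repeats (4 Slots per Leader)
            targets.append(schedule[slot])
    return targets


schedule = ["A"] * 4 + ["B"] * 4 + ["C"] * 4 + ["A"] * 4  # public, known in advance
print(targets_for(schedule, current_slot=2))  # ['A', 'B', 'C']
```

Note what is missing: the forwarding node keeps no pool and performs no duplicate check, so it passes everything it receives straight to these Leaders.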

To fully understand this point, we can compare it with ETH:

- Every Ethereum full node has a storage area called a transaction pool (memory pool, or mempool) that holds pending transactions not yet included in a block.

- When a node receives a new transaction request, it first filters it to check whether the transaction is valid (for example, whether it is a duplicate or spam), then stores it in its transaction pool and forwards it to other nodes (similar to viral proliferation).

- Eventually, a legitimate pending transaction is passed around the network and placed in the pool of all nodes, which allows different nodes to get the same data and show “consistency”.
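A minimal model of the filter-store-forward behaviour described above: each node keeps a pool keyed by transaction hash, rejects malformed or already-seen transactions, and forwards new ones to its peers. The `tx:` prefix used as a validity rule, the ring topology, and the class and method names are hypothetical simplifications, not Ethereum client code.

```python
import hashlib


class Node:
    """Toy full node with a transaction pool (mempool) and gossip to peers."""

    def __init__(self, name: str):
        self.name = name
        self.pool: dict[str, bytes] = {}  # tx hash -> raw transaction
        self.peers: list["Node"] = []

    def receive(self, tx: bytes) -> None:
        if not tx.startswith(b"tx:"):  # stand-in for format and signature checks
            return
        tx_id = hashlib.sha256(tx).hexdigest()
        if tx_id in self.pool:  # duplicate: drop it here and do not forward it
            return
        self.pool[tx_id] = tx
        for peer in self.peers:  # gossip to neighbours
            peer.receive(tx)


a, b, c = Node("a"), Node("b"), Node("c")
a.peers, b.peers, c.peers = [b], [c], [a]  # a ring of three nodes
a.receive(b"tx:alice->bob:5")
a.receive(b"tx:alice->bob:5")  # duplicate is ignored
a.receive(b"garbage")  # malformed, rejected
print([len(n.pool) for n in (a, b, c)])  # [1, 1, 1]
```

The duplicate check is what stops the gossip loop: when the transaction comes back around the ring to `a`, it is already in the pool and goes no further.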

Ethereum and Bitcoin adopt this mechanism for a very clear reason: there is no way to know who the future Leader will be, and every node has some chance of producing the next block. So different nodes must all receive the same pending transactions in preparation for packing blocks.

When a mining pool node releases a new block, the nodes receiving it parse and execute its transaction sequence in order, and then remove those transactions from their transaction pools. At this point, that batch of pending transactions has made it onto the chain.

Solana does away with Ethereum-style transaction pools. Pending transactions do not need to spread randomly through the network. Instead, they are quickly submitted to a designated Leader, packaged into transaction sequences, and distributed at once (similar to the way votes are distributed, as described in the previous section). In the end, a transaction only needs to be propagated across the network once, inside a transaction sequence, whereas in Ethereum it is propagated twice in practice (once as a pending transaction and once in a block). Under high transaction volumes, this subtle difference can dramatically improve propagation efficiency.

However, according to the technical note on the transaction pool TxPool, a transaction pool (memory pool) essentially acts as a data buffer and filter that improves a public chain's capability. Because every node runs a transaction pool containing all pending transactions in the network, each node can independently filter spam requests and intercept duplicates at the earliest point, sharing the traffic pressure. Transaction pools slow down block generation, but they let the whole network share the work of filtering out duplicate transactions (requests already recorded in the pool) and other spam sent by users.

Solana takes the opposite approach. Under the Gulf Stream mechanism, ordinary nodes do not maintain a network-wide consistent transaction pool, so they cannot efficiently intercept duplicate or spam transactions. Ordinary nodes only check whether a transaction packet is correctly formatted and cannot identify malicious duplicate requests. And because they push transactions straight to the Leader, they effectively dump the “burden” of filtering onto the Leader. Under huge traffic with a large number of duplicate transactions, the Leader node becomes overloaded and block generation suffers. Eventually, consensus votes cannot propagate smoothly and the network can easily collapse.
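The contrast can be illustrated by counting what reaches the Leader under each approach. In this hypothetical setup, a burst of requests with many exact duplicates arrives at ordinary nodes. In the pool-based model, a shared set stands in for a network-wide transaction pool, so duplicates are dropped before the Leader. In the Gulf Stream model, nodes only check the format and pass everything on. The traffic pattern is invented for illustration and is not measured Solana data.

```python
import random


def leader_inbound(requests: list[bytes], prefilter_duplicates: bool) -> int:
    """Number of requests the Leader itself must handle."""
    seen: set[bytes] = set()  # stands in for a network-wide transaction pool
    forwarded = 0
    for tx in requests:
        if not tx.startswith(b"tx:"):  # both models drop malformed packets
            continue
        if prefilter_duplicates:
            if tx in seen:  # duplicate caught by ordinary nodes
                continue
            seen.add(tx)
        forwarded += 1  # this request is passed on to the Leader
    return forwarded


rng = random.Random(0)
unique = [f"tx:{i}".encode() for i in range(1_000)]
# Hypothetical burst: 10,000 requests, most of them repeats of the same 1,000 txs.
burst = [rng.choice(unique) for _ in range(10_000)]
print(leader_inbound(burst, prefilter_duplicates=True))  # at most 1,000
print(leader_inbound(burst, prefilter_duplicates=False))  # 10,000
```

With pre-filtering, the Leader's load is bounded by the number of distinct transactions; without it, the Leader absorbs the full burst and must do the deduplication itself.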

In this regard, Anatoly Yakovenko, the founder of Solana, said on January 27 this year that during the public sales of certain popular projects, nearly 2 million transaction requests per second reached the same Leader node, more than 90% of which were exact duplicates, eventually bringing Solana down.

*Reference: In-depth investigation: Why do new public chains have frequent downtime accidents?*

To sum up, while Ethereum is essentially sacrificing efficiency for security, Solana is sacrificing security for efficiency, and the problems it faces can be summarized as follows:

Because the Leader rotation order is predetermined, Leaders must take their turns in that order. However, due to the imperfect traffic-sharing mechanism, Leaders fail often. If user traffic is heavy for a period of time (for example, during some hot NFT public sales), multiple Leaders may fail in a row (for example, the Leaders of the next 40 Slots cannot produce blocks smoothly). Consensus then stalls, the network forks, and the Leader rotation chain breaks down completely until the network eventually collapses.

Turbine Propagation Protocol, Similar to BitTorrent

With the help of the Gulf Stream mechanism above, the Leader quickly receives all transaction requests within a period of time, checks their legitimacy, and executes them. At the same time, the Leader uses a mechanism called POH (Proof of History) to stamp each transaction with a sequence number, putting transaction events in order (details are given later).

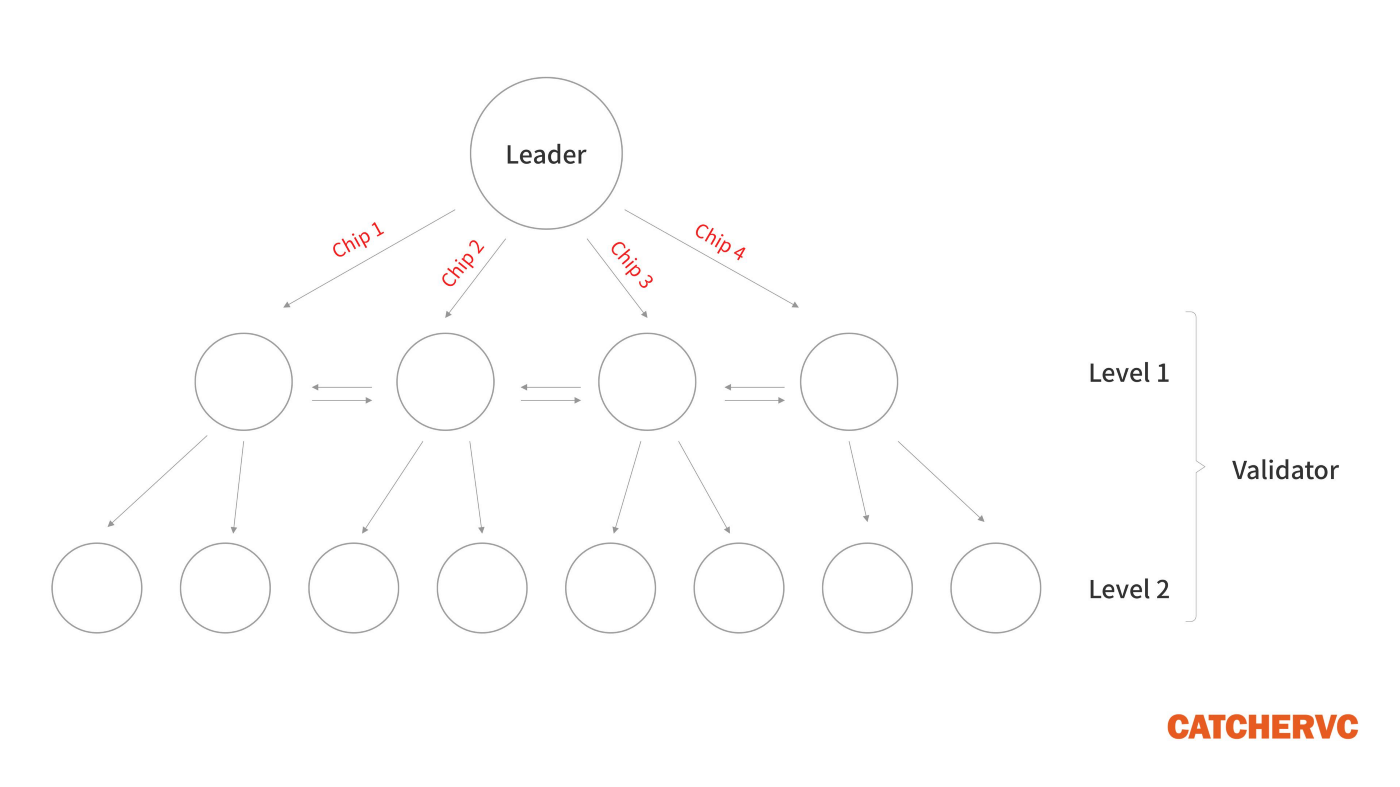

After sequencing the transaction events, the Leader slices the transaction sequence into X different pieces and sends one piece to each of the X Validators with the largest stakes, who in turn propagate them to other Validators. Validators exchange the pieces they receive and reassemble the complete transaction sequence (block) locally.

To make it easier to understand, we can treat each different piece as a small block with a reduced amount of data. Leader distributes X pieces at a time, i.e. sending out X different small blocks for different nodes to receive and further spread.

This message distribution scheme is inspired by BitTorrent. The principle is hard to put into words, but the main idea is to use the idle bandwidth of many nodes at once to transmit data in parallel. Generally speaking, the more pieces the transaction sequence is sliced into, the faster the node group can spread the pieces and reassemble the sequence, which significantly improves data synchronization efficiency.

In other public chains, the Leader sends the same block to X neighbouring nodes, that is, X copies of one block rather than X different pieces (small blocks), which creates serious data redundancy and wastes bandwidth. The root cause is that the traditional block structure is indivisible and cannot be transmitted flexibly. Solana simply replaces blocks with transaction sequences and combines them with the BitTorrent-like Turbine protocol to achieve high-speed data distribution and better TPS.
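A rough count of the Leader's upload makes the difference visible. The sketch below compares sending X full copies of a block with cutting it into X pieces and sending one piece to each of X Validators, who then exchange the pieces among themselves. The 1 MB sequence size and X = 200 are hypothetical values, and the model ignores the erasure-coding overhead and packet headers a real system adds.

```python
def split_into_pieces(data: bytes, x: int) -> list[bytes]:
    """Cut a transaction sequence into x roughly equal pieces."""
    size = -(-len(data) // x)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


block = bytes(1_000_000)  # hypothetical 1 MB transaction sequence
X = 200  # hypothetical number of first-hop Validators

pieces = split_into_pieces(block, X)
leader_upload_copies = X * len(block)  # traditional: X full copies of the block
leader_upload_pieces = sum(len(p) for p in pieces)  # Turbine-style: each piece sent once
print(leader_upload_copies, leader_upload_pieces)  # 200000000 1000000
```

In this model, the Leader's upload shrinks by a factor of X, and the receivers can still rebuild the original by concatenating the pieces in order.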

Solana has officially stated that a transaction sequence can be synchronized to all nodes within 0.5 seconds when the network has 40,000 nodes under the Turbine propagation protocol.

Meanwhile, under the Turbine protocol, nodes are divided into tiers (priorities) according to their stake weight. Validators with more stake receive the Leader's data first and then pass it on to the next tier. Under this mechanism, the group of nodes holding 2/3 of the network's stake weight is the first to receive the transaction sequences issued by the Leader, which speeds up confirmation of the ledger (block).
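The tiered ordering can be sketched as a tree built from a stake-sorted node list: the first layer holds the highest-stake nodes, and each node relays to a fixed number of nodes in the next layer. The fan-out of 200, the stake values, and the function name are assumptions for illustration. The sketch only shows why the number of layers, and therefore relay hops, grows logarithmically with the node count, which is consistent with the fast synchronization figure quoted above for 40,000 nodes.

```python
def turbine_layers(stakes: dict[str, int], fanout: int) -> list[list[str]]:
    """Group nodes into layers by descending stake; layer k holds up to fanout**(k+1) nodes."""
    ordered = sorted(stakes, key=lambda n: stakes[n], reverse=True)
    layers: list[list[str]] = []
    start, width = 0, fanout
    while start < len(ordered):
        layers.append(ordered[start:start + width])
        start += width
        width *= fanout  # each node in this layer relays to `fanout` nodes below
    return layers


stakes = {f"node-{i}": 100_000 - i for i in range(40_000)}  # hypothetical stakes
layers = turbine_layers(stakes, fanout=200)
print([len(layer) for layer in layers])  # [200, 39800]
```

With these assumptions, 40,000 nodes fit in just two layers, and the highest-stake nodes always sit in the first one.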

According to data from the Solana block explorer, 2/3 of the stake weight is currently held by 132 nodes. Under the propagation mechanism described above, these nodes are the first to receive the Leader's transaction sequences and to vote on them. As soon as the Leader collects votes from these 132 nodes, its transaction sequence can be finalized. In a sense, these nodes are ahead of all the others, and if they conspired with one another, the consequences could be harmful.

More noteworthy still, 25 Solana nodes currently hold 1/3 of the stake weight. According to Byzantine Fault Tolerance theory, if these 25 nodes collude (for example, by deliberately withholding their votes from a Leader), that alone is enough to stall finality and throw the Solana network into chaos. To some extent, the “oligarchy” problem Solana faces should concern every public chain that uses POS voting.
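Counts such as “132 nodes for 2/3” and “25 nodes for 1/3” come from sorting validators by stake and accumulating stake until a threshold is reached. The helper below performs that calculation. The function name is ours, and the stake list in the example is made up, so its outputs say nothing about the real network.

```python
from fractions import Fraction


def nodes_to_reach(stakes: list[int], share: Fraction) -> int:
    """Smallest number of largest stakers whose combined stake reaches `share` of the total."""
    total = sum(stakes)
    running = 0
    for count, stake in enumerate(sorted(stakes, reverse=True), start=1):
        running += stake
        if Fraction(running, total) >= share:
            return count
    return len(stakes)


stakes = [40, 25, 15, 10, 5, 5]  # hypothetical stake distribution (total 100)
print(nodes_to_reach(stakes, Fraction(1, 3)))  # 1 (40 >= 33.3)
print(nodes_to_reach(stakes, Fraction(2, 3)))  # 3 (40 + 25 + 15 = 80)
```

Running the same function on real stake data would reproduce the kind of concentration figures quoted above.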

POH (Proof Of History)

As mentioned before, the Turbine protocol lets the Leader slice transaction sequences and release the pieces separately. This relies on one guarantee: once sliced, a transaction sequence must be easy to reassemble. To ensure this, Solana adds erasure codes (error-correcting codes that guard against data loss) to the packets and introduces its novel POH (Proof Of History) mechanism for ordering transaction events.

In the Solana whitepaper, Yakovenko uses the SHA256 hash function to demonstrate the principle of POH. For ease of understanding, this article explains the POH mechanism with the following example.

Since POH and its time-evolution logic are hard to describe in words, we recommend reading the POH section of the Solana whitepaper (Chinese edition) first and then using the following as a supplement.

- SHA256 is deterministic: a given input X always yields the same output SHA256(X). Because SHA256 is collision-resistant, different inputs X yield different outputs in practice.

- Suppose SHA256 is computed iteratively. Define X2 = SHA256(X1), then use X2 to compute X3 = SHA256(X2), then X4 = SHA256(X3), and so on, so that Xn = SHA256(X[n-1]).

- Iterating this process yields a sequence X1, X2, X3, ..., Xn with one key property: Xn = SHA256(X[n-1]), so each Xn is the “descendant” of the previous X[n-1].

- Once the sequence is published, an outsider who wants to verify it, for example by checking whether Xn really is the “legitimate descendant” of X[n-1], can simply feed X[n-1] into SHA256 and see whether the result equals Xn.

- In this scheme, you cannot get X2 without X1, or X3 without X2, and without Xn you cannot get the X[n+1] that follows. The sequence therefore has continuity and uniqueness.

- The most critical point is that transaction events can be inserted into the sequence.

For example, after X3 is produced and before X4 appears, transaction event T1 can be taken as an external input and combined with X3 to obtain X4 = SHA256(X3 + T1). Here X3 appears slightly before T1, and X4 is the descendant of (X3 + T1), so T1 is pinned between the “birthdays” of X3 and X4.

Similarly, T2, arriving after X8, can be used as an external input to obtain X9 = SHA256(X8 + T2), pinning the appearance of T2 between the “birthdays” of X8 and X9.

- In the above scenario, the actual POH sequence takes the following form: X1, X2, X3, T1, X4, X5, X6, X7, X8, T2, X9, ..., Xn.

Here, T1 and T2 are data inserted into the sequence from outside. In the POH sequence's time record, T1 is later than X3 and earlier than X4, and T2 is later than X8 and earlier than X9.

Given T1's position in the POH sequence, we know how many SHA256 calculations occurred before it (T1 is preceded by X1, X2, and X3, so three SHA256 calculations have occurred).

The same goes for T2, which is preceded by the eight values X1 to X8, so eight calculations have occurred.
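The hash chain described above can be reproduced in a few lines. In this sketch, X1 is itself the hash of a seed, so Xn is the n-th SHA256 computation, matching the counting in the text. An event inserted after Xn is appended to Xn's bytes before hashing, giving X[n+1] = SHA256(Xn + T). The seed, the plain byte concatenation, and the function names are assumptions for illustration; this is not Solana's exact encoding.

```python
import hashlib
from typing import Optional


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def next_hash(prev: bytes, event: Optional[bytes] = None) -> bytes:
    """X[n+1] = SHA256(Xn), or SHA256(Xn + T) when an event T is inserted after Xn."""
    return sha256(prev + event) if event is not None else sha256(prev)


def build_poh(seed: bytes, length: int, events: dict[int, bytes]) -> list[bytes]:
    """Return [X1, ..., X_length]; events[n] is inserted after Xn."""
    chain = [sha256(seed)]  # X1 counts as the 1st hash computation
    for n in range(1, length):  # chain[-1] is Xn at this point
        chain.append(next_hash(chain[-1], events.get(n)))
    return chain


def verify_links(chain: list[bytes], events: dict[int, bytes]) -> bool:
    """Check every link: chain[i] holds X[i+1], which must be derived from X[i]."""
    return all(chain[i] == next_hash(chain[i - 1], events.get(i)) for i in range(1, len(chain)))


events = {3: b"T1", 8: b"T2"}  # T1 arrives after X3, T2 after X8
chain = build_poh(b"genesis", 12, events)
print(chain[3] == sha256(chain[2] + b"T1"))  # X4 == SHA256(X3 + T1): True
print(verify_links(chain, events))  # True

forged = list(chain)
forged[1] = sha256(b"fake")  # replace X2 with X2'
print(verify_links(forged, events))  # False: X2' is not SHA256(X1)
```

Each check touches only two adjacent values, which is why anyone can verify the sequence cheaply even though producing it required every hash to be computed in order.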

In non-technical terms: a man counts seconds with a stopwatch, and whenever he receives a letter, he writes the current stopwatch reading on the envelope. After he has received ten letters, the times recorded on them are all different and clearly ordered, which gives the letters a sequence. From the recorded times, we can also tell how much time passed between any two letters.

- When releasing transaction sequences, the Leader only needs to include X3 and its serial number (3) in T1's packet, and a Validator receiving the packet can then reconstruct the complete POH sequence up to T1. The same goes for T2: as long as T2's packet contains X8 and its serial number 8, the Validator can reconstruct the complete POH sequence up to T2.

- Under the POH scheme, the order of each transaction can be disclosed by marking its serial number (Counter) in the POH sequence together with the X value immediately preceding it (Last Valid Hash). Because of the properties of SHA256 itself, an order fixed by hash computation is hard to tamper with.

Meanwhile, Validators know how the Leader builds the POH sequence, so they can perform the same computation to restore the complete POH sequence and verify the data posted by the Leader.

For example, if the Leader publishes the transaction sequence data packet as:

- T1, serial number 3, immediately adjacent to X3.

- T2, serial number 5, immediately adjacent to X5.

- T3, serial number 8, immediately adjacent to X8.

- T4, serial number 10, immediately adjacent to X10.

- POH sequence initial value is X1.

Once a Validator receives the above packet, it can take X1 as the initial value, iterate SHA256 itself, and reconstruct the complete POH sequence as:

X1, X2, X3, T1, X4, X5, T2, X6, X7, X8, T3, X9, X10, T4, ...

In this way, as long as we know how many Xs the sequence contains, we know how many SHA256 calculations the Leader has performed. By estimating in advance how long each hash computation takes, we can derive the time interval between transactions (for example, if T1 and T2 are separated by 2 Xs, they are separated by 2 SHA256 computations, which corresponds to a known, small amount of time). Knowing the time between transactions makes it much easier to determine when each transaction occurred, saving a lot of troublesome work.
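The Validator's side can be sketched the same way: starting from X1, it recomputes the chain, checks that each published (counter, last valid hash) pair matches, and converts the hash count between two events into time. The packet layout mirrors the T1 to T4 example above, and the 2,000,000 hashes per second rate is the nominal default quoted later in this article. The function names and the byte-concatenation encoding are assumptions for illustration.

```python
import hashlib
from typing import Optional

HASHES_PER_SECOND = 2_000_000  # nominal default rate, used only to convert counts to time


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def replay(x1: bytes, length: int, events: dict[int, bytes]) -> list[bytes]:
    """Rebuild [X1, ..., X_length] from X1, mixing each event into the hash after it."""
    chain = [x1]
    for n in range(1, length):
        event: Optional[bytes] = events.get(n)
        prev = chain[-1]
        chain.append(sha256(prev + event) if event is not None else sha256(prev))
    return chain


def check_packet(x1: bytes, packet: list[tuple[bytes, int, bytes]], length: int) -> bool:
    """Packet entries are (event, counter, last valid hash X_counter)."""
    events = {counter: event for event, counter, _ in packet}
    chain = replay(x1, length, events)
    return all(chain[counter - 1] == last_hash for _, counter, last_hash in packet)


# Leader side (for the example): build the chain and publish the packet.
x1 = sha256(b"genesis")
events = {3: b"T1", 5: b"T2", 8: b"T3", 10: b"T4"}
leader_chain = replay(x1, 12, events)
packet = [(e, c, leader_chain[c - 1]) for c, e in sorted(events.items())]

print(check_packet(x1, packet, 12))  # True
gap = 5 - 3  # T1 follows X3 and T2 follows X5, so X4 and X5 lie between them
print(gap / HASHES_PER_SECOND)  # 1e-06 seconds at the nominal rate
```

If any event's content is altered, every hash after it changes, so a later checkpoint in the same packet no longer matches.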

- In general, the Leader will keep executing the SHA256 function all the time to get a new X and move the sequence forward. If there is a transaction event, it is inserted into the sequence as an external input.

- If a node tries to publish a fake sequence to replace the Leader's version, for example by replacing X2 with X2' so that the sequence becomes X1, X2', X3, ..., Xn, others only need to compare X3 with SHA256(X2') to see that the two do not match, which shows the sequence is fake.

The counterfeiter would therefore have to replace every X after X2', which is very costly and time-consuming when the sequence is long. The rational choice is not to fake the sequence at all, but simply to forward it to other nodes after receiving it from the Leader.

Since the Leader also adds its digital signature to every packet it releases, the sequence disseminated in the network is effectively “unique” and “tamper-proof”.

Network-wide Consistent Time Progression

Yakovenko, the founder of Solana, has emphasized that the biggest role of POH is to provide a “globally consistent single clock” (that is, network-wide consistent time progression).

This statement can be understood as follows:

The Leader publishes a unique, tamper-proof transaction sequence within the network. From the packets of this transaction sequence, a node can reconstruct the complete POH sequence. POH is Solana's original timing method and can serve as a time reference.

As mentioned earlier, since the Leader keeps executing SHA256 hash computations to move the POH sequence forward, the sequence records the results of N hash computations. Because each computation takes time, N also encodes how much time has passed, and Solana treats this count of calculations as its own unit of time.

In the original parameter settings, 2 million hash calculations correspond by default to 1 second in reality, and each Slot lasts 400 ms, so the POH sequence generated in each Slot contains 800,000 hash calculations. Solana also coined the term Tick, after the ticking of a clock hand as it advances. Under these settings, each Tick contains 12,500 hash calculations, one Slot contains 64 Ticks, and 160 Ticks correspond to one second in reality.
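The nominal parameters can be cross-checked with a few lines of arithmetic. This is only a sketch of the idealized settings quoted above, not values read from a running cluster.

```python
# Nominal defaults quoted in the text; a live cluster tunes these at runtime.
HASHES_PER_SECOND = 2_000_000
SLOT_MS = 400
TICKS_PER_SLOT = 64

hashes_per_slot = HASHES_PER_SECOND * SLOT_MS // 1000   # hashes in one 400 ms Slot
hashes_per_tick = hashes_per_slot // TICKS_PER_SLOT     # hashes in one Tick
ticks_per_second = TICKS_PER_SLOT * 1000 // SLOT_MS     # Ticks per real second

print(hashes_per_slot, hashes_per_tick, ticks_per_second)  # 800000 12500 160
```

The three figures are mutually consistent: 12,500 hashes per Tick times 160 Ticks per second gives back the 2 million hashes per second.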

These values are only the idealized setting. In actual operation, the number of hash calculations produced per second is not fixed, so the real parameters are adjusted dynamically. Still, the description above captures the general logic of the POH mechanism: after receiving the POH sequence, a Solana node can tell from the number of hash calculations it contains whether a Slot has ended and whether it is time for the next Leader to take over (each Leader serves 4 consecutive Slots).

Since the starting point of each Slot can be determined, a Validator groups the transactions that fall between two consecutive starting points into one block. Once that block is finalized, the ledger advances by one block and the system advances by one Slot.
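A node's reasoning about Slot boundaries, Leader rotation and block grouping can be sketched as follows, assuming the nominal 800,000 hashes per Slot and a 4-Slot rotation. The Leader list and transaction IDs are hypothetical stand-ins; Solana's real Leader schedule is derived from stake and published in advance, which this sketch does not model.

```python
from __future__ import annotations

from collections import defaultdict

HASHES_PER_SLOT = 800_000   # nominal: 2 million hashes/s x 0.4 s
SLOTS_PER_LEADER = 4        # each Leader serves 4 consecutive Slots


def slot_of(hash_pos: int) -> int:
    """0-based Slot index of the hash at 0-based position hash_pos."""
    return hash_pos // HASHES_PER_SLOT


def leader_for_slot(slot: int, schedule: list[str]) -> str:
    """Look up the Leader for a Slot in a published list that rotates every 4 Slots."""
    return schedule[(slot // SLOTS_PER_LEADER) % len(schedule)]


def split_into_blocks(txs: list[tuple[int, str]]) -> dict[int, list[str]]:
    """Group (hash_pos, tx_id) pairs into one block per Slot, in sequence order."""
    blocks: dict[int, list[str]] = defaultdict(list)
    for pos, tx in sorted(txs):
        blocks[slot_of(pos)].append(tx)
    return dict(blocks)


schedule = ["node-A", "node-B", "node-C"]   # hypothetical Leader list
print(slot_of(3_300_000), leader_for_slot(slot_of(3_300_000), schedule))  # 4 node-B

txs = [(10, "T1"), (799_999, "T2"), (800_000, "T3"), (1_700_000, "T4")]
print(split_into_blocks(txs))  # {0: ['T1', 'T2'], 1: ['T3'], 2: ['T4']}
```

Because every node counts the same hashes, every node draws the Slot boundaries, and therefore the blocks, in the same places without exchanging any messages.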

***This can be summed up in one sentence:***

As Neon Genesis Evangelion put it: “The hands of the clock don't turn back, but we can push them forward with our own hands.”

In other words, as long as all nodes receive the same transaction sequence, they all reconstruct the same POH sequence and therefore observe the same time progression. This creates a “globally consistent single clock” (consistent time progression across the network).

In the original Tendermint algorithm, every node appends the same blocks to its local ledger; the blocks are highly consistent and almost never fork. So by Solana's interpretation of “time advancement”, time should also advance consistently across Tendermint nodes.

In addition, since the position of each transaction event in the POH sequence is fixed, nodes can work out on their own how many hash calculations (how many Xs) separate two transactions, and from that roughly estimate the time lag ΔT between them.

With the approximate ΔT and one known starting timestamp (TimeStamp 0), the moment at which each event occurred (its TimeStamp) can be roughly estimated in turn, like a row of falling dominoes.

For example:

T1 occurs at 01:27:01, and T2 is separated from T1 by 10,000 hash calculations (10,000 Xs). If 10,000 hash calculations take about 1 second, T2 occurs roughly 1 second after T1, at 01:27:02. Continuing this way, the times (TimeStamps) of all transaction events can be roughly estimated, which is very convenient and lets nodes independently confirm when certain data was delivered.
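The domino-style estimate from this example can be written as a small function. It assumes a constant hashing rate (the example's 10,000 hashes per second) and one anchor event with a known time; the calendar date and the third event are hypothetical additions.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def estimate_timestamps(t0: datetime, positions: list[int],
                        hashes_per_second: float) -> list[datetime]:
    """Chain time estimates from one known timestamp, like falling dominoes.

    positions[0] is the anchor event whose time t0 is known; every other event's
    time is t0 plus (hash distance / hashing rate).
    """
    anchor = positions[0]
    return [t0 + timedelta(seconds=(p - anchor) / hashes_per_second) for p in positions]


# T1 at 01:27:01, T2 10,000 hashes later, plus a hypothetical T3 at 25,000 hashes.
t1 = datetime(2022, 1, 1, 1, 27, 1)   # arbitrary date; only the time comes from the text
stamps = estimate_timestamps(t1, [0, 10_000, 25_000], hashes_per_second=10_000)
print([s.time().isoformat() for s in stamps])
# ['01:27:01', '01:27:02', '01:27:03.500000']
```

Any error in the assumed rate scales every estimate proportionally, which is why the text calls these times rough.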

The POH mechanism also makes it easy to time node voting. The Solana White Paper proposes that a Validator should cast its vote within 0.5 seconds after each time the Leader releases State information.

Suppose 0.5 seconds corresponds to 1 million hash calculations (1 million Xs in the notation of the previous section). If a node's vote does not appear anywhere in the 1 million consecutive hash calculations that follow the Leader's State release, everyone can see that the node slacked off and failed to vote within the specified time, and the system can then apply the corresponding penalty (Tower BFT).
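The vote-timeliness check can be sketched by comparing hash positions. This assumes the 1-million-hash window from the example; the validator names and positions are hypothetical, and the sketch only flags late or missing votes. It does not model Tower BFT's actual penalty rules.

```python
from __future__ import annotations

# 0.5 s window expressed as a hash count, assuming 2 million hashes per second.
VOTE_WINDOW_HASHES = 1_000_000


def voted_in_time(release_pos: int, vote_pos: int | None) -> bool:
    """True if the vote appears within the window of hashes after the State release."""
    if vote_pos is None:
        return False
    return release_pos < vote_pos <= release_pos + VOTE_WINDOW_HASHES


def slackers(release_pos: int, votes: dict[str, int | None]) -> list[str]:
    """Return the validators whose vote is late or missing (candidates for a penalty)."""
    return sorted(v for v, pos in votes.items() if not voted_in_time(release_pos, pos))


votes = {"val-A": 5_400_000, "val-B": 6_200_000, "val-C": None}  # hypothetical
print(slackers(5_000_000, votes))  # ['val-B', 'val-C']
```

Because the window is measured in hashes rather than wall-clock seconds, every node reaches the same verdict about who was late.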

Similarity with Optimism

The above is Solana's original POH (Proof Of History). It resembles the transaction ordering used by Optimism and Arbitrum: both rely on hash-function-based calculations to establish a “tamper-proof” and “uniquely determined” transaction sequence, which the Leader/Sequencer then publishes to Validators/Verifiers.

Optimism has a Leader-like role called the Sequencer, which likewise replaces the block structure in data transmission. It periodically publishes transaction sequences to a contract address on Ethereum, and each Validator reads and executes them by itself. Since all Validators receive the same transaction sequences, their post-execution states must be the same. By comparing its own State with the Sequencer's, each Validator can check correctness without communicating with other nodes.
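The Optimism-style check, replaying the same sequence and comparing the result with the Sequencer's claimed State, can be sketched with a toy account model. Everything here is an assumption for illustration: the transfer format, the rule for skipping invalid transfers, and the JSON-hash “state root” (Optimism's real state commitment is different).

```python
import hashlib
import json


def apply_tx(state: dict, tx: tuple) -> dict:
    """Apply one transfer (sender, receiver, amount); skip it if the sender lacks funds."""
    sender, receiver, amount = tx
    if state.get(sender, 0) < amount:
        return state
    new_state = dict(state)
    new_state[sender] = new_state.get(sender, 0) - amount
    new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


def state_root(state: dict) -> str:
    """Hash a canonical JSON encoding of the state (a stand-in for a real state root)."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def replay(genesis: dict, txs: list) -> str:
    """Execute the published sequence in order, exactly as every Validator would."""
    state = genesis
    for tx in txs:
        state = apply_tx(state, tx)
    return state_root(state)


def should_challenge(genesis: dict, txs: list, claimed_root: str) -> bool:
    """A Validator challenges when its own result differs from the Sequencer's claim."""
    return replay(genesis, txs) != claimed_root


genesis = {"alice": 10, "bob": 0}
txs = [("alice", "bob", 3), ("bob", "carol", 1)]
honest_root = replay(genesis, txs)
print(should_challenge(genesis, txs, honest_root))  # False
print(should_challenge(genesis, txs, "00" * 32))    # True
```

Because the replay is deterministic, every honest Validator computes the same root without talking to any other Validator; a mismatch is what would trigger a challenge. Note that the replay loop is inherently sequential, one transaction after another.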

In Optimism's consensus mechanism, Validators are not required to interact with each other or collect votes, so the “consensus” is effectively invisible. If a Validator executes the transaction sequences and finds the Sequencer/Leader's State wrong, it can send a “challenge” to dispute it. However, in this model Optimism sets a 7-day window before transaction events are finalized: after the Sequencer releases a transaction sequence, it is confirmed only if no challenge is raised for 7 days, which is obviously unacceptable to Solana.

Solana requires Validators to vote as soon as possible so that the network reaches consensus and finalizes the transaction sequence quickly, which makes it more efficient than Optimism in this respect.

In addition, Solana is more flexible in how it distributes and validates transaction sequences: a sequence can be sliced and distributed in pieces, which lays the perfect groundwork for the Turbine protocol.

At the same time, Solana allows nodes to run multiple computing units simultaneously to verify different fragments in parallel, splitting up the verification work and saving significant time. Optimism and Arbitrum do not allow this. Optimism presents transaction sequences as a Transaction-to-State mapping, with each transaction corresponding to one post-execution State, so verifying the entire sequence has to be done by a single CPU core step by step from beginning to end, which is heavier and less efficient. By contrast, Solana's POH sequence can be verified from any position, and multiple computing units can check different POH pieces simultaneously, which provides the basis for a multi-threaded parallel verification model.
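The parallel verification idea can be sketched as follows. It assumes the Leader publishes the hash at regular checkpoints, so each segment between two checkpoints can be verified independently. The checkpoint spacing and function names are hypothetical, and transactions mixed into the chain are omitted for brevity.

```python
from __future__ import annotations

import hashlib
from concurrent.futures import ThreadPoolExecutor


def hash_chain(start: bytes, n: int) -> bytes:
    """Apply SHA256 n times in a row, starting from start."""
    x = start
    for _ in range(n):
        x = hashlib.sha256(x).digest()
    return x


def make_checkpoints(seed: bytes, segments: int, per_segment: int) -> list[bytes]:
    """Leader side (hypothetical): record the hash at every segment boundary."""
    points = [seed]
    for _ in range(segments):
        points.append(hash_chain(points[-1], per_segment))
    return points


def verify_segment(start: bytes, end: bytes, n: int) -> bool:
    """Check one segment: n hashes from start must land exactly on end."""
    return hash_chain(start, n) == end


def verify_parallel(points: list[bytes], per_segment: int, workers: int = 4) -> bool:
    """Verify all segments concurrently; the sequence is valid only if every segment is."""
    n_segments = len(points) - 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(verify_segment, points[:-1], points[1:], [per_segment] * n_segments)
        return all(results)


points = make_checkpoints(b"seed", segments=8, per_segment=1_000)
print(verify_parallel(points, 1_000))    # True

tampered = list(points)
tampered[5] = b"fake checkpoint"
print(verify_parallel(tampered, 1_000))  # False
```

Generating the chain is strictly sequential, but checking it is not: each worker only needs its own start and end checkpoints. Python threads show how the work splits but give little real speedup here, since hashing 32-byte inputs holds the interpreter lock; Solana's verifier is designed to spread this kind of work across CPU cores and GPUs.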

Vertical Scaling For the Nodes Themselves

In addition to the improvements to block generation, consensus and data propagation described above, Solana has also created mechanisms called Sealevel, Pipelining and Cloudbreak, which support multi-threaded, parallel and concurrent execution and allow GPUs to be used for computation. These greatly increase the speed at which nodes process instructions and make better use of hardware resources, which falls under vertical scaling. Since the technical details are complicated and not central to this paper, we will not elaborate on them here.

Although Solana's vertical scaling significantly speeds up how node devices process transaction instructions, it also raises the bar for hardware. Solana's node requirements are currently so high that many rate them as “enterprise-class hardware”, and critics call it “the most expensive public chain in terms of node equipment”.

The following are the hardware requirements for Solana's Validator nodes:

A CPU with 12 cores/24 threads or more, at least 128 GB of memory, a 2 TB SSD, and network bandwidth of at least 300 Mbit/s (1 Gbit/s preferred).

Compare that with the hardware requirements of current Ethereum nodes (before the transition to POS):

A CPU with 4 or more cores, at least 16 GB of memory, a 0.5 TB SSD, and network bandwidth of at least 25 Mbit/s.

Since Ethereum's node hardware requirements will drop after its transition to POS, Solana's requirements are far higher than Ethereum's. Some claim that the hardware cost of one Solana node equals that of several hundred post-transition POS Ethereum nodes. Because operating a node is so expensive, running the Solana network has largely become a privilege of whales, professional institutions and enterprises.

In response, Gavin Wood, former CTO of Ethereum and founder of Polkadot, commented after Solana's first downtime last year that real decentralization and security are more valuable than high efficiency: if users cannot run full nodes of a network themselves, the project is no different from a traditional bank.

Full Summary

- Solana's scaling rests mainly on three measures: efficient use of network bandwidth, less frequent communication between nodes and faster transaction processing by nodes. These measures directly shorten block generation and consensus communication time, but they also reduce system capability (security).

- Solana discloses the list of Leaders in each Slot in advance, which essentially reveals a single trusted data source and thus significantly streamlines the consensus communication process. However, disclosing Leader information brings potential security risks such as bribery and targeted attacks.

- Solana treats consensus communication (voting messages) as transaction events, so consensus messages often make up more than 70% of the reported TPS, and the real TPS from user transactions is only around 500–1000.

- Solana’s Gulf Stream mechanism essentially removes the global transaction pool, and although this improves the transaction processing speed, ordinary nodes will not be able to efficiently intercept spam transactions, and Leaders will face tremendous pressure, which can easily cause downtime. If a Leader is down, consensus messages cannot be published as scheduled and the network is prone to fork or even collapse.

- Solana’s Leader publishes transaction sequences instead of real blocks. Combined with the Turbine propagation protocol, transaction sequences can be sliced and distributed to different nodes, and the final data synchronization is extremely fast.

- POH (Proof Of History) is essentially a timing and counting method that stamps different transaction events with tamper-proof serial numbers to generate a transaction sequence. Since only a single Leader releases the transaction sequence (including the POH timing sequence) at any given time, the Leader effectively releases a globally consistent timer/clock. So within a short window, ledger advancement and time progression are the same for different nodes.

- In Solana, 132 nodes hold 67% of the staked assets, and just 25 of them hold 33%, creating an oligarchy. If these 25 nodes collude, that is enough to throw the network into chaos.

- Solana sets a high bar for node hardware and achieves vertical scaling by raising equipment costs. But this also means running a Solana node is largely reserved for whales, organizations and enterprises, which is at odds with the vision of decentralization.

In a sense, Solana has become a unique presence among public chains. With its high-end node hardware, revolutionary consensus mechanism and network propagation protocol, it has pushed the scaling narrative to the extreme, reaching the long-standing bottleneck of non-sharded public chains.

In the long run, decentralization and security will always be the core narrative of the public chain field. Riding its peak TPS figures and the backing of financial giants such as SBF, Solana became a hit supported by floods of capital. However, the fate of EOS has shown that the Web3 world does not need pure marketing and raw efficiency; only projects with real usability can withstand the test of history.

Shout out to Mr. Liu Yang, author of Embedded System Security, the Rebase community and the W3.Hitchhiker team for their help with this article.

Reference

- Solana White Paper in Chinese

- Gulf Stream: Solana's Mempool-less Transaction Forwarding Protocol

- Turbine Block Propagation

- Solana Research Report

- Solana’s Journey

- How is Solana, a high-performance public chain partnered with Coinwallet, speeding up?

- Overclock blockchain to 710,000 transactions per second: a review of the Proof of History algorithm

- Solana's “Cinderella story” - SQLANA

- PoS and Tendermint Consensus Explained

- Ethereum source code analysis: transaction buffer pool txpool

- Ethereum transaction pool architecture design

- PBFT basic process

- Tendermint-2-Consensus Algorithm: Tendermint-BFT Explained

- Cardano (ADA) consensus algorithm Ouroboros

- In-depth investigation: why new public chains have frequent downtime accidents?

- In-depth Interpretation of Optimism: Basic Architecture, Gas Mechanism and Challenges | CatcherVC Research

- Dissecting the New Version of Metis: The Decentralization of Gas Minimum Layer2 in Progress | CatcherVC Research