Look at your surroundings.

Now, explain how you got here.

Did your answer include the creation of the universe, perhaps The Big Bang? If not, your answer is incomplete.

A Reason is Born

Don’t be too hard on yourself. This prompt is typically interpreted as a request for the most immediate and significant reasons for being here, such as: “I fell off my bike and broke my arm, so now I am in the ER”. Your explanation did not need to be 100% complete to sufficiently answer the question. For obvious reasons, it is impractical to describe The Big Bang and then every sequential moment that led to you falling off your bike. Nonetheless, let’s examine the reasons: (1) ain’t nobody got time for that. (2) any attempt at a complete explanation would be riddled with false assumptions and inaccuracies. Nobody has observed every moment in spacetime, nor possesses the memory to remember, nor the processing capability to fully comprehend these moments. The main point here is that there is a reason for being (not to be confused with purpose, although purpose is an important part of this story). The reason that ‘you’ exist is because your dad’s sperm fertilized your mom’s egg. Somewhere along the way, ‘you’ came into existence*. However, both this explanation and the previous answer to “Why did you fall off your bike?” require infinite precision to achieve perfect accuracy (we obviously do not care about 100% accuracy in all cases, nor is 100% accuracy attainable). This is what we will explore in this post.

Elements of the Mind

We usually do not care about The Big Bang when examining our current position in life. Instead, let’s view the most important epoch of history -- your lifetime -- everyone is the hero of their own story.

Let’s begin at the moment in which ‘you’ obtain consciousness** (ironically, a moment that nobody remembers). Upon accessing reality for the first time, you are immediately introduced to the cause and effect protocol of the universe, become aware of your existence (‘consciousness’), as well as develop an awareness of ‘thoughts’ and a procedure of thoughts (‘thinking’), obtain (some level of) control over your body and thinking (‘agency’), and privately experience a sequence of sensations (‘perception’). We eventually learn about reality by perceiving stimuli and conceiving a model of its existence and relations to other phenomena (‘intelligence’, ‘cognition’, ‘understanding’, or as I like to say, ‘the ability to connect dots’). In this case, the dots are bits of observed data (‘information’) which are interpreted into truths (‘knowledge’) and stored in our ‘memory’ as associations. It is almost as if reality is a Turing-Complete system (a BIG assumption, formerly known as the Turing Principle), and our brains are a universal Turing Machine (another BIG assumption in the Church-Turing computational theory of mind). The conspicuous mystery in the brain-computer analogy is our ‘imagination’ -- the power to “see without seeing”. It is not obvious how a program for imagination would run on a Turing Machine, making humans extra interesting. The human brain (our “interpretation machine”) is unique in the world and, despite tremendous progress in neuroscience, still not very well understood. We are also skipping the sub-conscious element of this model for now … but will discuss it later.

Trapped In The Present (Mind)

A primary justification to study history is to learn knowledge that helps us in the future. At birth, the knowledge at our disposal is inherited from our birth family -- programmed in our genes or endowed via generational wealth (the type of wealth that confers power to effectively control one's environment) -- and depends on when, where, and into what circumstances we are born. At this point, our minds have yet to be infiltrated by the ideas of our contemporaries. We commence our personal learning journey by observing phenomena and deciphering which of those punish us with pain and reward us with pleasure. In the pursuit of our desires, we eventually learn to use language to communicate with others, trade ideas and inspiration with them, and trust their observations and accounts of history***. After all, we cannot physically observe everything ourselves, and humans have merely scratched the surface of permissible experiences. Furthermore, your first conscious moment is different from my first moment, and our experiences may never overlap in any significant way (disregarding butterfly effects for now ... and the fact that you just read a sentence that I wrote).

We can hardly recount the past with confidence, especially past events that we did not personally observe. Even when we are the primary source, our memory is fuzzy since our brain stores information in a compressed and unreliable manner, like a computer hard drive, occasionally failing upon retrieval. (That said, we do not have the faintest idea of how the brain stores or recalls memory. Our memory could utilize a vastly different mechanism than modern computers. Memory caches and RAM seem to roughly correlate to short-term memory and long-term memory respectively, but the analogy has glaring disparities.) You will trust your memory even less upon realizing that memories and hypotheticals are metaphysically identical, both existing as a pendulous thought in the mind. Each of these thoughts complies with our present experience and current conception of the world -- a memory supposing what happened and a hypothetical envisioning what can happen (or what could have happened in another time or space … perhaps in the future or past or in another possible world). Given the limits of our brain, it only seems fair to offload some of the processing to our peers, and in modern times, to our computers too. The library gives us a head start on our learning journey by instantly exposing us to Lindy ideas and novelty, but canonical teachings can also be misleading when approached without vigilance. The famous motto from The Royal Society is pertinent here -- Nullius in Verba -- “take nobody’s word for it”.

Narratives are more convincing when there is evidence to corroborate their truth. However, evidence is simply circumstantial information. It does not have the power alone to prove, or even directly support, the truth of a story. The sole power of evidence is refutation -- disqualifying a conclusion in conflict with the evidence -- thus indirectly supporting the surviving conclusion(s). (And it only “supports” when there is a finite set of competing conclusions, since the “support” points equally towards all remaining competitors.) Proof is discovered by proposing a valid conclusion, one that is consistent with the current evidence and most resilient to the evidence yet to be discovered in the future. We commonly “jump to a conclusion” by conflating evidence with proof. Instead, we should think “what world could have left this evidence?”

"Discovery is seeing what everybody else has seen, and thinking what nobody else has thought." -- Albert Szent-Györgyi (1893 - 1986)

Let’s imagine that we are detectives hired to investigate a crime. The objective of our investigation is to resolve the crime (as it typically is) by figuring out what happened, and how and why. In other words, we need to create an explanation of what happened to justify the repercussions. We recall from watching crime documentaries that a proper investigation commences with a combination of collecting evidence and conjecturing about what happened (to know when and where to look for more evidence). As we will discuss later, the order of these operations does not matter as long as our conclusion depends on an individually necessary and collectively sufficient set of evidence. We will inevitably introduce bias into our evaluation of the evidence since we view the world through a perceptual lens in a subjective scope. But, by keeping a grain of salt in our investigatory lens, we may account for psychological factors (motivations, ideals, cognitive bias, emotions, etc.) influencing which evidence we look for, notice, and pay attention to, and our affective experience of said evidence. The salt is a token to remind us that subconscious psychology is a layer of our perception. (Not to mention the neurochemistry from which our percepts emerge.)

The rest of our phenomenological experience comes from sense perception. We have sense organs with receptors tuned to receive specific input (and not other input) returning a complex neural impulse (coding for a specific mental state) which compels us to react in particular ways. We tend to forget all of this in the heat of the moment (including the physical limitations and fallible interpretation of sensory experience and of our understanding of human perception). It is reasonable to assume that photons have always behaved in the manner that we perceive and understand them now -- as a quantum of radiation in the visible light frequency spectrum. According to our cosmological theories (and for the sake of the argument), photons have almost always existed (actually existed with real historical impact, not just abstractly in the imagination of a human). However, the human instruments for biological detection and conception of photons are practically current events in the grand scheme of history. A common example of sensory tuning is the comparison between Homo sapiens sight and that of a mantis shrimp. Humans naturally perceive photons (light) in the visible spectrum (a very anthropocentric classification) through the retina and optic nerve. Meanwhile, mantis shrimp perceive most of the light in the visible spectrum and also light in the ultraviolet spectrum. Humans only discovered ultraviolet radiation in 1801 and may, at best, only imagine the experience of ‘seeing’ it, which makes it more difficult to conceive of its existence and effects. In many cases, we can do a bit better than “eyes-closed” imagination because we can create artificial ‘sense organs’ with instruments that imitate a particular functionality.
A prime example of a synthetic sense organ is a pair of infrared goggles that ‘sense’ infrared radiation waves (which we cannot see with the naked eye) and translate those input signals into an output pattern of pixels on a screen display emitting visible light (which we see with the naked eye). The respective tuning of sense organs (eyes in the optical case) to an environment is attributed to evolution by natural selection.

Most biologists accept the theory of evolution by natural selection applied to genetic information (DNA sequences, e.g. genes). Far fewer scientists are familiar with the deeper concept of The Evolution of Everything, namely knowledge replication, which describes the propensity to observe information most adapted to its niche. The adaptiveness of knowledge is equivalent to its persistence across possible worlds (and as a measure of the quantum multiverse…more on this later) which is discovered through interactive “trial and error”. The proliferation of knowledge representations is a unique feature of conscious, intelligent life and abides by the same laws of evolution (i.e. memes and other ideas … a topic for another post). Scientists routinely apply evolution to theories without even recognizing the evolutionary structure of their reasoning. It is important to note that evolution is spacetime-dependent and exhibits locality, which means knowledge replication depends on the specifics of its point in spacetime and only interacts with proximal points. Knowledge at one point in history may not be adapted to other points. As it turns out, existence is the highest virtue.

The Truthseeker who adopts realism and objectivity, both of which have proved to be useful for the purpose of science, is well on its way to discovering replicable knowledge. Despite this noble pursuit, the Truthseeker may become frustrated as it bumps up against the limits of its understanding imposed by its present mental faculties. Like the Truthseeker, we tend to find ourselves grasping at illusions as we stumble through our perceptual simulation of the world. We discern phenomena at various scales, but are mostly confined to a small spacetime interval. Our phenomenological experience is determined by the conscious and subconscious, visible and invisible, explicit and inexplicit, conceived and inconceived, expected and unexpected, understood and misunderstood. The function of our imagination, senses, memory, and perceptual tools was selected by nature and is optimized for replication, not Truth.

Let’s resume our crime investigation and say we find an eyewitness to the crime. Following forensic best practices, we scrupulously reconstruct the eyewitness’s perspective to discern the truths in the account. Conveniently, humans share the same sensory apparatus with one another, which means there will be little variation in our sense perception when compared to that of the witness. We can trust our eyewitness had similar sensations and shared common aspects of the phenomenological experience with us. We need a dash of cynicism to apprehend the idiosyncratic psychology and personal motives layered into the witness’s account at the interpretation level.

Since phenomenological experience depends on the observer, or rather on the observer’s perceptual tools, it follows that a historical account must contain subjective bias and requires faith in its resemblance to truth (and we are usually overconfident). For example, the discovery of fossils indicates the existence of extinct dinosaurs. And we can even imagine what they might have looked like! However, neither the fossils nor the present-day graphical renderings ultimately prove dinosaurs’ existence. The evidence, fossils in this case, simply exists and humans suggest plausible explanations for its existence. A different explanation for the existence of fossils could be that an egotistical scientist synthesized organic bone materials in a lab and subsequently buried the fossils to claim fame once she later “discovers” them and proposes a breakthrough theory of dinosaurs. However, there are sound reasons that discredit this explanation. For instance, there is no evidence of a scientist or lab in 1677 (when Robert Plot published the first known description of a dinosaur bone) that had the capability to synthesize an organic material that could resemble a fossil. Also, there was no way of digging to those depths of Earth’s crust undetected since the process of digging would have left its own evidence, which was never reported. The totality of conflicting evidence (and lack of corroborating evidence) makes the “mad scientist” explanation ‘problematic’. A better explanation is that large prehistoric animals roamed the Earth millions of years ago, and their remains were naturally buried and preserved. There will always be missing context, so we will never have a totally complete nor perfectly accurate description of history. But, not all context is necessary for a valid conclusion. This is the essence of Relevant History.

The most influential theories garner mass consensus (a flawed method for finding truth … though it does provide a clue). There is a well-known saying, “wisdom of the crowd”, accompanied by a lesser known but equally prescient saying, “fatality of the mob”. The objective of consensus is to win a majority, which requires compromise****, not truth-seeking. The danger of deploying consensus as a measure of truth is that it leverages groupthink. When everyone thinks that the other person has done the necessary due diligence on an idea, nobody ends up doing it. The error bars of a consensus value also scale according to the contentiousness of the minorities. Consensus provides a signal for the extrinsic sociology and psychology of the matter more so than the intrinsic epistemology.

Here’s another trope: “history is written by the winners”. This may not be strictly true in its literal translation but captures the inextricable bias of historical accounting. The history textbooks are missing many facts, particularly from the loser’s point of view. The publisher is not entirely at fault -- there are typically fewer of the losers around after major evolutionary equilibriums like war, and the historiographer’s best interest was to align with the winners and paint them in the best light. Regardless, the missing context makes the history book’s content sensitive to a combination of biases -- hindsight, confirmation, selection, conformity, misattribution, and survivorship to name a few.

In light of our parochial worldview, it is tempting to meander into Skepticism. Reasoning is the only escape.

The Primacy of Explanations

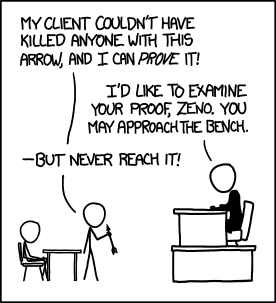

The catalyst of my philosophy career was the principle of sufficient reason (PSR) – the evident principle that a thing cannot occur without a cause which produces it. PSR resonated with me so deeply that it felt undeniable. However, a natural conclusion of PSR is Fatalism – the idea that what happens and what will happen was always going to happen and is the only happening that could have happened. This is disconcerting news for sentient beings who frequently wonder “how can I prevent undesirable happenings?”

I found a clue when I stumbled upon Laplace’s Demon, a being who sees all things at once. With its perfect knowledge of the world, chance ceases to exist. For the demon to emulate the view from everywhere, the world must be fully deterministic.

“Nothing would be uncertain and the future, as the past, would be present to its eyes.”

--Marquis Pierre Simon de Laplace

Ultimately, my fatalistic dismay was extinguished by David Deutsch’s The Beginning of Infinity*****. The main takeaway from the book is the answer to the question: What does it mean that the omniscient Demon knows everything? The answer: the Demon can correctly explain anything, and with this perfect understanding, can accurately describe and predict everything. Luckily, we share this first capability in common with the Demon because we possess ‘creativity’ -- an ability to create novel ideas (no matter that these “ideas” are determined and were always going to be created) -- and rational criticism -- an ability to spot errors in ideas. Deutsch also proposes that phenomenological emergence and computational universality jointly imply that humans do not need to know every fact that exists in order to know anything that could be known (though Gödel may have qualms with how we define knowledge, more on this later). More succinctly, you do not need to know everything to know anything. What a relief!

By marrying two concepts -- the ‘Human Brain as a Universal Computer’ and the ‘Infinite Demonic Wisdom’ -- we find that humans are universal explainers because our brains are constantly running a program that perceives and evaluates the universe. This marriage implies that anything which is explainable is explainable by us, and we improve our understanding with better explanations. That said, the universe never asked to be understood and was not necessarily designed for us to understand it intuitively. Humans have evolved to understand explanations and seek explanations to solve problems. Our scientific predictions are continuously getting more accurate and, more importantly, our understanding is more adapted, insinuating that we can make progress towards more objective knowledge. One need not look further than the trends in average human lifespan or GDP per capita or at modern medicine to be convinced that our collective understanding of the universe is far more resilient than its forebears.

Another big takeaway from the book is Deutsch’s principle of optimism: All problems are caused by insufficient knowledge. Let’s define “problem” as an undesirable state or as the obstacle to a more desirable state. Then, problems are inevitable because our knowledge will always contain errors, and there is always another question to answer. Yet, problems are soluble since everything that is not forbidden by the laws of nature is achievable, given the right knowledge. If something were not achievable, given flawless knowledge, then it would be a regularity in nature that could be explained in terms of the laws of nature.

Hence, there are only two possibilities: (1) either something is precluded by the laws of nature, or (2) it is achievable with knowledge. Deutsch refers to this as the momentous dichotomy. As we explore reality, inevitably encountering problems along the way, it becomes self-evident that both problems and solutions are limitless. Furthermore, a slight reframing of optimism shows that opportunities are ubiquitous. There is no guarantee that we will solve our problems, but it is possible that we can. Survival depends on creating the requisite knowledge at a higher rate than the onslaught of existential problems. The Demon has solved all problems.

PSR implies that there is an explanation for everything, so the quest for God-like omniscience is a hunt for good explanations. Unlike mere matters of fact, an explanation accounts for a meta-understanding of why the facts must be the case, revealing The Fabric of Reality. An explanation is simply a proposed reason for ‘being’ or ‘becoming’. PSR dictates that all claims are the consequence of an explanation. When an explanation is figuratively expressed as a statement, it describes a set of conditions that give rise to the observed world (phenomena that the explainer observes). The conditions describe the present, the future, or the past with historical significance for other possible worlds (implications of the future (prediction) or of the past (retrodiction)). An explanation is the answer to a ‘how-question’. It is this property that makes a claim explanatory vs. non-explanatory. A true explanation refers to a Real Pattern in the universe. Accordingly, explanatory knowledge transcends spacetime and may be applied repeatedly to familiar and unfamiliar situations as long as the explanatory conditions and patterns hold.

The building blocks of explanations are First Principles -- the most fundamental truths operating at a particular level of emergence. First Principles are the most critically acclaimed, battle-tested theories at our disposal. Scientific explanations (relativity, quantum, evolution, etc.) are continuously tested with crucial experiments to settle old problems and give birth to new ones. Science is an error-correcting process and its theories are engaged in an everlasting trial. If science were a judicial system, it would adopt the “innocent until proven guilty” policy. When a theory is “proven guilty”, scientists seek new explanations to remedy the mistakes of the problematic predecessor.

What distinguishes a scientific explanation from a non-scientific one? We can turn to Karl Popper’s demarcation criterion -- falsifiability. In Popper’s words, a scientific explanation must have “predictive power” -- the capacity to make testable predictions comparable to evidence (a.k.a. our most persuasive arguments). Predictive power is synonymous with “consequential”, “relevance”, “salience”, “influential inference”, so a scientific explanation makes testable assertions (predictions and/or retrodictions). When Popper refers to scientific falsifiability, he is referring to experimental refutation (in the empirical sense). Non-scientific explanations are not experimentally refutable. However, this does not disqualify non-scientific explanations from containing knowledge. Just because an explanation is indisputable (now) does not mean it cannot be true (now) nor disputed at some point (in time). We can use different modes of criticism to refute bad non-scientific explanations -- Does the explanation create more problems than it solves? Could its contents easily be varied or replaced and remain valid? If an explanation solves a problem, then it constitutes knowledge (even a non-scientific explanation). Failing to acknowledge this fact is the fallacy of scientism. Popper also devised a hygienic strategy for maintaining the falsifiability criterion: when positing a theory one should also answer the question “under what conditions would I admit my theory is untenable” (Unended Quest pg. 42). In other words, which conceivable facts would I accept as refutations of my theory? (As an aside, many investors find this to be a clever schema when buying/selling a financial security: “assuming constant information, at which price should I reverse my trade?” I will have more to say on falsifiability in future posts).

Popper’s criterion says nothing about whether an explanation is meaningful or not. It only demarcates science from non-science. Any explanation that represents a conceivable, graspable reality is meaningful. This is not a claim that every meaningful explanation correctly refers to reality in the sense that its abstract representation corresponds to reality (more on correspondence later).

So what do we do when faced with two (or more) theories that are observationally equivalent? If all of their empirically testable predictions are identical, then empirical evidence alone cannot be used to distinguish which is closer to being correct. (Indeed, it may be that the distinct theories are actually two different perspectives on, or representations of, a single underlying theory.) In cases of competing explanations and in the absence of any relevant evidence, it is logical to apply Laplace’s principle of indifference (also called principle of insufficient reason, “PIR”) which says every competing explanation is equally possible (should hold the same credence, although credence is a proxy for ignorance). If there is no reason to prefer one theory to another, then a commitment to one is definitively arbitrary. Each competing explanation should stand on equal ground until observational evidence (a.k.a. our most agreeable theories) or superior arguments reveal a preference for one.
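The principle of indifference and observational equivalence can be sketched with Bayes' theorem, P(explanation | observation) = P(observation | explanation) × P(explanation) / P(observation). The Python snippet below is only a toy illustration: the explanation names "A", "B", "C" and every probability are invented assumptions, and the `posterior` helper is hypothetical. Each rival starts with equal credence, the observation eliminates one rival, and the two that predicted the observation equally well remain tied.

```python
from fractions import Fraction


def posterior(priors, likelihoods):
    """Bayes' theorem over a finite set of rival explanations.

    priors:      {explanation: P(explanation)}
    likelihoods: {explanation: P(observation | explanation)}
    returns:     {explanation: P(explanation | observation)}
    """
    # P(observation) = sum over explanations of P(observation | h) * P(h)
    evidence = sum(priors[h] * likelihoods[h] for h in priors)
    return {h: priors[h] * likelihoods[h] / evidence for h in priors}


# Hypothetical rivals with indifferent (equal) priors.
priors = {"A": Fraction(1, 3), "B": Fraction(1, 3), "C": Fraction(1, 3)}

# Hypothetical likelihoods: the observation is impossible under C,
# while A and B predict it equally well.
likelihoods = {"A": Fraction(1, 2), "B": Fraction(1, 2), "C": Fraction(0)}

post = posterior(priors, likelihoods)

# Explanations that survive the observation with non-zero credence.
survivors = [h for h, p in post.items() if p > 0]

# More than one survivor means the evidence alone cannot pick a winner.
underdetermined = len(survivors) > 1
```

In this sketch the evidence refutes C but cannot separate A from B; any preference between the two has to come from new observations or better arguments.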

PIR leads to the underdetermination of theory by data, which says that the evidence available to us at a given time may be insufficient to determine what beliefs we should hold in response to it (i.e. the mosaic of explanations contains more unknown variables than equations to solve for them). To show that a conclusion is underdetermined, one must show that there is a rival conclusion that is equally well corroborated by the evidence. Let’s apply Bayes’ theorem to elucidate the concept of underdetermination:

E(possible explanations | observation)

"Given this observation, the set of possible explanations to expect." Underdetermination occurs when the set contains more than one explanation.

Eventually, even if our criticism fails to eliminate bad ideas, the relentless force of evolution eradicates maladapted knowledge when an unforeseen, critical contradiction with reality causes its extinction. Lucky for us, we can use rational criticism to vigilantly anticipate problems and refine maladapted knowledge before it wipes us out. A “crucial experiment” is a test that is capable of determining whether or not a particular theory is superior to all its competitors (at least those that are presently conceived). Devising such an experiment is itself an act of creativity because the test must discern the historical implications (predictions or retrodictions) that differ amongst the competing explanations:

E(possible observations | explanation)

"Given this explanation, the set of possible observations to expect."

A crucial experiment tests the exclusive disjunction of rival theories' possible observations. If the exclusive disjunction is a null set, then all explanations are contained by a single explanation.
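As a rough sketch, the exclusive disjunction can be modeled as the symmetric difference of two sets of permitted observations. Everything in the Python snippet below is an assumption made for illustration: the theory names "T1" and "T2", the observation strings, and both helper functions are hypothetical, and real theories do not come as finite lists of outcomes.

```python
def distinguishing_observations(predictions_a, predictions_b):
    """Observations permitted by exactly one of two rival theories."""
    return predictions_a ^ predictions_b  # symmetric difference of two sets


def surviving_theories(observation, theories):
    """Names of the theories that permit the observed outcome."""
    return sorted(name for name, permitted in theories.items()
                  if observation in permitted)


# Hypothetical toy theories, each reduced to the set of outcomes it permits.
theories = {
    "T1": {"apple falls", "starlight passes the sun undeflected"},
    "T2": {"apple falls", "starlight bends near the sun"},
}

# Only these outcomes are worth testing; both theories permit "apple falls",
# so observing it cannot discriminate between them.
targets = distinguishing_observations(theories["T1"], theories["T2"])

# Suppose the experiment yields the outcome that only T2 permits.
survivors = surviving_theories("starlight bends near the sun", theories)

# Observationally equivalent theories leave nothing to test.
equivalent = distinguishing_observations(theories["T1"], set(theories["T1"]))
```

Here `targets` holds the two starlight outcomes, `survivors` is `["T2"]`, and `equivalent` is the empty set, which corresponds to the null-set case described above.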

Experimentation is a theory about how to discriminate between theories. It is one of several modes of criticism. Other modes directly attack explanatory weaknesses, including: refutability, self-consistency, variability, conciseness, cohesiveness, complexity, leaving less unexplained, generality (addresses more domains), completeness (solves more problems/decides more propositions), etc. Therefore, experimentation is appropriate only once an explanation survives those criticisms, so many explanations are refuted without the need for a rigorous experiment. In a physical experiment, the scientist controls the experimental conditions to match those inherent in the unresolved problem and then observes which theory’s prediction matches (or not) with the results. And experiments don’t need to be empirical. Running a simple thought experiment can settle many arguments. By thinking deeply on an idea, an expert is likely to have considered more plausibilities and observed more relevant thoughts than their peers, so it is reasonable to trust their epistemic positions, not because an infallible authority has been bestowed upon them, but rather because they have had more chances to refute an idea. (Again, they may have extrinsic reasons (political motives, etc.) for not publishing their rebuttals.) Bad ideas are usually less adapted than good ones, but the bad idea may have extrinsic properties, like virality or shock value, making it more adapted than can be explained purely by its intrinsic properties.

The purpose of observation is to testify against a theory, and it is only reasonable to abandon theories that make false predictions. Of course, experimental evidence alone cannot logically refute a theory without a theory telling us what the observed evidence is. Observations are theory-laden, and theories are error-laden. Without a next best theory to replace or amend a problematic one, it is only rational to assume that a conflict between theory and experiment is the result of experimental error or faulty measurement.

“What I cannot create, I do not understand”

-- Richard Feynman

Oftentimes, the objective of an explanation is to accurately describe reality so that we may control it to our liking. However, accuracy is not always the highest priority, as demonstrated by disinformation campaigns. Since explanations are meant to solve problems, and our problems are subjective, the absolute accuracy of an explanation may be secondary to another purpose. This underscores the importance of considering information sources and seeking ‘good’ explanations. Stop to ask “why am I seeing this content instead of another? How did I receive this information?” A good explanation, like a good lie or conjuring trick, is crafted to persuade the receiver of some reality. The only difference in this case is the intention:

scientist’s goal = understanding. magician’s goal = deceit.

In either case, the burden of proof lies with the receiver. The path to the Truth is deciphered with explanations. An explanation could be true if it coheres with other true assertions and prevails against attempts to disprove it. If a contradiction arises, then we may logically ascertain that at least one of the claims is strictly false. In this way, an explanation is our best guess, and even good explanations are at risk of being wrong. This is how we create better, more powerful knowledge.

A good explanation is refutable, convincing, and precisely answers “What?”, “Why?”, and “How?”. It is our best description for how something works, why something happened, or why something will happen, at any given time. In a good explanation, each constituent element plays a functional role (individually necessary and collectively sufficient) in explaining that which it purports to explain, making it succinct and hard to vary. A good explanation is hard to vary in the sense that varying its contents, say to accommodate recalcitrant data, alters its historical implications. The invariability principle is important for epistemic value, yet counterintuitive. For example, let’s say there is an original explanation named “Joe”. If alterations of Joe yield the same set of possible worlds as Joe, the world could be described by any one of Joe’s variations. If Joe is corroborated, then we could still reside in any of many possible worlds, namely the worlds in which Joe’s variants are also corroborated, so we gain little information about our specific world. Counterintuitively, the harder it is to vary Joe without altering its history, the narrower the set of possible worlds permitted by Joe, so it is less likely that Joe correctly describes our world. Yet, if Joe is true, then there are fewer possible worlds in which we can find ourselves. The hard to vary criterion is related to the conjunction fallacy, which arises because an explanation containing many conjoined details can never be more likely to be true than a less-detailed but otherwise identical version of the explanation. The more-detailed explanation might appear more plausible, thus more likely to be true, but it makes more falsifiable claims and is more likely to be false (see the Linda Problem). In other words, the details allow the explanation to decide more propositions and generalize over more observations, so it has more chances to be wrong.
As Karl Popper says, “good explanations make narrow, risky predictions”, appealing to a specific line of reasoning. They dig deep to find the roots at a level of emergence. Try the 5 Whys method to increase the reach of an explanation.
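The link between conjoined details and possible worlds can be made concrete with a toy model. This is only a minimal sketch under my own assumptions: the three propositions "a", "b", and "c" are hypothetical placeholders, a "possible world" is just a true/false assignment to each of them, and an explanation is the set of propositions it requires to be true.

```python
from itertools import product

# Hypothetical atomic propositions (an assumption for illustration only).
PROPOSITIONS = ("a", "b", "c")


def worlds_permitted(required):
    """Return every possible world in which all required propositions hold."""
    worlds = [
        dict(zip(PROPOSITIONS, values))
        for values in product([True, False], repeat=len(PROPOSITIONS))
    ]
    return [w for w in worlds if all(w[p] for p in required)]


def permitted_counts():
    """Count permitted worlds as an explanation conjoins more details."""
    return [
        len(worlds_permitted(PROPOSITIONS[:k]))
        for k in range(len(PROPOSITIONS) + 1)
    ]


# Each added detail halves the set of worlds the explanation permits.
print(permitted_counts())  # [8, 4, 2, 1]
```

Every detail added to the explanation rules out worlds, so the detailed version can never cover more worlds than the sparse one. That is why it is both riskier and, if it survives criticism, more informative.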

“Science is a way of thinking much more than it is a body of knowledge.”

— Carl Sagan

We also get epistemically lucky when our best explanations contain adapted knowledge but not for the exact reasons that we thought (see Gettier Cases). The actual knowledge (truly understood or not) interacts with other actual knowledge regardless of its status (or non-status) in the awareness of consciousness. For example, the Mimic Octopus, known for its camouflaging color changes, does not need to understand how or why it changes color, or have a concept of color, or be aware that it changes color, or even be conscious of anything in order to benefit from its color changes. The octopus, if it can think, may believe that it is surviving its shark encounters by closing its eyes and thinking happy thoughts that propagate repellants. An external observer (with visible light vision) would argue that it is the octopus’s changing colors that make it undetectable to voracious predators. The color changes could be purposeful (perhaps intentionally induced with happy thoughts), or they could happen automatically without an inkling of agency from the octopus. It does not matter in the eyes of evolution. The replication of knowledge hinges on satisfying an objective problem, even if that problem is subjectively hidden, not in our awareness, or so misunderstood that it may as well be obstructed from view. This is how inexplicit knowledge contributes to successful replication without recognition.

The value of explanatory knowledge is its reach, the extent to which an explanation is relevant to account for the information available******. A thorough, yet incomplete explanation might leave little to chance but is only locally applicable. A fully complete explanation is certain and essential, leaving nothing to chance. In addition to being complete, the perfect explanation is also universal, absolute, and error-free, thus globally accurate. The jury is still out on whether a perfect explanation exists (Gödel says “no” but was also more concerned about math than epistemology). The perfect explanation would resemble an ultimate explanation, a Theory of Everything. From time immemorial, suitors have chased after this theory, and the annals of physics are replete with their candidate offerings, such as Michio Kaku's The God Equation (which I have not read yet). None have entirely succeeded. Most attempts to formulate a Theory of Everything look more like a Theory of Anything. These faux theories are actually a Theory of Nothing because they neither exclude anything nor make testable predictions (falsifiable implications). A theory that does not rule out anything is worthless since it can neither solve problems nor yield power. For example, “supernatural magic” could explain anything, so absolutely anything is possible in such a regime. Therefore, we cannot deduce meaningful consequences from its truth (other than perhaps the warm and fuzzy feeling of epistemic security…which at times is useful enough). This is the hallmark of a ‘bad’ explanation. This type of belief system is not likely to endure.

Let’s start with a Theory of Something…

Unobserved Worlds -- The missing observations

If the universe were a painting, phenomenological experience would be the paint and language the canvas. One can understand that which can be expressed and express that which can be understood. Imagine that a mantis shrimp had human consciousness, cognition, language, and a means of communicating with a human … it would utterly struggle to describe its experience of ultraviolet light to a human. It would be equally impossible for a human to explain to an actual mantis shrimp (sans human consciousness, cognition, language, and a means of communicating with a human) how its ultraviolet vision works. Explanation requires imagination because it incorporates the Unseen:

(1.) “Modalities” -- (im)possibilities, contingencies, necessities, counterfactuals, and hypotheticals

(2.) “Abstractions” -- abstract representations, also known as platonic objects (ideals, forms, logical structures and propositions, and concepts like numbers, objects, properties, categories, and relations)

(3.) “Emergent Structures” -- simplicity that implies something about the properties of complexity, and vice versa, often the result of scale independence (such as the economic laws governing financial markets or chemical reactions in molecular biology)

(4.) “Imperceptibles” -- things not directly apparent to our senses or scientific instruments but whose existence is conceivable

We access imperceptible phenomena by imagining them and how they interact with other things. Then, we measure their physical effects with finely-tuned instruments. For example, we can measure the weight of a backpack with a scale. The measurement is a function of gravity and the massive books inside the bag. But we do not observe the gravitational field, just its effects, which is how we know it exists. We measure temperature with a digital thermometer. We observe a digital display panel. We do not observe the motion of electrons driving the circuit in the thermometer’s digital display. The empirical data in these cases are represented with numbers. The physical things being measured and the circuitry of the panel determine which numbers are displayed, i.e. the measurements that we see are caused by things that we do not see. This is how we discovered the germ theory of disease, amongst many other breakthroughs.

Explanations are measured by their explanatory power -- the capacity of their content (descriptions of necessary and sufficient conditions) to replicate the phenomena they purport to explain. As shown in the bike example at the beginning of this post, an explanation does not need to be entirely complete to be relevant or valuable. Humans inherently recognize patterns in our experience and make inferences on the fly. Some of these inferences require intense deliberation. Others emerge spontaneously from subconscious processes. In fact, the type of explicit knowledge to which I have been referring (namely explanatory knowledge expressed as linguistic statements) is totally unnecessary for life (though explicit abstractions are the breakthrough that led to the massive success of language). We do not need an explicit explanation of our reproductive system to have sex or of our digestive system to eat food to survive. The inexplicit knowledge of ‘sex drive’ and ‘hunger’ is genotypically coded in our DNA and phenotypically expressed as instincts to guardrail our thinking towards evolutionarily adapted actions and decisions. The genes that anticipate problems with the most adaptive response are passed to subsequent generations. These inexplicit ideas are still conscious, experienced as feelings, involving a judgement of unrecognized origin.

Of course, our feelings can also be misleading and contribute to our downfall if improperly examined. We also have ideas, such as an affinity for the color blue, operating furtively in our subconscious. The explanatory power of subconscious knowledge (sometimes called tacit knowledge or intuition) exists in the background, unbeknownst to the observer, until it is consciously observed. Subconscious ideas are, by definition, not present in our attention as they are interacting in the world. These ideas consciously show up (as a sensation or percept or thought) once we reflect on the experience and try to explain the phenomena after the fact. Only when the idea is consciously observed do we have the chance to rationalize with it and turn it into explanatory knowledge. We might never consciously observe (or come to understand) the exact knowledge that keeps us alive.

Truth -- True or False?

In order to 'know' something, we need to 'know' what it means to ‘know’. Humanity’s initial guess for how knowledge works is a theory known as “justified true belief” (JTB), originally attributed to Plato. JTB says that knowledge is about justifying your “beliefs” as true. It then goes on to say that if you sufficiently justify a belief, then your belief is true. Most damningly, JTB fails to qualify ‘sufficient justification’ and suffers from the problem of induction -- no amount of supporting examples can overcome a single counterexample, and there’s no way to rule out a counterexample within JTB. What is the threshold for sufficient justification? (Spoiler: it doesn’t exist.) This central misconception of JTB plagued progress for centuries, up until the dissolution of the Vienna Circle.

In 1931, Gödel published his profound incompleteness theorems. The theorems tell us that any consistent formal system expressive enough to describe arithmetic contains propositions that it can neither prove nor disprove. There will always be truths that a truth system cannot prove logically, including the consistency of the truth system itself. Therefore, it is impossible to prove whether any belief is ultimately true because proof depends on the system from which it is derived, which leaves nothing to prove the completeness or consistency of the system itself (see epistemic regress). Since Truth (with a capital ‘T’) exists independently of any such proof system, induction within the system cannot logically prove a Truth. One can put forth evidence to home in on truth, but this is not Proof (as discussed earlier).

The first step to finding truth is to develop a theory of knowledge (epistemology). The method of knowledge-finding to which I subscribe (and have been describing in this blog post) is Popperian critical rationalism (CR). This theory says that if a statement cannot be logically deduced (from what is known), it might nevertheless be possible to logically falsify it. CR asserts that knowledge is fallible, which is another way of saying situational or contextual, so what we come to know should not be determined by what we already know. CR is like the scientific method on steroids because it utilizes imagination to extend the criterion of falsifiability beyond the archives of empirical evidence and deductive reasoning. CR includes the Unseen (such as new conjectures, missing assumptions, or counterfactuals) as a means to refute knowledge and thereby deliver future knowledge from any dependence on current knowledge.

Therefore, a critical rationalist (provisionally) accepts the truth of knowledge contingent on a set of assumptions (axioms or premises) which may eventually be refuted (always tentatively) by superior knowledge. The beauty of CR is that it leaves the door open for its own destruction and correction. Although CR may be the best explanation of knowledge today, it is overwhelmingly likely that CR misrepresents knowledge and/or the means of acquiring it. When CR is refuted, a critical rationalist would willingly adopt the succeeding, better explanation of epistemology. (Even though it is likely false, CR has been a fun way to view the world for me.)

Even our cherished “laws of nature” are ideas about the world that emerge from phenomena that can be understood more fundamentally. Knowledge that withstands criticism is likely to be considered useful and persist; yet, we can never be absolutely certain that a piece of knowledge is a Truth. There is always a chance that we discover new information at odds with our theory. Therein lies the tension of truth. The only rational thing to do is to take our best explanations seriously while also knowing that they may be wrong. Karl Popper describes this friction as the Growth of Scientific Knowledge. New information can destroy deeply entrenched, foundational knowledge. When the rest of the knowledge edifice topples with it, we suffer cognitive dissonance. Instead of trying to forcefully harmonize our prior knowledge with new information, a critical rationalist plainly declares the old knowledge to be problematic and creates better knowledge. Truth is boolean -- it is either right or wrong, not probably right or probably wrong. One can cling to an ostensible truth while speculating it is not totally (or even remotely) correct. This is okay because we should not be believing theories; rather, we should just tentatively accept and critique the best one(s). We can use a framework called “Strong Opinions, Weakly Held,” developed by technology forecaster and Stanford University professor Paul Saffo, to enforce rational criticism.

“For superforecasters, beliefs are hypotheses to be tested, not treasures to be guarded.” ― Philip Tetlock

At this point, we have discussed information as an obscure sort of ‘data’ to be taken as fact, such as our empirical observations. However, this conception is misleading because information is actually a concept used to communicate data. According to Claude Shannon’s information theory: information is the difference between the maximum entropy (maximum possibilities or configuration states) and the present entropy (remaining possibilities or configuration states) of a system. Therefore, the value of information is relative to the universe of relevant possibilities, and a state of information is a set of eliminated possibilities (aka impossibilities). The universe of logical possibilities is constrained by our theories of what is logically (im)possible, such as a violation of physics. Though, actual possibility is only constrained by the actual laws of nature, whatever they are. It is also important to distinguish the countable set of logical possibilities from the uncountable set of conceivable possibilities. The former is a subset of the latter, and the latter is uncountably infinite due to the unboundedness of imagination. Logical possibilities depend on a formal system, and the formal system is an abstraction of a physical state (namely a brain configuration), so even logical possibilities depend on the physics of a (conceivable) formal system. (…modern artificial intelligence techniques are good at pruning logical possibilities given a formal system (by the programmer), but lack the capability to conceive abstractions not represented in its training data … a topic for another post.)
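To make the "maximum entropy minus present entropy" reading concrete, here is a minimal sketch. It assumes, purely for illustration, that every remaining possibility is equally likely; the function names are my own and not part of any standard library.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def information_gained(total_states, remaining_states):
    """Information in bits: maximum entropy minus present entropy.

    Assumes a uniform distribution over the possibilities (an
    illustrative simplification, not a general requirement).
    """
    max_entropy = entropy_bits([1 / total_states] * total_states)
    present_entropy = entropy_bits([1 / remaining_states] * remaining_states)
    return max_entropy - present_entropy


# Eliminating 6 of 8 equally likely possibilities yields 2 bits of information.
print(information_gained(8, 2))
```

Under this reading, learning nothing (all 8 possibilities remain) yields 0 bits, and pinning the system down to a single state yields the full 3 bits. The information is exactly a measure of the possibilities eliminated.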

It follows that knowledge is a proposition with a truth value. Knowledge is a type of information that contributes to its replication by solving an objective problem in its environment. Though knowledge might be abstractly represented in the consciousness of a mind, it is always a physical state, in this case, a brain state. Knowledge exists in the context of other knowledge and holds its truth value relative to the other propositions of the knowledge base. A proposition holds true by remaining consistent (logically possible -- free of contradictions) with other true propositions or by outcompeting a tenet of the base, and vice versa for false propositions. Hence, a valid critique of knowledge must address the formal system (knowledge base and interpretation machine) that created it. We like classical logic structure because it seems to map well onto our experience. Information requires an interpretation machine because there must be a theory to discover data in the first place, and a theory of what it is, how to measure it, and what it tells us. Even our sensory data has built-in interpretations (a topic for another post).

A prominent theory in neuroscience is Karl Friston’s free energy principle, which says biological systems want to minimize uncertainty and maximize computational efficiency. In this theory, the human brain behaves like a computer running software that continuously learns by updating its predictive model of the universe based on experiential input. Intelligence is a software package that encodes an entropy-decreasing function to minimize the surprise of the model’s output. As such, “intelligence” and “surprise” are both terms that stand for computational metrics -- the iteration rate on a possibility/proposal/problem space in pursuit of a satisfactory solution and the magnitude of an error, respectively. Learning is an evolutionary program that applies and stores knowledge to gain a progressive adaptation. Generally, intelligence describes how effective an entity is at learning (i.e. its capacity to improve its personal knowledge via error-correction). Intelligent beings search a proposition space by re-orienting their perspective to discover new possibilities, accumulating latent knowledge (learning) in the process, of which the objective bits are recycled in a new foundation. Because the set of conceivable possibilities includes falsities, an intelligent being can learn falsities and correct those falsities only with future learning.

At this point, it is worth reiterating the conjectural nature of knowledge as well as human fallibility. When a person is said to possess certain knowledge, one is saying that they have a specific idea in their mind. The idea corresponds to a brain configuration (a physical information state) that influences the body (another physical state) which impresses an affective experience (emotional or qualitative state). Thoughts induce feelings, and feelings induce thoughts, and our mood affects how we interpret data signals. The truth (or adaptiveness) of knowledge depends on its context (or niche), which contains unknowns (and surprises). When speaking in everyday conversation, the majority of context goes unstated, either as an implicit or inexplicit proposition. Also, the content of a statement can mean different things based on its context. These holes are left for the receiver to fill. Since truth is predicated on relevant context, and context is embedded in a perspective, it should come as no surprise when interpretative holes are filled differently than intended or when subjects come to see contrasting truths from one another.

A subjective truth depends on the observer’s perspective (your truths may not be my truths) and it is highly sensitive to new information. An objective truth is the unique interpretation of an unbiased observer, impervious to new information, holding a constant truth value from a given perspective. An absolute truth holds a truth value from every attainable perspective (Gödel's Completeness Theorem: if a formula is true in all models, then it is provable within the system). This spurious “view from nowhere” could show context-independent truth. The scientific community values this mark of invariance as the criterion for what is “real”. This view is rejected by fallibilists who recognize the incompleteness and context-dependence of all knowledge. Instead, fallibilists adopt the "view from everywhere" where a shift in perspective allows one to see different truths and learn by entertaining plausible viewpoints.

Experience is perspectival, so a difference in perspective yields a different experience. Perspective is determined by brain chemistry and circumstantial history, amongst many other factors. You feel as though your beliefs are how the world really is. Other people embedded in different perspectives and situations may feel like they are inhabiting a different world from you. Each of these subjective experiences is objective to an individual Self. The disagreement amongst Selves is not to be confused with obstinance. The world feels different to them - even if they are in fact embedded in the same reality. Ultimately, each human wields an interpretation engine trained on ephemeral signals from its biological hardware, originally inherited at birth and developed over time with genetic functions and phenomenological memory. The brain is manually conditioned by itself because the affective response to certain stimuli is entrenched in evolutionary sapience, and we learn which stimuli yield which responses (and even learned to manually adjust them with drugs). With a deeper understanding of medicine, humans can delve beyond ephemeral therapies. Humans have manipulated health with medicine for centuries, but the medical breakthroughs of modern history -- drug discovery, gene editing, cyborg prosthetics -- are transformational milestones in human history, not just for healthcare but also the proliferation of consciousness. These interventions promise to modify the human experience by engineering humans at the level of biological hardware.

So how does a Truth become knowledge or knowledge become Truth? Well, despite the effort of countless philosophers, it can’t. In the end, knowledge is a fallible hypothesis about what Truth is (and is not) that is discovered by iterating through possibilities. Humans seek highly objective knowledge because we get rewarded with an understanding that yields better predictions and control over our future experiences. Nassim Taleb describes a concept of non-observables in his Incerto (my favorite is The Black Swan), which focuses on the problem of induction in predictions. Taleb’s non-observables are events that have yet to be observed, or even conceptually recognized, which is a version of the “Modalities” aspect of the Unseen********. For example, every swan that I have observed has been white, so I assume that all swans are white. The logic of the statistical conclusion rests on priors and inductive inference, namely the uniformity of the universe of swans; so, if I were a pure Bayesian, I would place complete faith in the fact that the next swan I see will be white. I feel quite insecure about this prediction because I have not seen every swan in existence (or all the swans that will ever be). No matter how many white swans I see, I cannot confirm my supposition that “all swans are white” because I may eventually come across a single black swan, falsifying my prediction. I would much prefer to have an explanation for the uniformity of swans. The claim that “all swans are white” seems naked because it is missing explanatory content, say genetics, that precisely guarantees the condition in which a swan will be white (making black swans impossible under those conditions). On the contrary, it is abundantly clear from our best explanations in genetics that black swans are permitted (because of genetic or epigenetic mutations in DNA). Explanatory contents are the reasons, the abstractions deriving the observations.
The explanatory content of the statement “all swans are white” is the implicit proposition that there exists a universal law mandating the whiteness of swans. Who created that law??? None of your business.
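The asymmetry between confirming and refuting “all swans are white” can be sketched in a few lines. This is a toy illustration with made-up observations, not real data; the function and variable names are my own.

```python
def status_of_universal_claim(observations, predicate):
    """Check a universal claim against a finite list of observations.

    A single counterexample refutes the claim. Without one, the best
    available verdict is "unrefuted so far", never "proven".
    """
    for observation in observations:
        if not predicate(observation):
            return "refuted"
    return "unrefuted so far"


def is_white(swan):
    return swan == "white"


# Hypothetical observations: ten thousand white swans, then one black swan.
many_white_swans = ["white"] * 10_000

print(status_of_universal_claim(many_white_swans, is_white))
print(status_of_universal_claim(many_white_swans + ["black"], is_white))
```

Notice that the function has no branch that returns “proven”. However long the list of white swans grows, the strongest verdict the observations alone can support is that the claim has survived so far.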

There will always be non-observables, and by definition, we do not know the significance of those non-observables. The highly significant non-observables are the “Black Swans”. We don't know for sure what will affect us, kill us, or save us. It may not exist (to us) today. Because non-observables could undermine our best explanations of reality, we must acquiesce to our fallibility.

According to Plato, ideal propositional knowledge has always been available. It is just a matter of whether or not we come to grasp it. When a scientist contemplates a novel explanation, they bring an abstraction into existence that did not previously exist (or at least did not exist to ‘you’). It is quite liberating to realize that imagination enables your universal explainer program, so in theory, you can understand anything! Knowledge is not scarce, regardless of the possibility space*********. It is the product of experience, unbounded imagination, and intelligent criticism. Our problems are unpredictable, as are their solutions. If we already knew an infallible solution to a problem, then by definition, the problem goes away and (hopefully) leads us to better problems. Because of our unpredictable problem situation, knowledge is not guaranteed to progress towards anything in particular. It simply evolves. This is the essence of Joseph Schumpeter’s Creative Destruction. Furthermore, reality is the ultimate feedback for knowledge. An idea’s replicability, and its host’s survival, depends on its environmental adaptiveness more than its ultimate truth. According to Gödel’s incompleteness theorems, if the universe is infinite, there are infinite propositions that are undecidable (unprovable) under a set of assumptions. This means that deciding these truth propositions can only be accomplished by including a new, non-derivable assumption (the product of a creative act). Of course, the newly added assumption is itself undecidable, so there will always be new assumptions to make. Thus, Popperian epistemology is a Beginning of Infinity.

Keep in mind, Truth is the foundation of reality. It is what it is**********. It is pure objectivity. It is the ultimate arbiter. Frankly, reality does not care about you (or anything). It is unbiased. It has no preferences. Its laws are pre-ordained. Humans pass judgement on a world that does not need a judge. The peculiarities of reality are subject to human interpretation -- a translation into meaning. The most useful application of this revelation is to practice a posteriori acceptance (more in an Amor Fati Stoic sense and less in a Zen Buddhist sense) because the universe is not conspiring against you, and a deterministic world could not have been otherwise. On the other hand, humans are change agents capable of affecting their own lives. Our choices have consequences, so we should practice a priori self-determination by proactively intervening to solve our problems and reactively reflecting on our problem situation. Self-determination opposes the Buddhist practice of re-interpreting problematic situations as acceptable and denying desire entirely. Not all problems are of equal importance, and priorities are a form of knowledge (subjective perspective = wiring of interpretation machine … a topic for another post).

We may not have a choice in which cravings we have, but our appetite for satisfaction determines which experiences we prioritize. We are pre-disposed to have certain preferences, perhaps fully determined, by factors outside of our control. Yet, desires are nonetheless real to the “emergent Self”, and it makes a priori, rational choices amongst possible actions (i.e. desires are innately determined and The Self acts upon them). A decision is often misconstrued as an illusion of our minds’ ability to imagine multiple courses of action, mistakenly leading to the rejection of free will. The mistake in this argument is in regarding free will as a physical entity, rather than as emerging from physical entities. Free will is an emergent phenomenon, and a ‘decision’ is the sensation we feel when presented with choices. When explaining why someone walked to the grocery store instead of driving, we can use the abstraction of free will to generalize the physical conditions that determined the decision, namely the present neural configurations and relevant ecological history that impact things like memories.

Since we all have access to the same reality, the difference in our subjective experience of reality is determined by our differing points of view. Our rational perspective is built from the abstractions at our disposal. Abstractions can be physical (such as atoms, chemicals, neurons), or they can be musical (such as tone, pitch, tempo), or anything else, all existing at a level of emergence. If our best explanation invokes an abstraction, then it exists because the abstraction is necessary in making sense of the world. It exists until a better explanation comes along that may or may not include that abstraction in its contents. Sure, we are simply delaying the inevitable when we ponder decisions or deliberately sift through our options in a deterministic world. But practically speaking, creating good explanations takes time, and we can use good explanations to make better decisions.

For every effect, there is a cause***********. For every output, there is an input. You may notice the continuity of this universal model. But what happens when there is a discontinuity in the model? And what does a gap represent?

The Odds It Is Random

Randomness is the patternlessness of a non-PSR world. It is identical to the unpredictability that occurs with a lack of information about relevant reasons. The land of uncertainty is where we can rationally assign probabilities and use probability theory to model our ignorance (which turns out to be all the time) rather than make guarantees about future events. However, it is not useful (or rationally tenable) to assign a degree of credence to truth since probability is always a mere substitute for the lack of an explanatory theory. PSR says that reality is not intrinsically random or uncertain about itself; but our understanding of reality most definitely is. There is one theoretical exception to this rule -- quantum mechanics.

Quantum theory is an explanation of the “possible states” in which we find discrete units of energy, especially observed at infinitesimal scales (as spacetime approaches zero), aligning with the natural stochasticity (ontic randomness) in our observations. Many scientists posit an interpretation of quantum mechanical observations that attributes an ontic randomness to the universe, generally by invoking the “wave function” which behaves according to probability theory. In such theories, we can make predictions about quantum states (the properties of quantized particle distributions) that are as accurate as other interpretations. However, the instrumentalist “collapse” of the wave function is a “shut up and calculate” theory with a flawed metaphysics. It leaves many unanswered questions, such as what “the collapse” is and how and why it happens, so we have little use for these explanations beyond calculating predictions. Quantum randomness gets more interesting when considered in a geometric sequence of n states. To an observer in the reference frame (at t = 0), quantum predictions involve calculating a geometric series of n elements, where the common ratio is given by the wave function. As one can imagine, the information in each sequential future moment gets increasingly difficult to predict from the reference frame.

In classical physics, wherein a physical system is highly sensitive to the specifications of its initial conditions and dynamical laws, the system’s complexity explodes because approximation errors compound exponentially in subsequent moments. Using classical computation, an inkling of imprecision in the initial conditions quickly makes the system intractable. This led physicists to chaos theory. However, classical physics is now just an approximation scheme for physical reality since it was refuted by a crucial experiment and superseded by quantum physics. In quantum physics, wherein a physical system is sensitive to the other possible states of the system, the complexity scales according to the number of quantum interactions (quantum-entangled entities) in the system. Using quantum computation, the system’s tractability scales linearly with the number of quantum variables. The value of a quantum variable follows a distribution specified by Schrödinger’s wave function. Taken literally as a description of reality, quantum mechanical equations denote interactions in a multiverse, though not all quantum physicists share this conclusion*************.
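The classical half of this story, where a tiny imprecision in the initial conditions compounds until prediction fails, is easy to see in a toy system. The sketch below uses the logistic map, a standard textbook example of chaos that I am swapping in for illustration; the starting value, the perturbation size, and the number of steps are arbitrary assumptions.

```python
def logistic_orbit(x0, r=4.0, steps=50):
    """Iterate the logistic map x -> r * x * (1 - x) starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


def divergence(x0=0.2, eps=1e-10, steps=50):
    """Gap at each step between two orbits whose starting points differ by eps."""
    exact = logistic_orbit(x0, steps=steps)
    perturbed = logistic_orbit(x0 + eps, steps=steps)
    return [abs(a - b) for a, b in zip(exact, perturbed)]


gaps = divergence()
print(f"initial gap: {gaps[0]:.1e}")
print(f"largest gap within 50 steps: {max(gaps):.3f}")
```

The two orbits obey exactly the same deterministic rule, yet a difference in the tenth decimal place grows until the trajectories bear no resemblance to each other. The unpredictability here comes entirely from our imprecise knowledge of the starting point, not from any randomness in the rule.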

I suggest watching Vsauce's YouTube video: "What is Random" and Veritasium’s sister episode: "What is Not Random" to further explore the link between randomness and ignorance.

All other probability is subjective and epistemic in nature, which means it can be explained by ignorance. Of course, an event either happens or it does not, so any likelihood that you attribute to an event is a function of your parochial knowledge. When we say “I am uncertain”, we are referring to a feeling of doubt in the presence of uncertainty. We are admitting that we do not know a critical piece of information that would make the truth apparent, and its absence unveils a set of possibilities, some of which might be unfavorable, hence the unsettling feeling. We can mitigate uncertainty and increase the accuracy of our predictions by creating better explanations. Here are two ways to close information gaps and improve our predictions:

1.) exploratory method -- surmise a hypothesis from observational data.

2.) explanatory method -- imagine a world that could create the observational data.

These methods are most powerful when deployed in tandem.

Exploratory research is meant to stimulate our curiosity and generate interest in a problem. It is a form of empiricism that begins with blind data collection. Of course, the collection process is not really blind because we need a good explanation of what the data is and how to interpret it. Once this explanation is in hand, we may continue to make hypotheses about what the data implies and what to expect. Subsequently, exploratory researchers extrapolate statistical patterns in the data to help find, describe, and define problems worth solving. It is common practice to conjecture about which factors in the data are ‘independent’ and then infer their ‘dependencies’ (also known as causal inference). While causal inference may seem inductive in nature, it is actually hypothetico-deductive reasoning disguised as inductivism. The process always starts by hypothesizing a relationship between observations, in which the observations are mere deductive consequences. Typically, the researcher will put their hypothesis to the test by conducting an experiment in which they alter the conditions to gain information about the possible relations amongst factors (i.e. varying the independent variable while controlling for extraneous variables and observing changes in dependent variables). For example, you can vary the temperature of your thermostat (the independent variable) and measure its effect on sleep quality (the dependent variable) via an Oura Ring, or similar biomarker device.
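The thermostat example can be sketched as a simulated experiment. Everything here is an assumption made for illustration: the response function `toy_sleep_score`, its "ideal" temperature of 18, the noise level, and the number of nights are invented and say nothing about real sleep physiology. The point is only the shape of the procedure: set the independent variable deliberately, hold everything else fixed, and compare the dependent variable across settings.

```python
import random
from statistics import mean


def toy_sleep_score(temp_c, rng):
    """HYPOTHETICAL response: invented for illustration, not real physiology."""
    return 85.0 - 2.0 * abs(temp_c - 18.0) + rng.gauss(0.0, 3.0)


def run_experiment(temps=(16, 18, 20, 22, 24), nights=200, seed=1):
    """Set the thermostat (independent variable) and average the sleep score
    (dependent variable) over many nights at each setting. In this toy world
    nothing else varies, which stands in for controlling extraneous variables."""
    rng = random.Random(seed)
    return {t: mean(toy_sleep_score(t, rng) for _ in range(nights)) for t in temps}


results = run_experiment()
for temp, score in results.items():
    print(temp, round(score, 1))
```

Note that the simulation only "works" because I wrote the causal rule into it. With real data the rule is exactly what we do not know, which is why the hypothesis has to come first.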

Even with a meticulous experimental setup, we run into the Duhem-Quine Thesis, which says it is impossible to experimentally test a scientific hypothesis in isolation, because an empirical test of the hypothesis requires one or more background assumptions (also called auxiliary assumptions or auxiliary hypotheses). The set of associated assumptions supporting a theory is sometimes called a bundle of hypotheses (similar to what I have been calling a knowledge base). Although a bundle of hypotheses (i.e. a hypothesis and its background assumptions) can be empirically tested and falsified if it fails a critical test as a whole, the Duhem-Quine Thesis says it is impossible to isolate a single hypothesis in the bundle, a viewpoint called confirmation holism; therefore, unambiguous scientific falsifications are unattainable. The scientific community refers to this as the Duhem-Quine ‘Problem’, but this is another unfortunate misnomer because science is not about using experimentation to once-and-for-all justify or falsify a theory. Science is about identifying and solving problems, and that is exactly what happens when a crucial test contradicts a theory. Experiments discover conflicts between theoretical content and its reflection in reality. When an experiment refutes a theory, it does not prove the theory ultimately false for the same reasons we cannot prove that a theory is ultimately true. An experimental refutation can logically mean one of two things: (1.) the theory is indeed false, or (2.) the experiment is flawed in some way, such as in its design, false background assumptions, or linguistic misinterpretations (or faulty equipment that was presumed to be perfectly functional). The conflict can only be resolved with a better explanation, which may retain much of the knowledge from the problematic theory to explain everything it could, but must include alternative assumptions that also explain the conflicting experimental results.
Therefore, the consequences of experimental results are tentative. Corroborating evidence is a piece of information that eliminates all other competing explanations. The other possible explanations stood on equal footing with the prevailing explanation until the corroboration revealed terminal contradictions with each. Not a single empirical observation can positively confirm a theory. Yet, a single counterexample can indicate a flawed theory (more precisely, a flawed bundle of theories wherein at least one theory is strictly false). What about when we observe a new thought or idea that leads to a better theory? In this case, it is an observation that did the corroborating.

Here’s a useful hint for statistical models: when correlations between variables approach 100%, there is a chance of a causal relationship between the variables. Since correlations are caused by common determinants, we should always consider what is causing the correlations. Otherwise, an observed correlation could have been a chance occurrence. Also, there is always the chance that “hidden variables”************** are the true root cause of the observed correlation, in which case the model is reliable only by virtue of being associated with those hidden factors in some way. Without a complete account of the conditions, a correlation between conditional states is not guaranteed to persist because the correlation could be conditioned on an unnamed variable or impacted by an exogenous factor. CORRELATION ⇏ CAUSATION***************
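A small simulation shows how a hidden variable can manufacture a strong correlation between two things that never touch each other. This is a sketch under my own assumptions: the variable names `z`, `x`, `y`, the noise level, and the sample size are hypothetical, and the data is synthetic. Here `z` drives both `x` and `y`, while `x` and `y` have no direct link.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def residuals(vs, zs):
    """What is left of vs after subtracting its best linear fit on zs."""
    n = len(vs)
    mv, mz = sum(vs) / n, sum(zs) / n
    slope = (sum((v - mv) * (z - mz) for v, z in zip(vs, zs))
             / sum((z - mz) ** 2 for z in zs))
    return [(v - mv) - slope * (z - mz) for v, z in zip(vs, zs)]


def simulate(n=5000, seed=0):
    """Synthetic data: hidden z causes both x and y; x never influences y."""
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    x = [zi + rng.gauss(0.0, 0.3) for zi in z]
    y = [zi + rng.gauss(0.0, 0.3) for zi in z]
    return z, x, y


z, x, y = simulate()
raw = pearson(x, y)                                   # looks like a strong link
adjusted = pearson(residuals(x, z), residuals(y, z))  # link after accounting for z
print(f"raw correlation: {raw:.2f}, after accounting for z: {adjusted:.2f}")
```

Once the hidden variable is accounted for, the apparent relationship between `x` and `y` all but disappears. Of course, this only helps if you have an explanation that tells you `z` exists and should be measured in the first place.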

…. so … how do you know if the measured response in the ‘effect variable’ was caused by varying the ‘cause variable’ or if the independent factors are truly independent themselves?

Statistical theory, like all branches of mathematics, describes an idealized world of possibility. To be effectively applied in predictions, an explanatory theory, beyond statistical theory, is required to support why and how a correlation, extrapolation, or causal relationship will persist. Historical data are observations that we interpret into representations in order to connect them to the world. Otherwise, plain data could represent anything. How did the data come to be? Explanatory theories save the day by submitting a model of inputs, outputs, and transformations.

You might be asking “How are explanatory theories conjectured?” Well … the explanatory method begins by imagining an abstraction of the Seen in terms of the Unseen. We need the Unseen to comprehend the Seen. An (accurate) understanding of Seen phenomena is limited only by our ability to conceive of the (correct) Unseen phenomena and their relations.

We describe the relationship between Unseen entities using symbols. We obviously do not see symbols, like the variable “X” or the number “1212”, floating around doing things in the world. What we actually care about (and only occasionally see) is what “X” depicts in reality. For an explanation to be (subjectively) meaningful and communicable, the symbols must portray something real to its source and receiver. (Sometimes there is a mismatch between the intention and interpretation of an expression, and such misinterpretations can lead to substantial misunderstandings … a topic for another post.) Supposedly, the symbols written on the paper correspond to discernibles (aka observables) that make up our experience. Specifically, each symbol refers to reality as a representation of a real entity and, taken together as an explanation, the symbols explain how the entities interact. The explanation may implicate an observed or previously unobserved entity as the root cause of an effect. Nothing is impervious to reason and nothing in our experience is inexplicable, so surrendering to the supernatural is always an admission of ignorance. Such a depressing concession indicates a severe information gap or a lapse in imagination, or both. Although symbols on a piece of paper can effectively convey an explanation, the representations do not need to be instantiated as printed symbols. (An abstraction could also be instantiated as Braille characters or rendered as a VR environment. Some abstractions are easier to implement than others and make subtle distinctions in their representation, eliciting different intuitions about that which they represent. The need for speed and intuition helps decide which abstractions to use.)

Conclusion

By now, the need for epistemic humility is obvious. The least we can do is acknowledge our tendency to over-infer causality between unrelated things (or related through layers of dependent correlations). Explanatory knowledge drives epistemic progress, and the fuel is creativity. We leverage imagination as a tunnel into possible and impossible worlds, abstractly expanding our search space. We learn from trial-and-error. We can seek better explanations to make better predictions. After all, criticizing our best explanations is the best way to hunt for Truth. Since First Principles and Laws of Nature could turn out to be chimeras, we can lean on Zeroth-Principles Thinking to acquire deeper explanations of the world. On the other hand, we are fallible idea creators and will always have to make decisions with imperfect knowledge, incomplete information, and even systematic bias. If an explanation withstands criticism, then it is plausibly the Truth, but never certainly the case. Certain knowledge endures for reasons that we can never know with certainty. We are perpetually exposed to uncertainty … so get used to it!

I glossed over the fact that “meaning” is an important part of the brain’s interpretation machine. Sooo … what is meaning? How and why does it exist? Is meaning relative or intrinsic, subjective or objective, or all of the aforementioned? What do determinism and free will have to say about meaning? If we assume that life is meaningful, then what is the meaning? How is meaning related to purpose? My best answer right now: the meaning of life is to create objective knowledge. More on that in the next post…

*I enjoyed Tom Clark’s philosophical article on the birth/death of consciousness. Undoubtedly, we can improve our understanding of consciousness with better explanations of how/why it emerges.

**Metaphysics is the ontology of existence, i.e. the study of what kinds of things exist, and how and why. I subscribe to a criterion of existence which says that to exist is to interact with something (similar phrases for “interact with” are “be relative to” or “be observed by”). The relation is the raison d'être. I assumed ownership of consciousness for the analogy’s sake; rather, consciousness spontaneously “exists” as a mind-dependent subject without a precise initial moment. Then, consciousness is a continuous awareness of “Leibnizian discernibles” (discretized things like internal mental states, sensations, phenomenological experiences, thoughts, memories, emotions, qualia, etc.), and the Self is “the observer” of consciousness (an identity of consciousness relative to Non-self). I enjoyed Brett Hall’s metaphysical view of consciousness. The mind requires a definition for the concept of “you” (a whole exercise itself). I use a version of “you” that represents an identity of consciousness that is not myself and which is independent of experience, so “you” are not “your qualia” or “your thoughts”. I also enjoyed Evan Conrad’s article on Cognitive Behavior Therapy (CBT) which suggests many practical applications of the “independent self” view. The Self is an observer of qualia (including sensations, thoughts, and feelings) and any sort of experience appears real to you, rather than being you. Your mind (“You”) observes (interacts with) a thought as the product of your brain (a physical machine that performs physical thinking procedures and serves the next thought). We have agency over thinking in the sense that we may choose to pay attention to a thought or sensation by being aware of its relation to “you” (an interpretation machine) and to the non-self (the rest of reality, though our body and brain matter belongs to this same reality).
People who extend the “mind as a computer” analogy argue that an “interpretation machine” is just a universal computer and an “interpretation” is actually a computation. Therefore, a mental state (qualia) corresponds to a specific state on a universal computer. Theoretically, a universal computer (quantum or otherwise) must be able to model the phenomena of a mind (see Church-Turing-Deutsch Principle). The argument is as follows: The mind emerges from a brain. A brain is a physical system obeying quantum mechanics, so reproducing that physical system with the right combination of quantum logical circuitry and inputs could simulate a brain’s functions, thus the mind. The physical implementation also seems to be substrate independent (i.e. does not require biological matter), but it could turn out that our memory and/or computational process depends on a unique mechanism only possible with biological machinery. It is still unclear how consciousness emerges from a physical system, such as a quantum computer. Consciousness seems to be a sort of user interface (UI) between physical reality and abstractions (API?) that emerges (as a HUD?) and to which qualia appear. I draw the distinction between consciousness and computational universality because of the seeming incoherence of creative imagination and Turing Completeness. A counterargument against physically-independent consciousness is that creativity (and its apparent randomness) can be explained by quantum possibilities in a universal quantum computer. We are also different from modern computers in that we may be aware of ourselves (self-aware) as a computing entity within the system being computed. The fact that we are knowledge-creating entities aware of the fact that we are knowledge-creating entities is a dilemma of the human condition. Humans can understand the world better than anything else, as far as we know, which can be a blessing and a curse. This is not the same as being the most adapted.