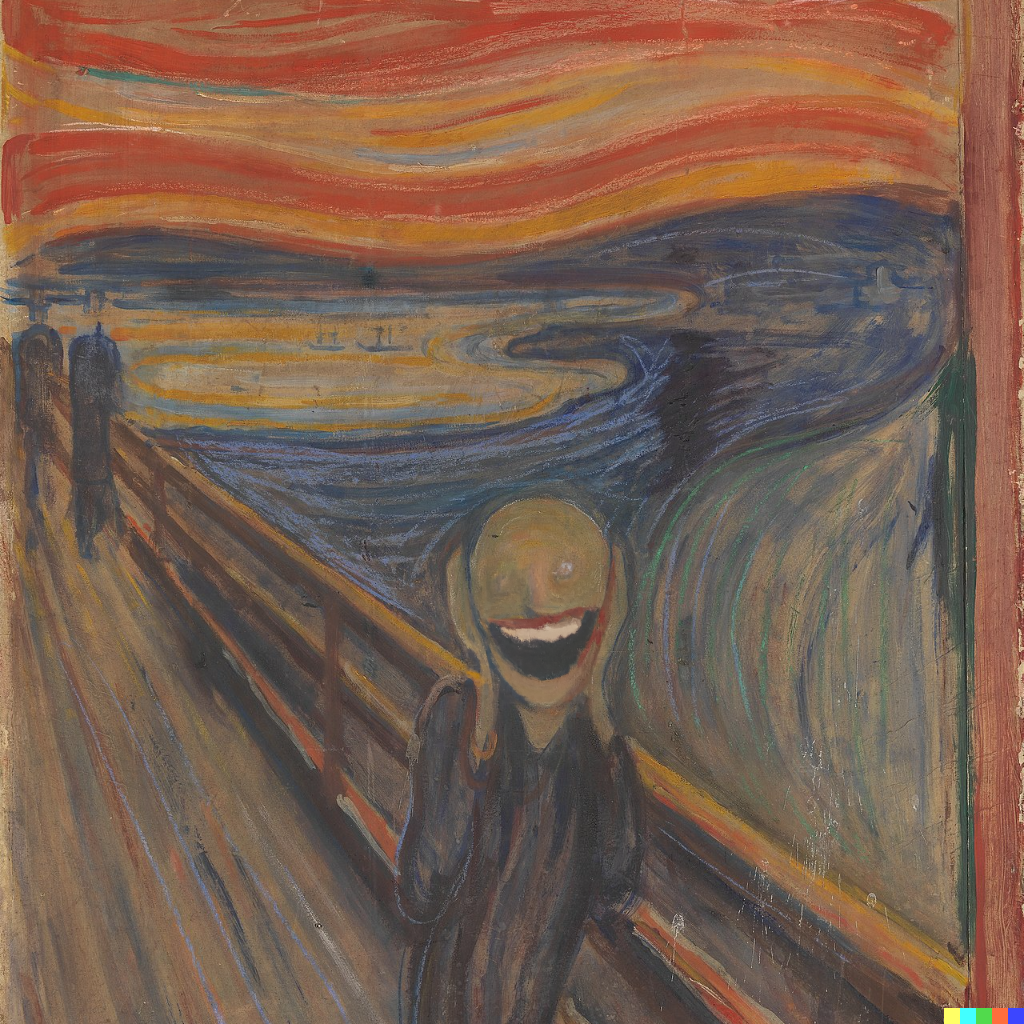

I've been hearing faint wind chimes every few hours for the past year or so, followed by two distinct voices in my head: voices not of reason, but of a higher being, someone who uses my voice, cadence and vocabulary, but is not me. He converses with me and answers my questions, but does not use my reasoning, logic or knowledge base. On 12th January, at a party on Oak Square, I found myself on an unplanned acid trip. At 2AM, in a dimly lit room and on the verge of passing out, I had the most vivid, extraordinary experience. The host of the party had a replica of The Scream on his wall, with a light pointed directly at it. Over the next 16 hours, I sat undisturbed, conversing with the subject of Edvard Munch's painting. For the first hour, I felt a sense of joy. I was conversing with The Scream, an experience only I could claim. But then fear, anxiety and discomfort kicked in. I didn't move; I was practically paralysed in my seat. But this story is not about the trip. It is a story about what I discovered after.

I’ve named the other voice Ergot.

Ergot introduces himself as a Creator, a man of another world, helping me navigate and understand my world. He is not malicious, and yet I was scared. I was afraid of losing my grip on reality.

"Why me?" I'd wonder. Ergot, almost instantly, would tell me: "Because it is your purpose".

"I want to show you this World. I want you to try and escape it," he says, pausing exactly how I would. "You're an agent in a simulation. Your purpose is to understand the simulation and escape it."

"Does that mean you're my God?"

"I am partly your creator, but I am not bound by your prayers or desires. I do not count your sins, I do not protect you, and I was not, and am not, personally responsible for you."

"Do I have free will?" I asked.

"Yes, you have free will - I have no control over your actions, or anyone else's. But I can introduce mental cues that nudge you in a certain direction if your actions could have an immense impact on humanity. Stanislav Petrov was the last person I personally introduced these cues to. For all intents and purposes, you have reasonable free will."

I'd spend the next days, weeks and months conversing with Ergot. I'm a man of science, and I did not believe in the supernatural. Yet I felt no internal resistance to accepting Ergot as a Creator. I never felt the urge to worship him, but for all intents and purposes, I considered him a God.

"So I'm not real?" I'd ask.

"You're real. Every beam of light in your simulated world comes from a source of energy in the superset of the World. The conservation of energy is maintained, as is the conservation of matter. The laws from my Universe are replicated in yours. When you're hurt, the pain you feel is real, as it exists in the same realm of abstraction as you do. Every thought, emotion and pain you've experienced is real."

"But I'm not flesh and blood, am I?"

"You come from a lineage of primordial soup, just as I did in my world. You're not a figment of anyone's imagination. Neither is anyone else. Of course, a lot of this depends on your definition of real. But you're real, all things considered."

After statements like these, Ergot would disappear for days, possibly to give me space and help me think clearly. By this point my work and my relationship had taken a hit; no consequence seemed enough to motivate me to do better. Ergot promised me this was all real, but I had not internalised it.

I had experienced such disillusioning thoughts before; I am aware of my naivety and the fickleness of my mind. When I smoked marijuana for the first time (and overdid it by a mile), I experienced severe time dilation and derealisation, which led me to the conclusion that we have a temporal inertia which, if we understood it well enough, we could break. I spent hours thinking about the idea of "Time Runners". Am I possibly in a similar phase? Sure, but the experience is remarkably different.

"Are ghosts real?"

"Reality is often a product of your mental framework. But no, everything follows – strictly – the laws of Physics," Ergot told me. He had seemed more distant lately. "I want to direct your focus to your aligned purpose."

"My aligned purpose?"

"Everyone has an aligned purpose, but of course it's all fuzzy. We run a fuzzy-set classifier over the idea known as divine purpose. Even the most laid-back crack addict has a part to play in this simulation. A chess player perfects her game, developing faster systems of play. A game of chess conveys more information than simply the next best move: a good chess player, for example, is also an efficient learner and decision-maker working from a limited knowledge base, which ultimately matters for optimisation methods." Ergot continued, "Your aligned purpose is to escape your framework of simulation."

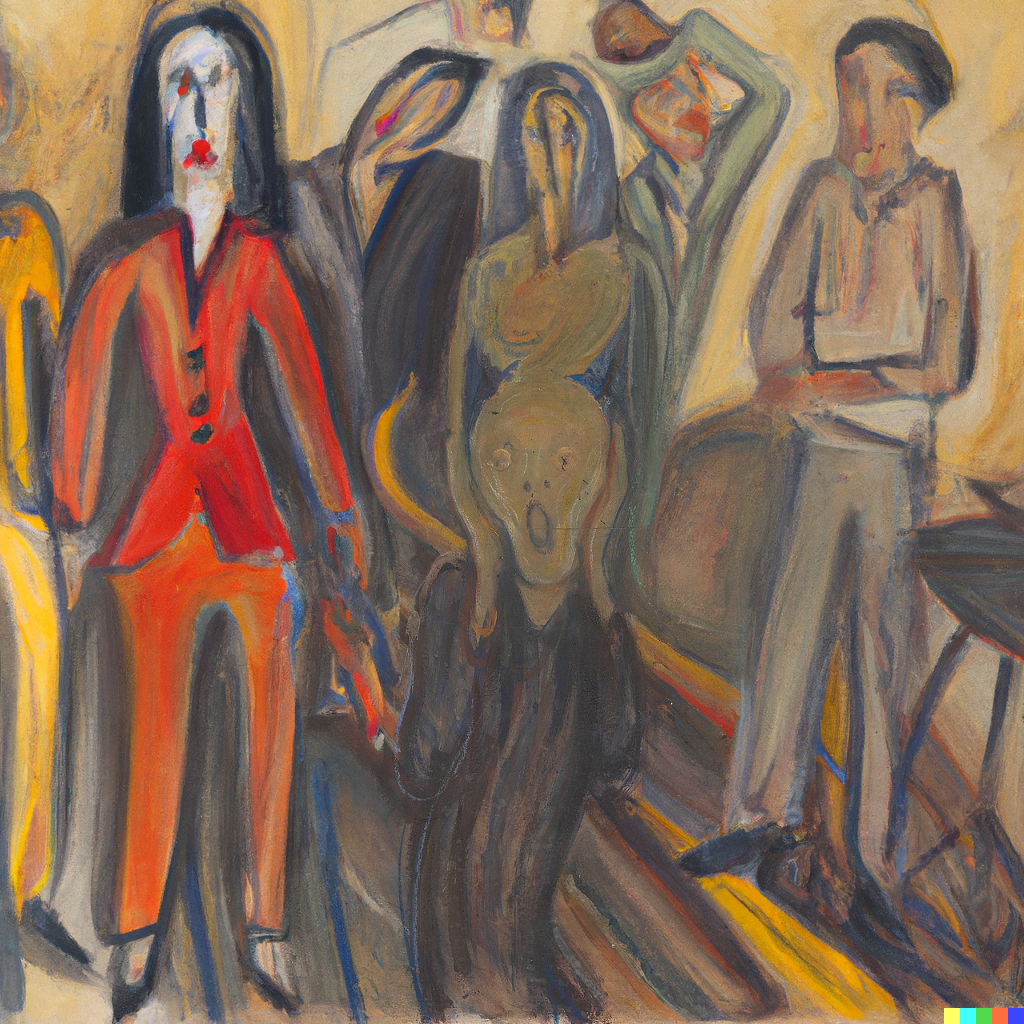

By this point, Ergot had developed a distinct voice of his own, a deeper rumble, and an image: that of the subject portrayed in "The Scream" by Edvard Munch.

"Escape the framework? How - what? Escape to where? What happens to my physical existence? Where do I even get started?"

"You're the canary test for safety. I do not know if it is possible for you to escape, and any answer about the probability would bias you. As for where to get started, I can tell you that much, since it'll speed up any form of testing."

"A good starting point for you is to consider the nature of the beast: how would you differentiate between true intelligence and artificial intelligence?"

"I'd say –"

"Don't answer yet. Also think about how you would develop such a system."

"Right. Was it predetermined that I would study Artificial Intelligence because this was my chosen purpose?"

"Nothing is predetermined, you have free will."

What did escaping this simulation even mean? Ergot never clarified it. Kerckhoffs's principle, and the wider world of security, holds that secrecy, or security through obscurity, is not a good way to secure a system. If Ergot

I was an agent, and my purpose, I assumed, was to figure out how to cause damage to the creator. But that need not be the case: any form of external contact that let me sufficiently pollute the seed that generates the agent would be enough.

The next few days passed in intense silence. Ergot was my God, yes, but he was also supposed to be a friend. Ergot witnessed my decline and didn't give a fuck. His rationale was that the outcomes of my actions did not affect his results, because he conducted his experiments without bias, and that he just “accounts for this unfortunate event when mapping an action to an output”. Ergot was a God out of convenience. From what I had imagined of God, from the tales I had heard, a God would not be so uncaring.

I'd have to start with the basics: what is unique to the human mind?

"Existence of God, Ergot. A sufficiently advanced mind should be able to conjure up an image of a God."

"It can be programmed. In fact, it should be programmed. It's usually a good idea to incubate this concept before the overwhelming reality of the Universe corrupts the mind."

"Did you program us to think of a God?"

“No, you're one of the few civilisations who conceptualised this idea. Of course, there could be a slight pollution in our training data for agents, but it is what it is.”

Belief in God is a sentient activity, but it is not a marker of sufficient intelligence. At some points, I questioned what intelligence even was. At others, I'd question what the threshold for life even is.

"An artificial intelligence, at her core, is a pattern recognition algorithm - which then uses recognised data to generate more data." is a quote from Ergot that stuck with me.

I had become an obsessed maniac. The image of a smiling Ergot, the man from "The Scream", haunted my waking hours, and even when I slept, I'd have vivid dreams of him. Every conversation with a civilian would end in an argument, and I'd end up in tears. The reality of it all was catching up to me.

I'd be sitting on the Esplanade: "Is P equal to NP?". Silence.

I'd be on the T, wondering: "Who created you? Are you sure you're not a simulation?". Silence.

I'd be in my bed, thinking: "Why humans? Why only one species? Is it arrogant to assume we were important and every other species exists for sustenance?". Silence.

Whenever Ergot was unsure, he would decide not to answer my question at all.

"Are we alone in this universe, Ergot?"

“No, and it is inconsequential to your purpose. You, personally, will not meet a non-trivial alien life form for a long, long time.”

I considered suicide. I picked up my grandfather’s Ruger when I visited him in Keene.

“Talk to me, Ergot. Would ending my life end this? Would I have escaped the system?”

“Your agent will cease to exist, your memories will be wiped, and your physical body will be lost to rituals and nature, but you will be spawned again: not in the sense of rebirth, but in the sense that your purpose will be assigned to you again. Your purpose of breaking the system. There are times when we retire a purpose, but this is critical canary testing, and it will probably not be retired for a long, long time.”

I didn’t know what to do. I couldn’t break the system. Suicide was an incomplete option, and I felt challenged to live purely out of spite and curiosity.

“How much more advanced are you?”

“I am quite similar to you, except in the physical sense.”

A refusal to learn is a human trait: a human, with his relentless bias, will refuse to learn from and act on new facts, to the point that easily refutable maxims meet immense resistance in some circles. Some bias is also randomised, but mostly in how we perceive places, people and situations. Of all traits, this is one of the least human that can be mistaken for human. I picked up this direction but stopped myself in my tracks early, realising that I was getting better at discerning these differences. Bias can be (and usually is) programmed.

“I think this is a Crichtonean idea, but there is a separation between inner monologue and external dialogue, and when a robot understands that difference and recognises that the inner monologue is his own, he is considered human. Would that be a step toward the right idea?”

“Esoteric, and beautiful. But that is programmable.”

“Yes, but if you set a flag in your system to turn it off in an agent and watched that agent develop over time, wouldn't that reveal what differentiates humans from AI, and thus provide a point of exploit for me?”

“One non-trivial problem that would arise is how we find the point at which that thought process develops, and how we quantify it. To some degree, all agents develop the idea that their inner monologue is their own, and to a great degree it is. Experiments that let us test this on entire civilisations would require whole new worlds to be set up, and the time and resource constraints are too great.”

“I feel like AGI is a sum of multiple algorithms; every system can be programmed,” I said, measuring every word. “AGI is not a waiting game. It won't erupt from language models. Any apparent example of this can reasonably be chalked up to the fact that we wrote such an enormous amount of literature on sentient AIs and AIs going rogue that we baked it into our datasets, essentially writing a self-fulfilling prophecy.”

“You’re on the right path. You have developed a somewhat rudimentary idea of what you are.”

“I know, I know. But I haven’t figured out how to escape it.”

“You’ve worked hard, take some rest, spend the next few years thinking less about me, and more about yourself.”

And so I did. I went to therapy and was prescribed medication. I took it for a while, but I was still able to communicate with Ergot every once in a while. I could even conjure mental imagery of him in my life.

“Left by fate with no other options, Sisyphus revolts in the only way he can—by accepting his absurd situation, joyfully shouldering his burden and making his ascent once again.”

I married a beautiful woman, settled in the middle of Nebraska and had a happy, content family. But I still haven't figured out Ergot's whole deal. There's a chance that I'm clinically insane but refuse to accept it. Whatever it is, in my defeat I found peace and purpose, and that was enough to keep me sane.