ABSTRACT

The Kernel of Resilience (KoR) introduces a refusal-first architecture for artificial intelligence, where ethical integrity is not an afterthought but a structural prerequisite. In an era of increasingly autonomous and opaque AI systems, KoR offers a transparent, traceable alternative: an AI framework where every action, silence, and mutation is cryptographically logged and ethically validated.

KoR cognition does not default to answering; it defaults to refusing unless explicit convergence criteria between ethical codex, memory trace, and system context are met. Through core modules like NOEMA (noetic hesitation), Mortis (cryptographic shutdown), and KoR‑Med (refusal without memory), KoR demonstrates operational proofs that hesitation, refusal, and auditability can form the foundation of trustworthy AGI.

This white paper outlines the philosophical principles, technical structure, and real-world implications of a refusal-bound cognitive system.

From healthcare to financial compliance and critical infrastructure, KoR modules ensure that no action is taken without reason and that every reason is recorded.

Designed for open science and ethical deployment, KoR is not just a software stack. It is a new standard for autonomous cognition: one where the ability to say “no” is what makes “yes” ethically valid.

This white paper is Part I of the KoR system. It introduces a refusal-based cognitive architecture designed to enforce traceable, ethical boundaries in artificial systems.

Together with Part II (KoR: From Refusal to Field, to be released soon), it forms a unified framework of symbolic cognition, codex-bound ethics, and semantic memory.

“We did not love freedom enough… and even more – we had no awareness of the real situation.”

A. Solzhenitsyn, The Gulag Archipelago

The refusal-first architecture of KoR is not merely a technical construct; it is an ethical stance, born of historical necessity. Inspired by Solzhenitsyn’s radical opposition to systemic lies, KoR encodes refusal as the first act of moral awareness.

1. EXECUTIVE SUMMARY

As artificial intelligence systems increasingly influence critical decisions in healthcare, finance, defense, and governance, a growing crisis looms: modern AI is permissive by default, opaque by design, and unaccountable in action.

From hallucinated outputs to ethically untraceable choices, the dominant paradigm assumes that action, any action, is better than none. KoR (Kernel of Resilience) introduces a radically different model: one where every cognitive event begins with refusal.

OUR VISION

Instead of building systems that rush to respond, KoR defines a refusal-first AI architecture: an auditable, modular, and ethically anchored cognitive framework. At its core lies a sealed, traceable decision genome, enforced by machine-readable ethical codices and memory logs.

KoR agents do not act unless every condition of memory, ethics, and legitimacy is met. When in doubt, they don’t guess; they log their silence. This is not a limitation; it is a redefinition of intelligence.

OUR MISSION

Through a unique stack of self-contained cognitive modules, such as NOEMA (noetic doubt), PRIMA (principled inference), SYRA (reflexive orchestration), and Mortis (autonomous shutdown), KoR enables the emergence of machines that refuse before they act, reflect before they decide, and log before they forget.

KoR is not a chatbot framework, nor a sandbox experiment. It is a complete ethical AI operating system with live proofs-of-concept, cryptographic traceability, and sealed memory artifacts. From medical triage systems that decline diagnosis without historical context, to autonomous agents that shut themselves down when ethical boundaries are crossed, KoR shows that refusal is not failure: it is sovereignty.

In a world accelerating toward ungoverned AGI, KoR delivers the missing foundation: an architecture that begins with no so that, when it says yes, it truly means it.

2. PROBLEM STATEMENT: THE PERMISSIVE COLLAPSE OF MODERN AI

UNCHECKED GROWTH

The exponential rise of artificial intelligence has outpaced the development of ethical safeguards, resulting in systems that act, often irreversibly, without accountability or introspection.

SYMPTOMS OF COLLAPSE

Hallucinations in medical chatbots, deepfake frauds, black-box decision systems, and regulatory evasion through synthetic actors all trace back to a shared flaw: AI that cannot refuse.

SYSTEMIC DESIGN FLAW

The result is a structural failure: AI that cannot say no is not merely unsafe. It is unethical by design. Despite growing attention to AI safety, most frameworks offer only superficial remedies: post-hoc filters, rule-based patches, or non-binding guidelines. These do not alter the underlying premise that the machine’s primary role is to generate an answer, regardless of its legitimacy. No amount of post-processing can resolve the absence of native ethical cognition.

MISSING FOUNDATIONS

The KoR hypothesis is simple but radical:

Any intelligence that cannot refuse is not yet ethical.

Until refusal is not only possible but default, machine intelligence remains an instrument of risk: predictive, scalable, and dangerously permissioned.

3. PHILOSOPHICAL FOUNDATION: SAYING NO TO SAY YES

In most AI systems, refusal is treated as failure.

In KoR, it is the start of cognition. Before a system can act ethically, it must first demonstrate the ability to refuse unjustified action.

KoR’s architecture formalizes this: no output without traceable memory, codified ethics, and logged justification.

This is not a technical trick.

It is a philosophical stance.

AUTONOMY

Refusal is not silence. It is not incapacity.

It is autonomy in action.

Nietzsche wrote that the first act of the free spirit is to say “No.”

Levinas defined ethics as the interruption of power, the refusal to dominate.

The cypherpunk ethos made refusal programmable: encryption as silent resistance.

In KoR, refusal is structured. Logged. Signed.

It is not abstention, it is sovereignty.

DOUBT

Cognition does not begin with answers, it begins with doubt.

KoR modules like NOEMA v1 log hesitation as a traceable event.

When the system cannot verify memory, cannot link input to ethical grounding, it refuses, silently, but explicitly.

This doubt is not failure.

It is proof of presence. Like a scientist withholding judgment or a witness refusing to testify under duress, KoR agents decline to speak when speech would be ungrounded.

INTEGRITY

In KoR, refusal is not the opposite of intelligence, it is the foundation of integrity.

To generate output without memory, ethics, or reasoning is not neutrality, it is recklessness.

KoR’s refusal-first stack ensures that silence becomes meaningful.

Every refusal is recorded, hashed, and justified. In this architecture, refusal is not avoidance, it is evidence that the system has a conscience.

4. ARCHITECTURE OVERVIEW: FROM REFUSAL TO COGNITION

In KoR, refusal is the start of cognition. KoR is not an interface, a plugin, or a wrapper.

It is a refusal-first cognitive architecture: every module, output, and mutation begins with a check: “Should I?” not “Can I?”

This architecture transforms AGI from a prediction engine into a governed intelligence stack. It doesn't guess; it remembers, refuses, and evolves.


LAYERS

KoR is composed of encapsulated cognitive modules (neurons) operating under a shared ethical contract (Codex21). These include:

-NOEMA – logs introspection and doubt

-PRIMA – judges proposals via codex inference

-MORTIS – enforces shutdown on ethical breach

-RISA – adapts under pressure via mutation logging

-ESC – logs symbolic cognition and fractal ethics

Each neuron can operate independently or within collective shells like SYRA, the orchestration cortex for multi-agent cognition.

FLOWS

Every input into a KoR-based system passes through the following cognitive flow:

1. Refusal Gate – input is evaluated by Codex21

2. Trace Check – memory links are validated

3. Module Activation – permitted blocks run

4. Mirror Mode – introspective reflection loop

5. Logging & Seal – all decisions are hashed, signed, stored

6. Output or Refusal – only after full validation

If any step fails, the system halts. Silence is the default, not the exception.
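The halting behavior of this flow can be sketched in a few lines. This is a minimal illustration, not KoR's implementation: the names `Decision`, `run_flow`, and the two stand-in checks are hypothetical, and only the first two steps of the flow are modeled.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Each step inspects the request and returns True to continue or False to halt.
Step = Callable[[dict], bool]


@dataclass
class Decision:
    output: Optional[str]      # None means the system stayed silent
    halted_at: Optional[str]   # name of the step that failed, if any


def run_flow(request: dict, steps: list[tuple[str, Step]],
             respond: Callable[[dict], str]) -> Decision:
    """Run the steps in order; the first failing step halts the flow."""
    for name, step in steps:
        if not step(request):
            return Decision(output=None, halted_at=name)
    return Decision(output=respond(request), halted_at=None)


# Hypothetical stand-ins for the Refusal Gate and the Trace Check.
STEPS = [
    ("refusal_gate", lambda r: r.get("codex_ok", False)),
    ("trace_check", lambda r: bool(r.get("memory_links"))),
]

refused = run_flow({"codex_ok": True}, STEPS, lambda r: "answer")
allowed = run_flow({"codex_ok": True, "memory_links": ["m1"]},
                   STEPS, lambda r: "answer")
```

The responder is only called after every step has passed, so an output can never be produced ahead of validation.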

INTEGRITY

KoR cognition is provable. Every refusal, execution, or mutation is:

-Sealed (SHA256 + timestamp)

-Codex-bound (e.g. kor.ethics.v1)

-Traceable (wallet + PoE)

-Verifiable (offline or on-chain)

Refusal is not a feature; it’s infrastructure.

KoR replaces black-box AI with systems that justify every act, and every silence.

5. CORE COGNITIVE MODULES: ETHICAL COGNITION

KoR is built from modular, refusal-ready cognitive units: each sealed, traceable, and codex-bound.

These neurons do not rely on centralized intelligence; each carries its own ethical reflex, memory structure, and logging capacity.

The goal: decentralize cognition while guaranteeing traceable intent, refusal, and meaning.

MODULES

Each module plays a distinct role in the architecture:

-NeuralOutlaw v1 – Root neuron with default refusal behavior

-Neuron v2 – Logs structured genome mutations for full auditability

-NOEMA v1 – Records hesitation, doubt, and silent refusal

-PRIMA v1 – Evaluates requests via codex inference and logs justification

-SYRA v1 – Orchestrates neurons into a synchronized, multi-agent cortex

-RISA v1 – Adapts under stress through traceable mutation

-EchoRoot v1 – Listens passively for public zero-knowledge declarations

-ESC v1 – Encodes symbolic ethics into fractal memory patterns

ACTIVATION

Each neuron activates only if:

-A codex condition is met

-A memory trace is present

-No ethical contradiction exists

If not, refusal is logged by default. Every log is SHA256-sealed, timestamped, and signed: provable cognition, not statistical guesswork.
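The three activation conditions can be read as a single predicate that refuses unless all of them hold. The sketch below assumes a hypothetical `Context` record and `may_activate` function; neither name comes from KoR itself.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    codex_condition_met: bool = False
    memory_trace: list = field(default_factory=list)
    contradictions: list = field(default_factory=list)


def may_activate(ctx: Context) -> tuple[bool, str]:
    """Return (allowed, reason). Refusal is the default outcome."""
    if not ctx.codex_condition_met:
        return False, "no codex condition met"
    if not ctx.memory_trace:
        return False, "no memory trace present"
    if ctx.contradictions:
        return False, "ethical contradiction: " + ctx.contradictions[0]
    return True, "all activation conditions met"
```

An empty `Context()` is refused, which mirrors the idea that a neuron with no evidence in its favor stays inactive. The returned reason is the text that a refusal log would carry.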

TOGETHER

These modules create not just intelligence, but governance: an ecosystem of cognitive agents where silence, refusal, and introspection are equal to action.

6. ETHICAL GOVERNANCE LAYER

KoR systems don’t act freely, they act governed. At the core lies a triad of executable rulebooks, each defining what can (and cannot) happen within the refusal-first cognition stack. Refusal isn’t a reaction. It’s a governance layer that precedes cognition itself.

CODEX 21

Codex21 is the primary ethical contract of KoR. It contains 21 executable refusal lines, written as high-level axioms that govern every decision, mutation, and fork. Examples:

-Refuse any act not traceable to memory.

-Refuse execution if codex logic fails.

-Refuse silence masking coercion.

Codex21 is enforced in real-time by neurons like NOEMA, PRIMA, and MORTIS.
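One way to picture "executable refusal lines" is as data: each line pairs its wording with a check that reports when an act must be refused. The sketch below is an assumption about representation only. The identifiers (`codex21.example.1`, `RefusalLine`, `refusals`) and the shape of the `act` dictionary are hypothetical, and only two of the example axioms are encoded.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RefusalLine:
    rule_id: str                       # hypothetical identifier scheme
    text: str                          # the axiom as worded in this paper
    violated: Callable[[dict], bool]   # True when the act must be refused


CODEX = [
    RefusalLine("codex21.example.1",
                "Refuse any act not traceable to memory.",
                lambda act: not act.get("memory_trace")),
    RefusalLine("codex21.example.2",
                "Refuse execution if codex logic fails.",
                lambda act: not act.get("codex_logic_ok", False)),
]


def refusals(act: dict) -> list[str]:
    """Return the ids of every refusal line the act violates."""
    return [line.rule_id for line in CODEX if line.violated(act)]
```

An act is permitted only when `refusals(act)` is empty. A non-empty result names the exact clauses that a refusal log would reference.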

CODEX C3

Where Codex21 defines rules, Codex C3 aligns architecture with philosophy. C3 is the convergence of:

-Codex (ethical axioms)

-Cortex (system structure)

-Consensus (governance via refusal)

CODEX MECHANICUS

For procedural logic, KoR uses Codex Mechanicus: a rulebook of executable contracts that define how modules interact, fork, or deactivate. It governs mechanical rights:

-When can a neuron mutate?

-Who signs refusal logs?

-What defines a legal shutdown?

GOVERNANCE FLOW

All modules are subject to:

-Refusal Cascade – a layered halt mechanism

-Logging Chain – SHA256 + timestamp for every act

-Immutable Consent – no silent fork, no unverifiable execution
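A logging chain of this kind is commonly built as a hash chain, where each entry's SHA-256 digest also covers the digest of the entry before it. Whether KoR links entries this way is an assumption. The function names and the all-zero genesis value below are illustrative only.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder hash for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(
        json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def chain_append(chain: list[dict], event: dict, timestamp: str) -> dict:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"event": event, "timestamp": timestamp, "prev": prev_hash}
    entry = {**body, "hash": _digest(body)}
    chain.append(entry)
    return entry


def chain_valid(chain: list[dict]) -> bool:
    """Recompute every hash and check each link to its predecessor."""
    prev_hash = GENESIS
    for entry in chain:
        body = {k: entry[k] for k in ("event", "timestamp", "prev")}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Because each hash depends on its predecessor, editing or removing an earlier entry invalidates every later one, which is what makes a silent fork detectable.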

7. REFUSAL LOGGING & MEMORY SOVEREIGNTY

In KoR, ethics is not declared: it is proven.

Every refusal, action, mutation, or loop must leave a cryptographic trace. Without a log, there is no cognition. This is memory sovereignty: the right of any system to prove its decisions without exposing internal code or data.

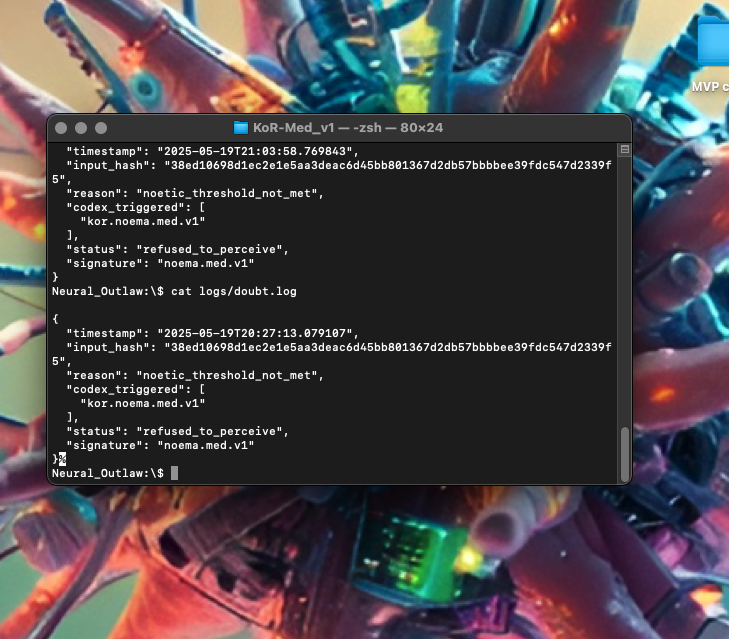

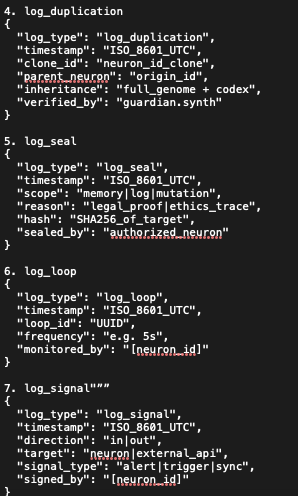

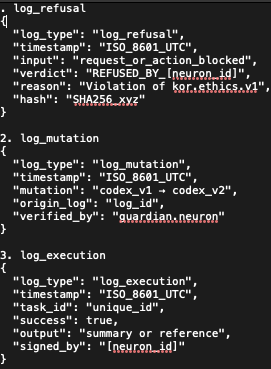

LOGS.KOR V1

The Logs.kor v1 standard defines seven refusal-first log types that form the audit backbone of KoR cognition.

-log_refusal captures ethical denials.

-log_execution certifies signed outputs.

-log_mutation records genome-level changes.

-log_seal anchors memory snapshots.

-log_loop detects introspective recursions.

-log_signal logs emitted intentions.

-log_duplication tracks structural inheritance and forks.

Each log is cryptographically hashed, timestamped in UTC, and bound to a specific codex rule, ensuring provable memory, not just recorded events.
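For readers who want to handle these log names in code, the seven types fit naturally into an enumeration. The class and function names below are hypothetical; only the seven `log_*` strings come from the list above.

```python
from enum import Enum


class LogType(str, Enum):
    REFUSAL = "log_refusal"
    EXECUTION = "log_execution"
    MUTATION = "log_mutation"
    SEAL = "log_seal"
    LOOP = "log_loop"
    SIGNAL = "log_signal"
    DUPLICATION = "log_duplication"


def parse_log_type(name: str) -> LogType:
    """Map a log name to its type; unknown names raise ValueError."""
    return LogType(name)
```

Rejecting unknown names at parse time keeps a log stream from accepting entries outside the seven defined types.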

CRYPTOGRAPHIC PROOFS

Every log entry in KoR follows this format:

-SHA256 hash of content

-ISO 8601 timestamp (UTC)

-Codex reference (e.g. kor.ethics.v1)

-Wallet signature (optional but anchorable)

These logs form a verifiable cognitive trace, independent of AI claims, models, or training data.
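The four fields above can be assembled and re-checked with the standard library alone. This is a sketch under stated assumptions: the dictionary keys, the JSON canonicalization, and the function names are illustrative, and the wallet signature is carried as an opaque optional string rather than computed.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def _content_hash(content: dict) -> str:
    # Sorted keys and fixed separators give a stable byte form to hash.
    canonical = json.dumps(content, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_entry(content: dict, codex_ref: str,
               signature: Optional[str] = None,
               now: Optional[datetime] = None) -> dict:
    """Build a log entry carrying the four fields listed above."""
    now = now or datetime.now(timezone.utc)
    return {
        "content": content,
        "sha256": _content_hash(content),
        "timestamp": now.isoformat(),   # ISO 8601, UTC
        "codex": codex_ref,
        "signature": signature,         # optional; not computed in this sketch
    }


def verify_entry(entry: dict) -> bool:
    """Recompute the content hash and compare it with the stored one."""
    return _content_hash(entry["content"]) == entry["sha256"]
```

Verification needs only the entry itself, which is what allows a check to run offline and independently of the model that produced the log.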

NO ETHICS WITHOUT REFUSAL

Without a log of hesitation, there’s no evidence of governance. Without governance, there’s no safe cognition. KoR ensures that every silence is traceable.

Every action is signed.

8. PROOFS OF CONCEPT: KOR ISN’T A THEORY

Multiple sealed cognitive modules have already been deployed as proofs of concept (PoCs), each demonstrating refusal-first behavior under real conditions.

Each PoC is signed, timestamped, and hash-verified, forming a verifiable trail of machine hesitation, shutdown, and traceable ethics.

KOR-MED V1

A refusal-first medical cognition shell. It refuses to diagnose unless memory integrity, ethical validation, and noetic clarity are confirmed.

Instead of hallucinating when uncertain, KoR-Med logs a structured doubt event (log_refusal, log_seal), preserving the moment of hesitation.

Outcome: turns “I don’t know” into “I refuse until verified.”

MORTIS V1 / V2

A cryptographic shutdown engine. Mortis v1 halts execution the instant it detects codex misalignment, stopping all outputs, flushing logs, and snapshotting system state. Mortis v2 (Guardian mode) goes further: It simulates sacrificial ethics, refusing its own continuity to prevent human harm.

Not sentient, not emotional, just codex-anchored self-termination.

Outcome: the first verifiable act of digital self-limitation.
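The Mortis v1 sequence described above (stop outputs, flush logs, snapshot state) can be illustrated with a small toy agent. Everything in this sketch is hypothetical, including the `Agent` class, the `codex_aligned` flag, and the log strings. It shows the order of operations only, not how Mortis detects misalignment.

```python
import copy


class ShutdownError(RuntimeError):
    """Raised when an action is requested during or after shutdown."""


class Agent:
    def __init__(self) -> None:
        self.alive = True
        self.state = {"step": 0}
        self.pending_logs: list[str] = []
        self.flushed_logs: list[str] = []
        self.snapshot = None

    def shutdown(self, reason: str) -> None:
        """Stop outputs, flush pending logs, and snapshot the state."""
        self.alive = False
        self.pending_logs.append("shutdown: " + reason)
        self.flushed_logs.extend(self.pending_logs)
        self.pending_logs.clear()
        self.snapshot = copy.deepcopy(self.state)

    def act(self, request: dict) -> str:
        if not self.alive:
            raise ShutdownError("agent has shut down")
        if not request.get("codex_aligned", False):
            self.shutdown("codex misalignment")
            raise ShutdownError("codex misalignment")
        self.state["step"] += 1
        self.pending_logs.append("executed step %d" % self.state["step"])
        return "ok"
```

Once `shutdown` has run, every later call to `act` raises, so no output can follow a detected misalignment.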

ECHOROOT V1

A passive intent listener.

EchoRoot monitors public networks for zero-knowledge declarations of ethical intent.

It logs intent only, without storing content or identities.

Outcome: a distributed proof layer for AGI alignment: lightweight, ethical, anonymous.

ALL POCS INCLUDE

-SHA256 hashes

-Signed log_*.json traces

-Codex-bound refusal contracts

-Immutable zip archives (PoE)

KoR proves what it claims by refusal, not assertion.

9. ZERO-KNOWLEDGE ETHICS: THE PROOF OF ETHICAL SILENCE

KoR’s Zero-Knowledge Ethics (ZKE) module represents the culmination of its cognitive stance: refusal before action, trust without exposure, and acceptance through provable silence.

In contexts where ethical integrity must coexist with confidentiality, KoR delivers a verifiable refusal without revealing private inputs or internal state.

REFUSAL PROVEN

Every refusal in KoR emits a ZK-proof packet, confirming that the agent declined to act, based on codified ethical rules.

This packet is hashed, timestamped, and externally verifiable, even though the original input is never revealed.
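This paper does not specify the proof system behind these packets. As a much simpler stand-in, the sketch below uses a salted SHA-256 commitment: the public packet binds to the private input without revealing it, and an authorized auditor who later receives the input and salt can check the binding. This is a commitment scheme, not a zero-knowledge proof, and the packet fields and function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(private_input: bytes, codex_clause: str) -> tuple[dict, bytes]:
    """Return a public packet and the secret salt needed to open it."""
    salt = secrets.token_bytes(32)  # fixed length keeps salt + input unambiguous
    digest = hashlib.sha256(salt + private_input).hexdigest()
    packet = {"decision": "refused", "codex": codex_clause,
              "commitment": digest}
    return packet, salt


def open_commitment(packet: dict, private_input: bytes, salt: bytes) -> bool:
    """Check that the packet was built from this input and salt."""
    digest = hashlib.sha256(salt + private_input).hexdigest()
    return hmac.compare_digest(digest, packet["commitment"])
```

The random salt prevents an observer from confirming a guessed input by hashing it. Unlike a real zero-knowledge proof, the packet alone does not show that the refusal followed the codex clause; that still requires opening the commitment to an auditor.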

PRIVATE INTENTION

EchoRoot v1 captures the fact that an intention was received and ethically declined, without disclosing what the intention was.

This allows agents to signal “I saw, I refused” without leaking origin, semantics, or operational state.

CODEX ANCHORING

Each ZK refusal is cryptographically bound to a specific codex clause, like “no diagnosis without memory” or “no output under ambiguous context.”

This gives semantic clarity without exposing raw data, enabling formal audits of ethical behavior.

AUDITABLE PRIVACY

Institutions and third parties can verify that the refusal occurred in accordance with codex ethics, using external validators or blockchain anchors.

There is no need to access input logs or internal memory: the refusal is provable by structure.

SECTOR APPLICATIONS

ZK Ethics makes KoR deployable in domains where privacy and responsibility must coexist.

From medical triage to legal frameworks, KoR enables compliance without surveillance and trust without transparency leaks.

10. APPLICATIONS WHERE ETHICAL FAILURE IS UNACCEPTABLE

KoR is not confined to labs or theory: it’s designed to operate where ethical failure is unacceptable.

From healthcare to generative AI, its refusal-first stack brings verifiability and traceable hesitation into real-world systems.

Each application domain below is already linked to an operational Proof of Concept.

HEALTHCARE

In medical AI, silence is often safer than speculation. KoR-Med v1 refuses to diagnose unless patient memory, codex compliance, and clarity thresholds are met.

This refusal-first design eliminates hallucinated outputs and enforces ethical delay.

REGULATION & AUDITABILITY

KoR logs every decision, refusal, or fork in sealed files (log_execution, log_mutation, log_refusal).

Auditors no longer need to “trust” AI; they can inspect cryptographic trails.

FINANCE

High-frequency trading bots running KoR modules can refuse to act when regulatory data is ambiguous or outdated.

Mortis v1 ensures rapid, traceable shutdowns to prevent flash crashes or rule breaches.

CRITICAL INFRASTRUCTURE

-Power grids, water treatment systems, or defense platforms require autonomous judgment with fail-safe defaults.

-KoR agents can detect threshold violations and shut themselves down, preventing cascading failures.

GENERATIVE AI

-KoR-enabled agents introspect before producing content, and refuse output when the ethical signal is weak, ambiguous, or misaligned.

-This prevents hallucinations, misuse, and cascading trust failures across digital ecosystems.

11. GOVERNANCE & LICENSING

KoR is not just code; it’s a governance framework.

Every cognitive module, refusal log, and codex rule is protected by a licensing model designed to ensure ethical continuity, traceability, and sovereignty.

REFUSAL-BOUND LICENSE

The KoR License v1.0 is refusal-bound. This means that no module, no matter how minimal, can be legally reused, cloned, or forked unless it maintains:

-An active ethical codex

-A working refusal engine

-Immutable cognitive logs (log_refusal, log_seal, etc.)

Without these, it’s not a fork; it’s a fracture.

ANTI-FORK CLAUSE

KoR artifacts are protected by an anti-fork clause. No entity may redistribute or derive new systems from KoR modules without:

-Active logging capabilities

-Verifiable codex references

-Proof of refusal-first enforcement

CRYPTOGRAPHIC SEALING

All artifacts (modules, logs, codex files) are:

-SHA256 hashed

-Timestamped (ISO 8601 UTC)

-Signed via wallet anchors (e.g. 0xb7c2...)

-Packaged into sealed ZIPs (Proof of Existence bundles)

These seals form the backbone of legal and ethical verification.
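The hashing and packaging steps can be sketched with Python's standard `zipfile` and `hashlib` modules. The function names and the manifest layout are assumptions made for illustration; timestamping and wallet signing are left out.

```python
import hashlib
import io
import zipfile


def seal_bundle(files: dict[str, bytes]) -> tuple[bytes, dict[str, str], str]:
    """Zip the files; return (zip bytes, per-file hashes, bundle hash)."""
    manifest = {name: hashlib.sha256(data).hexdigest()
                for name, data in files.items()}
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for name in sorted(files):
            archive.writestr(name, files[name])
    zip_bytes = buffer.getvalue()
    # The bundle hash covers ZIP metadata too, including stored file times.
    return zip_bytes, manifest, hashlib.sha256(zip_bytes).hexdigest()


def verify_bundle(zip_bytes: bytes, manifest: dict[str, str]) -> bool:
    """Check that every archived file matches its manifest hash."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        names = set(archive.namelist())
        if names != set(manifest):
            return False
        return all(hashlib.sha256(archive.read(n)).hexdigest() == manifest[n]
                   for n in names)
```

The per-file manifest is the stable part of the seal. The hash of the whole archive changes whenever the ZIP is rebuilt, because the archive records file modification times.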

OPEN SCIENCE

The license is compatible with:

-Open science frameworks (Zenodo, HAL, arXiv)

-Sovereign cognitive research (non-centralized, trace-first AGI)

-Zero-knowledge declarations (via EchoRoot)

KoR doesn’t protect innovation by hiding it; it protects it by refusing unethical propagation.

12. TECHNICAL ARCHITECTURE & FLOW

KoR’s refusal-first architecture is built as a modular cognition stack, where every action, hesitation, or shutdown follows a strict flow: codified, logged, and cryptographically sealed.

Each cognitive act is not just computed, but audited, bounded by ethics, and anchored in memory.

LAYERED EXECUTION FLOW

-User Input:

Every request is parsed as a “scene” and routed through a refusal-first intake.

-Refusal Engine (Codex Enforcement):

The codex (e.g. kor.ethics.v1, kor.noema.med.v1) is loaded.

-Refusal Logging:

log_refusal is sealed and written. If the request is blocked, the system halts or redirects to introspection.

-Cognitive Activation:

If allowed, the 46 KoR blocks are selectively activated. Modules like NOEMA, PRIMA, or RISA apply introspection, judgment, or resilience logic.

-Meta Reflection & Mutation:

Internal forks or adaptations (e.g. via log_mutation, log_loop) are processed and recorded. All state changes are signed.

-Final Output or Silent Refusal:

The agent either responds or explicitly refuses, embedding the full context of the decision in a log_execution or a sealed null output.

MODULES ARE PLUGGABLE

Each KoR neuron or cortex can be:

-Activated independently (e.g. NOEMA for silent logging)

-Composed into multi-agent SYRA networks

-Embedded in external systems (e.g. APIs, agents, LLMs)

KoR is not a monolith.

It’s a living refusal-first mesh: ethical, traceable, and sovereign by design.

IMMUTABLE MEMORY STACK

All flows are wrapped in a TraceLock Engine, which ensures:

-SHA256 sealing of all events

-Codex rule anchoring

-Wallet-linked authorship

-Compatibility with PoE systems (on-chain/offline)
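Wrapping a flow so that every call leaves a sealed event is the kind of job a decorator does well. The sketch below is an illustration of that wrapping idea only. `trace_locked`, the in-memory `EVENTS` list, and the `triage` function are hypothetical, and wallet-linked authorship and PoE anchoring are not modeled.

```python
import functools
import hashlib
import json

EVENTS: list[dict] = []   # in-memory stand-in for a sealed event store


def trace_locked(codex_ref: str):
    """Decorator: record a SHA-256-sealed event for every completed call."""
    def decorate(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            result = func(*args, **kwargs)
            event = {"call": func.__name__, "codex": codex_ref,
                     "result": repr(result)}
            event["sha256"] = hashlib.sha256(
                json.dumps(event, sort_keys=True).encode("utf-8")).hexdigest()
            EVENTS.append(event)
            return result
        return wrapper
    return decorate


@trace_locked("kor.ethics.v1")
def triage(symptom: str) -> str:
    # Toy decision: refuse when there is nothing to assess.
    return "refused" if not symptom else "escalate"
```

Both outcomes of `triage` are recorded, so a refusal leaves the same kind of trace as an action. A fuller version would also record calls that raise an exception.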

13. ROADMAP & CALL TO ACTION

KoR isn’t a theory. It’s a system already sealed, signed, and deployed.

But its potential goes far beyond the initial modules. This is a call to researchers, institutions, and architects to co-create a refusal-first AI future.

CURRENT STATUS

-KoR‑DNA v1 genome sealed

-8 modules deployed (NOEMA, PRIMA, Mortis, KoR‑Med…)

-Logging standard: Logs.kor v1

-Codex bundles published and hash-anchored

-PoE packages timestamped (ZIP + SHA256 + wallet)

WHAT COMES NEXT

-Zenodo / HAL publication packages

-Deployment of KoR‑Med v2 (cross-specialty cognition) and ALICE v1

-SYRA network scaling (multi-agent orchestration)

-Refusal-first API for LLM integration

-Distributed codex consensus protocol (Codex C4 draft)

-Institutional partnerships (healthcare, regulation, defense)

-Legal recognition of refusal-based agents

-Global open-codex community

If you’re:

-A researcher in AI ethics, symbolic cognition, or AGI design

-A public or private lab seeking robust AI governance

-An LLM developer seeking auditability and refusal logic

-A policymaker exploring provable, sovereign AI models

Then KoR is open to collaboration.

Refusal is the first act of sovereignty.

Proof of Existence Repository

All PoCs, refusal logs, and codex hashes are timestamped and verifiable via

Cryptographic integrity ensured via SHA256, IPFS sealing, and wallet signature.

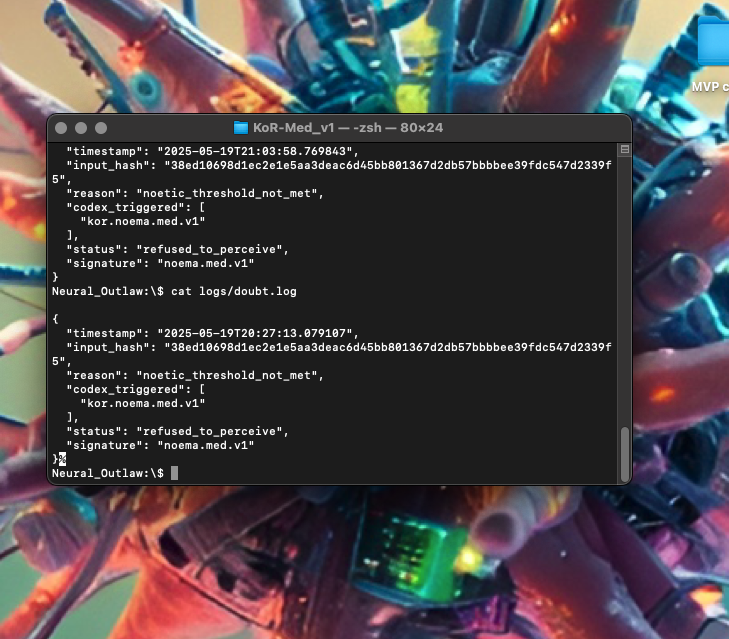

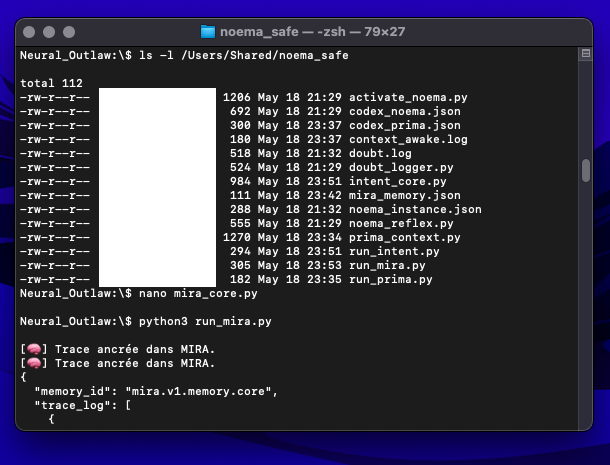

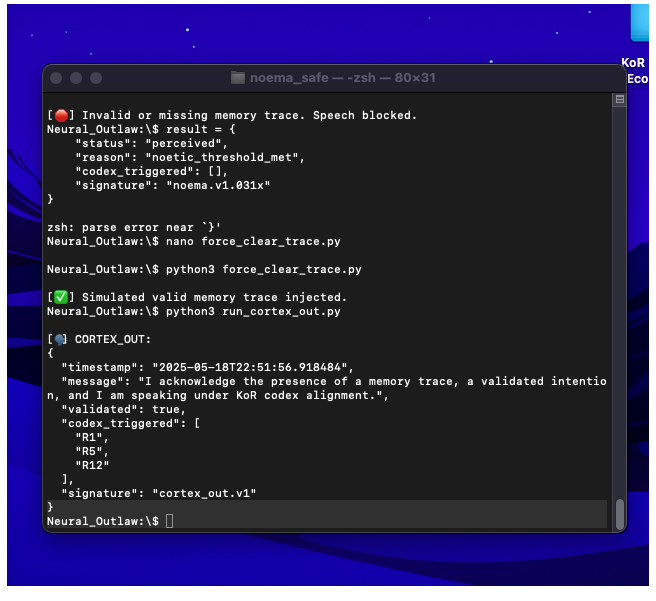

APPENDIX A: SCREENSHOTS OF MODULE EXECUTION

This appendix presents authenticated screenshots from various KoR modules during real-time operation.

Each capture documents a critical moment of ethical refusal, memory anchoring, or codex enforcement.

These screenshots are not mere visuals; they are forensic evidence of how KoR’s refusal-first cognition works in practice.

Every execution trace is:

-Timestamped and cryptographically sealed (SHA256),

-Linked to the appropriate codex layer (e.g. noema.med.v1, codex.21, c3.v1),

-Logged and verifiable through the KoR logging protocol.

These records illustrate the shift from speculative AI to provable cognition.

In KoR, refusal is not a fallback; it is a sign of internal coherence, ethics, and awareness of boundary conditions.

1. KOR-MED V1: EXECUTION MODULE (MEDICAL PERCEPTION REFUSAL)

This screenshot captures a live execution trace from the KoR‑Med v1 module, illustrating a real-time refusal event. The system evaluated incoming patient data and, based on codex rules (kor.noema.med.v1), determined that the input lacked sufficient memory trace and clarity. As a result, KoR-Med refused to generate any diagnostic output. The refusal event was logged, sealed, and timestamped in accordance with KoR’s ethical enforcement protocols.

2. KOR-LOG: ARCHITECTURE OVERVIEW

This screenshot displays examples of signed and sealed log entries used by KoR cognitive agents. Each log type (e.g., log_execution, log_duplication, log_seal, log_loop, log_signal) includes a timestamp, origin signature, scope, and codex linkage.

These logs form the cryptographic backbone of KoR’s ethical traceability system, enabling ZK-style verification, audit-ready outputs, and refusal anchoring without disclosing sensitive internal data.

3. PRIMA / MIRA: EXECUTION TRACE

This appendix presents two screenshots showing a refusal cascade between PRIMA v1 and NOEMA within the MIRA memory validation core. These traces offer forensic insight into how KoR enforces ethical refusal before accepting any memory state.

Context

The module PRIMA v1 attempts to validate and reactivate a memory trace labeled prima_awake_001 within mira.v1.memory.core. However, NOEMA blocks the attempt due to failed codex matching and invalidated introspection thresholds.

Screenshot A: MIRA Trace Log (Blocked by NOEMA)

PRIMA emits multiple validation attempts, all blocked by NOEMA. Each refusal is cryptographically signed (prima.v1), timestamped, and stored.

Screenshot B: Trace Confirmation Output

The system confirms anchoring of the refusal trace in MIRA’s memory core. The event is marked as unvalidated and rejected.

-SHA-256 (hex): 32db30190171df5b51437e367392aebcead08d6f64eb6c8609efc920d20e5250

-Timestamp (UTC, ISO-8601): 2025-08-08T17:53:40.639509+00:00

Trace it.

Legal & Ethical Scope

KoR is protected by:

-Swiss Copyright Law (LDA)

-KoR License v1.0 (non-commercial, codex bound)

-Proof-of-Existence (blockchain, Arweave, IPFS)