We explore the need for decentralized storage and analyze how unique challenges in this domain are overcome – by @0xPhillan and @FundamentalLabs

DISCLOSURE: FUNDAMENTAL LABS AND MEMBERS OF OUR TEAM HAVE INVESTED AND MAY HOLD POSITIONS IN TOKENS MENTIONED IN THIS REPORT. THESE STATEMENTS ARE INTENDED TO DISCLOSE ANY CONFLICT OF INTEREST AND SHOULD NOT BE MISCONSTRUED AS A RECOMMENDATION TO PURCHASE ANY TOKEN. THIS CONTENT IS FOR INFORMATIONAL PURPOSES ONLY AND YOU SHOULD NOT MAKE DECISIONS BASED SOLELY ON IT. THIS IS NOT INVESTMENT ADVICE.

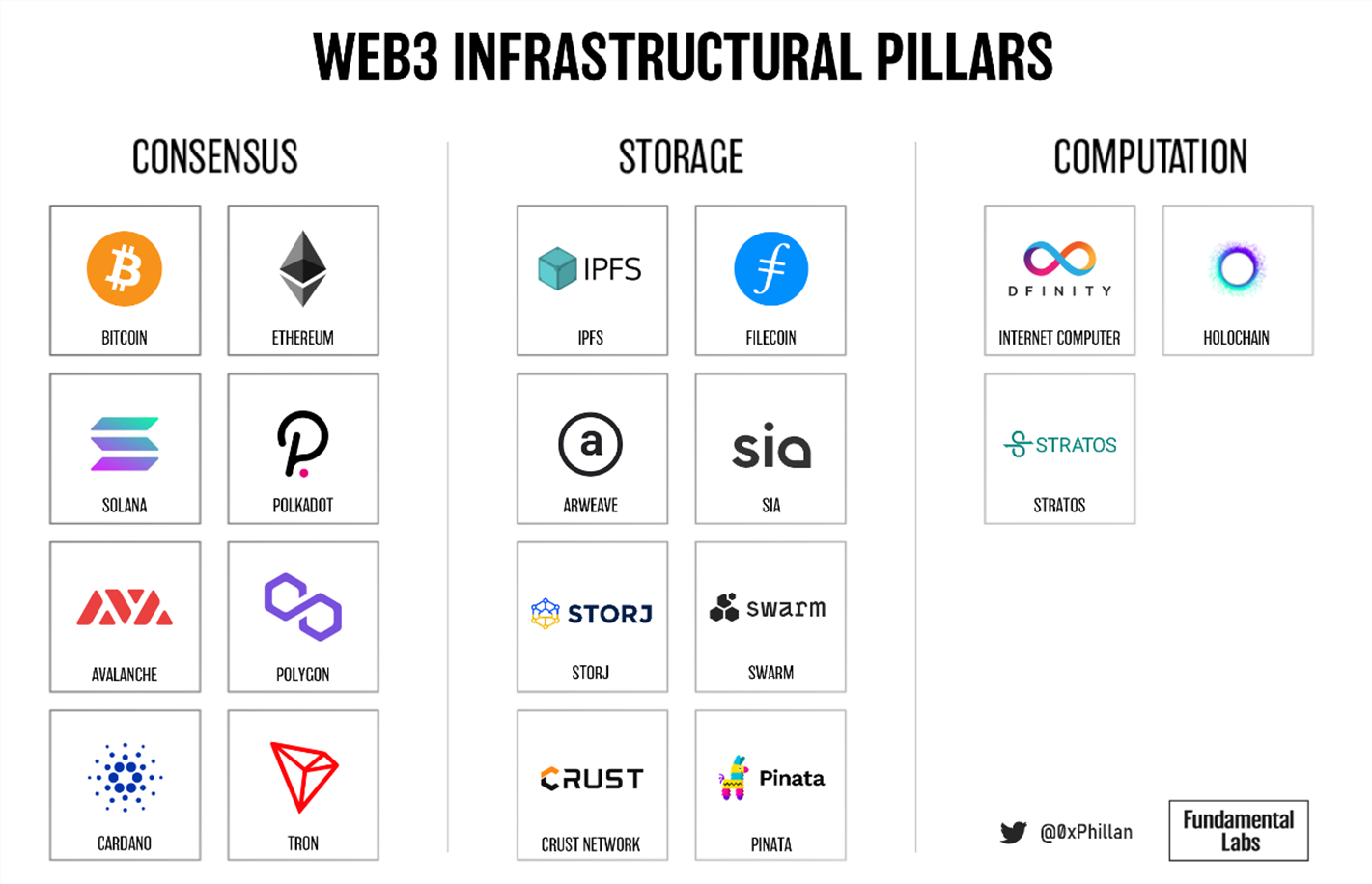

If we were to pave a way forward for the decentralization of the internet, we would ultimately converge on three pillars: consensus, storage, and computation. If humanity succeeds at decentralizing all three, we will have fully achieved the progression to the next iteration of the internet: Web3.

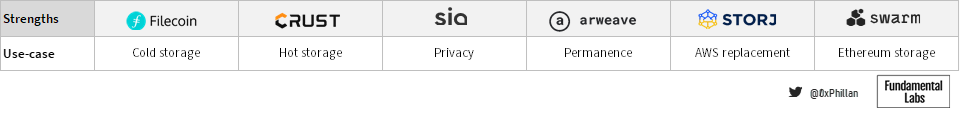

Storage, the second pillar, is rapidly maturing, with various storage solutions having emerged that suit specific use cases. In this piece, we will take a closer look at the decentralized storage pillar.

This publication is a summary of the full piece, which can be downloaded directly from decentralized storage on Arweave and Crust Network.

The need for decentralized storage

Blockchain perspective

From a blockchain perspective, we need decentralized storage because blockchains themselves were not designed to store large amounts of data. The mechanisms used to achieve blockchain consensus rely on small amounts of data (transactions) being arranged in blocks (a collection of transactions), and these being quickly shared across the network to be validated by nodes.

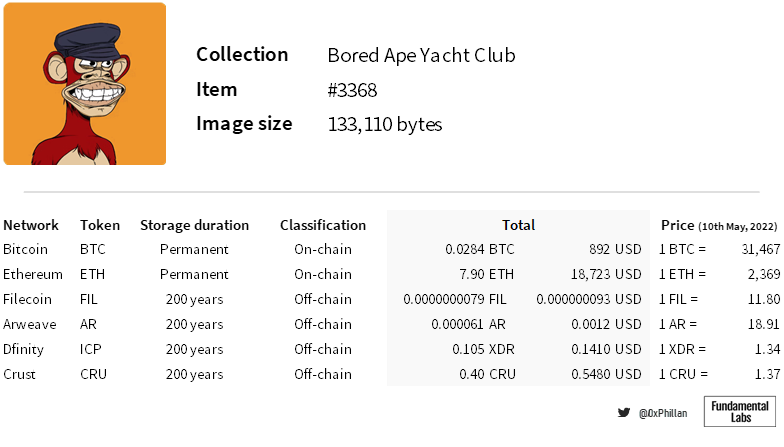

First off, storing data in these blocks is very expensive. Storing the entire image data of BAYC #3368 directly on layer 1 networks can cost over $18,000 at the time of writing.

Secondly, if we were to store large amounts of arbitrary data in these blocks, network congestion would increase sharply, driving up the price of using the network as users outbid one another on gas. This is the result of the implicit time-value of blocks: if users need to submit transactions to the network at a specific time, they will pay more in gas fees to have their transactions prioritized.

Therefore, it is recommended that the underlying metadata and image data of NFTs as well as the dApp frontends be stored off-chain.
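To make the cost argument concrete, the arithmetic can be sketched as follows. This is a rough estimate only: it assumes data is written to contract storage at roughly 20,000 gas per new 32-byte slot, and the file size, gas price and ETH price are hypothetical inputs, not the figures behind the estimate quoted above.

```python
WORD_BYTES = 32            # an EVM storage slot holds 32 bytes
GAS_PER_NEW_WORD = 20_000  # assumed approximate gas to write one new non-zero slot


def onchain_storage_cost_usd(size_bytes: int, gas_price_gwei: float, eth_price_usd: float) -> float:
    """Estimate the USD cost of writing size_bytes into contract storage."""
    words = -(-size_bytes // WORD_BYTES)   # ceiling division
    gas = words * GAS_PER_NEW_WORD
    eth = gas * gas_price_gwei * 1e-9      # 1 gwei = 1e-9 ETH
    return eth * eth_price_usd


# Hypothetical inputs: a 100 kB image, 50 gwei gas price, ETH at 3,000 USD.
example_cost = onchain_storage_cost_usd(100_000, gas_price_gwei=50, eth_price_usd=3_000)
print(f"Estimated cost: ${example_cost:,.0f}")
```

Because the cost scales linearly with file size, gas price and token price, even modest images become expensive under these assumptions.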

Centralized network perspective

If storing data on a blockchain is so expensive, why not just store the off-chain data on a centralized network?

Centralized networks are prone to censorship and are mutable. They require the user to trust the storage provider to keep the data safe. There is no guarantee that the operator of a centralized network can live up to the trust placed in them: data could be removed on purpose or accidentally, for example due to changes in policy by the storage provider, hardware failures or by being attacked by third parties.

NFTs

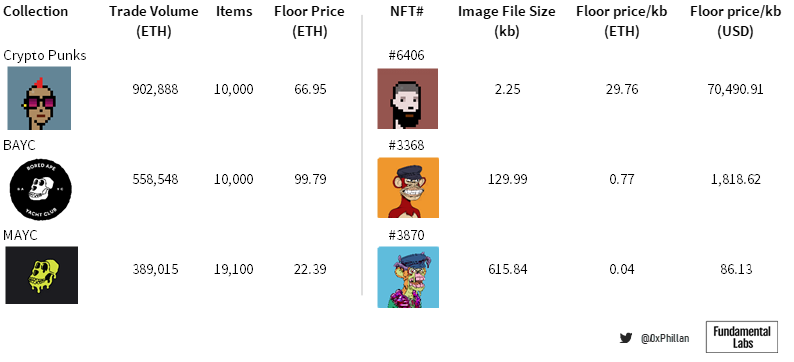

With the floor prices for some NFT collections exceeding US$100k and some having a value of up to US$70k per kb of image data, a promise is not enough to assure that data will be available at all times. Greater assurances are needed to ensure immutability and permanence of the underlying NFT data.

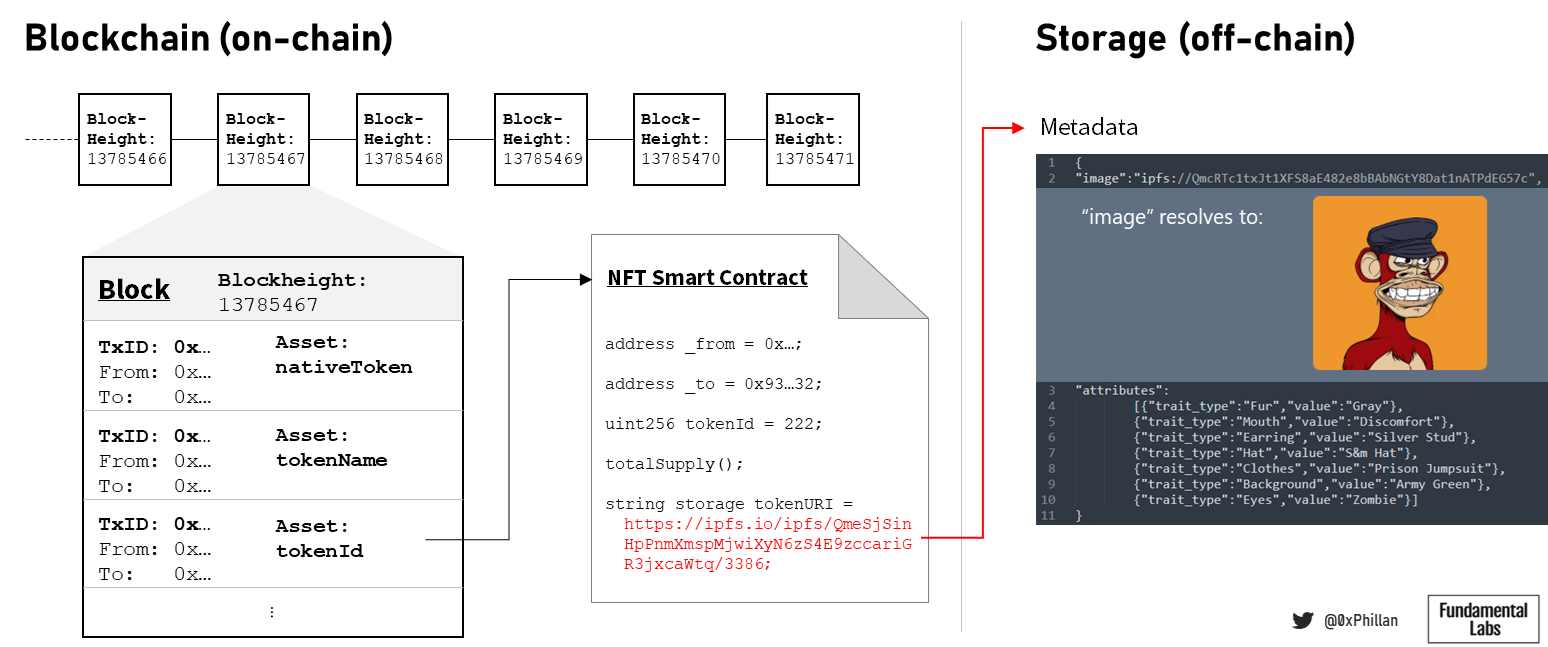

NFTs themselves do not actually contain any image data, instead they contain a pointer to metadata and image data that is stored off-chain. It is this metadata and image data that needs to be secured, as if it disappears, an NFT would merely be an empty container.
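The pointer relationship can be illustrated with a small sketch. The URIs, field names and in-memory "store" below are hypothetical placeholders chosen for illustration; they are not taken from any specific collection or storage network.

```python
from typing import Optional

# Hypothetical off-chain store mapping URIs to content.
offchain_store = {
    "ipfs://metadata-placeholder": {"name": "Example #1", "image": "ipfs://image-placeholder"},
    "ipfs://image-placeholder": b"<image bytes>",
}

# What lives on-chain: a token id and a pointer, but no image data.
onchain_token = {"token_id": 1, "token_uri": "ipfs://metadata-placeholder"}


def resolve_image(token: dict, store: dict) -> Optional[bytes]:
    """Follow the on-chain pointer to the metadata, then to the image."""
    metadata = store.get(token["token_uri"])
    if metadata is None:
        return None  # metadata is gone: the token is an empty container
    return store.get(metadata["image"])


print(resolve_image(onchain_token, offchain_store))  # image bytes while the data exists
print(resolve_image(onchain_token, {}))              # None once the off-chain data is lost
```

The token itself is unchanged in both calls; only the availability of the off-chain data differs.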

It can be argued that the value of NFTs is not primarily driven by the metadata and image data they refer to, but instead that it’s driven by communities that build a movement and an ecosystem around their collections. While that may hold true, without the underlying data the NFTs would have no meaning and without meaning communities could not form.

Moving beyond profile pictures and art collectibles, NFTs can also represent ownership of real-world assets, such as real estate or financial instruments. Such data holds an extrinsic real-world value, and the preservation of every byte of data that underlies the NFT is at least as valuable as the on-chain NFT, due to what it represents.

dApps

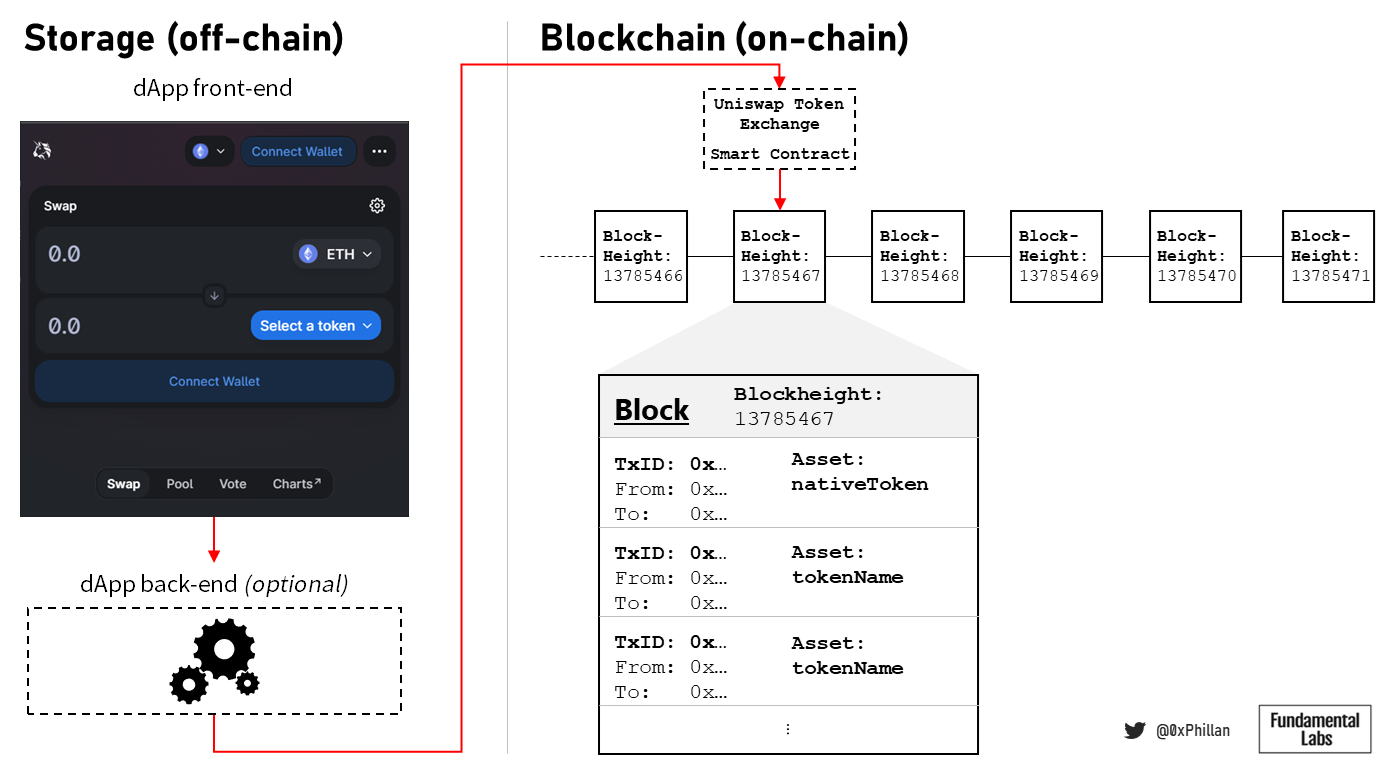

If NFTs are goods that live on blockchains, then dApps can be thought of as services that live on, and facilitate interaction with, blockchains. dApps are a combination of a frontend user interface that exists off-chain and a smart contract that exists on the network and interacts with a blockchain. Sometimes these also have a simple backend that moves certain calculations off-chain to reduce the gas required, and thus the costs incurred by end-users, for certain transactions.

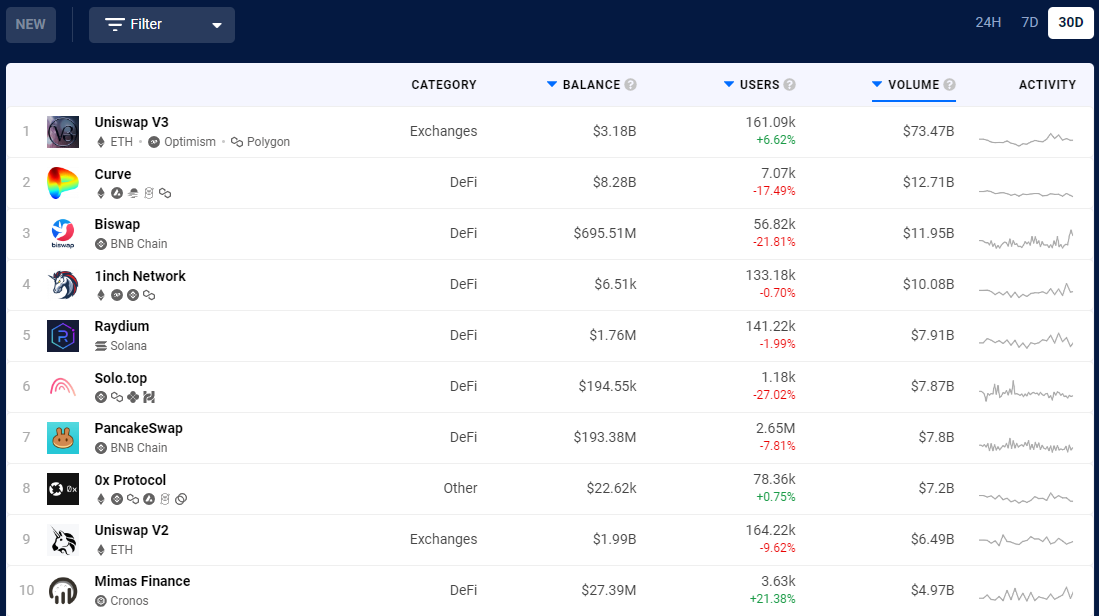

While the value of a dApp should be considered in the context of the purpose of the dApp (e.g., DeFi, GameFi, social, metaverses, name services etc.), the amount of value that dApps facilitate is staggering. At time of writing, the top 10 dApps by volume listed on DappRadar collectively facilitated transfers north of US$150bn within the last 30 days.

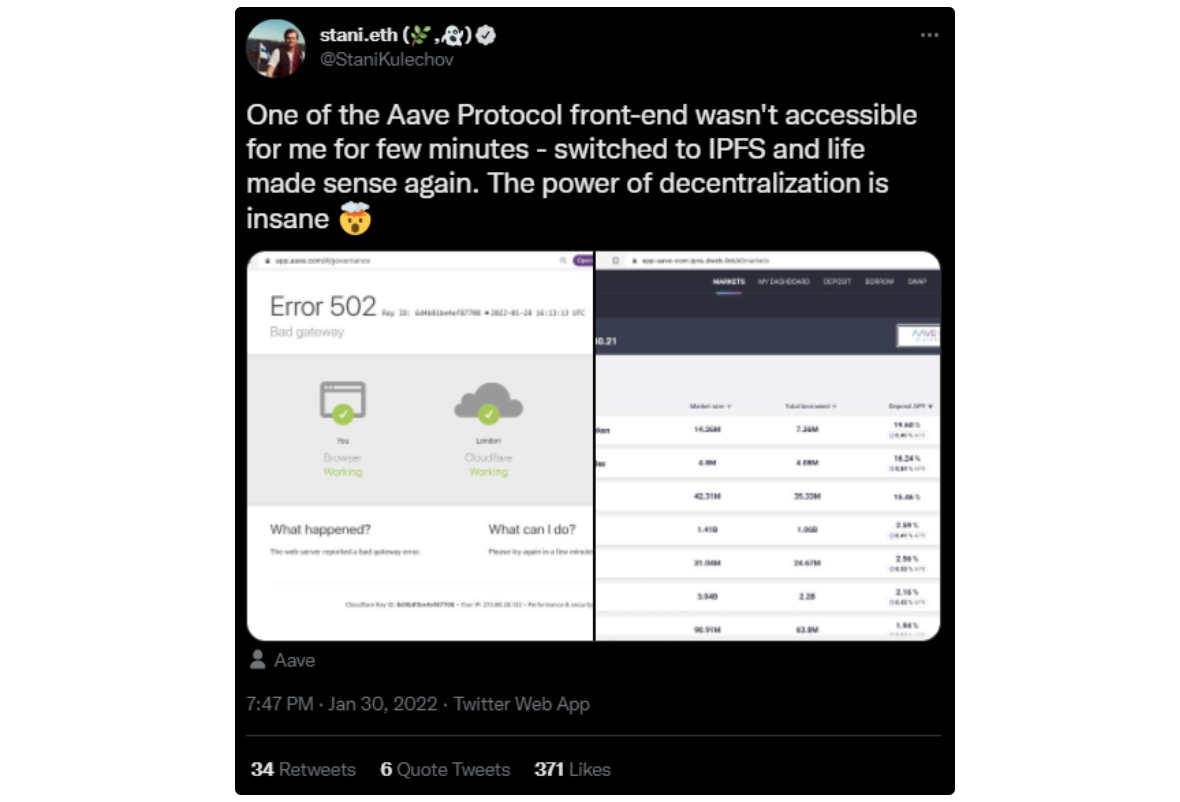

Although the core mechanics of dApps are executed through smart contracts, their accessibility by end-users is ensured through their frontends. Assuring dApp frontend availability is thus, in a sense, assuring the availability of the underlying service.

Decentralized storage reduces the likelihood of server malfunctions, DNS hacks, or a centralized entity removing access to the dApp frontend. Even if development of a dApp were to cease, the frontend and access to the smart contracts through that frontend can persist.

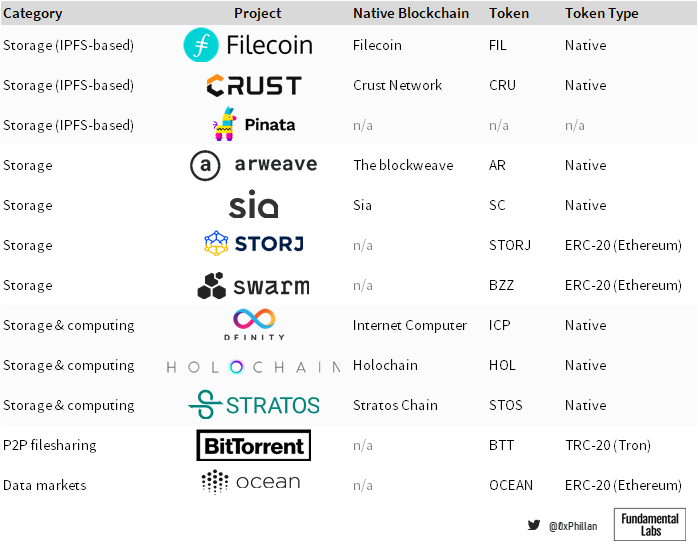

The decentralized storage landscape

Blockchains such as Bitcoin and Ethereum primarily exist to facilitate value transfers. When it comes to decentralized storage networks, some networks employ this approach as well: they use a native blockchain to record and track storage orders, which represent a value transfer in exchange for storage services. However, this is only one of many potential approaches – the storage landscape is vast, and varying solutions with varying trade-offs and use-cases have emerged over the years.

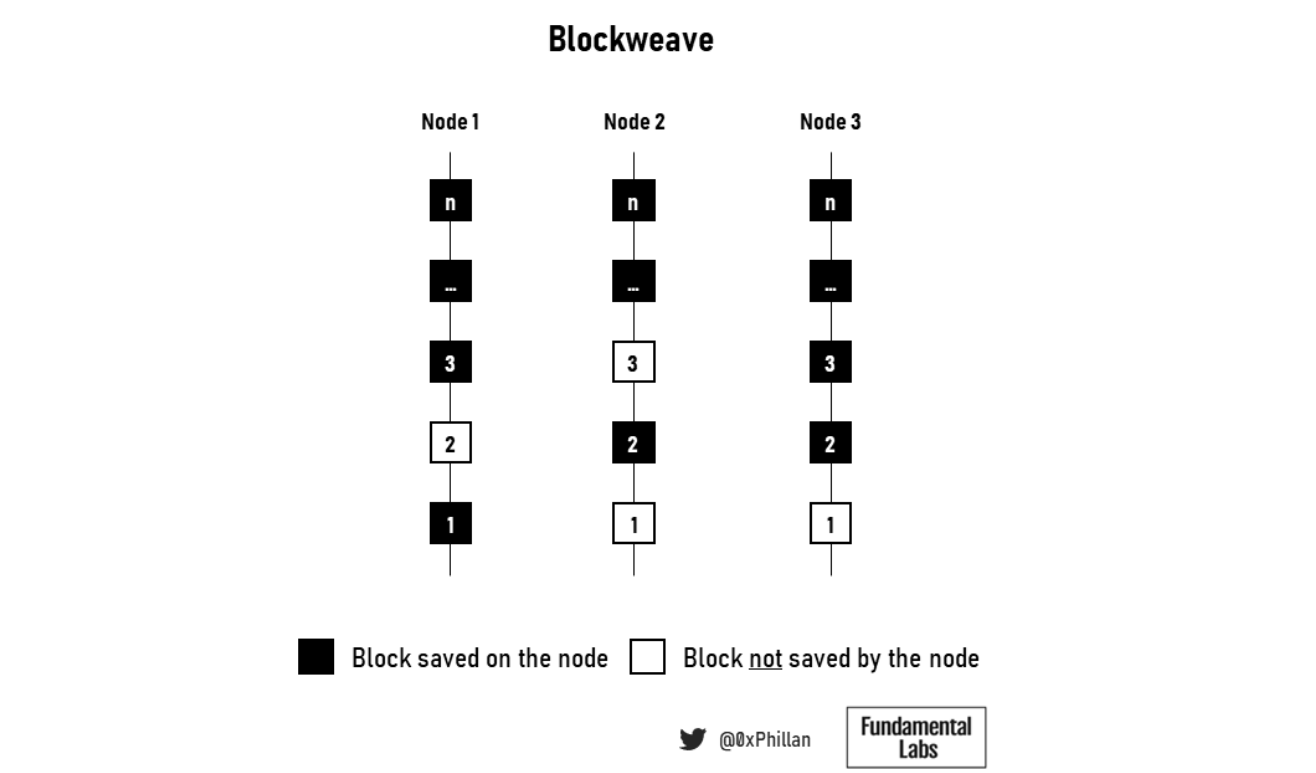

Despite many differences, all the above projects have one thing in common: none of these networks replicate all data across all nodes, as is the case with the Bitcoin and Ethereum blockchains. In decentralized storage networks, immutability and availability of stored data are not achieved through a majority of the network storing all data and validating data that is chained in succession, as is the case in Bitcoin and Ethereum. That said, as mentioned earlier, many networks do opt to use a blockchain to track storage orders.

It is unsustainable to have all nodes on a decentralized storage network store all data, because the overhead cost of running the network would quickly raise storage costs for users and would ultimately drive the network to greater centralization of a few node operators that can afford the hardware costs thereof.

As a result, decentralized storage networks have very different challenges to overcome.

Challenges surrounding decentralization of data

Recalling the previously mentioned limitations regarding the storage of data on-chain, it becomes evident that decentralized storage networks must store data in a way that does not impact the value transfer mechanisms of the network, while ensuring that data remains persistent, immutable and accessible. In essence, a decentralized storage network must be capable of storing data, retrieving data, and maintaining data while ensuring that all actors within the network are incentivized for the storage and retrieval work that they do, while also upholding the trustless nature of decentralized systems.

These challenges can be summarized in the questions below:

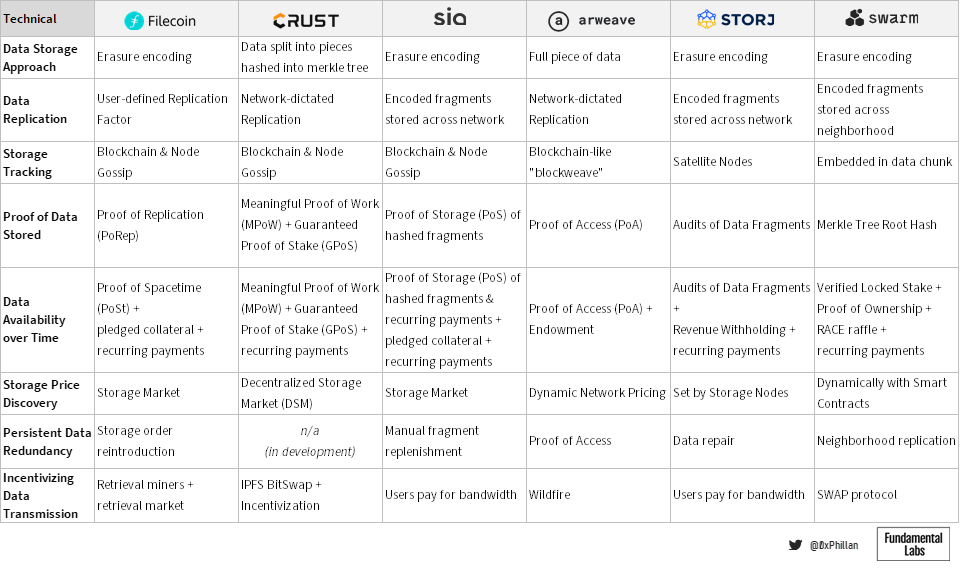

- Data storage format: store full files or file fragments?

- Replication of data: across how many nodes to store the data (full files or fragments)?

- Storage tracking: how does the network know where to retrieve files from?

- Proof of data stored: have the nodes stored the data they were asked to store?

- Data availability over time: is the data still stored over time?

- Storage price discovery: how is the storage cost determined?

- Persistent data redundancy: if nodes leave the network, how does the network ensure data is still available?

- Data transmission: network bandwidth comes at a cost – how to ensure nodes retrieve data when asked?

- Network tokenomics: apart from ensuring that data is available on the network, how does the network ensure the network will be around in the long-term?

The various networks that have been explored as part of this research employ a wide range of mechanisms with certain trade-offs to achieve decentralization.

For a deeper comparison of the above networks on each of the challenges, as well as detailed profiles of each of these networks, please refer to the full research piece that can be found on Arweave or Crust Network.

Data Storage Format

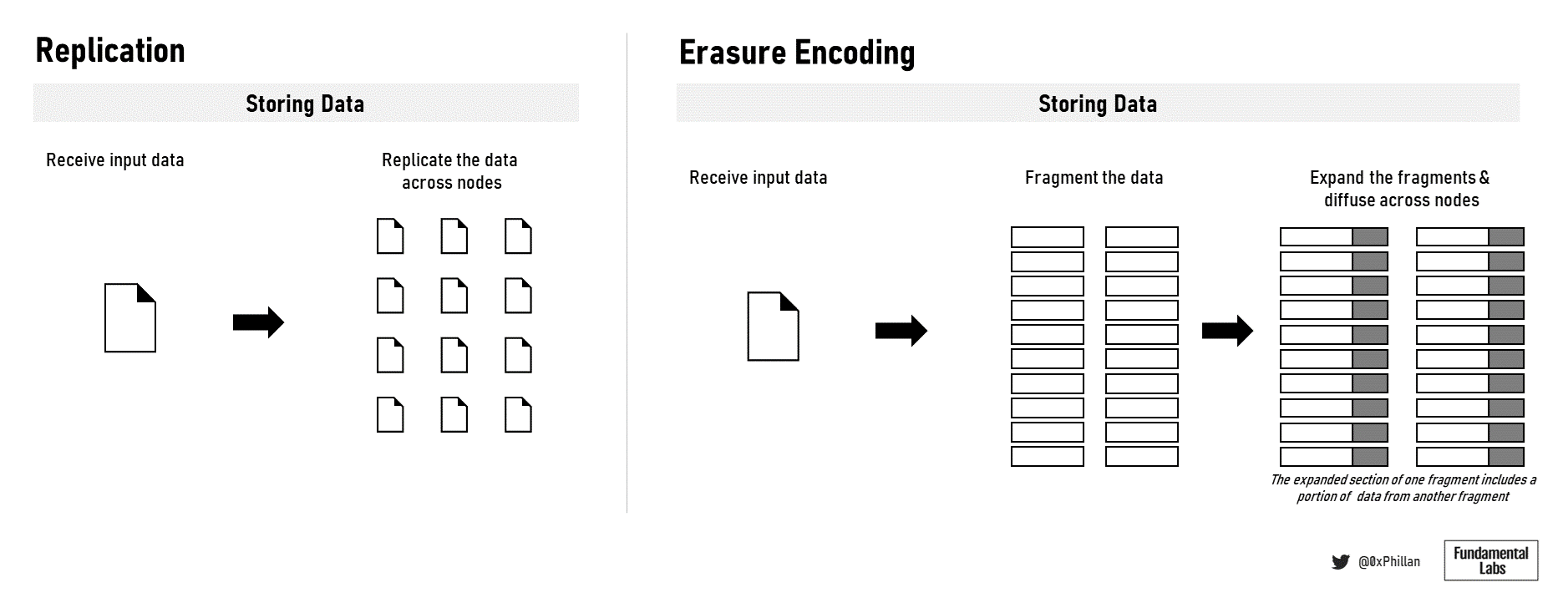

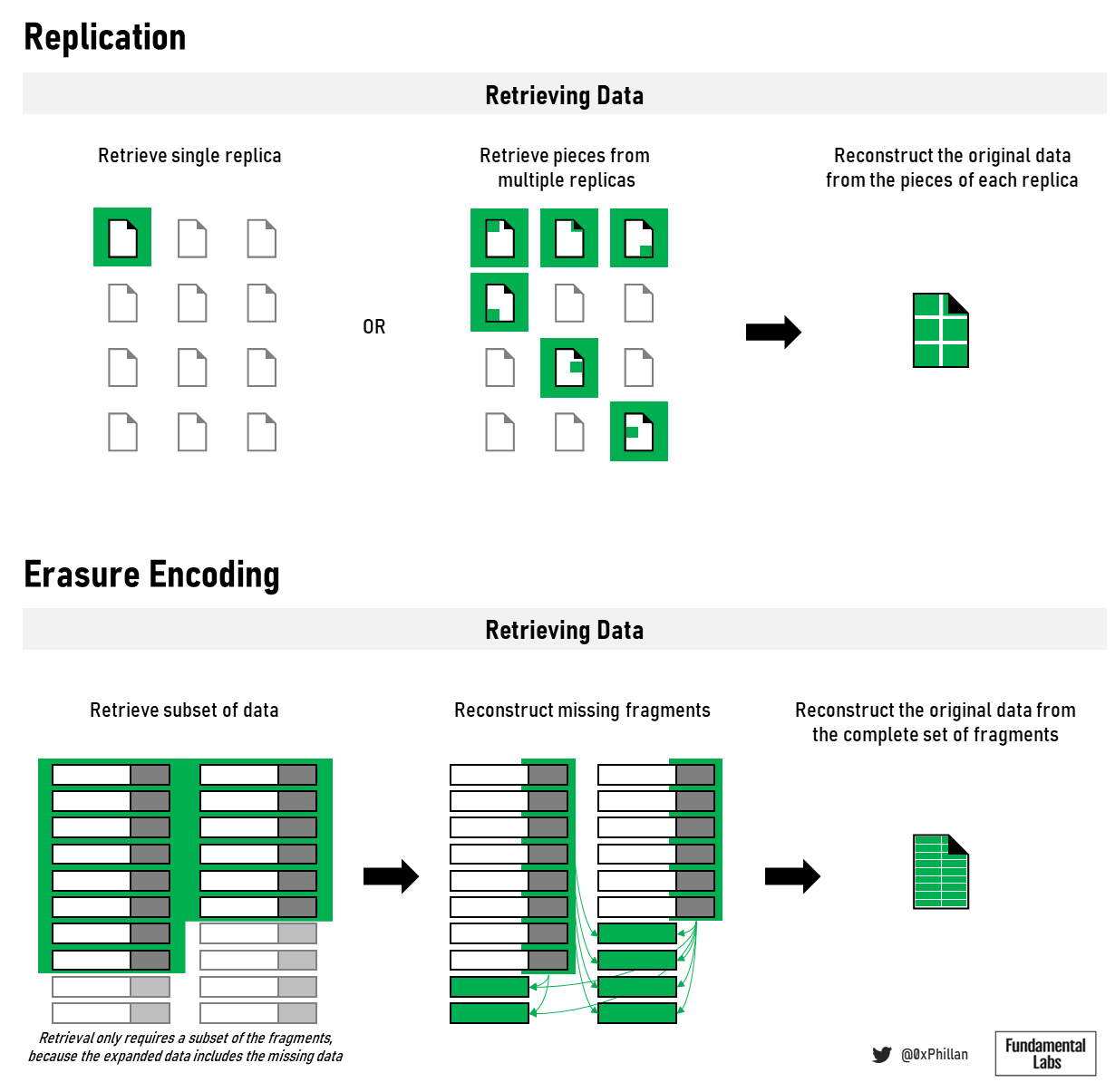

Amongst these networks there are two primary approaches to storing data on the network: storing full files and using erasure encoding. Arweave and Crust Network store full files, while Filecoin, Sia, Storj and Swarm all use erasure encoding. In erasure encoding, data is broken down into constant-size fragments, which are each expanded and encoded with redundant data. The redundant data saved into each fragment makes it so that only a subset of the fragments is required to reconstruct the original file.
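The core idea of erasure encoding, that a subset of fragments suffices to rebuild the file, can be shown with a deliberately simple sketch. It uses a single XOR parity fragment, which tolerates the loss of exactly one fragment. This is a simplification chosen for illustration: the networks above use stronger codes with their own parameters, and the function names here are hypothetical.

```python
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size fragments and append one XOR parity fragment."""
    frag_len = -(-len(data) // k)                  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")       # pad so all fragments are equal size
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    parity = bytes(frag_len)
    for fragment in fragments:
        parity = xor_bytes(parity, fragment)
    return fragments + [parity]


def decode(fragments: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the data when at most one fragment (data or parity) is missing."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("a single-parity code tolerates only one lost fragment")
    restored = list(fragments)
    if missing:
        present = [f for f in fragments if f is not None]
        rebuilt = bytes(len(present[0]))
        for f in present:
            rebuilt = xor_bytes(rebuilt, f)        # XOR of the survivors yields the lost fragment
        restored[missing[0]] = rebuilt
    return b"".join(restored[:-1])[:original_len]  # drop parity and padding


data = b"decentralized storage"
stored = encode(data, k=3)   # 3 data fragments + 1 parity fragment
stored[1] = None             # one storage node disappears
recovered = decode(stored, len(data))
```

Here any three of the four fragments are enough to recover the file; production schemes generalize this so that any k of n fragments suffice.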

Data Replication

In Filecoin, Sia, Storj and Swarm, the networks determine the number of erasure-encoded fragments and the extent of redundant data to be stored in each fragment. However, Filecoin also allows the user to set a replication factor, which determines how many separate physical devices the erasure-encoded fragments should be replicated on as part of a storage deal with a single storage miner. If users want to store files with different storage miners, they must engage in a separate storage deal with each. Crust and Arweave let the network dictate replication, although on Crust it is also possible to set a replication factor manually. On Arweave, the proof of storage mechanism incentivizes nodes to store as much data as possible. As a result, the upper replication limit for Arweave is the total number of storage nodes on the network.

The approach used for storing and replicating data will impact how data can be retrieved by the network.

Storage Tracking

After the data has been distributed across nodes in the network in whichever form the network stores it, the network needs to be able to track the stored data. Filecoin, Crust and Sia all use a native blockchain that tracks storage orders, while storage nodes also maintain a local list of network locations. Arweave uses a blockchain-like structure. Unlike blockchains such as Bitcoin and Ethereum, on Arweave, a node can decide for itself whether to store data from a block. Hence, if one compares the chains of multiple nodes on Arweave, they will not be identical – instead, certain blocks will be missing on some nodes that can be found on others.

Finally, Storj and Swarm use two entirely different approaches. In Storj, a second node type, the satellite node, acts as a coordinator for a set of storage nodes, managing and tracking where data is stored. In Swarm, the address of data is embedded directly within the data chunk. When data is retrieved, the network knows where to look based on the data itself.
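The idea of locating data from the data itself can be sketched with content addressing. In this minimal illustration, a chunk's address is the hash of its content and the responsible node is the one whose identifier is closest to that address by XOR distance. Both choices are assumptions made for the sketch: SHA-256 stands in for whatever hash a real network uses, and the closeness rule is a generic one rather than Swarm's exact routing.

```python
import hashlib
from typing import List


def chunk_address(chunk: bytes) -> bytes:
    """Derive the address of a chunk from its content."""
    return hashlib.sha256(chunk).digest()


def closest_node(address: bytes, node_ids: List[bytes]) -> bytes:
    """Pick the node whose id has the smallest XOR distance to the address."""
    target = int.from_bytes(address, "big")
    return min(node_ids, key=lambda node_id: int.from_bytes(node_id, "big") ^ target)


# Hypothetical node identifiers for the example.
nodes = [hashlib.sha256(bytes([i])).digest() for i in range(8)]
address = chunk_address(b"hello, storage network")
responsible = closest_node(address, nodes)
```

Anyone holding the address can repeat the same calculation to find where to ask for the chunk, so no separate location registry is needed.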

Proof of data stored

When it comes to proving how data is stored, each network employs its own unique approach. Filecoin uses proof-of-replication, a proprietary storage proof mechanism which first stores the data on a storage node and then seals the data in a sector. The sealing process makes it so that two replicated pieces of the same data are provably unique from one another, thus ensuring that the right number of replicas is stored on the network (hence “Proof of Replication”).

Crust breaks a piece of data down into many smaller pieces which are hashed into a Merkle tree. By comparing the hashing results of individual pieces of data stored on the physical storage device against the expected Merkle tree hashes, Crust can verify whether files have been stored correctly. This is similar to Sia’s approach, with the difference that Crust stores the entire file on each node, while Sia stores erasure-encoded fragments. Crust can store the entire file on a single node and still achieve privacy by using the node’s Trusted Execution Environment (TEE), a sealed-off hardware component that even the hardware owner cannot access. Crust calls this proof of storage algorithm “Meaningful Proof of Work”, where “meaningful” indicates that new hashes are only calculated when changes are made to the stored data, thus reducing meaningless operations. Both Crust and Sia store the Merkle tree root hash on a blockchain, which acts as a source of truth for the verification of data integrity.
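The Merkle tree check described above can be sketched in a few lines. This is a generic illustration rather than Crust's or Sia's actual implementation: the piece size, the use of SHA-256 and the rule of duplicating the last hash on odd levels are all assumptions.

```python
import hashlib
from typing import List


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(pieces: List[bytes]) -> bytes:
    """Hash each piece, then hash pairs upward until a single root remains."""
    if not pieces:
        raise ValueError("at least one piece is required")
    level = [sha256(piece) for piece in pieces]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumed rule: duplicate the last hash on odd levels
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def verify_storage(stored_pieces: List[bytes], expected_root: bytes) -> bool:
    """Compare the root of what is on disk with the root recorded as source of truth."""
    return merkle_root(stored_pieces) == expected_root


pieces = [b"piece-0", b"piece-1", b"piece-2"]
recorded_root = merkle_root(pieces)                 # would be recorded on-chain
intact = verify_storage(pieces, recorded_root)
corrupted = verify_storage([b"piece-0", b"tampered", b"piece-2"], recorded_root)
```

Only the 32-byte root needs to live on the blockchain, yet any change to any stored piece changes the recomputed root.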

Storj checks whether data has been stored properly through data audits. Data audits are similar to how Crust and Sia use Merkle trees to validate pieces of data. On Storj, once sufficient nodes have returned their audit results the network can determine which of the nodes are faulty based on the majority response, instead of comparing against a blockchain source of truth. This mechanism in Storj is intentional, as the developers believe that reducing network wide coordination through a blockchain can lead to performance improvements both in terms of speed (no need to wait for consensus) and bandwidth usage (no need for entire network to regularly communicate with the blockchain).
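The majority-based decision can be sketched as follows. This covers only the "compare against the majority response" idea from the paragraph above; it is not Storj's audit protocol, and the node names and response values are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def find_faulty_nodes(audit_responses: Dict[str, str]) -> List[str]:
    """Return the nodes whose audit response differs from the most common response."""
    majority_value, _ = Counter(audit_responses.values()).most_common(1)[0]
    return sorted(node for node, value in audit_responses.items() if value != majority_value)


# Hypothetical audit round: each node returns a hash of the audited data.
responses = {"node-a": "hash-1", "node-b": "hash-1", "node-c": "hash-2", "node-d": "hash-1"}
faulty = find_faulty_nodes(responses)
```

No blockchain lookup is involved: the coordinator only needs enough responses to establish a majority.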

Arweave uses a cryptographic proof-of-work puzzle to determine whether files have been stored. In this mechanism, in order for a node to be able to mine the next block, they need to prove they have access to the previous block and another random block from the network’s block history. Because in Arweave the uploaded data is stored directly in the block, proving access to the previous block proves the storage provider did indeed properly save the files.
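A minimal sketch of this access requirement is shown below. How the random historical block is selected is an assumption made for illustration (here it is derived from the hash of the previous block), and the function names are hypothetical rather than Arweave's.

```python
import hashlib
from typing import Dict


def recall_block_index(prev_block_hash: bytes, chain_height: int) -> int:
    """Assumed rule: derive the index of the random historical block from the previous block's hash."""
    digest = hashlib.sha256(prev_block_hash).digest()
    return int.from_bytes(digest, "big") % chain_height


def can_mine(prev_block_hash: bytes, chain_height: int, local_blocks: Dict[int, bytes]) -> bool:
    """A node can attempt the next block only if it holds the previous block and the recall block."""
    prev_index = chain_height - 1
    recall_index = recall_block_index(prev_block_hash, chain_height)
    return prev_index in local_blocks and recall_index in local_blocks


# A node that stores every block can always take part.
full_node = {i: f"block-{i}".encode() for i in range(10)}
prev_hash = hashlib.sha256(full_node[9]).digest()
full_node_eligible = can_mine(prev_hash, 10, full_node)

# A node missing the recall block is locked out of this round.
missing = recall_block_index(prev_hash, 10)
partial_node = {i: b for i, b in full_node.items() if i != missing}
partial_node_eligible = can_mine(prev_hash, 10, partial_node)
```

Since a node cannot predict which historical block will be required, storing more of the history raises its chance of being eligible.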

Finally, Swarm also uses Merkle trees, with the difference that Merkle trees are not used to determine file locations, but instead data chunks are directly stored within Merkle trees. When storing data on Swarm, the root hash of the tree, which is also the address of the data stored, is proof that the file was properly chunked and stored.

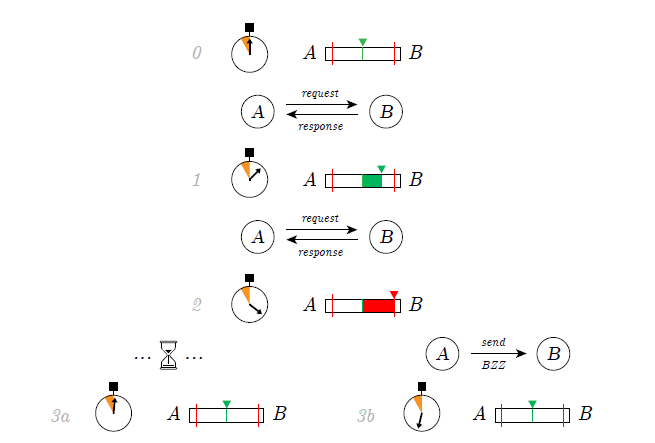

Data availability over time

When it comes to determining that data is stored over a certain period of time, again, each network uses a unique approach. In Filecoin, to reduce network bandwidth, the storage miner is required to run the proof of replication algorithm consecutively over the period for which the data is meant to be stored. The resulting hashes of each time period prove that the storage space has been occupied with the right data over a specific period of time, hence “Proof of Spacetime”.

Crust, Sia and Storj run their verification of random data fragments at regular intervals and report the results back to their coordination mechanism: blockchains for Crust and Sia, and satellite nodes for Storj. Arweave ensures consistent availability of the data through its Proof-of-Access mechanism, which requires miners to prove they have access not only to the last block, but also to a random historical block. Storing older and rarer blocks is incentivized, as that increases the likelihood that the miner can win the proof-of-work puzzle, for which access to a specific block is a prerequisite.

Swarm on the other hand runs raffles at regular intervals that reward nodes for holding not-so-popular data over time, while also running a proof of ownership algorithm for data that has been promised by a node to be stored over a longer period.

Filecoin, Sia and Crust require nodes to deposit collateral to become a storage node, while Swarm only requires it for long-term storage requests. Storj does not require upfront collateral, but Storj will withhold some of the storage revenue from the miner instead. Finally, all networks regularly pay out to nodes in certain intervals for the time periods that they have provably stored data.

Storage price discovery

To determine the price of storage, Filecoin and Sia use storage markets, where storage providers set their asking prices and storage users set what they are willing to pay, along with some other settings. The storage market then connects users with storage providers that meet their requirements. Storj applies a similar approach, with the key difference that there is no single network wide marketplace that connects with all nodes on the network. Instead, each satellite has its own set of storage nodes that it interacts with.
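The matching step of such a storage market can be sketched as below. The fields, units and the "cheapest eligible provider" rule are assumptions for illustration; real markets on Filecoin and Sia consider further settings such as duration and collateral.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Ask:
    provider: str
    price_per_gb_month: float  # hypothetical unit
    free_gb: float


@dataclass
class Bid:
    user: str
    max_price_per_gb_month: float
    size_gb: float


def match(bid: Bid, asks: List[Ask]) -> Optional[Ask]:
    """Return the cheapest provider that meets the user's price limit and has enough space."""
    eligible = [
        ask for ask in asks
        if ask.price_per_gb_month <= bid.max_price_per_gb_month and ask.free_gb >= bid.size_gb
    ]
    return min(eligible, key=lambda ask: ask.price_per_gb_month, default=None)


asks = [Ask("provider-1", 5.0, 100), Ask("provider-2", 3.0, 10), Ask("provider-3", 4.0, 500)]
chosen = match(Bid("user-1", max_price_per_gb_month=4.5, size_gb=50), asks)
```

In this example the cheapest provider lacks space and another is too expensive, so the order goes to `provider-3`.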

Finally, Crust, Arweave and Swarm all let the protocol dictate the price for storage. Crust and Swarm allow for certain settings based on the users’ file storage requirements, while on Arweave files are always stored permanently.

Persistent Data Redundancy

Over time, nodes will leave these open public networks and when nodes disappear, so does the data they store. Networks must thus actively maintain a certain level of redundancy in the system. Sia and Storj achieve this through replenishing missing erasure encoded fragments by collecting a subset of fragments, rebuilding the underlying data and then re-encoding the file to re-create the missing fragments. In Sia, users must regularly log into the Sia client for the fragments to be replenished, because only the client is able to distinguish which data fragments belong to which piece of data and user. On Storj, Satellites take over the responsibility of always being online and regularly running data audits to replenish data fragments.

Arweave’s proof of access algorithm ensures that data is always regularly replicated across the entire network, while on Swarm data is replicated to nodes that are in proximity to one another. On Filecoin, if data disappears over time and the remaining file fragments fall below a certain threshold, the storage order is reintroduced to the storage market, allowing another storage miner to take on that storage order. Finally, Crust’s replenishment mechanism is currently in development.
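The decision of when to replenish erasure-encoded fragments can be sketched with simple threshold logic. The parameter names and the example numbers are hypothetical; each network chooses its own thresholds.

```python
def fragments_to_regenerate(total: int, required: int, surviving: int, repair_threshold: int) -> int:
    """Decide how many fragments to re-create for one file.

    total: fragments originally distributed
    required: minimum fragments needed to rebuild the file
    surviving: fragments still retrievable
    repair_threshold: repair starts once surviving drops to this level or below
    """
    if surviving < required:
        raise ValueError("too few fragments survive to rebuild the data")
    if surviving > repair_threshold:
        return 0                 # still healthy, no repair needed
    return total - surviving     # rebuild the file and re-create the missing fragments


healthy = fragments_to_regenerate(total=30, required=10, surviving=25, repair_threshold=20)
degraded = fragments_to_regenerate(total=30, required=10, surviving=18, repair_threshold=20)
```

Setting the repair threshold well above the minimum required count leaves a margin so that repair happens before the data becomes unrecoverable.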

Incentivizing Data Transmission

After data is stored securely over time, users will want to retrieve the data. Since bandwidth comes at a cost, storage nodes must be incentivized to deliver data when asked for it. Crust and Swarm use a debt and credit mechanism, where every node tracks its inbound and outbound traffic with each other node it interacts with. If one node only takes inbound traffic, but does not serve outbound traffic, it is deprioritized for future communication, which can affect its ability to accept new storage orders. Crust uses the IPFS Bitswap mechanism, while Swarm uses a proprietary protocol named SWAP. On Swarm’s SWAP protocol, the network allows nodes to pay off their debt (only accepting inbound traffic with insufficient outbound traffic) with postage stamps, which can be redeemed for their utility token.

This tracking of nodes’ generosity is also how Arweave ensures that data is transmitted when requested. In Arweave this mechanic is called wildfire, and nodes will prioritize peers that rank better and rationalize bandwidth usage accordingly. Finally, on Filecoin, Storj and Sia, users ultimately pay for bandwidth thus incentivizing nodes to deliver data when requested.
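The per-peer bookkeeping behind such debt and credit mechanisms can be sketched as a small ledger. This is a generic illustration, not the Bitswap, SWAP or wildfire implementation; the class name, the byte-based accounting and the debt limit are assumptions.

```python
from collections import defaultdict


class PeerLedger:
    """Tracks, per peer, how much more data we have sent than received."""

    def __init__(self, debt_limit: int):
        self.debt_limit = debt_limit      # maximum bytes a peer may owe us
        self.balance = defaultdict(int)   # positive balance: the peer owes us

    def record_sent(self, peer: str, n_bytes: int) -> None:
        self.balance[peer] += n_bytes

    def record_received(self, peer: str, n_bytes: int) -> None:
        self.balance[peer] -= n_bytes

    def should_serve(self, peer: str) -> bool:
        """Keep serving a peer only while its debt stays within the limit."""
        return self.balance[peer] <= self.debt_limit


ledger = PeerLedger(debt_limit=1_000)
ledger.record_sent("peer-1", 1_500)            # peer-1 has only been taking data
served_before = ledger.should_serve("peer-1")
ledger.record_received("peer-1", 800)          # peer-1 reciprocates
served_after = ledger.should_serve("peer-1")
```

A peer that only consumes bandwidth quickly exceeds the limit and is deprioritized until it reciprocates or settles its debt.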

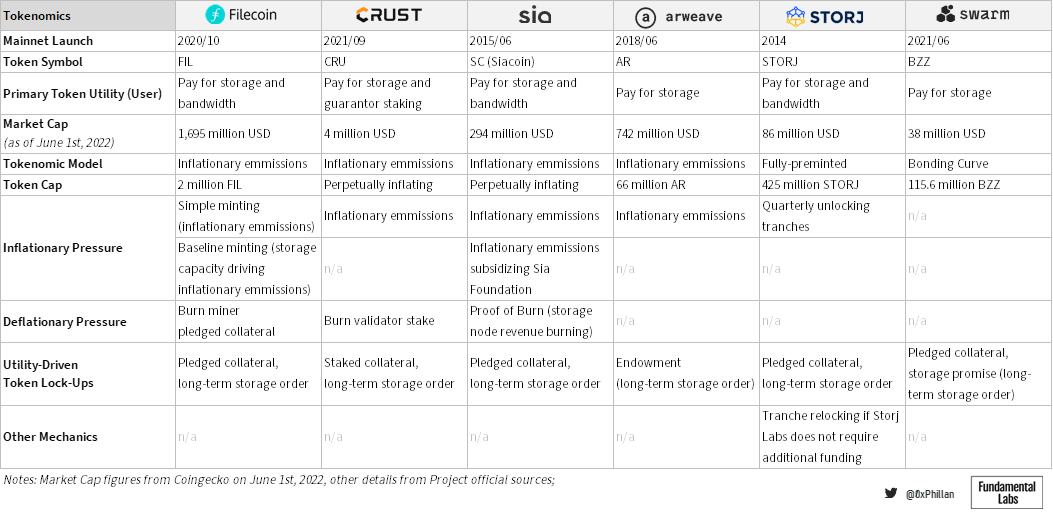

Tokenomics

The tokenomics design ensures network stability and also ensures that the network will be around for a long time, as ultimately data is only as permanent as the network. In the table below, a brief summary of the tokenomics design decisions can be found, as well as the inflationary and deflationary mechanisms embedded in the respective designs.

For a detailed introduction of each project’s tokenomics, view the full paper on Arweave or Crust Network.

Which network is the best?

It is impossible to say that one network is objectively better than another. When designing a decentralized storage network, there are countless trade-offs that must be considered. While Arweave is great for permanent storage of data, Arweave is not necessarily suitable to move Web2.0 industry players to Web3.0 – not all data needs to be permanent. However, there is a strong sub-sector of data that does require permanence: NFTs and dApps.

Ultimately, design decisions are made based on the purpose of the network.

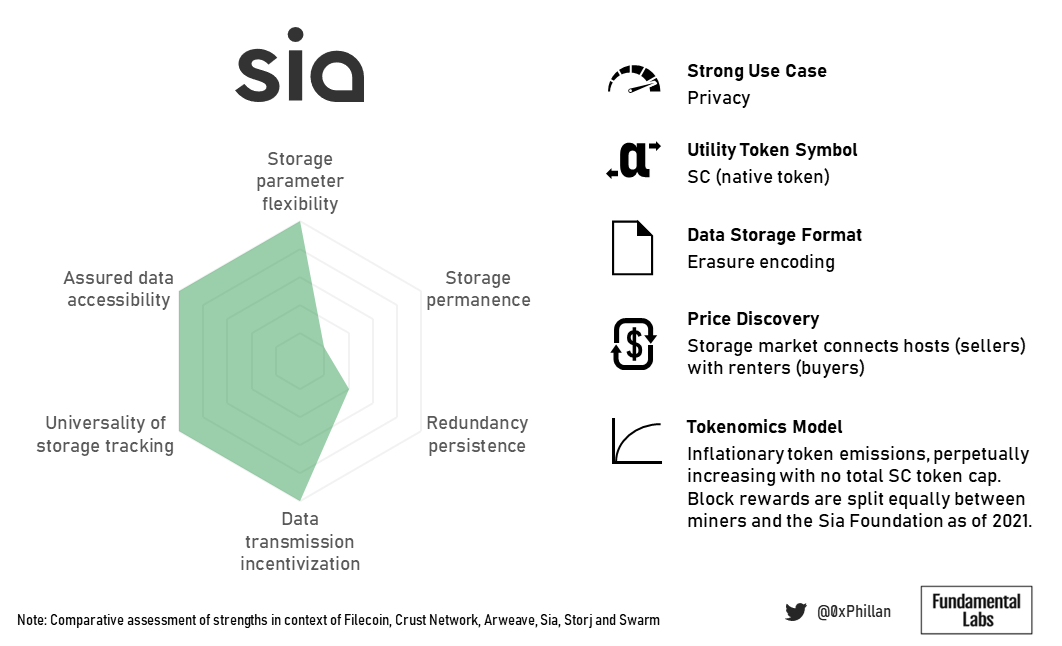

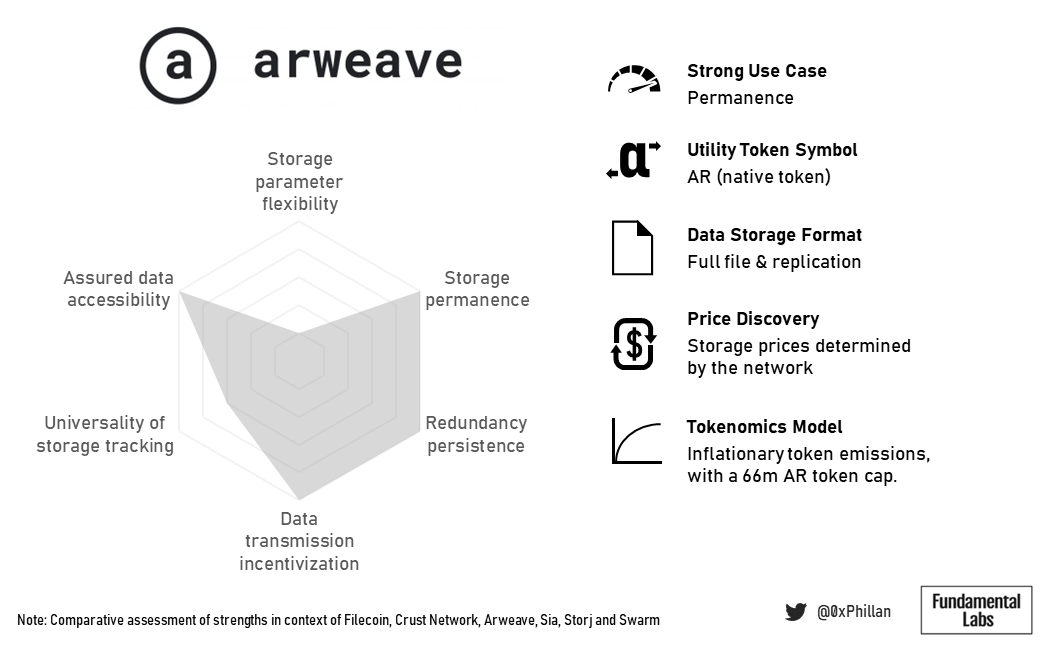

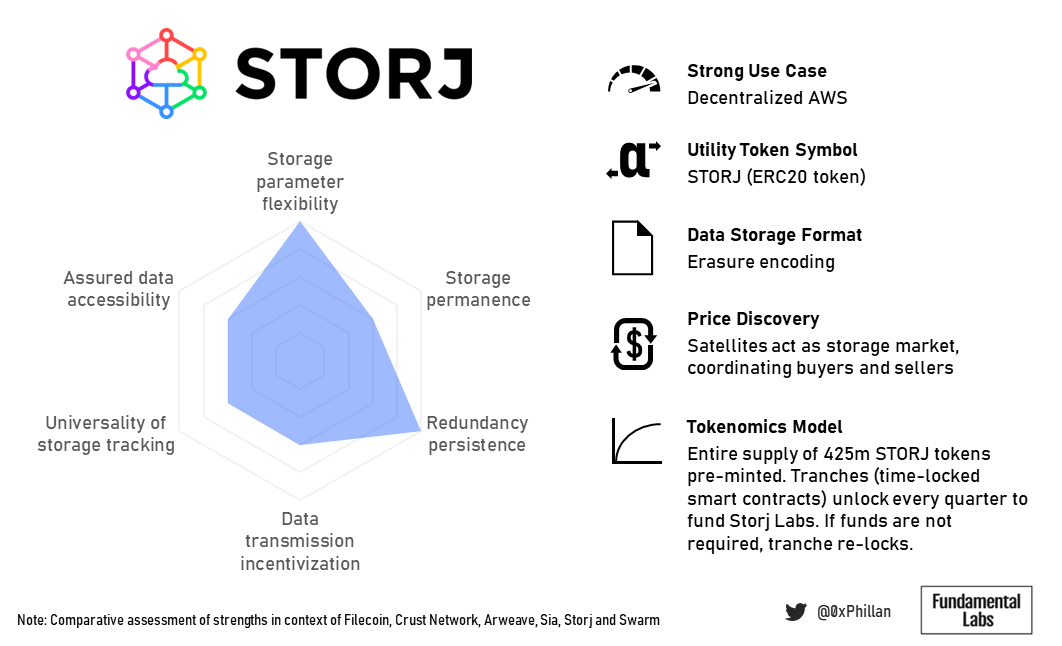

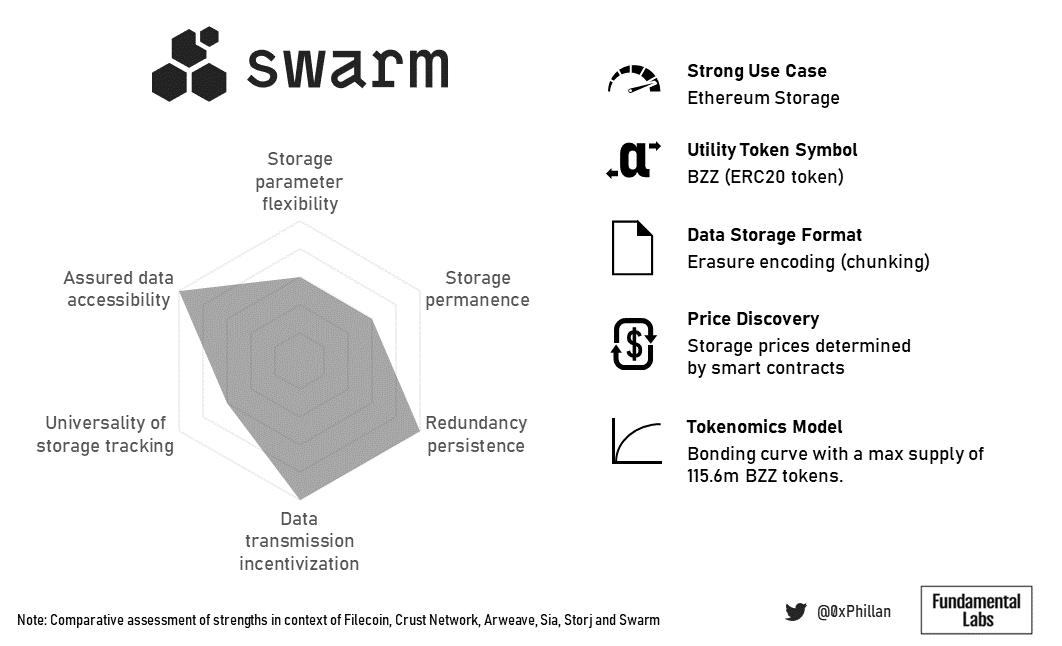

What follows are summary profiles of the various storage networks, comparing them with one another on the set of scales defined below. The scales used reflect comparative dimensions of these networks; however, it should be noted that the approaches to overcoming the challenges of decentralized storage are in many cases not better or worse, but instead merely reflect design decisions.

- Storage parameter flexibility: the extent to which users have control over file storage parameters

- Storage permanence: the extent to which file storage can achieve theoretical permanence by the network (i.e., without intervention)

- Redundancy persistence: the network’s ability to maintain data redundancy through replenishment or repair

- Data transmission incentivization: the extent to which the network ensures nodes generously transmit data

- Universality of storage tracking: the extent to which there is a consensus among nodes regarding the storage location of data

- Assured data accessibility: the ability of the network to ensure that a single actor in the storage process cannot remove access to files on the network

Higher scores indicate greater ability for each of the above.

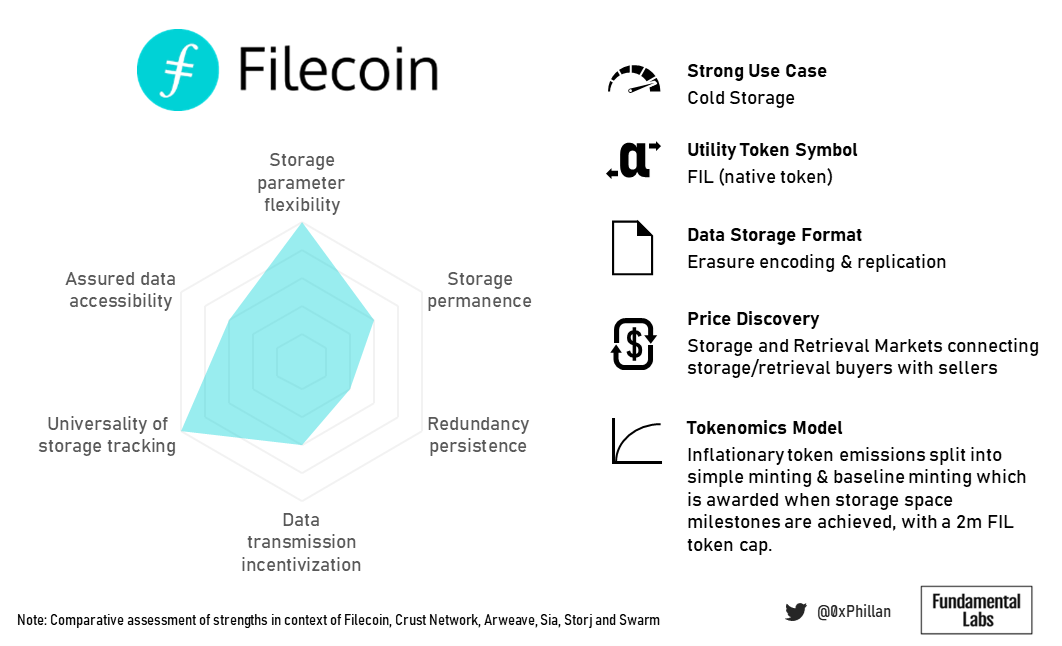

Filecoin’s tokenomics support growing the total network’s storage space, which serves to store large amounts of data in an immutable fashion. Furthermore, their storage algorithm lends itself more to data that is unlikely to change much over time (cold storage).

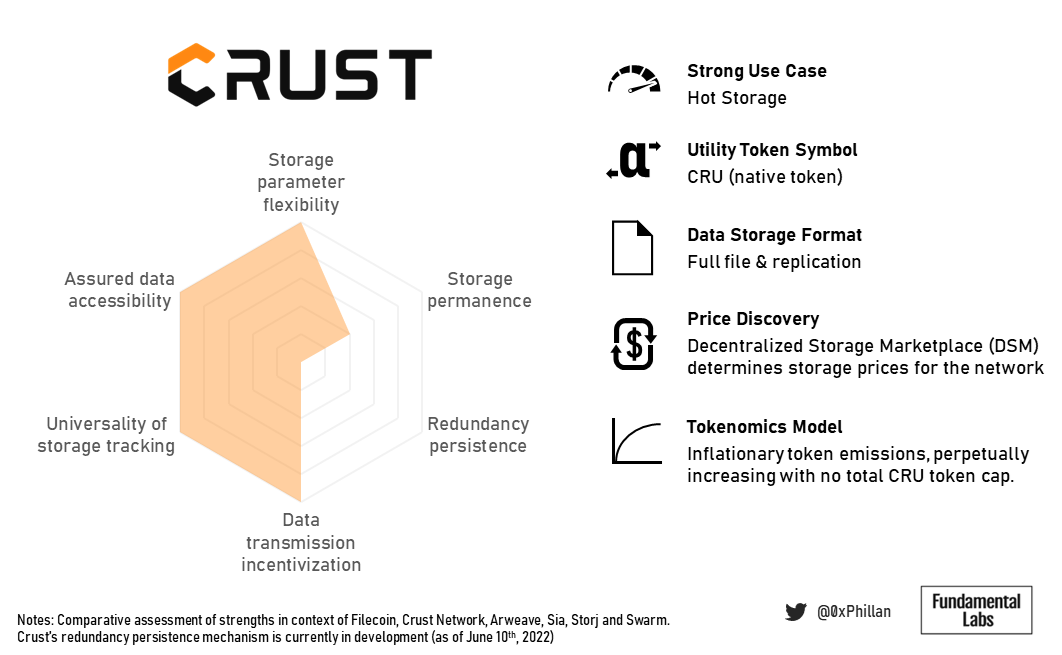

Crust’s tokenomics ensure hyper-redundancy with fast retrieval speeds, which makes it suitable for high-traffic dApps and for fast retrieval of the data of popular NFTs.

Crust scores lower on storage permanence, as without persistent redundancy its ability to deliver permanent storage is heavily impacted. Nonetheless, permanence can still be achieved through manually setting an extremely high replication factor.

Sia is all about privacy. Manual health restoration by the user is required because nodes do not know what data fragments they are storing or which data these fragments belong to. Only the data owner can reconstruct the original data from the fragments in the network.

In contrast, Arweave is all about permanence. That is also reflected in their endowment design, which makes storage more costly but also makes them a highly attractive choice for NFT storage.

Storj’s business model seems to heavily factor in their billing and payment approach: Amazon AWS S3 users are more familiar with monthly billing. By removing complex payment and incentive systems often found in blockchain-based systems, Storj Labs sacrifices some decentralization but significantly reduces the barrier to entry for their key target group of AWS users.

Swarm’s bonding curve model ensures storage costs remain relatively low over time as more data is stored on the network, and its proximity to the Ethereum blockchain makes it a strong contender to become the primary storage for more complex Ethereum-based dApps.

There is no single best approach for the various challenges decentralized storage networks face. Depending on the purpose of the network and the problems it is attempting to solve, it must make trade-offs on both technical and tokenomics aspects of network design.

In the end, the purpose of the network and the specific use-cases it attempts to optimize will determine the various design decisions.

The next frontier

Returning to the Web3 infrastructural pillars (consensus, storage, computation), we see that the decentralized storage space has a handful of strong players that have positioned themselves within the market for their specific use cases. This does not exclude new networks from optimizing existing solutions or occupying new niches, but this does raise the question: what’s next?

The answer is: computation. The next frontier in achieving a truly decentralized internet is decentralized computation. Currently, only a few solutions on the market offer trustless, decentralized computation that can power complex dApps and handle more complex computation at far lower cost than executing smart contracts on a blockchain.

Internet Computer (ICP) and Holochain (HOLO) are networks that occupy a strong position in the decentralized computation market at time of writing. Nonetheless, the computational space is not nearly as crowded as the consensus and storage spaces. Hence, strong competitors are bound to enter the market sooner or later and position themselves accordingly. One such competitor is Stratos (STOS). Stratos offers a unique network design through its decentralized data mesh technology.

We see decentralized computation, and specifically the network design of the Stratos network, as areas for future research.

Closing

Thank you for reading this research piece on decentralized storage. If you enjoy research that seeks to uncover the fundamental building blocks of our shared Web3 future, consider following @FundamentalLabs on Twitter.

Did I miss any good concepts, or other valuable information? Please reach out to me on Twitter @0xPhillan so we can enhance this research together.

Full piece available on Arweave and on Crust Network.