Abstract: Lumoz Decentralized AI enables developers worldwide to access top AI models and computing resources fairly while ensuring data privacy, bringing a paradigm shift to the AI industry.

Lumoz Decentralized AI: https://chat.lumoz.org

Introduction

As AI technology rapidly advances, the high cost of computing resources, concerns over data privacy, and the limitations of centralized architectures have become major barriers to AI adoption and innovation. Traditional AI computing relies on centralized servers controlled by large technology companies, leading to a monopoly on computing power, high costs for developers, and insufficient guarantees for user data security.

Lumoz Decentralized AI (LDAI) is leading a revolution in decentralized AI computing. By integrating blockchain technology, zero-knowledge proof (ZK) algorithms, and distributed computing architecture, LDAI has built a secure, cost-effective, and high-performance AI computing platform that fundamentally reshapes the traditional AI computing landscape. It enables developers worldwide to access top AI models and computing resources fairly while ensuring data privacy, bringing a paradigm shift to the AI industry.

In this article, we will explore LDAI's core technologies, architectural design, and diverse applications, analyzing how it is driving the AI industry toward a more open, fair, and trustworthy future.

1. What is Lumoz Decentralized AI (LDAI)?

LDAI is a decentralized AI platform designed to address three critical issues in traditional centralized AI ecosystems: single points of failure, high computing costs, and data privacy concerns. By integrating blockchain technology and zero-knowledge proof (ZK) algorithms, LDAI creates a new, trustworthy AI infrastructure.

LDAI provides a flexible computing architecture through a decentralized node network. Traditional AI systems typically rely on centralized server clusters, which are vulnerable to single points of failure that can cause service disruptions. In contrast, LDAI leverages a distributed node network to ensure high availability and reliability, with a guaranteed 99.99% uptime for its AI services.
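As a point of reference, an availability target maps directly to a yearly downtime budget. The Python sketch below does that arithmetic under the assumption of a 365-day year. The function and constant names are illustrative and are not part of any LDAI API.

```python
# Downtime budget implied by an availability target.
# Assumes a 365-day year; plain arithmetic, not an LDAI API.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def downtime_minutes_per_year(availability: float) -> float:
    """Return the maximum yearly downtime allowed by an availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for target in (0.999, 0.9999):
        minutes = downtime_minutes_per_year(target)
        print(f"{target:.2%} uptime -> {minutes:.1f} min/year")
```

With these inputs, 99.9% allows about 525.6 minutes of downtime per year, while 99.99% allows about 52.6 minutes (roughly 4.4 minutes per month).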

LDAI disrupts the monopoly on computing resources by offering global, distributed computing power. Through the Lumoz Chain, LDAI integrates computing resources from multiple countries, allowing developers to access top AI models such as DeepSeek and LLaMA at low or even zero cost. This democratization of computing resources removes the financial barriers associated with expensive hardware, accelerating AI innovation and adoption.

LDAI also addresses data privacy concerns. By combining zero-knowledge proofs, encryption, and decentralized storage protocols, LDAI keeps user data assets securely encrypted while users retain full sovereignty over their data. This three-layer protection mechanism not only enhances security but also safeguards user privacy, putting an end to the era of data colonization.
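The article does not specify which ZK construction LDAI uses. To illustrate what a zero-knowledge proof accomplishes (proving knowledge of a secret without revealing it, which is different from encrypting data), the sketch below implements the classic Schnorr identification protocol with deliberately tiny, insecure parameters. The parameter values and function names are assumptions chosen for illustration only.

```python
# Toy Schnorr identification protocol: the prover shows it knows x with
# y = g^x mod p without revealing x. Tiny parameters, illustration only;
# this is NOT LDAI's scheme and is not secure.
import secrets

P = 23  # prime modulus (toy size)
Q = 11  # prime order of the subgroup generated by G (Q divides P - 1)
G = 2   # generator of the order-Q subgroup, since pow(2, 11, 23) == 1


def commit(r: int) -> int:
    """Prover's first message t = g^r mod p, using a fresh random nonce r."""
    return pow(G, r, P)


def respond(r: int, c: int, x: int) -> int:
    """Prover's response s = r + c*x mod q to the verifier's challenge c."""
    return (r + c * x) % Q


def verify(y: int, t: int, c: int, s: int) -> bool:
    """Verifier accepts iff g^s == t * y^c (mod p)."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


if __name__ == "__main__":
    x = 7                             # prover's secret
    y = pow(G, x, P)                  # public value known to the verifier
    r = secrets.randbelow(Q)          # fresh nonce for this proof
    t = commit(r)
    c = 1 + secrets.randbelow(Q - 1)  # verifier's nonzero random challenge
    s = respond(r, c, x)
    print("proof accepted:", verify(y, t, c, s))
```

The verifier only ever sees `t`, `c`, and `s`, none of which reveals `x`. Production systems use groups of cryptographic size and usually make the exchange non-interactive with the Fiat-Shamir heuristic.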

2. Lumoz Decentralized AI Architecture

The architecture of LDAI is designed with decentralization, modularity, and flexibility in mind, ensuring efficient operation in high-concurrency and large-scale computing scenarios. The key components of the LDAI architecture are as follows:

2.1 Architectural Hierarchy

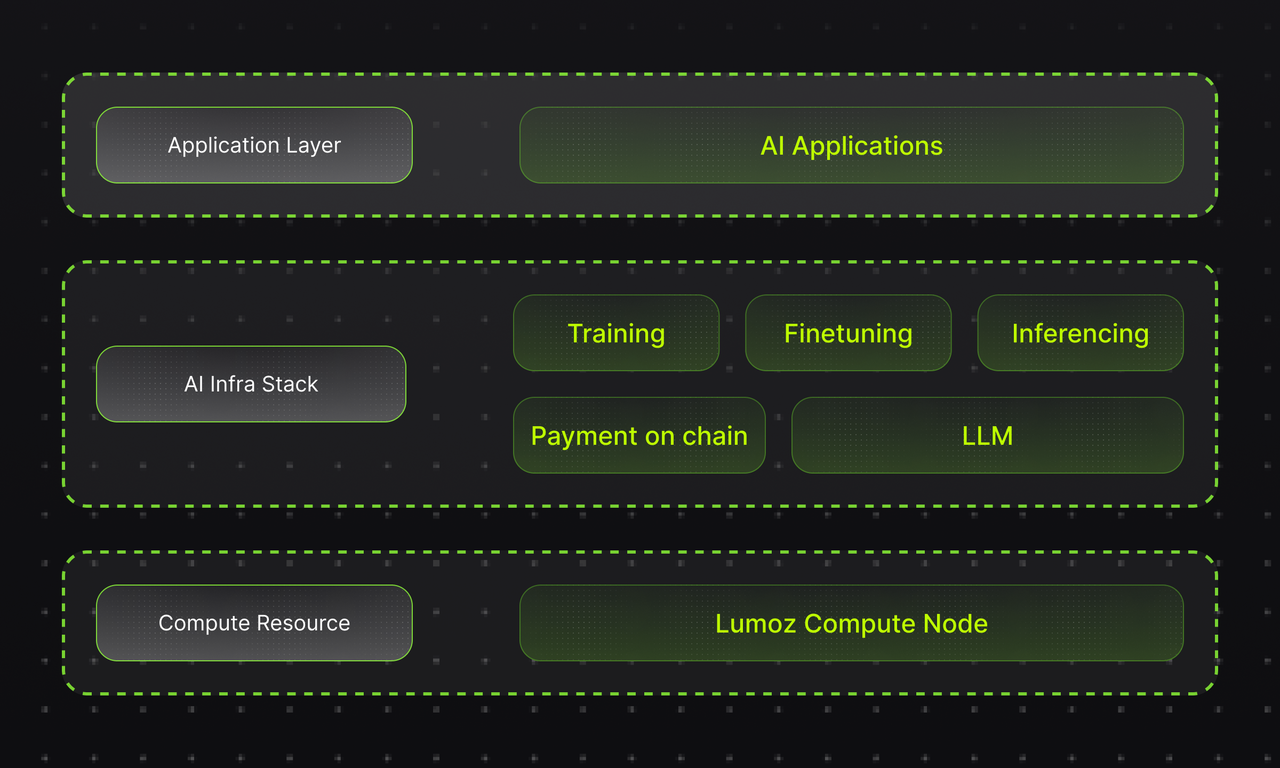

LDAI's architecture is divided into three main layers: the Application Layer, the AI Infrastructure Layer, and the Computing Resource Layer.

- Application Layer: This layer handles interactions with AI applications, including model training, fine-tuning, inference, and on-chain payments. It provides standardized APIs, allowing developers to easily integrate LDAI’s computing resources and build various AI applications.

- AI Infrastructure Layer: This layer provides the core training, fine-tuning, and inference functions and supports efficient AI task scheduling. Blockchain technology plays a crucial role in ensuring the stability and transparency of AI execution in a decentralized environment.

- Computing Resource Layer: LDAI delivers decentralized computing power through a combination of Lumoz Compute Nodes and computing clusters. Each compute node not only provides computing services but also participates in resource scheduling and task allocation. This layer is designed to ensure the elasticity and scalability of AI computing.

2.2 Architectural Design

LDAI's computing resources are scheduled through a decentralized cluster management mechanism. Each compute node collaborates via the Lumoz Chain, maintaining efficient communication and resource sharing through decentralized protocols. This architecture enables the following key functionalities:

- Node Management: Oversees node participation in the network, including node registration, exit management, and the administration of user rewards and penalties.

- Task Scheduling: Dynamically allocates AI tasks to different compute nodes based on their load, optimizing resource utilization.

- Model Management: Stores popular models as image snapshots to accelerate user onboarding and model computation.

- Node Monitoring: Continuously tracks the health status and workload of each node in the cluster, ensuring high system availability and stability.
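The node management and node monitoring functions above can be pictured as a registry that tracks each node's lifecycle, balance, and last heartbeat. The sketch below is a hypothetical, in-memory Python illustration. The class names, the 30-second default heartbeat timeout, and the balance field are assumptions, not details of the Lumoz Chain.

```python
# Hypothetical in-memory node registry: registration, exit, reward/penalty
# bookkeeping, and heartbeat-based health checks. Not a Lumoz Chain API.
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    gpus: int
    balance: float = 0.0   # accumulated rewards minus penalties
    load: float = 0.0      # reported fraction of capacity in use (0.0 to 1.0)
    last_heartbeat: float = field(default_factory=time.monotonic)


class NodeRegistry:
    def __init__(self, heartbeat_timeout: float = 30.0):
        self.heartbeat_timeout = heartbeat_timeout  # seconds of silence allowed
        self.nodes: dict[str, Node] = {}

    def register(self, node_id: str, gpus: int) -> None:
        if node_id in self.nodes:
            raise ValueError(f"{node_id} is already registered")
        self.nodes[node_id] = Node(node_id, gpus)

    def deregister(self, node_id: str) -> Node:
        # Remove the node and return its final state, e.g. for settling its balance.
        return self.nodes.pop(node_id)

    def heartbeat(self, node_id: str, load: float, now: float | None = None) -> None:
        node = self.nodes[node_id]
        node.load = load
        node.last_heartbeat = time.monotonic() if now is None else now

    def reward(self, node_id: str, amount: float) -> None:
        self.nodes[node_id].balance += amount

    def penalize(self, node_id: str, amount: float) -> None:
        self.nodes[node_id].balance -= amount

    def healthy_nodes(self, now: float | None = None) -> list[Node]:
        # A node is healthy if it has sent a heartbeat within the timeout window.
        now = time.monotonic() if now is None else now
        return [n for n in self.nodes.values()
                if now - n.last_heartbeat <= self.heartbeat_timeout]
```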

Core Architecture and Resource Scheduling

LDAI’s core architecture is based on multiple computing clusters, each consisting of multiple nodes. These nodes can be GPU computing devices or a mix of compute and storage nodes. Each node operates independently but collaborates through LDAI’s decentralized scheduling mechanism to complete tasks collectively. The cluster employs adaptive algorithms that dynamically adjust computing resources based on load conditions, keeping each node’s workload balanced and improving overall computing efficiency.

LDAI uses an intelligent scheduling system that automatically selects the best node for computation based on task requirements, real-time resource availability, and network bandwidth. This dynamic scheduling capability ensures that the system can flexibly handle complex computational tasks without manual intervention.
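One way to express this kind of scheduler is to filter out nodes that cannot satisfy a task's requirements and then score the remaining candidates. The sketch below assumes hypothetical `NodeStatus` and `Task` records and an arbitrary 0.7/0.3 weighting between spare capacity and bandwidth. LDAI's actual scheduling policy is not described in this article.

```python
# Hypothetical node-selection heuristic: keep only nodes that meet the task's
# requirements, then pick the best by a weighted score of spare capacity and
# network bandwidth. Fields and weights are illustrative assumptions.
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeStatus:
    node_id: str
    free_gpus: int
    free_gpu_mem_gb: float
    load: float            # 0.0 (idle) to 1.0 (saturated)
    bandwidth_mbps: float


@dataclass
class Task:
    gpus: int
    gpu_mem_gb: float
    min_bandwidth_mbps: float = 0.0


def score(node: NodeStatus, max_bw: float,
          w_load: float = 0.7, w_bw: float = 0.3) -> float:
    # Weighted sum: spare capacity counts most, normalized bandwidth second.
    return w_load * (1.0 - node.load) + w_bw * (node.bandwidth_mbps / max_bw)


def select_node(task: Task, nodes: list[NodeStatus]) -> NodeStatus | None:
    eligible = [n for n in nodes
                if n.free_gpus >= task.gpus
                and n.free_gpu_mem_gb >= task.gpu_mem_gb
                and n.bandwidth_mbps >= task.min_bandwidth_mbps]
    if not eligible:
        return None  # no node can run the task right now
    max_bw = max(n.bandwidth_mbps for n in eligible) or 1.0  # avoid dividing by 0
    return max(eligible, key=lambda n: score(n, max_bw))
```

A weighted score keeps the policy easy to tune: raising `w_bw` favors data-heavy tasks that are sensitive to network throughput.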

Efficient Containerized Deployment and Dynamic Resource Management

To further enhance the flexibility and utilization of computing resources, LDAI utilizes containerization technology. Containers can be quickly deployed and executed across multiple computing environments and dynamically adjust resources based on task demands. Through containerization, LDAI decouples computing tasks from the underlying hardware, reducing reliance on specific hardware and improving system portability and elasticity.

LDAI’s containerized platform supports dynamic GPU resource allocation and scheduling. Specifically, containers can adjust GPU resource usage in real time based on task needs, preventing bottlenecks caused by uneven resource distribution. The platform also supports load balancing and resource sharing between containers, using efficient scheduling algorithms to process multiple tasks concurrently while ensuring that each task receives an appropriate share of computational resources.
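Dynamic GPU allocation across containers can be sketched as a per-node memory pool. The hypothetical allocator below places each container on the GPU with the most free memory that can still fit it (worst-fit placement, chosen here because it spreads load across devices) and lets a container grow or shrink its reservation in place. All names are illustrative and do not describe LDAI's container runtime.

```python
# Hypothetical GPU memory allocator for containers on a single node.
# Worst-fit placement: each request goes to the fitting GPU with the most
# free memory, spreading load across devices. Illustrative only.
from __future__ import annotations


class GpuPool:
    def __init__(self, capacities_gb: list[float]):
        self.free = list(capacities_gb)                       # free GB per GPU index
        self.assignments: dict[str, tuple[int, float]] = {}  # container -> (gpu, GB)

    def allocate(self, container_id: str, mem_gb: float) -> int | None:
        candidates = [i for i, f in enumerate(self.free) if f >= mem_gb]
        if not candidates:
            return None  # caller may queue the container or scale out
        gpu = max(candidates, key=lambda i: self.free[i])
        self.free[gpu] -= mem_gb
        self.assignments[container_id] = (gpu, mem_gb)
        return gpu

    def release(self, container_id: str) -> None:
        gpu, mem_gb = self.assignments.pop(container_id)
        self.free[gpu] += mem_gb

    def resize(self, container_id: str, new_mem_gb: float) -> bool:
        # Grow or shrink a reservation in place if its GPU has enough room.
        gpu, old = self.assignments[container_id]
        if self.free[gpu] + old < new_mem_gb:
            return False
        self.free[gpu] += old - new_mem_gb
        self.assignments[container_id] = (gpu, new_mem_gb)
        return True
```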

Elastic Computing and Auto-Scaling

The LDAI platform also introduces an auto-scaling mechanism: the system automatically scales the cluster up or down as computational demand fluctuates. For example, when certain tasks require extensive computation, LDAI can automatically launch more nodes to share the load; conversely, when the load is low, the system shrinks the cluster to avoid unnecessary resource consumption. This elastic computing capability ensures that the system makes efficient use of its computing resources when handling large-scale tasks, lowering overall operational costs.
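A common way to implement this behavior is a target-utilization rule. The sketch below borrows the formula used by the Kubernetes Horizontal Pod Autoscaler and applies it to a node count. Whether LDAI uses this rule is an assumption, and the tolerance and bounds are illustrative defaults.

```python
# Target-utilization auto-scaling rule, modeled on the Kubernetes Horizontal
# Pod Autoscaler formula:
#   desired = ceil(current * current_utilization / target_utilization)
# Applying it to LDAI node counts is an assumption for illustration.
import math


def desired_nodes(current: int, current_util: float, target_util: float,
                  min_nodes: int = 1, max_nodes: int = 100,
                  tolerance: float = 0.1) -> int:
    if current < 1 or target_util <= 0:
        raise ValueError("need at least one node and a positive target")
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    desired = math.ceil(current * ratio)
    return max(min_nodes, min(max_nodes, desired))  # clamp to configured bounds
```

For example, 4 nodes running at 80% utilization against a 50% target scale out to 7 nodes, while 8 nodes at 25% utilization scale in to 4.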

Highly Customizable and Optimized

LDAI’s decentralized architecture is highly customizable. Different AI applications may require different hardware configurations and computing resources, and LDAI allows users to flexibly customize the hardware resources and configurations of nodes according to their needs. For example, some tasks may require high-performance GPU computing, while others may require large amounts of storage or data processing power. LDAI can dynamically allocate resources based on these needs to ensure efficient task execution.

Additionally, the LDAI platform integrates a self-optimization mechanism. The system continuously optimizes scheduling algorithms and resource allocation strategies based on historical task execution data, improving long-term operational efficiency. This optimization process is automated, requiring no manual intervention, significantly reducing operational costs and enhancing resource utilization efficiency.
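As a simplified picture of learning from historical execution data, the hypothetical model below keeps an exponential moving average of each node's task durations and prefers the node with the lowest estimate. The smoothing factor and class names are assumptions; the article does not describe LDAI's actual optimization method.

```python
# Hypothetical self-tuning estimator: an exponential moving average (EMA) of
# each node's observed task durations, used to rank nodes for future tasks.
# The default smoothing factor is an illustrative assumption.
from __future__ import annotations

ALPHA = 0.3  # weight of the newest observation (0 < ALPHA <= 1)


class DurationModel:
    def __init__(self, alpha: float = ALPHA):
        self.alpha = alpha
        self.estimates: dict[str, float] = {}  # node_id -> expected seconds

    def observe(self, node_id: str, duration_s: float) -> None:
        prev = self.estimates.get(node_id)
        if prev is None:
            self.estimates[node_id] = duration_s  # first sample seeds the EMA
        else:
            self.estimates[node_id] = (self.alpha * duration_s
                                       + (1 - self.alpha) * prev)

    def fastest(self, candidates: list[str]) -> str | None:
        # Choose the candidate with the lowest expected duration, if any is known.
        known = [c for c in candidates if c in self.estimates]
        return min(known, key=self.estimates.__getitem__) if known else None
```

A real scheduler would also occasionally try nodes with no history (or stale estimates) so that new nodes get a chance to build a record.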

3. Lumoz Decentralized AI Application Scenarios

LDAI's decentralized architecture supports a wide range of applications across many fields. Below are some typical scenarios:

AI Model Training

AI model training typically requires large amounts of computing resources, and LDAI provides a cost-effective and scalable platform through decentralized compute nodes and flexible resource scheduling. On LDAI, developers can distribute training tasks across globally distributed nodes, optimizing resource utilization while significantly reducing hardware procurement and maintenance costs.
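Distributing a training job usually starts with splitting the data. The sketch below shows data-parallel sharding, where each node receives a near-equal, contiguous range of sample indices. It is a generic illustration that assumes equally capable nodes, uses a hypothetical function name, and omits the gradient synchronization a real training run requires.

```python
# Hypothetical data-parallel sharding: split sample indices into near-equal,
# contiguous ranges, one per node. Gradient synchronization is omitted.
from __future__ import annotations


def shard_ranges(num_samples: int, num_nodes: int) -> list[range]:
    if num_nodes < 1:
        raise ValueError("num_nodes must be >= 1")
    base, extra = divmod(num_samples, num_nodes)
    ranges, start = [], 0
    for rank in range(num_nodes):
        size = base + (1 if rank < extra else 0)  # first `extra` nodes get one more
        ranges.append(range(start, start + size))
        start += size
    return ranges
```

For example, 10 samples across 3 nodes yield shards of 4, 3, and 3 samples.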

Fine-tuning and Inference

In addition to training, fine-tuning and inference of AI models also require efficient computing power. LDAI’s computing resources can be dynamically adjusted to meet the real-time demands of fine-tuning and inference tasks. On the LDAI platform, AI model inference can be performed more quickly while maintaining high precision and stability.

Distributed Data Processing

LDAI’s decentralized storage and privacy computing capabilities make it particularly effective in big data analytics. Traditional big data processing platforms often rely on centralized data centers, which face storage bottlenecks and privacy risks. In contrast, LDAI ensures data privacy through distributed storage and encrypted computation, while also making data processing more efficient.
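The article does not detail LDAI's encrypted-computation scheme. As a stand-in, the sketch below uses additive secret sharing, a standard technique for computing an aggregate over private inputs: each value is split into random shares, each party sums the shares it holds, and only the final total is revealed. The modulus and function names are illustrative choices.

```python
# Additive secret sharing for a privacy-preserving sum. A swapped-in
# illustration of "encrypted computation", not LDAI's protocol.
from __future__ import annotations

import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo this prime


def split(value: int, n_parties: int) -> list[int]:
    """Split value into n random shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def private_sum(values: list[int], n_parties: int = 3) -> int:
    # Each data owner splits its value; party j receives share j from every owner.
    all_shares = [split(v, n_parties) for v in values]
    # Each party adds up the shares it holds; no single share reveals a value.
    partial = [sum(s[j] for s in all_shares) % MODULUS for j in range(n_parties)]
    # Combining the partial sums reveals only the total.
    return sum(partial) % MODULUS
```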

Smart Contracts and Payments

Built on blockchain technology, LDAI supports decentralized payments on the platform, for example to pay for AI computation tasks. This smart contract-based payment system ensures transparency and security in transactions while reducing the cost and complexity of cross-border payments.
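The transparency of a payment contract comes from rules that every party can check. The hypothetical Python state machine below sketches an escrow flow that a compute-payment contract might enforce: funds are locked when the task is created, then released to the node on a verified result or refunded to the payer, and each escrow can settle only once. It is not an actual contract, chain API, or LDAI interface; amounts are integers in an assumed smallest token unit.

```python
# Hypothetical escrow flow for paying for a compute task, written as a plain
# Python state machine. Not a real smart contract, chain API, or LDAI interface.
from __future__ import annotations

from enum import Enum, auto


class State(Enum):
    FUNDED = auto()
    COMPLETED = auto()
    REFUNDED = auto()


class TaskEscrow:
    def __init__(self, payer: str, node: str, amount: int):
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.payer, self.node, self.amount = payer, node, amount
        self.state = State.FUNDED          # payer's funds are locked on creation
        self.balances: dict[str, int] = {}

    def _settle(self, recipient: str, new_state: State) -> None:
        if self.state is not State.FUNDED:
            raise RuntimeError(f"escrow already settled: {self.state.name}")
        self.balances[recipient] = self.balances.get(recipient, 0) + self.amount
        self.state = new_state

    def confirm_result(self) -> None:
        # Called once the task result is verified: release funds to the node.
        self._settle(self.node, State.COMPLETED)

    def refund(self) -> None:
        # Called if the task fails or times out: return funds to the payer.
        self._settle(self.payer, State.REFUNDED)
```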

AI Application Development

Lumoz’s decentralized architecture also provides robust support for AI application development. Developers can create and deploy various AI applications on Lumoz’s computing platform, enabling seamless operation of applications ranging from Natural Language Processing (NLP) to Computer Vision (CV) on the LDAI platform.

4. Conclusion

Lumoz Decentralized AI, through its innovative decentralized computing architecture combined with blockchain and zero-knowledge proof technology, provides a secure, transparent, and decentralized platform for global AI developers. LDAI breaks down the barriers of traditional AI computing, enabling every developer to fairly access high-performance computing resources while safeguarding user data privacy and security.

As LDAI continues to evolve, its application scenarios in the AI field will expand, driving global AI technology innovation and adoption. Lumoz’s decentralized AI platform will serve as the cornerstone of the future intelligent society, helping developers worldwide build a more open, fair, and trustworthy AI ecosystem.