Shh… Don’t spill my data

1. Data Privacy Matters

1.1 The Double-Edged Sword of AI

Since the launch of ChatGPT, powered by GPT-3.5, on November 30, 2022, the world has been abuzz with talk of AI. AI services have become so deeply embedded in daily life that they’re now almost indispensable. Major tech companies like Google and Microsoft are expected to exceed $250 billion in AI-related spending by 2025. Even as I write this, I’m relying on AI tools for research and translation.

While AI undeniably promises massive productivity gains and immense wealth for humanity, it’s equally critical that we manage this unprecedented technology wisely and minimize its potential downsides. If humanity fails to control AI—and, further down the line, AGI—society could collapse entirely, as warned by Leopold Aschenbrenner in “Situational Awareness.”

1.2 AI Safety

Moreover, if we move in the direction of making machines which learn and whose behavior is modified by experience, we must face the fact that every degree of independence we give the machine is a degree of possible defiance of our wishes. - Norbert Wiener (1949)

AI safety focuses on ensuring stability and mitigating potential risks throughout the development, deployment, and application of AI technologies. While the benefits of AI are vast, numerous experts have voiced concerns about its dangers—an ongoing topic of debate for years.

From Geoffrey Hinton, the “Godfather of AI” and 2024 Nobel Physics laureate, to figures like Elon Musk, Bill Gates, and Stephen Hawking, leaders across various fields have warned about the risks of AI. Even within OpenAI, tensions between rapid AI advancements and robust safety measures led to the resignations of Ilya Sutskever and Mira Murati in 2024.

As Delphi Digital highlighted in “DeAI I: The Tower & the Square,” AI tends to reinforce traditional hierarchies (the "tower"). For this reason, achieving AI safety requires interdisciplinary efforts spanning social systems, technology, and policy to prevent misuse or malicious applications. So, what does AI safety entail?

- Robustness & Reliability: Ensuring AI systems operate consistently and do not fail when encountering unexpected inputs.
- Fairness & Bias Mitigation: Identifying and eliminating biases during training to produce equitable outcomes.
- Transparency & Explainability: Enhancing clarity about how AI generates outputs, making processes understandable to users.
- Accountability & Governance: Establishing clear responsibilities for AI’s development and use, and building governance systems to address unforeseen issues.
- Privacy & Security: Protecting user data and safeguarding AI systems from malicious attacks.
- Alignment with Human Values: Designing AI to act in alignment with human ethics and values, minimizing risks of harm.

1.3 Why Data Privacy Matters

Among the various aspects of AI safety, this article focuses on data privacy. While most people understand the importance of protecting their data, many remain unaware of the risks associated with its misuse. Instead of safeguarding their personal information, users often place blind trust in big tech platforms, exposing vast amounts of private data online. This leaves them vulnerable to cyberattacks, with data breaches escalating rapidly year by year.

In the age of AI, data is indispensable. Developing more advanced and specialized AI models requires an immense volume of high-quality data. As AI evolves, the importance of data privacy will only grow, for several key reasons:

- Cases of unauthorized data usage are already emerging. For example, OpenAI and Microsoft have faced lawsuits for allegedly exploiting vast amounts of user data without consent. Meta has faced similar accusations.
- Many existing AI models have been trained on nearly all accessible online data. To develop even more powerful models, the demand for additional data, whether synthetic or personal, keeps increasing.
- We are now entering the era of AI agents, where personalized AI agents tailored to individuals will boost productivity. However, these agents require training on users' personal data. Unless this training happens offline in local environments, it will inevitably raise serious concerns about data privacy.

The fundamental challenge for the AI industry is clear: How can we train models on personal data without infringing on privacy? While laws and regulations aim to mitigate these risks, the numerous reported breaches indicate that prevention at the technological level is the most effective solution.

2. Enter Nillion

2.1 Overview

Nillion Network provides a solution that leverages various Privacy-Enhancing Technologies (PETs) to securely encrypt and store user data, while also enabling computations on the encrypted data. For instance, in the context of AI training, Nillion allows sensitive and confidential personal information to be used safely for training AI models without ever exposing the data to external parties.

Traditionally, processing high-value data such as personal information involved decrypting the encrypted data, performing computations, and then re-encrypting it. This approach was not only inefficient but also introduced security risks during the decryption process. Nillion overcomes these limitations by utilizing PETs like Multi-Party Computation (MPC), enabling computations on encrypted data without needing to decrypt it, thereby maintaining security and efficiency.

2.2 What Are PETs?

Privacy-Enhancing Technologies are tools designed to protect data while allowing secure computation. Some of the most prominent PETs in the blockchain ecosystem include Zero-Knowledge Proofs (ZK), Fully Homomorphic Encryption (FHE), Trusted Execution Environments (TEE), and Multi-Party Computation (MPC). While Nillion initially focuses on MPC, it plans to integrate additional PETs in the future. Let’s explore the unique features and differences among these technologies.

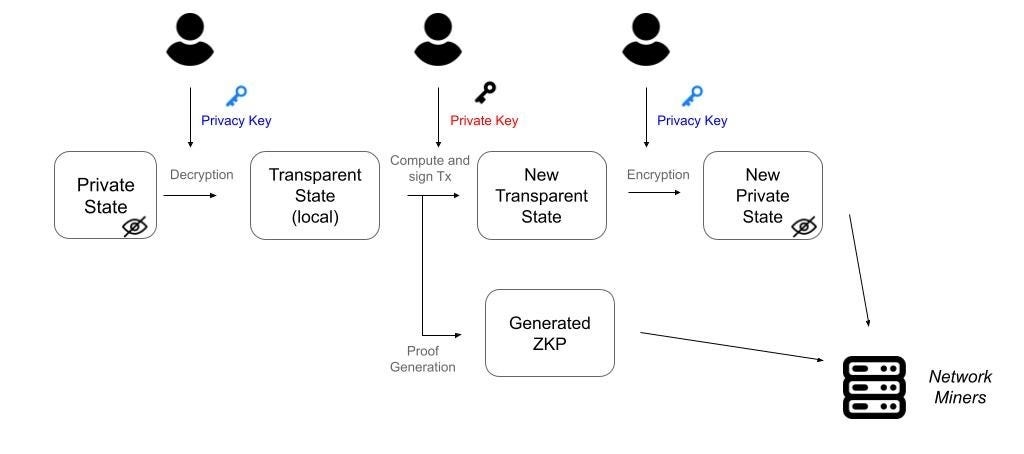

2.2.1 ZK

Zero-Knowledge technology focuses on proving the validity of data without revealing the data itself. A data owner can decrypt their data locally, make changes, re-encrypt it, and then generate a zero-knowledge proof (ZKP) to demonstrate the validity of these changes to another party without exposing the actual data. In the blockchain ecosystem, Zero-Knowledge technology is applied in various ways:

- Private Transactions: Users can conduct transactions involving their assets privately, processing them locally and submitting a ZKP to the network. This allows for transactions without exposing wallet balances or transaction history (e.g., Zcash, Iron Fish).
- Scalability Solutions: ZK technology enhances blockchain scalability by generating single, succinct ZKPs for complex off-chain computations. These proofs can be verified on-chain, enabling efficient execution of operations that would otherwise be impractical on-chain (e.g., ZK rollups, ZK coprocessors).
- Identity Verification: Sensitive personal data, such as identity information, can be validated without being disclosed to other parties (e.g., Polygon ID).
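To make "proving something without revealing it" concrete, here is a minimal sketch of a Schnorr-style proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. It is not the proof system used by any of the projects above. The tiny group parameters (`P`, `Q`, `G`) and the helper names `challenge`, `prove`, and `verify` are illustrative assumptions and provide no real security.

```python
import hashlib
import secrets

# Toy group (assumption, insecure): G = 2 generates a subgroup of prime order Q = 11 in Z_23*.
P, Q, G = 23, 11, 2


def challenge(*values):
    """Derive the challenge by hashing the public transcript (Fiat-Shamir)."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret_x):
    """Prove knowledge of secret_x such that public_y = G**secret_x mod P."""
    public_y = pow(G, secret_x, P)
    r = secrets.randbelow(Q)           # one-time random nonce
    t = pow(G, r, P)                   # commitment to the nonce
    c = challenge(G, public_y, t)
    s = (r + c * secret_x) % Q         # response; r hides secret_x
    return public_y, (t, s)


def verify(public_y, proof):
    """Check G**s == t * public_y**c mod P without ever seeing secret_x."""
    t, s = proof
    c = challenge(G, public_y, t)
    return pow(G, s, P) == (t * pow(public_y, c, P)) % P


public_y, proof = prove(secret_x=7)
assert verify(public_y, proof)
```

The verifier only handles `public_y` and the pair `(t, s)`. The secret exponent never leaves the prover, which is the property the use cases above rely on.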

2.2.2 FHE

Fully Homomorphic Encryption (FHE) enables data owners to delegate computations on their data to a third party without revealing the actual data. In this process, the third party can perform operations directly on the encrypted data without needing to decrypt it. The resulting encrypted output can then be decrypted by the data owner using their private key. While FHE is a promising technology, its maturity level in the blockchain ecosystem remains relatively low. Notable projects exploring FHE include:

- Fhenix: An Ethereum Layer 2 solution based on FHE technology. It allows developers to create encrypted smart contracts using Solidity and execute encrypted computations on data.
- Zama: Zama’s fhEVM coprocessor enables developers to easily build confidential smart contracts on Ethereum.
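The idea of computing on ciphertexts can be illustrated with a much simpler scheme than FHE. The sketch below uses unpadded textbook RSA, which is only partially homomorphic (it supports multiplication but not addition) and is not what Fhenix or Zama use. The key values and function names are assumptions chosen for illustration, and the key is far too small to be secure.

```python
# Toy textbook-RSA key built from p = 61 and q = 53 (illustrative assumption, insecure).
N, E, D = 3233, 17, 2753


def encrypt(message):
    """Encrypt with the public key (E, N)."""
    return pow(message, E, N)


def decrypt(ciphertext):
    """Decrypt with the private exponent D."""
    return pow(ciphertext, D, N)


def multiply_encrypted(c1, c2):
    """Third-party step: multiply two ciphertexts without decrypting either one."""
    return (c1 * c2) % N


# The data owner encrypts 6 and 7; the third party only ever sees ciphertexts.
encrypted_product = multiply_encrypted(encrypt(6), encrypt(7))

# Only the key holder can decrypt, and the result is the product of the plaintexts.
assert decrypt(encrypted_product) == 42
```

A fully homomorphic scheme extends this property to both addition and multiplication, which is what makes arbitrary computation on encrypted data possible.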

2.2.3 TEE

A Trusted Execution Environment (TEE) is a secure, hardware-based area designed to process sensitive data without exposing it externally. Through an attestation process, an outside party can verify that specific code is running inside the TEE. Blockchain projects leveraging TEE technology include:

- TEE-Boost: Flashbots’ TEE-Boost removes centralized relays in MEV-Boost by ensuring builders use verified software.
- TEE Proof for Rollups: Some zk rollups, including Taiko, utilize TEE proofs as part of their multi-proof systems. TEE proofs verify the validity of computations through attestation, making them suitable for rollup proofs.
- Privacy: Secret Network uses Intel SGX CPUs in its validators to process user transactions privately, ensuring secure handling of sensitive data.
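The attestation idea can be sketched in a few lines: the enclave reports a hash ("measurement") of the code it runs, and a verifier compares it with the hash of the code it expects. This is a simplified model, not a real attestation format. Actual TEEs sign reports with vendor-rooted asymmetric keys, whereas this sketch swaps in an HMAC with a hypothetical shared `HARDWARE_KEY`, and all function names are assumptions.

```python
import hashlib
import hmac

# Stand-in for a key fused into the hardware (hypothetical; real TEEs use asymmetric keys).
HARDWARE_KEY = b"hypothetical-device-key"


def measure(code):
    """Measurement: a hash of the code loaded into the enclave."""
    return hashlib.sha256(code).hexdigest()


def attest(code):
    """Enclave side: report the measurement together with an authentication tag."""
    measurement = measure(code)
    tag = hmac.new(HARDWARE_KEY, measurement.encode(), hashlib.sha256).hexdigest()
    return {"measurement": measurement, "tag": tag}


def verify_report(report, expected_code):
    """Verifier side: check that the tag is genuine and the measurement matches."""
    expected_tag = hmac.new(
        HARDWARE_KEY, report["measurement"].encode(), hashlib.sha256
    ).hexdigest()
    tag_ok = hmac.compare_digest(report["tag"], expected_tag)
    return tag_ok and report["measurement"] == measure(expected_code)


report = attest(b"def build_block(): ...")
assert verify_report(report, b"def build_block(): ...")
assert not verify_report(report, b"def build_block(): steal()")
```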

2.2.4 MPC

Multi-Party Computation (MPC) is a technology that enables computations on inputs from multiple parties without revealing the original data. A classic example is Andrew Yao's “Millionaires' Problem,” where two millionaires use MPC to determine who is wealthier without disclosing their actual wealth.

In the blockchain ecosystem, one of the most prominent applications of MPC is the MPC wallet. These wallets split a private key into multiple shards, which are managed by different parties. No single party can reconstruct the full key, ensuring security. Even if one shard is compromised or lost, the remaining shards can maintain functionality and allow wallet recovery. Custodial services like Fireblocks use MPC technology to securely store crypto assets.
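The shard property described above (no single shard reveals the key, yet losing one shard is survivable) can be illustrated with a 2-of-3 Shamir secret sharing sketch. This is an assumption about the general technique, not a description of how Fireblocks or any specific wallet works. Production MPC wallets typically sign jointly without ever reassembling the key, while this sketch reconstructs it only to show the threshold behaviour. `PRIME`, `make_shares`, and `recover` are illustrative names.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime used as the field modulus (illustrative choice)


def make_shares(secret, threshold, n_shares):
    """Split `secret` so that any `threshold` shares recover it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x):
        acc = 0
        for coeff in reversed(coeffs):  # Horner's rule
            acc = (acc * x + coeff) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, n_shares + 1)]


def recover(shares):
    """Lagrange interpolation at x = 0 recovers the constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = 123456789
shards = make_shares(key, threshold=2, n_shares=3)

# Any two shards are enough, so one lost shard does not lock the wallet.
assert recover(shards[:2]) == key
assert recover([shards[0], shards[2]]) == key
```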

2.3 Nillion’s Novel MPC Protocol

Nillion Network leverages MPC to enable blind computing on private data. Its MPC protocol offers two major advancements compared to traditional MPC technologies:

- Support for Non-linear Arithmetic
- Asynchronous Computation

2.3.1 Support for Non-linear Arithmetic (Sum of Products)

In many secret-sharing-based MPC protocols, linear operations such as addition (e.g., x+y) or multiplication by a constant (e.g., x*3) are the easy case, while multiplying secret values together is harder. Nillion’s MPC protocol extends this capability to support non-linear operations, such as the sum of products.

The "sum of products" refers to operations where groups of factors are multiplied together and the resulting products are then added, for example ab+cd. Here, a, b, c, and d are called factors, while their products ab and cd are referred to as terms.
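Why linear operations are the easy case can be seen with plain additive secret sharing. In the sketch below (a generic illustration, not Nillion's protocol), each party can add shares or scale them locally, but multiplying shares position by position does not yield shares of the product. The modulus `P` and the helpers `share` and `reveal` are assumptions made for the example.

```python
import secrets

P = 2**61 - 1  # prime modulus (illustrative choice)


def share(value, n_parties=3):
    """Split `value` into additive shares that sum to it modulo P."""
    parts = [secrets.randbelow(P) for _ in range(n_parties - 1)]
    parts.append((value - sum(parts)) % P)
    return parts


def reveal(shares):
    """Recombine additive shares."""
    return sum(shares) % P


x_shares, y_shares = share(6), share(7)

# Linear operations: every party works on its own share, with no communication.
added = [(a + b) % P for a, b in zip(x_shares, y_shares)]
scaled = [(3 * a) % P for a in x_shares]
assert reveal(added) == 13
assert reveal(scaled) == 18

# Non-linear operation: local multiplication drops the cross terms between parties,
# so the recombined value is, with overwhelming probability, not 6 * 7.
naive_product = [(a * b) % P for a, b in zip(x_shares, y_shares)]
print(reveal(naive_product) == 42)
```

Recovering the missing cross terms is what normally forces parties to exchange messages during a multiplication.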

2.3.2 Asynchronous Computation Through Pre-processing

Traditional MPC protocols often require interactive communication between parties during computations, making them dependent on synchronous environments. Nillion’s MPC protocol eliminates this limitation by introducing a pre-processing phase.

During pre-processing, the network nodes generate masks for each factor and term in the sum of products operation. These masks are shared with users in advance, enabling them to create hidden inputs that are later used for blind computation. This approach eliminates the need for real-time communication during computation, enabling asynchronous execution while improving efficiency and speed.
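One way masks of this kind can cancel out is sketched below. This is an assumed construction for illustration, not a statement of Nillion's exact specification. Suppose there are $n$ nodes, term $t$ has $k_t$ factors $x_{t,j}$, node $i$ holds a multiplicative mask $m_{i,t,j}$ for each factor and an additive share $u_{i,t}$ for each term, and all arithmetic is modulo a prime.

$$
\tilde{x}_{t,j} = x_{t,j}\prod_{i=1}^{n} m_{i,t,j},
\qquad
\sum_{i=1}^{n} u_{i,t} = \Big(\prod_{j=1}^{k_t}\prod_{i=1}^{n} m_{i,t,j}\Big)^{-1}
$$

Each node then computes its result share locally from the broadcast masked factors $\tilde{x}_{t,j}$, and the shares add up to the unmasked sum of products:

$$
s_i = \sum_{t} u_{i,t}\prod_{j=1}^{k_t}\tilde{x}_{t,j},
\qquad
\sum_{i=1}^{n} s_i
= \sum_{t}\Big(\prod_{j=1}^{k_t} x_{t,j}\Big)\Big(\prod_{j=1}^{k_t}\prod_{i=1}^{n} m_{i,t,j}\Big)\sum_{i=1}^{n} u_{i,t}
= \sum_{t}\prod_{j=1}^{k_t} x_{t,j}
$$

Because the masks $m_{i,t,j}$ and shares $u_{i,t}$ depend only on the shape of the expression, they can be produced before any input exists, which is what allows the computation phase to run without interaction.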

This innovative approach significantly enhances the performance and usability of MPC protocols, paving the way for broader applications. Detailed workflows and code examples will be explored in the next section.

2.4 Nillion’s MPC Protocol Workflow

The workflow of Nillion’s MPC protocol can be divided into two main phases:

- The pre-processing phase, where masks for the factors and terms in a sum-of-products expression are generated.
- The non-interactive computation phase, where computation is performed without real-time communication between nodes.

2.4.1 Assumptions

For this example, we assume the following setup:

- There are 3 contributors (a, b, c) providing private inputs.
- There are 3 nodes (0, 1, 2) performing blind computation.
- The sum-of-products expression to compute is (1x2x3)+(4x5).

Let’s walk through how Nillion’s MPC protocol handles this computation. For illustration, Python code is shown to demonstrate how a non-interactive MPC protocol works.

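from tinynmc import node, preprocess, masked_factors  # assumption: the snippets follow the tinynmc package's API

nodes = [node(), node(), node()]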

2.4.2 Setting the Workflow Signature

Contributors must first agree on the workflow signature, which specifies the number of factors in each term of the sum-of-products expression. For the expression (1x2x3)+(4x5), the signature is [3, 2]. This signature is shared with all nodes.

signature = [3, 2]
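The relationship between an expression and its signature can be sketched with two small helpers. `signature_of` and `plain_value` are hypothetical names introduced here, and the plain evaluation is only a reference value for checking the blind computation later.

```python
from math import prod


def signature_of(terms):
    """Number of factors in each term of a sum-of-products expression."""
    return [len(factors) for factors in terms]


def plain_value(terms):
    """Evaluate the expression in the clear (reference value only)."""
    return sum(prod(factors) for factors in terms)


# (1x2x3)+(4x5) written as a list of terms, each a list of factors.
terms = [[1, 2, 3], [4, 5]]

assert signature_of(terms) == [3, 2]
assert plain_value(terms) == 26
```

Note that the signature reveals only the shape of the expression, never the values of the factors.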

2.4.3 Pre-processing Phase

Using the provided signature, nodes perform pre-processing to generate random masks for contributors’ inputs. These masks ensure the privacy of the inputs during computation.

preprocess(signature, nodes)

2.4.4 Assigning Inputs

Contributors assign their inputs to specific positions in the sum-of-products expression. Positions are represented as (term_index, factor_index).

coords_to_values_a = {(0, 0): 1, (1, 0): 4}

coords_to_values_b = {(0, 1): 2, (1, 1): 5}

coords_to_values_c = {(0, 2): 3}
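Before masking, it can be useful to confirm that the contributors' assignments cover every position in the signature exactly once. The helper below is a hypothetical sanity check, not part of the protocol or of any library shown here.

```python
def check_assignments(signature, assignments):
    """Raise ValueError unless every (term_index, factor_index) is assigned exactly once."""
    expected = {
        (term, factor)
        for term, n_factors in enumerate(signature)
        for factor in range(n_factors)
    }
    seen = set()
    for coords_to_values in assignments:
        for coord in coords_to_values:
            if coord in seen:
                raise ValueError(f"coordinate {coord} assigned twice")
            seen.add(coord)
    if seen != expected:
        raise ValueError(
            f"missing: {sorted(expected - seen)}, unexpected: {sorted(seen - expected)}"
        )
    return True


coords_to_values_a = {(0, 0): 1, (1, 0): 4}
coords_to_values_b = {(0, 1): 2, (1, 1): 5}
coords_to_values_c = {(0, 2): 3}

assert check_assignments(
    [3, 2], [coords_to_values_a, coords_to_values_b, coords_to_values_c]
)
```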

2.4.5 Requesting and Applying Masks

Each contributor requests masks for their assigned positions from the nodes. Using the node.masks() function, contributors receive the masks from each node and apply them to their input values using the masked_factors() function. Below is an example for contributor a; contributors b and c follow the same process.

masks_from_nodes_a = [node.masks(coords_to_values_a.keys()) for node in nodes]

masked_factors_a = masked_factors(coords_to_values_a, masks_from_nodes_a)

2.4.6 Broadcasting Masked Values

Contributors broadcast their masked inputs to all nodes.

broadcast = [masked_factors_a, masked_factors_b, masked_factors_c]

2.4.7 Nodes Perform Blind Computation

Each node uses the masked values to compute its assigned share of the result locally.

result_share_at_node_0 = nodes[0].compute(signature, broadcast)

result_share_at_node_1 = nodes[1].compute(signature, broadcast)

result_share_at_node_2 = nodes[2].compute(signature, broadcast)

2.4.8 Calculating the Final Result

The shares computed by the nodes are summed to produce the final result, which for this example is (1x2x3)+(4x5) = 26.

final_result = int(sum([result_share_at_node_0, result_share_at_node_1, result_share_at_node_2]))

2.4.9 Summary

Although the process may seem complex, it’s actually quite straightforward. Contributors define the structure of the computation, and nodes generate random masks during the pre-processing phase. Contributors then receive these masks from the nodes, apply them to their inputs to ensure privacy, and send the masked inputs back. The nodes use the masked inputs to perform the computation and produce the final result.

Because the inputs are masked throughout the process, contributors’ data remains private. Additionally, the pre-generated masks allow for asynchronous execution, making the computation more efficient and scalable.
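The whole flow can be sketched end to end as a self-contained toy. This is not Nillion's implementation: `ToyNode`, `toy_preprocess`, and `mask_inputs` are hypothetical names, the modulus `P` is an arbitrary choice, and the pre-processing is run by a single trusted function for brevity, whereas a real network would have to generate the masks jointly so that no single party sees them all. A zero input would also stay zero after masking, so this toy does not hide zeros.

```python
import secrets
from math import prod

P = 2**61 - 1  # prime modulus (illustrative choice)


class ToyNode:
    def __init__(self):
        self.factor_masks = {}  # (term, factor) -> this node's multiplicative mask
        self.term_unmasks = {}  # term -> this node's additive share of the unmasking value

    def masks(self, coords):
        """Hand a contributor the masks for the coordinates it owns."""
        return {coord: self.factor_masks[coord] for coord in coords}

    def compute(self, signature, broadcast):
        """Compute this node's share of the result from masked factors only."""
        masked = {}
        for contribution in broadcast:
            masked.update(contribution)
        share = 0
        for term, n_factors in enumerate(signature):
            masked_term = prod(masked[(term, f)] for f in range(n_factors)) % P
            share = (share + masked_term * self.term_unmasks[term]) % P
        return share


def toy_preprocess(signature, nodes):
    """Simplified dealer: give each node factor masks and shares of their inverse product."""
    for term, n_factors in enumerate(signature):
        combined = 1
        for factor in range(n_factors):
            for node in nodes:
                mask = 1 + secrets.randbelow(P - 1)  # non-zero, hence invertible
                node.factor_masks[(term, factor)] = mask
                combined = combined * mask % P
        inverse = pow(combined, -1, P)
        shares = [secrets.randbelow(P) for _ in nodes[:-1]]
        shares.append((inverse - sum(shares)) % P)
        for node, unmask_share in zip(nodes, shares):
            node.term_unmasks[term] = unmask_share


def mask_inputs(coords_to_values, masks_from_nodes):
    """Contributor side: multiply each input by the masks received from every node."""
    return {
        coord: value * prod(m[coord] for m in masks_from_nodes) % P
        for coord, value in coords_to_values.items()
    }


nodes = [ToyNode() for _ in range(3)]
signature = [3, 2]
toy_preprocess(signature, nodes)

inputs = [{(0, 0): 1, (1, 0): 4}, {(0, 1): 2, (1, 1): 5}, {(0, 2): 3}]
broadcast = [mask_inputs(v, [n.masks(v.keys()) for n in nodes]) for v in inputs]

result = sum(n.compute(signature, broadcast) for n in nodes) % P
assert result == 26
```

Note that `compute` needs no messages between nodes: everything interactive was moved into `toy_preprocess`, which depends only on the signature and can therefore run before any input is known.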

2.5 Nillion Network Architecture

Now that we understand how Nillion leverages MPC and other PETs to enable blind computation, let's delve into the network's architecture. Nillion adopts a dual network architecture to achieve its goals:

2.5.1 Orchestration Layer (Petnet)

Protocols like MPC, ZK, FHE, and TEE each have specific strengths and limitations when it comes to privacy, and they complement each other in addressing different needs. The Orchestration Layer is designed to securely process data by enabling nodes to utilize Nillion's suite of PETs. It operates purely as a protocol layer and is not a blockchain network.

2.5.2 Coordination Layer (NilChain)

The Coordination Layer is a blockchain network built on the Cosmos SDK, facilitating tasks such as payment processing and resource coordination for the Orchestration Layer. Thanks to its Cosmos SDK foundation, the Coordination Layer enables seamless interaction with other networks via IBC.

The native token, NIL, plays a key role in this layer, serving as:

- The governance token for decision-making within the network.
- The reward mechanism for nodes participating in Petnet operations.
- A fee token for gas costs and blind computation requests by users on the Nillion Network.

2.6 Developer Tools

To simplify onboarding for developers, Nillion offers a comprehensive set of tools through its Nillion SDK, specifically designed for dApp developers. The SDK includes:

- Nillion CLI Tool: A command-line interface enabling interaction with the network, such as checking its status, uploading programs, and storing confidential data.
- Local Network: An environment for testing the network locally.
- User and Node Key Generation: Tools to generate keys for users and nodes in local environments.
- Program Simulator: A feature that allows developers to simulate Nada programs locally before deploying them to the network.
- Nada Compiler: A compiler for programs written in Nada.

Nada, a domain-specific language (DSL) used within the Nillion Network, is designed specifically for privacy-focused applications. Its syntax is inspired by Python, making it accessible and familiar to developers.

Nillion also provides open-source libraries such as Nada Numpy and Nada AI to streamline the creation of privacy-preserving AI systems. These libraries adapt popular Python tools to work seamlessly with the Nada DSL.

- Nada Numpy: Enables the use of most NumPy functions in the Nada environment.
- Nada AI: An all-in-one AI solution that facilitates integrating models from other AI frameworks into the Nillion ecosystem. It supports uploading programs, storing data, and running privacy-preserving inferences within the network.

With these tools and libraries, Nillion empowers developers to build innovative, privacy-centric applications with ease.

3. Nillion Ecosystem

Nillion Network offers a unique concept of blind computation powered by various PETs. Its modular nature allows other projects to integrate and utilize the network, making Nillion a versatile tool for a wide range of applications.

3.1 Blockchain Networks

Most blockchain networks do not inherently provide private storage or blind computing capabilities. If these functionalities were integrated into mature ecosystems, it could enable groundbreaking applications such as private AI assistants, confidential DeFi, and secure gaming.

Developers and dApps from the following blockchain ecosystems can delegate tasks like private data storage or blind computation to Nillion. This effectively makes Nillion a modular blind computing layer for these networks. Notably, NEAR Protocol, with its focus on AI applications, could benefit significantly in areas like private inference, synthetic data, and federated learning.

- Aptos
- NEAR Protocol
- Arbitrum

3.2 DeFi Protocols

- Kayra: A decentralized dark pool exchange utilizing MPC technology for its order book.
- Choose K: Offers encrypted order books powered by Nillion’s MPC technology.

3.3 Infrastructure

- zkPass: An oracle protocol combining ZKP, TLS, and MPC to enable on-chain verification of private internet data.
- Skillful AI: Provides customized virtual assistants by leveraging Nillion’s blind computing layer to securely handle high-value data.
- Coasys: Focuses on eliminating data silos and strengthening data sovereignty using Nillion’s secure data processing capabilities.

3.4 AI Infrastructure

- Ritual: A decentralized AI infrastructure using Nillion’s blind computation for private model inference and secure storage.
- Rainfall: Plans to launch a personalized AI platform and data marketplace in collaboration with Nillion.
- Mizu: A GitHub-like service for datasets that uses Nillion’s blind computation to enable private data repositories.
- Nesa: A lightweight L1 network for AI inference, collaborating with Nillion on privacy and inference-related tasks.
- Nuklai: An L1 network specializing in AI-related data.

3.5 Consumer AI Services

- Aloha: An AI-powered dating app that uses Nillion to protect user data while enabling secure matchmaking.

3.6 AI Agents

- Virtuals Protocol: A platform for creating AI agents for gaming and entertainment, leveraging Nillion’s secure infrastructure for private training and inference.
- Crush: A crypto hub using autonomous AI agents to simplify interactions with blockchain ecosystems. It employs Nillion for secure learning and personalized suggestions.
- Capx: A user-centric AI infrastructure allowing developers to build, monetize, and trade AI agents securely with Nillion.
- Verida: An open-source layer providing private data and confidential computing for secure AI assistants.

3.7 Custody

- Salt: A platform leveraging Nillion to let users maintain ownership of their assets while enabling management through AI or other asset managers under pre-set policies.

3.8 Secure Storage

- NilQuantum: Offers quantum-secure password storage for Nillion ecosystem users.
- Flux: Enables private and secure document storage.
- Dwinity: Manages user accounts and data securely in collaboration with Nillion.
- Blerify: A project focused on verifiable credentials.

3.9 Healthcare

- Maya: Collaborates with Nillion to build storage and secure computation infrastructure for healthcare data.
- Monadic DNA: Uses blind computing technology to securely process personal DNA data.
- Space of Mind: An online mental health counseling platform.
- Agerate: Offers diagnostic services based on blood samples, using Nillion for secure patient data management.

3.10 Others

- DecentDAO: Provides tools for DAOs, including private voting and secure data management powered by Nillion.
- Mailchain: Facilitates private communication using Nillion.

4. Final Thoughts

We are at the forefront of an inevitable AI revolution. While AI, led by big tech, promises to enhance our lives, it also raises significant data privacy concerns. Many companies have already adopted encryption technologies to address these challenges. However, Nillion goes a step further by incorporating blockchain to create a truly decentralized blind computing layer with incentivization mechanisms.

One concern I have about Nillion is whether the community truly recognizes the importance of data privacy at this point. While blind computing is highly likely to become an essential stack in the AI industry in the distant future, the current AI ecosystem is still in its infancy. Many users seem to lack significant awareness of issues related to personal data. It will be interesting to see if Nillion’s technological vision aligns with the perspectives of society and regulators on AI safety. If these align, it could pave the way for a future where our society moves closer to an AI-driven utopia.