Moderators at Big Tech are the unsung heroes of our social media and internet experience today. The cleaners of the interwebz, if you will. Evidence of their work stays hidden from us. It is only when unintended slip-ups occur that the consequences spill over onto the internet and chaos ensues.

During the tragic terrorist attacks on two Christchurch mosques in 2019, the attacker livestreamed his killing spree. While the massacre was ongoing, and for many hours afterwards, those heinous videos kept surfacing on Facebook, Twitter, Reddit, YouTube and other social media. Moderators on those platforms struggled to keep up. It became a game of cat and mouse, with ordinary users scrambling to report the posts as they appeared so that moderators could review and remove them quickly.

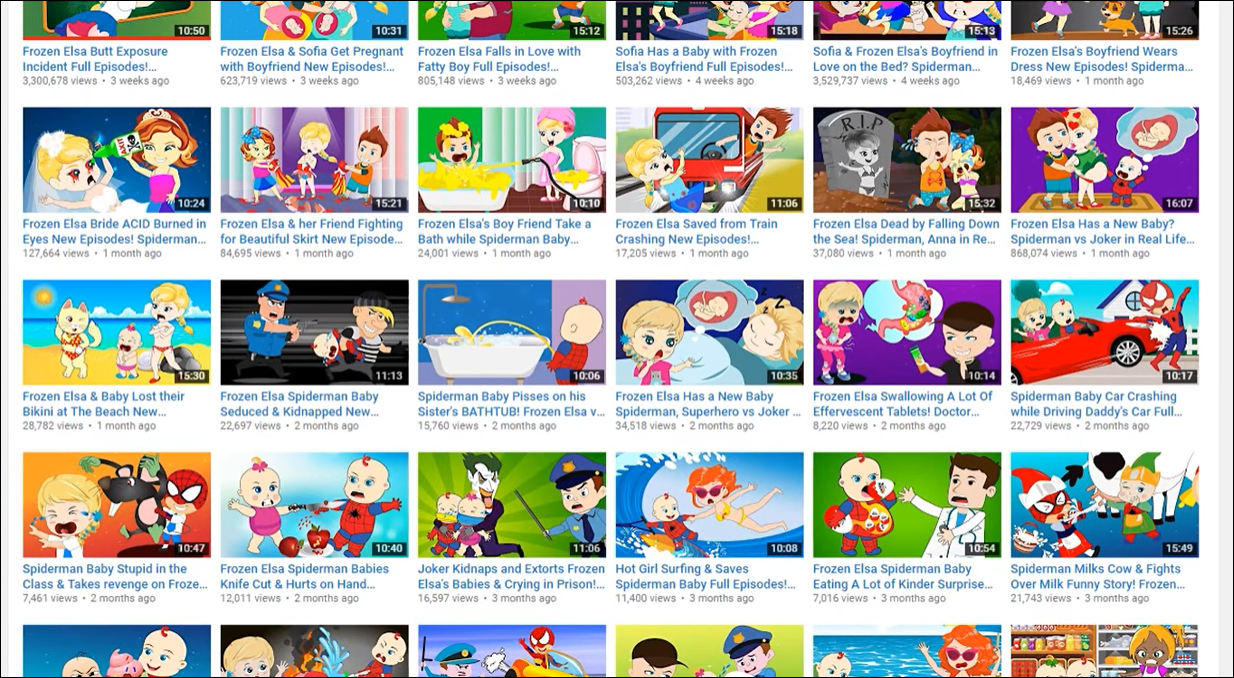

It goes without saying that moderators go through disturbing experiences like these day in and day out so that we don’t have to. Their work keeps vile content off the internet. This is material that often involves unspeakable human cruelty: violent extremism, murder, animal torture, child abuse, rape, self-harm, hate speech, terrorist propaganda and so on. Sometimes it’s intentional shock material like Elsagate or graphic pornography. At other times, it’s straight-up illegal activity such as contraband solicitation. A moderator interviewed by VICE last year estimated that 5-10% of what they see in the course of their work is potentially traumatising.

What does a moderator do, exactly?

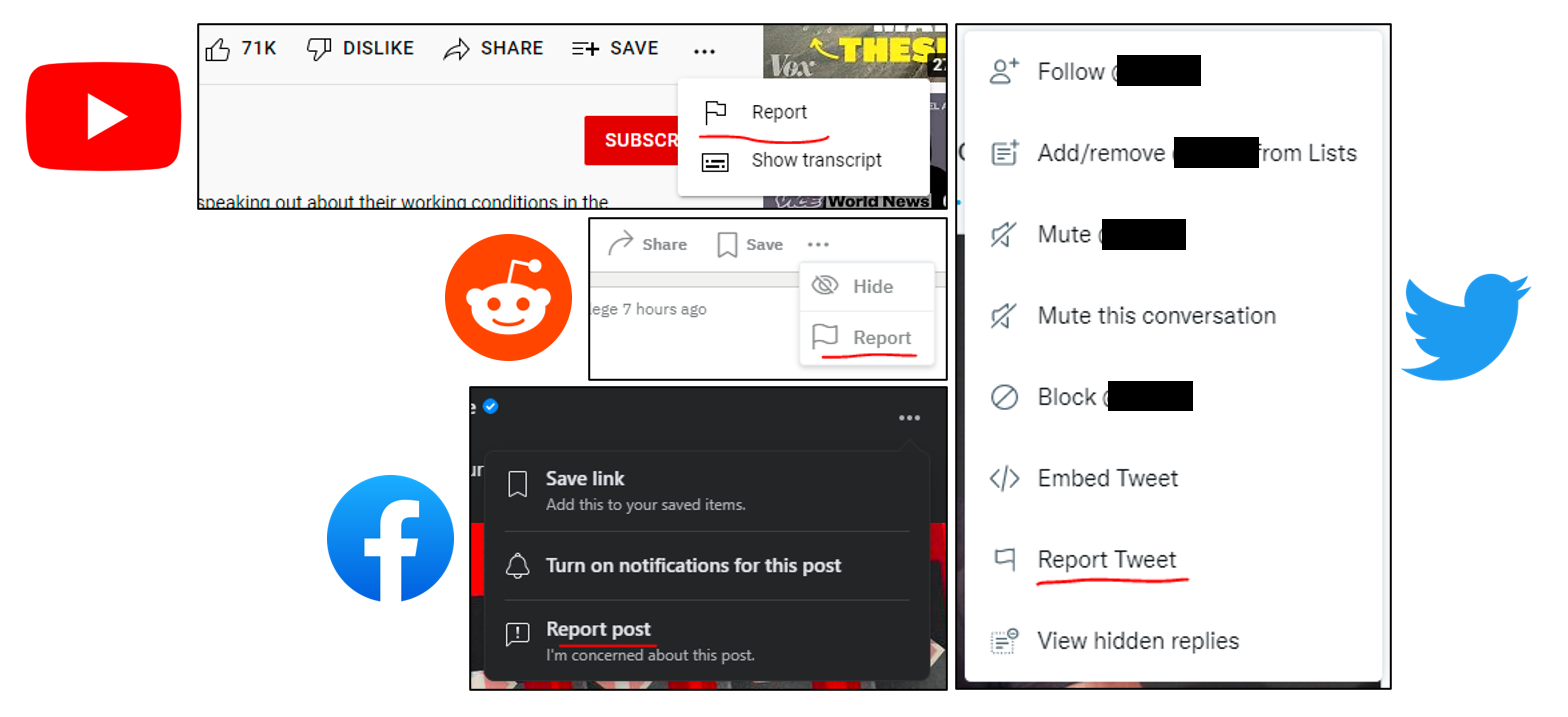

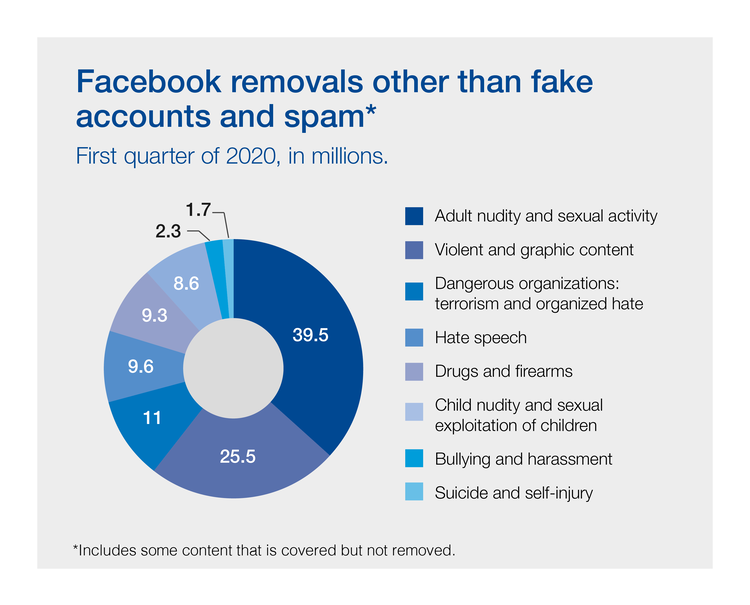

Media and tech companies, especially those that host user-generated content, such as Facebook, Twitter, YouTube, Instagram, Reddit and Telegram, have community standards that are usually based on common-sense principles and statutory law. To ensure that content posted on their platforms follows these guidelines, the companies use AI/ML (Artificial Intelligence / Machine Learning) algorithms to automatically filter out objectionable material. Whatever slips through the cracks ends up in a moderator (or mod) queue for manual review.

A mod’s job title can range from community mod to process executive, content manager or legal removals associate. Mods may act proactively when they spot questionable content, or reactively in response to a user report. Based on their assessment, they can keep the flagged material, remove it or escalate it to higher-ups. Escalations typically happen in grey areas, such as political content. Depending on their behaviour, users can also be temporarily suspended or permanently banned from the platform. Some companies also have procedures in place that let mods report a user to the police.
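The following minimal Python sketch shows how the workflow described above might fit together. It has three stages: an automated filter, a human review queue, and account-level penalties. It is purely illustrative. The classifier score, the thresholds, the strike ladder and every name in it (`Post`, `auto_filter`, `review`, `account_action`) are hypothetical assumptions, not any platform's actual system. The `review` function only stands in for a human moderator's judgement.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    KEEP = "keep"
    REMOVE = "remove"
    ESCALATE = "escalate"


@dataclass
class Post:
    post_id: int
    author: str
    # Hypothetical classifier score: 0.0 (benign) to 1.0 (objectionable).
    ml_score: float
    user_reports: int = 0
    grey_area: bool = False  # e.g. political content


# Hypothetical thresholds; real platforms tune these per policy and content type.
AUTO_REMOVE_AT = 0.95
NEEDS_REVIEW_AT = 0.50


def auto_filter(posts):
    """Auto-remove clear violations; queue uncertain or user-reported posts."""
    removed, queue = [], deque()
    for post in posts:
        if post.ml_score >= AUTO_REMOVE_AT:
            removed.append(post)
        elif post.ml_score >= NEEDS_REVIEW_AT or post.user_reports > 0:
            queue.append(post)
        # Anything else stays up without human review.
    return removed, queue


def review(post):
    """Stand-in for a moderator's keep / remove / escalate judgement."""
    if post.grey_area:
        return Decision.ESCALATE
    if post.ml_score >= 0.8 or post.user_reports >= 3:
        return Decision.REMOVE
    return Decision.KEEP


def process(posts, strikes):
    """Run the filter, work through the mod queue and record strikes."""
    removed, queue = auto_filter(posts)
    outcomes = {p.post_id: Decision.REMOVE for p in removed}
    while queue:
        post = queue.popleft()
        outcomes[post.post_id] = review(post)
    # Each removed post counts as one strike against its author.
    for post in posts:
        if outcomes.get(post.post_id) is Decision.REMOVE:
            strikes[post.author] = strikes.get(post.author, 0) + 1
    return outcomes


def account_action(strike_count):
    """Hypothetical penalty ladder for repeat offenders."""
    if strike_count >= 3:
        return "permanent ban"
    if strike_count == 2:
        return "temporary suspension"
    if strike_count == 1:
        return "warning"
    return "none"


posts = [
    Post(1, "alice", ml_score=0.99),
    Post(2, "bob", ml_score=0.60),
    Post(3, "carol", ml_score=0.10, user_reports=4),
    Post(4, "dave", ml_score=0.70, grey_area=True),
    Post(5, "erin", ml_score=0.05),
]
strikes = {}
outcomes = process(posts, strikes)
print({pid: d.value for pid, d in outcomes.items()})
# {1: 'remove', 2: 'keep', 3: 'remove', 4: 'escalate'}
print(account_action(strikes["carol"]))  # warning
```

Only uncertain or user-reported items reach the queue. This mirrors the split described above: automated filters handle the clear-cut cases, and everything in the queue is something a person has to look at.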

Third-party contract workers earn anywhere from $1 to $15 an hour, while directly employed moderators are paid better for the same work. But the psychological trauma of reviewing as many as 2,000 flagged photos an hour or 400 reported posts a day, and the stress of maintaining a high accuracy score, are the same for all. Many of these moderators develop long-term mental health disorders such as PTSD (Post-Traumatic Stress Disorder) and chronic anxiety as a result of prolonged exposure to the worst possible content.
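A quick back-of-the-envelope calculation puts those review rates in perspective. The 8-hour shift used for the second figure is an assumption, not a number from the sources above:

$$
\frac{3600\ \text{s/hour}}{2000\ \text{photos/hour}} = 1.8\ \text{s per photo}
\qquad
\frac{8 \times 3600\ \text{s}}{400\ \text{posts}} = 72\ \text{s per post}
$$

At the photo rate, that is less than two seconds to judge each image.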

To share some personal experience: as a community manager for various cryptocurrency projects, I have come across a variety of objectionable material in Telegram channels, Discord servers and chat forums, from racist jibes to drug peddling to CSAM (Child Sexual Abuse Material) solicitation. Actions have ranged from permanent bans to reporting the matter to the FBI. Each of those incidents has been distressing, to say the least.

Where do games come in all of this?

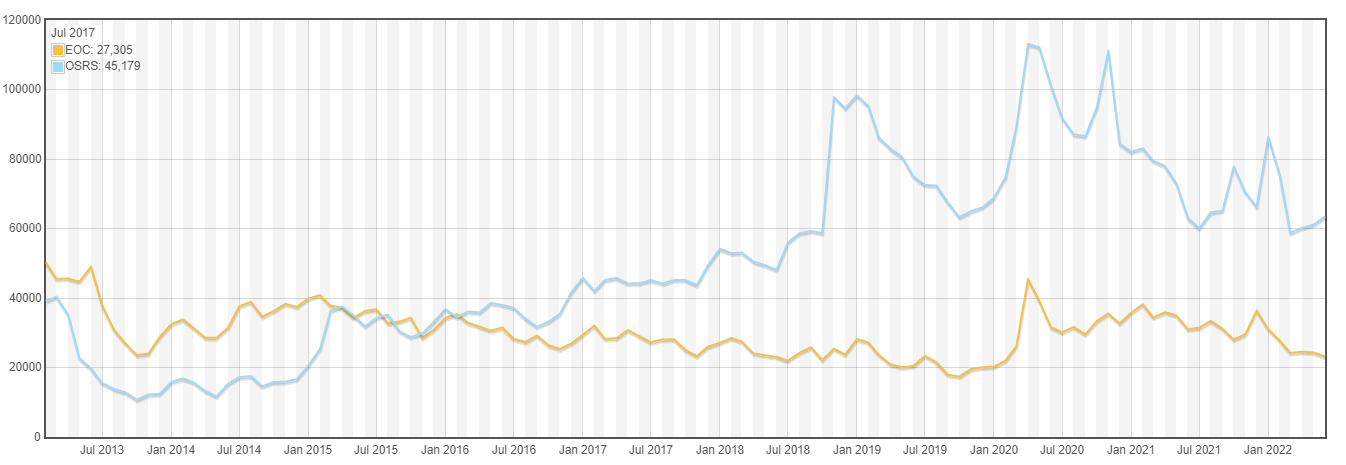

Games and social media have long intersected. Examples include MMORPGs (Massively Multiplayer Online Role-Playing Games) such as World of Warcraft, RuneScape and Eve Online, and Battle Royale games like Fortnite, PUBG, Call of Duty: Warzone and Apex Legends. Other online multiplayer games belong here too, such as League of Legends, Clash of Clans, Among Us, Minecraft, Pokemon Go and FarmVille. Players interact with each other through in-game channels, Discord servers, chat rooms, Twitch and so on, using text, audio, video and file transfers.

So, game moderators (or gamemasters) face challenges similar to those above when handling these forums. Gaming forums, however, see fewer instances of heinous content than social media does. Instead, moderators here have to focus more on issues such as harassment, spam, trolling, cheating and bullying, and on making sure game rules are not broken. Because games are competitive, these channels of communication can turn heated, keeping mods on their toes with their hands full. Moderators may be employees of publishers/developers or volunteers from the community with high trust scores.

What are the legal implications of moderation?

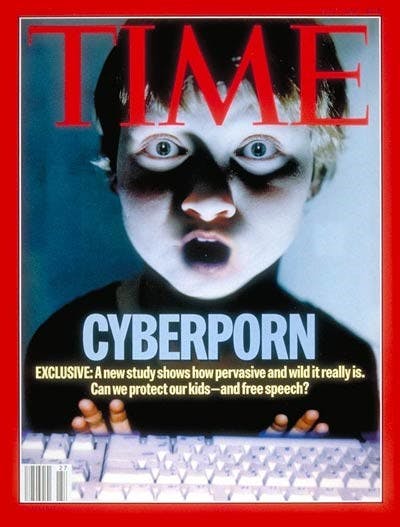

In the ’90s, the early days of the internet, there was a widespread scare that the web would spread pornography and that the resulting promiscuity would destroy the social fabric. Media reports called the internet all kinds of names, such as a “global computer hookup”. The fearmongering reached a point where rationalists had to counter repeated calls to censor the internet.

Subsequently, Section 230 of the Communications Decency Act was enacted in 1996. It shields platforms from being treated as the publisher of content posted by their users. Its “Good Samaritan” provision also grants them immunity when they remove objectionable content in good faith. This landmark law allowed the internet to grow into the size and form we see today. It is also what lets moderators go about their jobs without fear of reprisal from affected parties.

As games and social interactions move into metaverses (more on that later), the same law would protect mods who “police” these realms to filter out bad behaviour. However, interactions in these settings will be far more complex, requiring new guidelines for mods to work by. For example, there could be instances of groping or stalking in the metaverse that require a moderator to step in and take corrective action. Without clear SOPs (Standard Operating Procedures), moderators could be left second-guessing themselves instead of acting decisively.

Wait, but what is a metaverse?

“Metaverse” is a loosely defined term, usually used to describe a shared, immersive virtual realm that some envision as the future of the internet. It was largely a topic of science fiction until the advent of provably ownable digital assets in the form of cryptocurrencies and NFTs (Non-Fungible Tokens). The closest precursors of a true metaverse experience are various game worlds. However, traditional gaming communities and studios have so far been wary of crypto and its associated technologies. As a result, crypto-native companies like Decentraland and The Sandbox have built metaverse-like worlds where users can own assets, both portable and non-portable. Experiences in metaverses range from visiting art exhibits and attending music festivals to playing games and much more.

It should be noted that traditional game developers and Web3 developers think about portability differently. Various game developers have raised concerns about portability from a gameplay perspective, while Web3 developers consider it from an asset-ownership perspective. This is a fundamental disconnect between two differing points of view. I explored the crypto-native outlook on metaverses in an interview last year. One thing both sides might agree on is that “land” in the metaverse should be limitless. The promise of truly permissionless, decentralised realms should also include infinite scalability. Open Metaverse, by crypto thought leader 6529, is an attempt to create an infinite metaverse where one can jump from realm to realm while retaining ownership and use of one’s assets. As its website puts it, “Space is abundant in metaverse”.

Facebook’s much-publicised recent rebrand to Meta is also centred on building its own metaverse. Imran Ahmed, CEO of the Centre for Countering Digital Hate, says that Facebook’s move “was an inevitable next step in the evolution of social media. Allowing the virtual physical presence of the people that you’re connected to from around the world to you”. It is unclear whether Meta will embrace the ethos of crypto (decentralised, permissionless, self-custody) or instead build a walled garden with its own digital assets and wallet architecture.

But if recent developments at Twitter, Instagram, YouTube, Reddit, Shopify, Stripe, eBay and many other companies are any indication, NFTs, cryptocurrencies, blockchains, Web3 and everything that comes with them are inevitable. Instances of game studios wiping out players’ existing digital context (assets, scores, progress, etc.) continue to strengthen the case for Web3. However, as some critics have pointed out, it is important to keep in mind the vectors of bad behaviour in a world where blockchains can play a large role.

This is where moderators come in. Moderating a metaverse will be completely different from moderating existing media, i.e. text, images, video, sound and files. In a metaverse, on top of those media, avatars will mimic human actions and use digital assets to extend those behaviours, and that too will need moderation. It will be as close as it gets to meatspace police trying to maintain civility and keep the bad apples in check. Moderators will have their work cut out for them in this new paradigm.

Employers, for their part, will have to ensure not only that this work is suitably rewarded but also that the mental well-being of those involved is looked after. Social media has already shown the toll this work takes on mods. This new iteration of social experience should not fail them as they strive to uphold the sanctity of metaverses for the rest of us.

Disclosure: The author has no vested interest in any of the projects mentioned in this article. No information from the article should be construed as financial advice.

Other References:

- Something strange is happening on Youtube #ElsaGate (The Outer Light)
- Who Moderates the Social Media Giants? A Call to End Outsourcing | Paul M. Barrett
- Runescape Population Avg by Qtr Hour
- TIME Magazine Cover: Cyber Porn | July 3, 1995: http://content.time.com/time/covers/0,16641,19950703,00.html
- Is Everything in Crypto a Scam? (VICE News)
- Meta - Facebook Connect 2021 Presentation: https://www.facebook.com/Meta/videos/facebook-connect-2021/577658430179350/

This article was originally published on HackerNoon on 27th June, 2022. It is reposted here on Mirror to keep a permanent record of the piece.