This is the first part in a two part series about tree implementations in solidity. In this first part, we will be going over the basics of trees and implementing one of the most basic of trees, the binary search tree.

We will first talk about what trees are and why we use them, some basic overview on time complexity, and then we will go analyze an implementation in solidity.

What are trees?

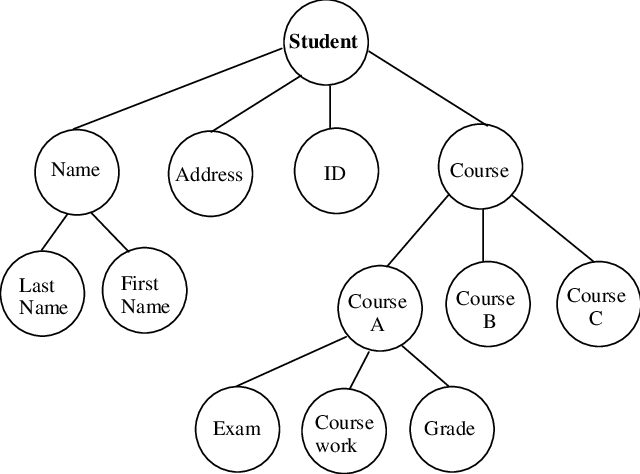

A tree is an abstract data structure where data is stored in a hierarchical tree structure in the form of nodes that are linked to other subtrees. A tree has a root node, and nodes that are linked to it are called children nodes. Let us look at an example:

In this tree, the Student node is the root of the tree. It’s children are Name, Address, ID, and Course, this group of nodes are also called siblings because they share the same parent.

Some trees have special properties that make them useful. The binary search tree is the most common example. It has several rules on how it works:

-

Each node can have a maximum of two children nodes connected to it

-

Nodes being inserted must be comparable in some way, for example, 1 < 2

-

When inserting a number, all the numbers to the left of it must be smaller, and all of the numbers to the right of it must be bigger

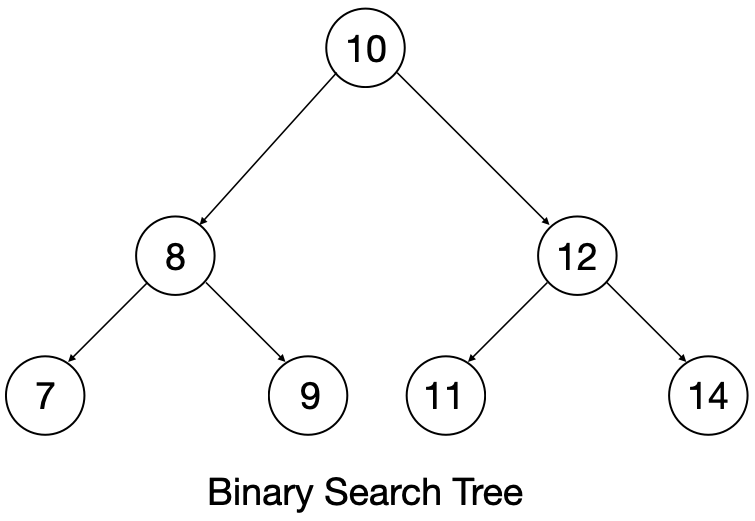

The last rule is the most important. Here is an example a binary search tree:

Start at the root of the tree, and look at the left and right sub tree. The left node is 8, which is smaller than 10, and the right node is 12, which is bigger then 10. You might be able to see where we are going with this.

Why do we use trees?

To understand why we use trees, it is good to have a basic overview of time complexity in computer algorithms.

Let us say that we want to iterate over an array once, printing each value while we are doing it. That would look something like this (pseudo code):

Array a;

n = a.length;

for (i=0; i<n; i++)

print(a[i]);

Lets declare some things:

-

The array has length of 100

-

It takes 1 unit for each iteration, lets say this unit is in seconds.

Overall, we are iterating over this array N times, which is commonly declared with the notation O(n).

We wont go over the background of the notation in this article. Just imagine if N is 100 it will take O(100) units for the algorithm to execute, which is 100 seconds in this case.

Now, let us look at another example like the one above, but instead with a nested for-loop:

Array a;

n = a.length;

for (i=0; i<n; i++)

for (x=0; x<n; x++)

print(a[x]);

Here, our top for-loop will iterate N times, and our bottom for-loop will iterate N times. Combining them, we get N*N, or N^2 times. This time complexity is denoted as O(N^2), which means this algorithm will take 10,000 units to come to completion.

What does this have to do with trees?

The problem of time complexity originated with a simple idea: lets say we have an array of 1000 items, and want to sort it. There is a simple way to do this, bubble sort:

for (int max = elements.length - 1; max > 0; max--) {

boolean swapped = false;

for (int i = 0; i < max; i++) {

int left = elements[i];

int right = elements[i + 1];

if (left > right) {

elements[i + 1] = left;

elements[i] = right;

swapped = true;

}

}

if (!swapped) break;

}

Bubble sort has a worst case time complexity of O(N^2). That means if we want to sort 10000 items, it will take 10000^2 units to sort it. With computers, this can be done almost instantly. However, the issue arises when we are sorting arrays with large N’s. For example, in C++, sorting an array of 1 million integers can take up to an hour.

Commonly when talking about time complexity, we are attempting to describe the worst possible time for an algorithm to take. There is a chance that the majority of an array is sorted already, but we care more about the worst case than the best case.

There are much better ways to sort an array, including other algorithms, and also other data structures. This is where the binary search tress come in.

Binary Search Tree Time Complexity

When inserting a number into a BST (Binary search tree), the number gets sorted into the tree. This allows operations on the BST to be efficient, and sorting itself to be more efficent.

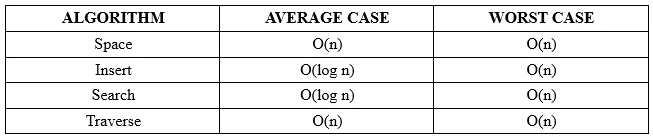

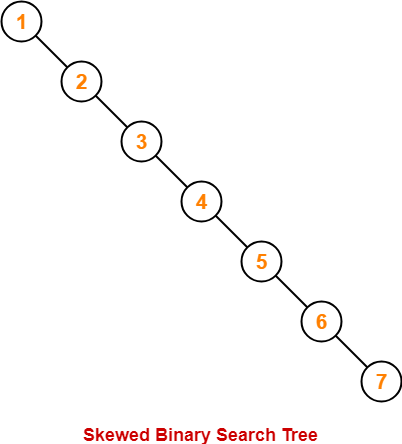

Take a look at the average case of Insert and Search, versus the worst case. The reason for the differences is because of an occurrence where numbers can be inserted in sorted order, for example:

This means that to access the 7 node, it will take O(n) time as you will have to go over all the nodes in the tree first.

A subset of the binary search tree exists that solves this issue, and achieves O(log n) worst case time complexity for insert and search. We will explore these and their implementation in solidity in part two of this series.

Binary Search Tree in Solidity

Why would we write a binary search tree in solidity? Having an efficient way to sort data in a programming language where efficiency directly saves you money (gas) is definitely something that is crucial.

Every iteration over an array costs gas, and for large array’s it is possible for you to run out of gas while sorting it. We move this gas from the sort operation to the insert operation.

The full code for this is on GitHub here:

The way nodes are stored is similar to how we did it with our stack implementation via linked lists, where we store our actual nodes in a mapping, with each node having its own unique id that other nodes can point to it via.

struct Node {

uint256 value;

bytes32 left;

bytes32 right;

}

Here is our node struct, and it is rather simple. It stores a uint256 value, and two bytes32 values, left and right. These are used to indicate the two children of the node, and are set to the address ID of those nodes.

Next we have the address of our root as a state variable, and our mapping to store our nods:

mapping (bytes32 => Node) private tree;

bytes32 private rootAddress;

Now, we have our state variables set up. Next, we look at insertion. Hang tight, this might look complicated but it is actually relatively simple!

function insert(uint256 value) public {

Node memory root = tree[rootAddress];

// if the tree is empty

if (root.value == 0) {

root.value = value;

root.left = 0;

root.right = 0;

tree[0] = root;

rootAddress = generateId(value, 0);

tree[rootAddress] = root;

} else {

// if the tree is not empty

// find the correct place to insert the value

insertHelper(value, rootAddress);

}

}

First, we attain the root of the tree by using the state-variable root address to access the root node stored inside our mapping.

Next, we check if the tree is empty. If it is empty, we create the root node, generate its unique id, and put it into the mapping.

In this example, the root node should not have a value less than 0. We are using unsigned 256 bit integers. Thus, a node can not have the value of 0.

If the tree is not empty, we use the function insertHelper, and pass the value and the root address where it recursively finds the right place to insert the node into the tree.

// helper function for insert

function insertHelper(uint256 value, bytes32 nodeAddress) internal {

Node memory node = tree[nodeAddress];

if (value < node.value) {

if (node.left == 0) {

insertNode(value, nodeAddress, 0);

} else {

insertHelper(value, node.left);

}

} else {

if (node.right == 0) {

insertNode(value, nodeAddress, 1);

} else {

insertHelper(value, node.right);

}

}

}

This function checks if the node’s value is less than the current node, if so, if the left child is empty, it inserts the node into that position. If it is less but the node is not empty, it then calls the function again but with that new node, until it finds an empty spot. It does the same operation for if the value is bigger than the current node.

The insert node function is rather simple, it takes three parameters, the value, the address to put the node, and a 0 or 1 value to indicate where to put the node, left or right.

function insertNode(uint256 value, bytes32 nodeAddress, uint256 location) internal {

Node memory parentNode = tree[nodeAddress];

bytes32 nodeId = generateId(value, nodeAddress);

if (location == 0) {

// if the value is less than the current node

parentNode.left = nodeId;

} else {

// if the value is greater than the current node

parentNode.right = nodeId;

}

// update the tree

tree[nodeAddress] = parentNode;

tree[nodeId] = Node(value, 0, 0);

}

Left = 0

Right = 1

This article is a lot longer than I was expecting, so I will skip going over how deletion works and instead go over to how to display the tree in order and how to find elements.

You can look at the deletion function in the source code, there are detailed comments on how it works.

// inorder traversal

function displayInOrder() public {

displayInOrderHelper(rootAddress);

}

// recursive helper function for inorder traversal

function displayInOrderHelper(bytes32 nodeAddress) internal {

Node memory node = tree[nodeAddress];

if (node.left != 0) {

displayInOrderHelper(node.left);

}

console.log(node.value);

if (node.right != 0) {

displayInOrderHelper(node.right);

}

}

This is also pretty simple! It recursively goes down the tree in ascending order. The code is pretty self explanatory.

I was using hardhat’s console.log feature when displaying the tree. This is the base algorithm for displaying a tree in order, and it can be expanded upon by making a non-recursive version via a stack, that way it will be easier to return the values.

Finding elements also works similarly:

// search for a value in the tree

function findElement(uint256 value) public view returns (bool) {

return findElementHelper(value, rootAddress);

}

// recursive helper function for findElement

function findElementHelper(uint256 value, bytes32 nodeAddress) internal view returns (bool) {

Node memory node = tree[nodeAddress];

if (node.value == value) {

return true;

} else if (node.value > value) {

if (node.left == 0) {

return false;

} else {

return findElementHelper(value, node.left);

}

} else {

if (node.right == 0) {

return false;

} else {

return findElementHelper(value, node.right);

}

}

}

Take a look at the repo on GitHub to see the rest of the functions and ways to interact with the tree.

Conclusion

We barely scratched the surface of what is possible with trees. In the next part, we will be looking into implementing an alternative search tree that has worst-case time complexity of search of O(logn)!

I hope you were able to learn something from this article! If you have any comments or questions feel free to direct message me on twitter @Deor