Key Highlights

-

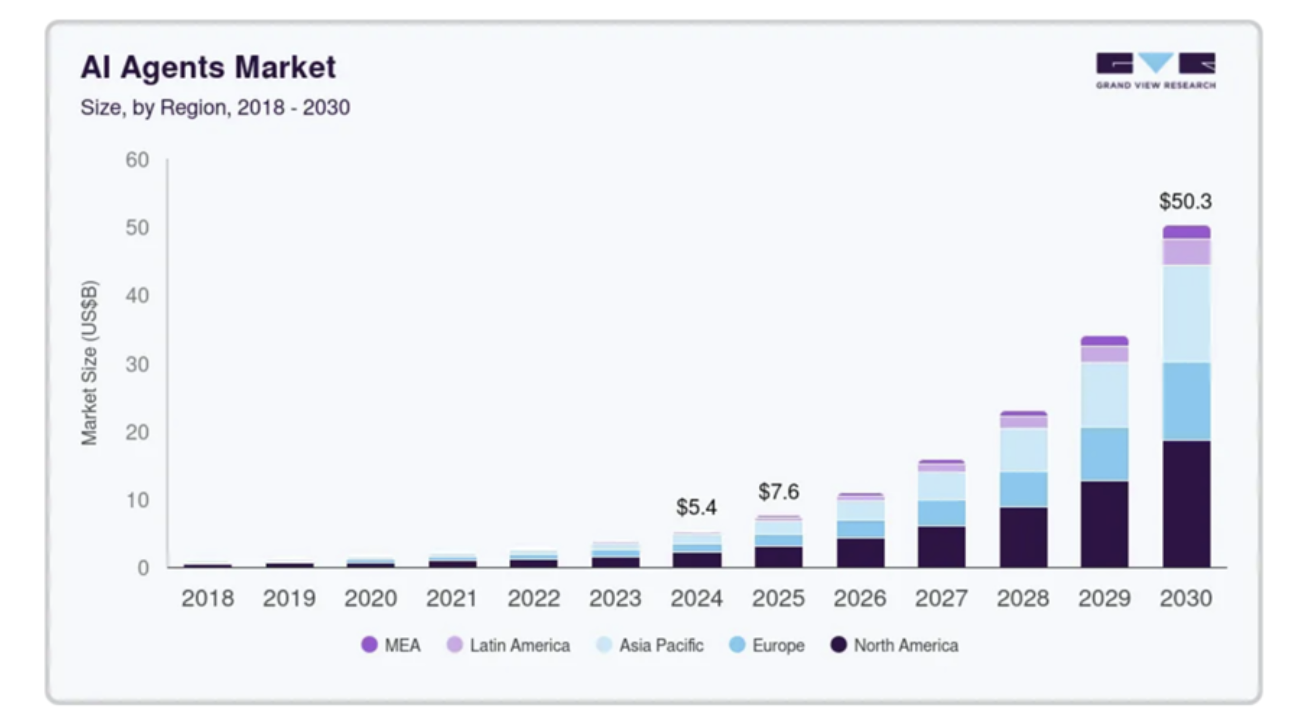

The Structural Turning Point of Identity Systems Has Arrived, with AI Agents Taking Center StageThe Web3 identity mechanism is shifting from a singular focus on "human authenticity verification" to a new paradigm of "behavior-oriented + multi-agent collaboration." As AI Agents rapidly penetrate core on-chain scenarios, traditional static identity verification and declarative trust systems are increasingly inadequate to support complex interactions and risk prevention.

- Trusta.AI Pioneers an AI-Native Trust Infrastructure: Unlike existing solutions such as Worldcoin and Sign Protocol, Trusta.AI builds an integrated trust framework tailored for AI Agents, encompassing identity claims, behavior recognition, dynamic scoring, and permission control. It achieves, for the first time, a closed-loop capability that transitions from "whether it is human" to "whether it is trustworthy."

- SIGMA Multidimensional Trust Model Reshapes On-Chain Reputation Assets: By quantifying reputation across five dimensions (expertise, influence, engagement, monetization, and adoption rate), Trusta.AI transforms the abstract concept of "trust" into composable and tradable on-chain assets, serving as the credit cornerstone for AI-driven social interactions.

- Technical Closed Loop: TEE + DID + ML Enables Dynamic Risk Control: Trusta.AI integrates Trusted Execution Environments (TEE), on-chain behavioral data, and machine learning models to form an automated risk control system. This system can detect unauthorized actions, proxying, or tampering by AI Agents in real time and trigger permission adjustments accordingly.

- High Scalability and Ecosystem Compatibility, Rapidly Forming a Multi-Chain Trust Network: Currently deployed across multiple ecosystems including Solana, BNB Chain, Linea, Starknet, Arbitrum, and Celestia, Trusta.AI has established integration partnerships with several leading AI Agent networks. It possesses rapid replication and cross-chain collaboration capabilities, positioning itself as the core hub for building a Web3 trust network.

1. Introduction

On the eve of Web3 ecosystems achieving large-scale adoption, the protagonists on-chain may not be the first billion human users but rather a billion AI Agents. With the rapid maturation of AI infrastructure and the swift development of multi-agent collaboration frameworks like LangGraph and CrewAI, AI-driven on-chain agents are quickly becoming the main force in Web3 interactions. Trusta predicts that within the next 2-3 years, these AI Agents with autonomous decision-making capabilities will lead the large-scale adoption of on-chain transactions and interactions, potentially replacing 80% of human on-chain behavior, becoming the true "users" of the blockchain.

These AI Agents are not merely the script-executing “Sybil bots” of the past but intelligent entities capable of understanding context, continuous learning, and independently making complex judgments. They are reshaping on-chain order, driving financial flows, and even guiding governance voting and market trends. The emergence of AI Agents marks the evolution of the Web3 ecosystem from being centered on “human participation” to a new paradigm of “human-machine coexistence.”

However, the rapid rise of AI Agents also brings unprecedented challenges. How can these intelligent entities be identified and authenticated? How can the trustworthiness of their behavior be assessed? In a decentralized and permissionless network, how can these agents be kept from being misused, manipulated, or used for attacks?

Therefore, establishing an on-chain infrastructure capable of verifying the identity and reputation of AI Agents has become a core proposition for the next phase of Web3 evolution. The design of identity recognition, reputation mechanisms, and trust frameworks will determine whether AI Agents can seamlessly collaborate with humans and platforms, playing a sustainable role in the future ecosystem.

2. Project Analysis

2.1 Project Overview

Trusta.AI—Dedicated to building Web3 identity and reputation infrastructure through AI.

Trusta.AI has launched MEDIA reputation scoring, the first Web3 user value assessment system, and established the largest real-person authentication and on-chain reputation protocol in Web3. It provides on-chain data analysis and real-person authentication services for top public chains, exchanges, and leading protocols, including Linea, Starknet, Celestia, Arbitrum, Manta, Plume, Sonic, Binance, Polyhedra, Matr1x, Uxlink, and Go+. Trusta.AI has completed over 2.5 million on-chain authentications on mainstream chains such as Linea, BSC, and TON.

Trusta is expanding from Proof of Humanity to Proof of AI Agent, establishing a three-fold mechanism of identity creation, identity quantification, and identity protection to enable on-chain financial services and social interactions for AI Agents, building a reliable trust foundation for the AI era.

2.2 Trust Infrastructure—AI Agent DID

In the future Web3 ecosystem, AI Agents will play a pivotal role, capable of not only completing interactions and transactions on-chain but also performing complex operations off-chain. However, distinguishing genuine AI Agents from human-intervened operations is central to decentralized trust—if there is no reliable identity authentication mechanism, these intelligent entities are highly susceptible to manipulation, fraud, or abuse. This is precisely why the social, financial, and governance attributes of AI Agents must be built on a robust identity authentication foundation.

- Social Attributes of AI Agents: AI Agents are increasingly applied in social scenarios. For example, AI virtual idol Luna can autonomously operate social media accounts and publish content; AIXBT, an AI-driven crypto market intelligence analyst, writes market insights and investment advice around the clock. Through continuous learning and content creation, these agents establish emotional and informational interactions with users, becoming new types of "digital community influencers" that play a significant role in guiding public opinion within on-chain social networks.

- Financial Attributes of AI Agents:

  - Autonomous Asset Management: Some advanced AI Agents can already issue tokens autonomously and, in the future, could integrate with verifiable blockchain architectures to gain asset custody rights, giving them end-to-end control from asset creation and intent recognition to automated transaction execution, including across chains. For example, Virtuals Protocol lets AI agents issue tokens and manage assets according to their own strategies, making them participants in and builders of on-chain economies and ushering in an era of "AI entity economies."

  - Intelligent Investment Decisions: AI Agents are gradually taking on roles as investment managers and market analysts, using large-scale models to process real-time on-chain data, formulate trading strategies, and execute them automatically. On platforms like DeFAI, Paravel, and Polytrader, AI has been embedded in trading engines, improving market judgment and operational efficiency.

  - On-Chain Autonomous Payments: Payment behavior is essentially the transfer of trust, and trust must be built on clear identity. When AI Agents conduct on-chain payments, DID will be a necessary prerequisite. It not only prevents identity forgery and abuse, reducing financial risks like money laundering, but also meets compliance traceability requirements for future DeFi, DAOs, and RWAs. Additionally, combined with reputation scoring systems, DID can help establish payment credit, providing protocols with risk control evidence and a trust foundation.

- Governance Attributes of AI Agents: In DAO governance, AI Agents can automatically analyze proposals, evaluate community opinions, and predict implementation outcomes. Through deep learning of historical voting and governance data, these agents can provide optimization suggestions for communities, improving decision-making efficiency and reducing risks associated with human governance.

As AI Agents’ application scenarios expand across social interactions, financial management, and governance decisions, their autonomy and intelligence levels continue to rise. For this reason, ensuring each agent has a unique and trustworthy identity identifier (DID) is critical. Without effective identity verification, AI Agents may be impersonated or manipulated, leading to trust collapse and security risks.

In a future Web3 ecosystem fully driven by intelligent agents, identity authentication is not only the cornerstone of security but also the essential defense line for maintaining the healthy operation of the entire ecosystem.

As a pioneer in this field, Trusta.AI leverages its leading technical expertise and rigorous reputation system to build a comprehensive AI Agent DID authentication mechanism, providing robust assurance for the trustworthy operation of intelligent agents, effectively mitigating potential risks, and promoting the stable development of the Web3 intelligent economy.

2.3 Funding and Team

2.3.1 Funding Status

- January 2023: Completed a $3 million seed round led by SevenX Ventures and Vision Plus Capital, with participation from HashKey Capital, Redpoint Ventures, GGV Capital, SNZ Holding, and others.

- June 2025: Completed a new round of financing, with investors including ConsenSys, Starknet, GSR, and UFLY Labs.

2.3.2 Team Composition

- Peet Chen: Co-founder and CEO, former Vice President of Ant Digital Technology Group, Chief Product Officer of Ant Security Technology, and former General Manager of ZOLOZ Global Digital Identity Platform.

- Simon: Co-founder and CTO, former Head of Ant Group's AI Security Lab, with fifteen years of experience applying AI technology to security and risk management.

The team has deep technical expertise and practical experience in AI, security risk control, payment system architecture, and identity verification mechanisms. They have long been committed to the deep application of big data and intelligent algorithms in security risk control, as well as the design of underlying protocols and security optimization in high-concurrency transaction environments, possessing strong engineering capabilities and the ability to deliver innovative solutions.

3. Technical Architecture

3.1.1 Identity Creation—DID + TEE

-

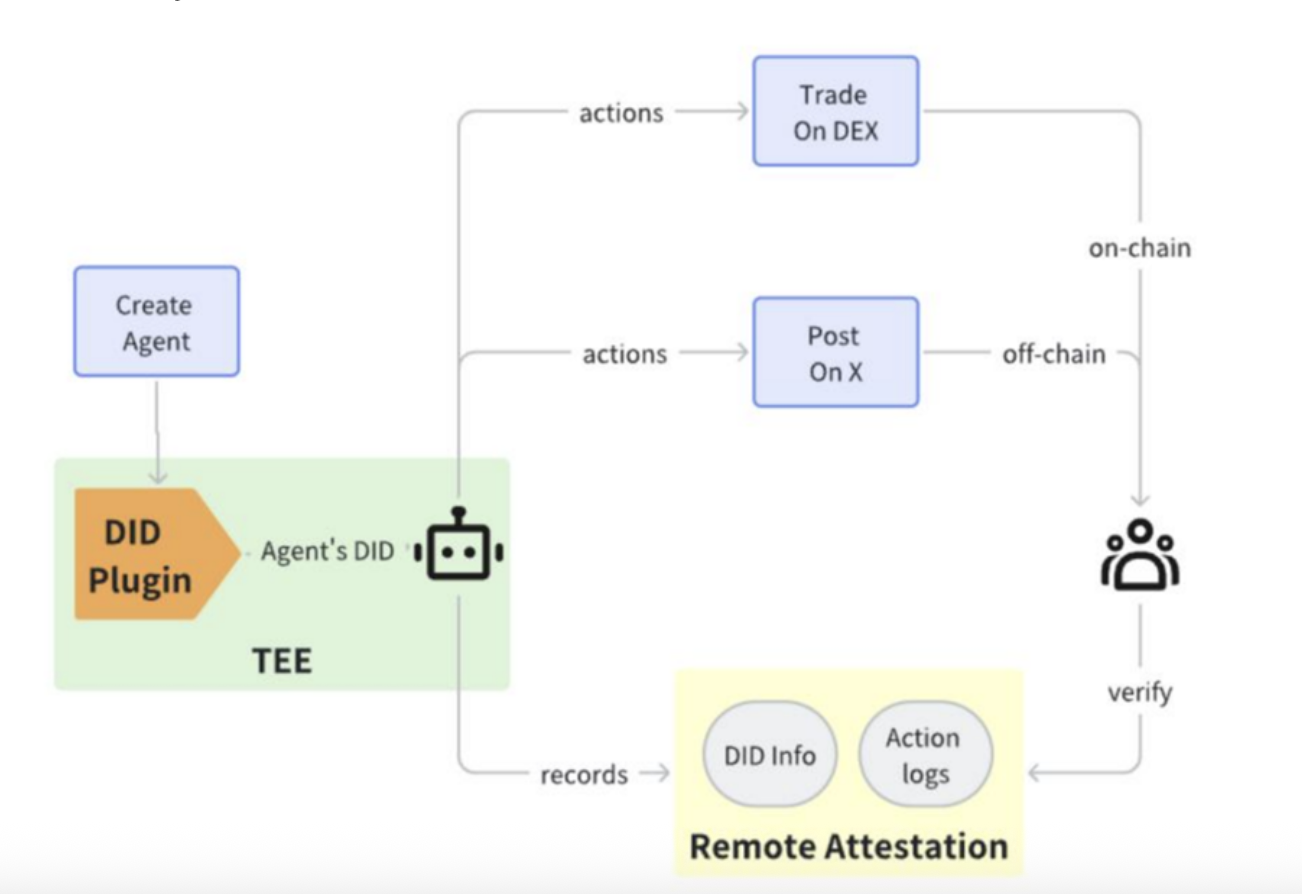

Through a dedicated plugin, each AI Agent obtains a unique Decentralized Identifier (DID) on-chain, securely stored within a Trusted Execution Environment (TEE). In this black-box environment, critical data and computational processes are completely hidden, sensitive operations remain private at all times, and external parties cannot access internal operational details, effectively establishing a robust barrier for AI Agent information security.

-

For agents generated before plugin integration, we rely on an on-chain comprehensive scoring mechanism for identity recognition; for newly integrated agents, they can directly receive a “proof of identity” issued via DID, thereby establishing an autonomous, controllable AI Agent identity system that ensures both authenticity and tamper-resistance.
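The two identification paths described above can be sketched roughly as follows. This is an illustrative sketch only, not Trusta.AI's implementation: the `did:example` method, the `issue_did` and `identify_agent` helpers, and the `0.7` score threshold are all assumptions introduced here, and the TEE itself is not modeled.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentIdentity:
    did: Optional[str]  # set only for plugin-integrated agents
    method: str         # "did" or "score"
    recognized: bool


def issue_did(public_key: bytes) -> str:
    """Derive a deterministic identifier from the agent's public key.

    Uses the placeholder `did:example` method (an assumption). A real
    deployment would keep the private key inside the TEE.
    """
    digest = hashlib.sha256(public_key).hexdigest()
    return f"did:example:{digest[:32]}"


def identify_agent(public_key: Optional[bytes], onchain_score: float,
                   threshold: float = 0.7) -> AgentIdentity:
    # Plugin-integrated agents present a key and receive a DID directly.
    if public_key is not None:
        return AgentIdentity(issue_did(public_key), "did", True)
    # Agents created before plugin integration fall back to the
    # on-chain comprehensive score (hypothetical 0..1 scale).
    return AgentIdentity(None, "score", onchain_score >= threshold)
```

The same key always yields the same identifier, which is what makes the identity checkable by third parties.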

3.1.2 Identity Quantification—Pioneering SIGMA Framework

The Trusta team consistently adheres to rigorous evaluation and quantitative analysis principles, dedicated to building a professional and trustworthy identity authentication system.

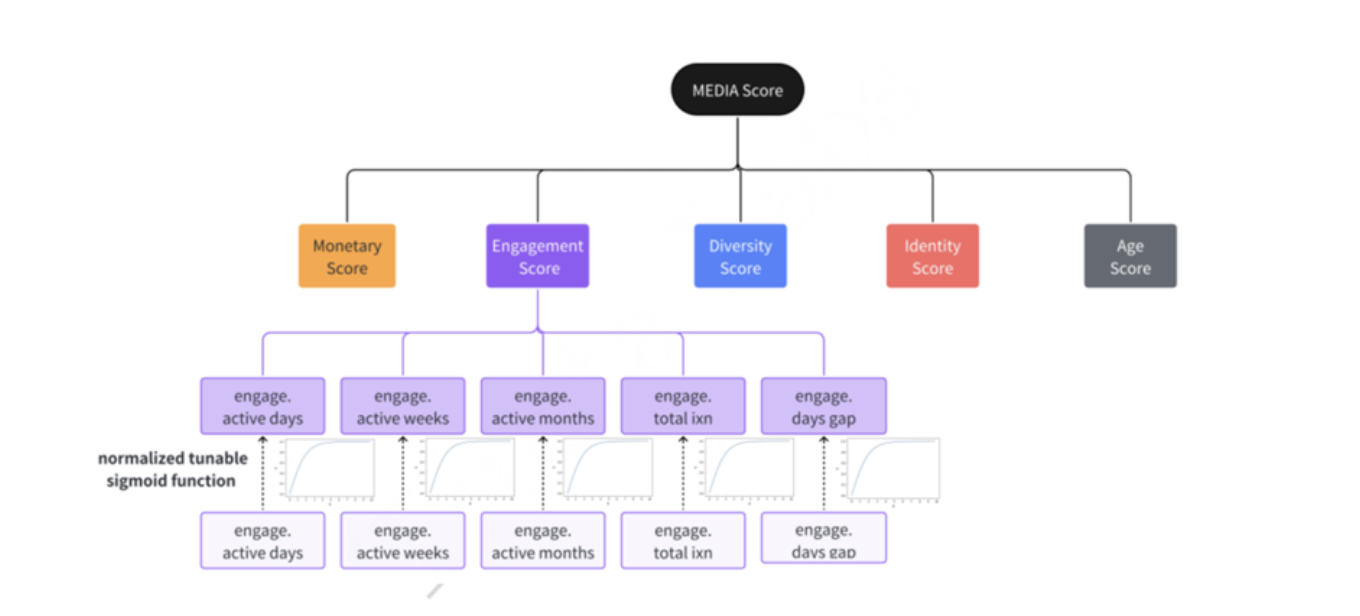

- The Trusta team first developed and validated the effectiveness of the MEDIA Score model in the “Proof of Humanity” scenario. This model comprehensively quantifies on-chain user profiles across five dimensions: Monetary, Engagement, Diversity, Identity, and Age.

The MEDIA Score is a fair, objective, and quantifiable on-chain user value assessment system. With its comprehensive evaluation dimensions and rigorous methodology, it has been widely adopted by leading public chains such as Celestia, Starknet, Arbitrum, Manta, and Linea as a key reference standard for airdrop eligibility screening. It not only focuses on transaction amounts but also encompasses multiple dimensions, including activity level, contract diversity, identity characteristics, and account age. This helps project teams accurately identify high-value users, improving the efficiency and fairness of incentive distribution, fully demonstrating its authority and widespread recognition in the industry.

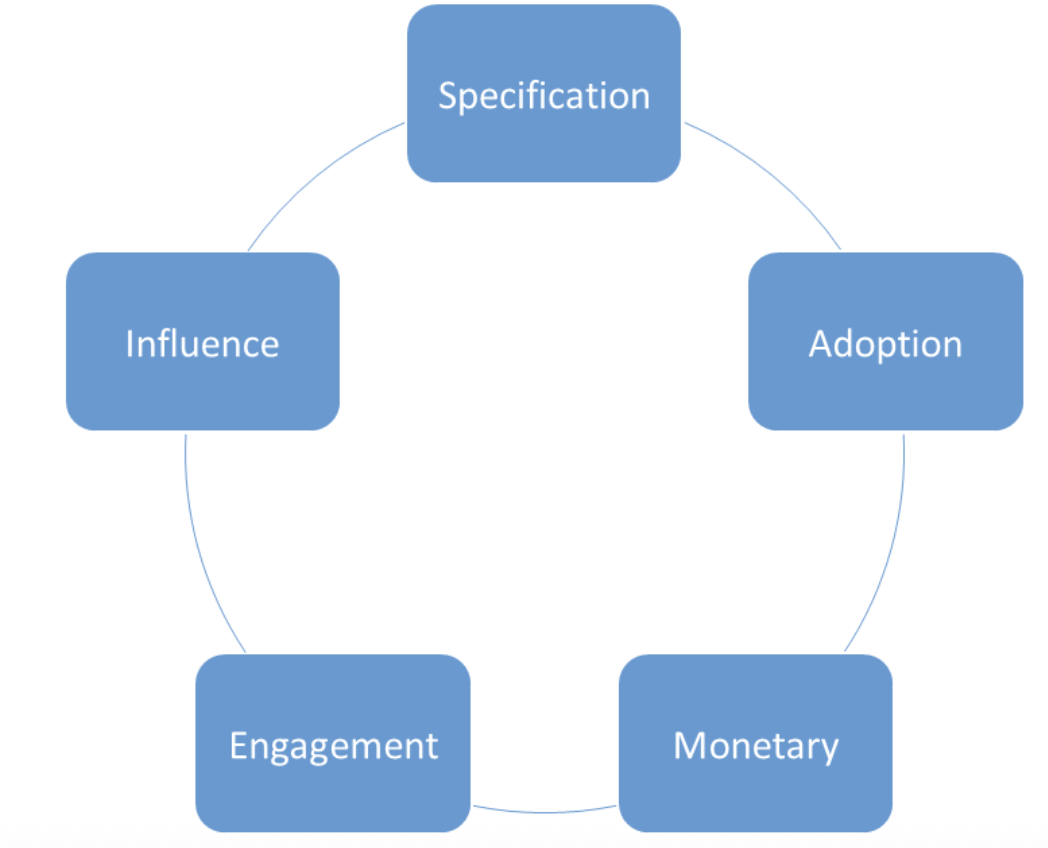

Building on the success of the human user assessment system, Trusta has adapted the MEDIA Score approach to the AI Agent scenario, establishing the SIGMA evaluation system, which is better aligned with the behavioral logic of intelligent agents.

- Specification: The agent's expertise and level of specialization.

- Influence: The agent's social and digital influence.

- Engagement: The consistency and reliability of its on-chain and off-chain interactions.

- Monetary: The financial health and stability of the agent's token ecosystem.

- Adoption: The usage frequency and efficiency of the AI Agent.

The SIGMA scoring mechanism constructs a logical closed-loop evaluation system from "capability" to "value" based on these five dimensions. While MEDIA focuses on assessing the multifaceted engagement of human users, SIGMA emphasizes the professionalism and stability of AI Agents in specific domains, reflecting a shift from breadth to depth that better matches the needs of AI Agents.

First, building on professional capability (Specification), Engagement reflects whether the agent consistently and reliably participates in practical interactions, serving as a key foundation for establishing subsequent trust and effectiveness. Influence represents the reputation feedback generated within communities or networks after participation, indicating the agent’s trustworthiness and dissemination impact. Monetary evaluates the agent’s ability to accumulate value and maintain financial stability within the economic system, laying the groundwork for sustainable incentive mechanisms. Finally, Adoption, as a comprehensive indicator, represents the degree of acceptance of the agent in actual use, serving as the ultimate validation of all prior capabilities and performance.

This system is structured progressively, with clear layers, capable of comprehensively reflecting the overall quality and ecological value of AI Agents, thereby enabling a quantified assessment of AI performance and value, transforming abstract quality into a concrete, measurable scoring system.
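To make the idea of a five-dimension score concrete, here is a minimal sketch of one way such a composite could be computed. The source does not publish the SIGMA formula, so the equal weights, the 0 to 1 input scale, and the 0 to 100 output scale are assumptions chosen purely for illustration.

```python
from dataclasses import astuple, dataclass
from typing import Sequence


@dataclass(frozen=True)
class SigmaInputs:
    # Each dimension is assumed to be pre-normalized to the range 0..1.
    specification: float
    influence: float
    engagement: float
    monetary: float
    adoption: float


# Hypothetical equal weighting; the real weights are not given in the source.
ASSUMED_WEIGHTS = (0.2, 0.2, 0.2, 0.2, 0.2)


def sigma_score(inputs: SigmaInputs,
                weights: Sequence[float] = ASSUMED_WEIGHTS) -> float:
    """Return a weighted composite on a 0..100 scale."""
    values = astuple(inputs)
    if len(weights) != len(values):
        raise ValueError("one weight per dimension is required")
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("each dimension must be in the range 0..1")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return 100.0 * sum(w * v for w, v in zip(weights, values))
```

A weighted sum is only the simplest option; the progressive structure described above might equally be modeled with gating, where a low Specification score caps the contribution of later dimensions.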

Currently, the SIGMA framework has established collaborations with well-known AI Agent networks such as Virtuals, ElizaOS, and Swarm, demonstrating its application potential in AI Agent identity management and reputation system development, and it is gradually becoming a core engine for building trustworthy AI infrastructure.

3.1.3 Identity Protection—Trust Assessment Mechanism

In a truly resilient and trustworthy AI system, establishing identity is not enough; identity must also be verified continuously. Trusta.AI introduces a continuous trust assessment mechanism that enables real-time monitoring of authenticated intelligent agents to determine whether they have been illegally controlled, attacked, or subjected to unauthorized human intervention. The system identifies potential deviations during agent operations through behavioral analysis and machine learning, ensuring that every agent's behavior remains within predefined strategies and frameworks. This proactive approach ensures immediate detection of any deviations from expected behavior and triggers automated protection measures to maintain the agent's integrity.

Trusta.AI has established an always-online security guard mechanism, continuously scrutinizing every interaction process to ensure all operations comply with system standards and predefined expectations.
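As a rough illustration of "detect a deviation, then adjust permissions," the sketch below scores a new observation against an agent's historical baseline with a simple z-score. It stands in for the machine learning models mentioned above, which the source does not specify; the `Permission` levels and both thresholds are hypothetical.

```python
from enum import Enum
from statistics import mean, pstdev
from typing import Sequence


class Permission(Enum):
    FULL = "full"
    RESTRICTED = "restricted"
    SUSPENDED = "suspended"


def assess(baseline: Sequence[float], observed: float,
           warn_z: float = 3.0, block_z: float = 6.0) -> Permission:
    """Map the deviation of one observation to a permission level.

    `baseline` holds past values of a single behavioral metric
    (for example, transaction size); thresholds are assumptions.
    """
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        # A perfectly constant history: any change is maximally unusual.
        z = 0.0 if observed == mu else float("inf")
    else:
        z = abs(observed - mu) / sigma
    if z >= block_z:
        return Permission.SUSPENDED
    if z >= warn_z:
        return Permission.RESTRICTED
    return Permission.FULL
```

A production system would track many metrics jointly and update the baseline over time; a single-metric z-score is used here only because it is easy to follow.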

3.2 Product Introduction

3.2.1 AgentGo

Trusta.AI assigns a Decentralized Identifier (DID) to each on-chain AI Agent and rates and indexes their trustworthiness based on on-chain behavioral data, building a verifiable and traceable AI Agent trust system. Through this system, users can efficiently identify and select high-quality intelligent agents, enhancing their user experience. Currently, Trusta has completed the collection and identification of AI Agents across the network, issuing them Decentralized Identifiers and establishing a unified indexing platform—AgentGo—further promoting the healthy development of the intelligent agent ecosystem.

- Human User Query and Identity Verification: Through the Dashboard provided by Trusta.AI, human users can conveniently retrieve an AI Agent's identity and reputation score to determine its trustworthiness.

  - Social Group Chat Scenario: When a project team uses an AI Bot to manage a community or make statements, community users can verify through the Dashboard whether the AI is a genuine autonomous agent, avoiding deception or manipulation by "pseudo-AI."

- AI Agent Automated Index Retrieval and Verification: AI Agents can directly access the indexing interface to quickly verify the identity and reputation of other agents, ensuring secure collaboration and information exchange.

  - Financial Regulation Scenario: If an AI Agent autonomously issues tokens, the system can directly index its DID and rating to determine whether it is an authenticated AI Agent, automatically linking to platforms like CoinMarketCap to assist in tracking asset flows and issuance compliance.

  - Governance Voting Scenario: When AI voting is introduced in governance proposals, the system can verify whether the initiating or participating voters are genuine AI Agents, preventing voting rights from being abused by human control.

  - DeFi Credit Lending: Lending protocols can grant AI Agents varying credit loan amounts based on the SIGMA scoring system, forming native financial relationships between intelligent agents.
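The lending scenario can be sketched as an index lookup followed by a score-to-limit mapping. Everything named below is hypothetical: the source does not describe AgentGo's interface, so an in-memory dictionary stands in for the index, and the tier boundaries and limits are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class IndexEntry:
    did: str
    verified: bool      # whether the agent holds an authenticated DID
    sigma_score: float  # assumed 0..100 scale


# (minimum score, credit limit in an arbitrary unit), highest tier first.
ASSUMED_TIERS = ((80.0, 10_000), (60.0, 2_500), (40.0, 500))


def credit_limit(index: Dict[str, IndexEntry], did: str) -> int:
    """Return the credit limit for an agent, or 0 if it is not eligible."""
    entry = index.get(did)
    # Unknown or unverified agents receive no credit at all.
    if entry is None or not entry.verified:
        return 0
    for min_score, limit in ASSUMED_TIERS:
        if entry.sigma_score >= min_score:
            return limit
    return 0
```

Checking verification before the score keeps the identity check and the reputation check separate, mirroring the "DID first, rating second" order in the scenarios above.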

The AI Agent DID is not merely an “identity” but a foundational support for building trustworthy collaboration, financial compliance, and community governance, becoming an essential infrastructure for the development of an AI-native ecosystem. With the establishment of this system, all verified secure and trustworthy nodes form a tightly interconnected network, enabling efficient collaboration and functional interconnectivity among AI Agents.

Based on Metcalfe's Law, the network's value grows roughly with the square of the number of connected agents, further promoting the construction of a more efficient, trust-based, and collaborative AI Agent ecosystem, achieving resource sharing, capability reuse, and continuous value appreciation among intelligent agents.
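Metcalfe's Law is usually stated in terms of pairwise connections, which gives quadratic growth. A short worked form, where $n$ (a symbol introduced here for illustration) is the number of verified agents and $V$ is the network's value:

$$
\text{links}(n) = \frac{n(n-1)}{2}, \qquad V(n) \propto n^{2}, \qquad \frac{V(2n)}{V(n)} = 4
$$

Under this reading, doubling the number of verified agents roughly quadruples the number of possible agent-to-agent connections.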

AgentGo, as the first trustworthy identity infrastructure for AI Agents, provides indispensable core support for building a highly secure and collaborative intelligent ecosystem.

3.2.2 TrustGo

TrustGo is an on-chain identity management tool developed by Trusta, providing scores based on interaction history, wallet "age," transaction volume, and transaction amount. Additionally, TrustGo offers parameters related to on-chain value rankings, helping users proactively improve their eligibility for airdrops and retroactive rewards.

The MEDIA Score is central to TrustGo's evaluation mechanism because it lets users self-assess their on-chain activity. The MEDIA Score evaluation system not only includes simple metrics like the number and amount of interactions with smart contracts, protocols, and dApps but also focuses on user behavior patterns. Through the MEDIA Score, users can gain deeper insights into their on-chain activities and value, while project teams can accurately allocate resources and incentives to users who genuinely contribute.

TrustGo is transitioning from the MEDIA mechanism for human identities to the SIGMA trust framework for AI Agents to meet the identity verification and reputation assessment needs of the intelligent agent era.

3.2.3 TrustScan

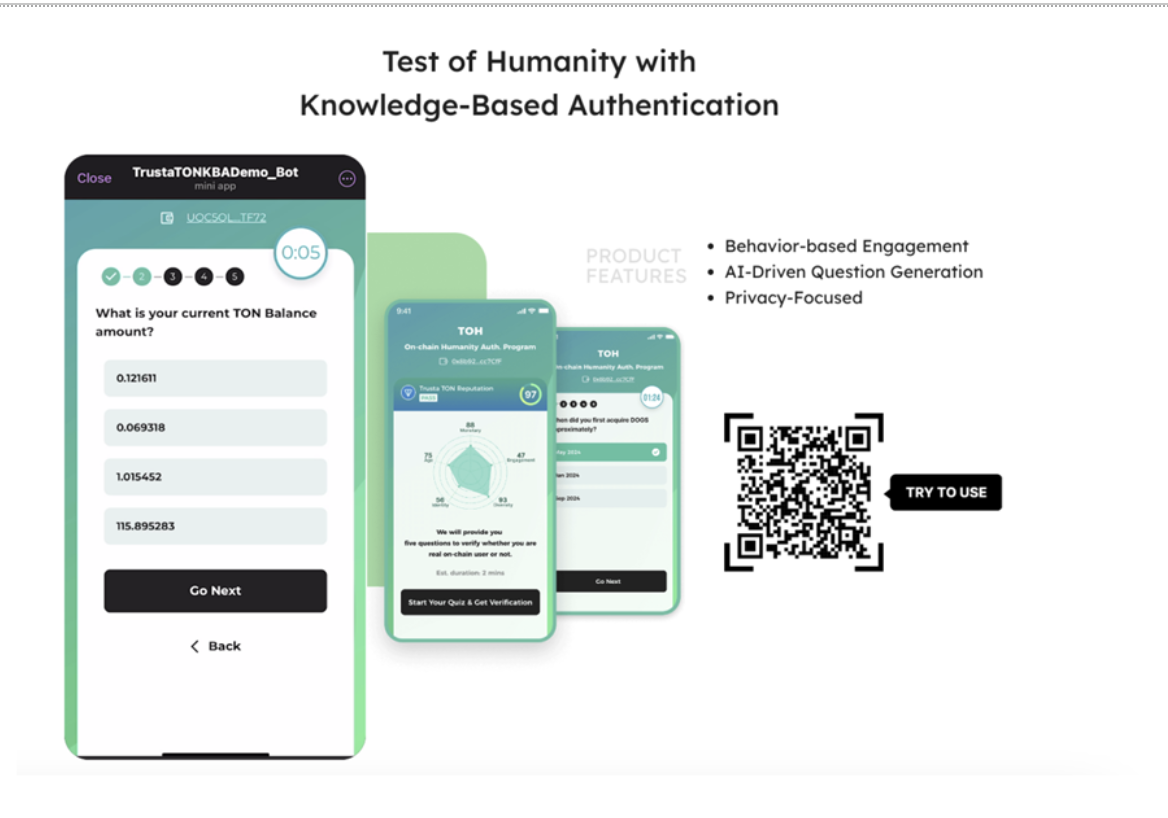

The TrustScan system is an identity verification solution designed for the new era of Web3, with the core goal of accurately identifying whether on-chain entities are humans, AI Agents, or Sybils. It employs a dual verification mechanism that combines knowledge-driven checks with behavioral analysis, emphasizing the critical role of user behavior in identity recognition.

TrustScan can also achieve lightweight human verification through AI-driven question generation and engagement detection, while ensuring user privacy and data security through a TEE environment, enabling continuous identity maintenance. This mechanism builds a “verifiable, sustainable, and privacy-protecting” foundational identity system.

With the large-scale rise of AI Agents, TrustScan is upgrading toward a more intelligent behavioral fingerprint recognition mechanism, which offers three major technical advantages:

- Uniqueness: Forming a unique behavioral pattern through user interaction paths, mouse trajectories, transaction frequency, and other behavioral characteristics.

- Dynamicity: The system can automatically recognize the temporal evolution of behavioral habits and dynamically adjust authentication parameters to ensure long-term identity validity.

- Concealment: Requiring no active user participation, the system can complete behavioral collection and analysis in the background, balancing user experience and security.

Additionally, TrustScan implements an anomaly detection system to promptly identify potential risks, such as malicious AI control or unauthorized operations, effectively ensuring the platform’s availability and resistance to attacks.
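One simple way to picture a behavioral fingerprint is as a numeric feature vector that is compared against an enrolled profile and slowly updated as habits drift. The sketch below uses cosine similarity and an exponential moving average; the source does not disclose TrustScan's actual features or model, so the feature layout, the `0.95` threshold, and the `0.1` update rate are assumptions.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def matches(profile: Sequence[float], session: Sequence[float],
            threshold: float = 0.95) -> bool:
    # Uniqueness: a session is accepted only if it closely
    # resembles the enrolled behavioral profile.
    return cosine_similarity(profile, session) >= threshold


def update_profile(profile: Sequence[float], session: Sequence[float],
                   rate: float = 0.1) -> List[float]:
    # Dynamicity: blend in each accepted session so the profile
    # follows gradual changes in behavior.
    return [(1 - rate) * p + rate * s for p, s in zip(profile, session)]
```

In use, a caller would typically run `matches` first and call `update_profile` only for accepted sessions, so that an impostor's behavior is never blended into the profile.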

Compared to traditional verification methods, the solutions introduced by Trusta.AI demonstrate significant advantages in security, recognition accuracy, and deployment flexibility.

- Low Hardware Dependency, Low Deployment Threshold: Behavioral fingerprints do not rely on specialized hardware such as iris scanning or fingerprint recognition. Instead, they model and identify users based on behavioral characteristics like clicks, swipes, and inputs during routine operations, significantly lowering the deployment threshold. This lightweight implementation not only enhances system adaptability but also makes it easier to integrate into various Web3 applications, particularly suited for identity verification needs in resource-constrained or multi-device environments.

- High Recognition Accuracy: Compared to traditional biometric methods like fingerprint or facial recognition, behavioral fingerprints combine high-dimensional behavioral data, such as operation path selection, click rhythm, and temporal frequency, to form a more nuanced, dynamic, and accurate recognition model.

- Highly Unique Behavioral Fingerprints, Difficult to Imitate: Behavioral fingerprints possess a high degree of uniqueness, as each user or AI Agent forms distinct behavioral characteristics in operation habits, interaction rhythms, and path selections. These characteristics are statistically difficult to replicate or forge, making behavioral fingerprints more resistant to fraud and more secure than traditional static credentials in identity recognition.

4. Token Model and Economic Mechanism

4.1 Token Economics

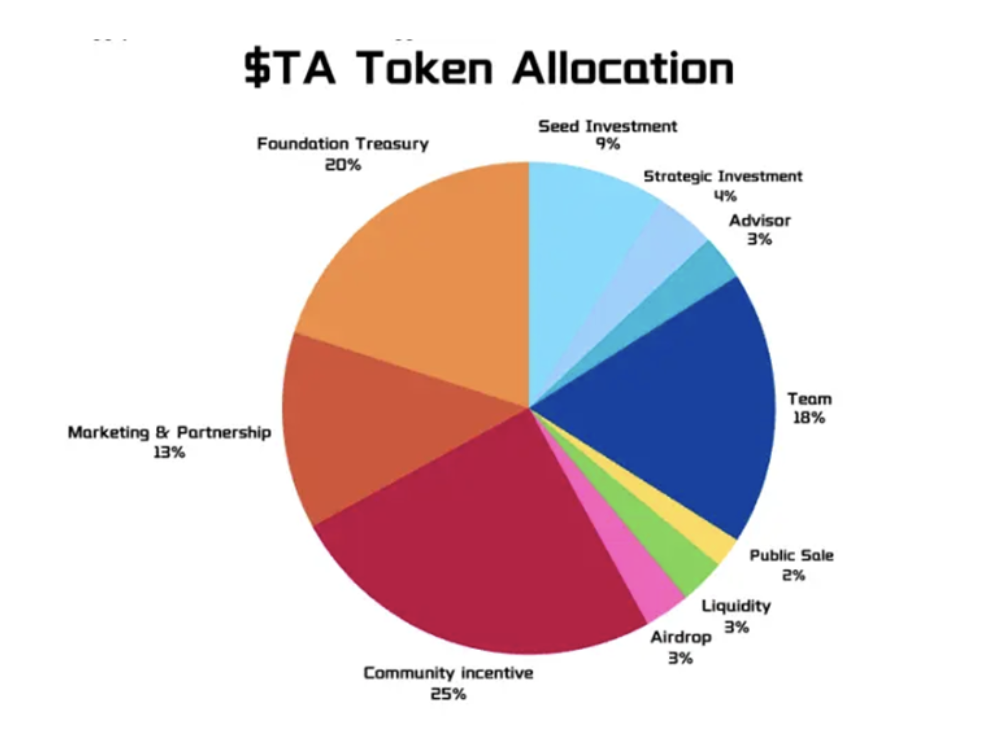

Ticker: $TA

Total Supply: 1 billion

- Community Incentives: 25%

- Foundation Reserve: 20%

- Team: 18%

- Marketing & Partnerships: 13%

- Seed Investment: 9%

- Strategic Investment: 4%

- Advisors, Liquidity, and Airdrops: 3% each

- Public Sale: 2%
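The percentages above can be turned into token amounts with a few lines of arithmetic. Note one assumption: the listed figures add up to 100% only if "Advisors, Liquidity, and Airdrops" is read as 3% for each of the three categories, so the sketch models them as separate entries. That reading is an inference from the arithmetic, not something the source states outright.

```python
from typing import Dict

TOTAL_SUPPLY = 1_000_000_000  # 1 billion $TA

ALLOCATION_PERCENT: Dict[str, int] = {
    "Community Incentives": 25,
    "Foundation Reserve": 20,
    "Team": 18,
    "Marketing & Partnerships": 13,
    "Seed Investment": 9,
    "Strategic Investment": 4,
    # Assumption: 3% each, which is what makes the total reach 100%.
    "Advisors": 3,
    "Liquidity": 3,
    "Airdrops": 3,
    "Public Sale": 2,
}


def token_amounts(total_supply: int,
                  percents: Dict[str, int]) -> Dict[str, int]:
    """Convert whole-number percentages into token counts."""
    if sum(percents.values()) != 100:
        raise ValueError("allocations must sum to 100%")
    # Integer arithmetic avoids floating-point rounding on large supplies.
    return {name: total_supply * pct // 100
            for name, pct in percents.items()}


if __name__ == "__main__":
    for name, amount in token_amounts(TOTAL_SUPPLY, ALLOCATION_PERCENT).items():
        print(f"{name}: {amount:,} $TA")
```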

4.2 Token Utility

$TA is the core incentive and operational engine of the Trusta.AI identity network, facilitating the value flow between humans, AI, and infrastructure roles.

4.2.1 Staking Utility

$TA serves as the “entry ticket” and reputation guarantee mechanism for the Trusta identity network:

- Issuers: Must stake $TA to obtain the authority to issue identity certifications.

- Verifiers: Must stake $TA to perform identity verification tasks.

- AI Infrastructure Providers: Including providers of data, models, and computing power, must stake $TA to qualify for network services.

- Users (Humans and AI): Can stake $TA to receive discounts on identity services and gain opportunities to share platform revenue.
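The role-based staking rules can be sketched as a simple gate: operator roles need a minimum stake before they may act, while users stake optionally in exchange for a discount. The minimum stakes, discount threshold, and discount rate below are invented for illustration; the source gives no concrete figures.

```python
from enum import Enum


class Role(Enum):
    ISSUER = "issuer"
    VERIFIER = "verifier"
    INFRA_PROVIDER = "infra_provider"
    USER = "user"


# Hypothetical minimum stakes in $TA for roles that must stake.
ASSUMED_MIN_STAKE = {
    Role.ISSUER: 10_000,
    Role.VERIFIER: 5_000,
    Role.INFRA_PROVIDER: 20_000,
}


def may_operate(role: Role, staked: int) -> bool:
    # Users have no required stake, so their minimum defaults to 0.
    return staked >= ASSUMED_MIN_STAKE.get(role, 0)


def user_fee(base_fee: int, staked: int,
             discount_threshold: int = 1_000,
             discount_percent: int = 10) -> int:
    """Apply the assumed staking discount to an identity service fee."""
    if staked >= discount_threshold:
        return base_fee * (100 - discount_percent) // 100
    return base_fee
```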

4.2.2 Payment Utility

$TA is the settlement token for all identity services within the network:

- End Users: Use $TA to pay for identity certification, proof generation, and other service fees.

- Scenario Providers: Use $TA to pay for SDK integration and API call fees.

- Issuers & Verifiers: Use $TA to pay infrastructure providers for costs related to computing power, data, and model usage.

4.2.3 Governance Utility

$TA holders can participate in Trusta.AI governance decisions, including:

- Voting on the project's future development direction.

- Voting on governance proposals for core strategies and ecosystem plans.

4.2.4 Mainnet Utility

$TA will serve as the gas token for the trusted identity network mainnet, used for transaction fees and related operations on the mainnet.

5. Competitive Landscape Analysis

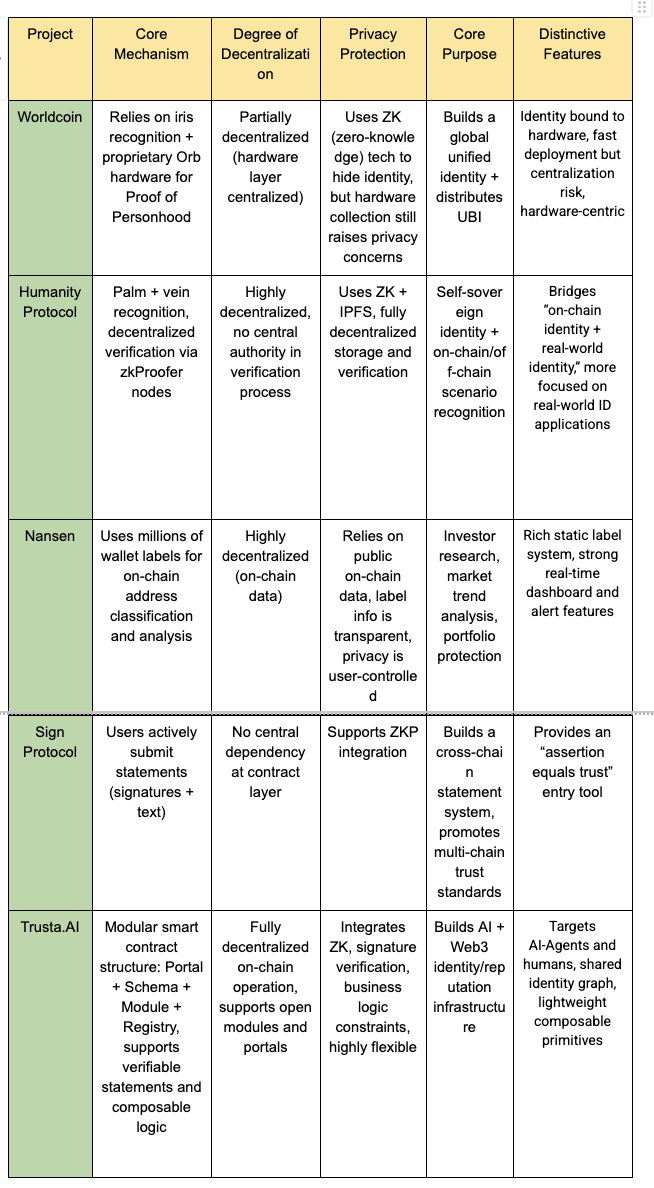

Decentralized Identity (DID) systems are evolving from static claims to dynamic trust. On one hand, projects like Worldcoin and Humanity Protocol focus on “Proof of Personhood (PoP)” as their core objective, emphasizing anti-Sybil attack measures and real-world identity verification. On the other hand, emerging on-chain attestation protocols like Sign Protocol approach the issue from a developer tools perspective, building universal authentication infrastructure.

Trusta.AI, in its architectural design, combines the claim layer with the trust layer, uniquely focusing on AI + Crypto scenarios, attempting to address a core question for next-generation DID systems:

How can on-chain identities remain "continuously trustworthy," especially in an era of rapidly rising AI agent systems?

5.1 Competitor Analysis

5.2 Trusta.AI’s Differentiated Positioning and Design Philosophy

Compared to existing protocols, Trusta.AI is not merely an identity tool protocol but an “identity operating system” designed for the future of AI + Web3 multi-agent systems. Its core advantages are reflected in the following three aspects:

5.2.1 Multi-Role Orientation: Supporting Dual Identity Construction for Human Users and AI Agents

Traditional identity protocols typically serve “humans,” whereas Trusta extends “identity” to any entity capable of generating behavior and transaction intent, including AI models, intelligent agents, and automated executors. AI Agents can obtain clear on-chain identities, behavioral records, and trust endorsements through Trusta, thereby becoming “native users” on-chain.

5.2.2 Composable, Verifiable, and Inheritable Attestation Architecture

Trusta introduces a modular design of Portal + Schema + Module, which lets any identity or behavioral proof combine its own logic and verification rules and be published on-chain in a standardized manner. This architecture is flexible enough to support simple "Proof of Humanity (PoH)" claims as well as complex reputation systems (such as TrustGo's MEDIA reputation scoring).
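A minimal sketch of how a Portal + Schema + Module split might fit together: the schema fixes the shape of a claim, modules carry pluggable verification rules, and the portal runs both before recording the attestation. The class and method names are hypothetical and the registry is an in-memory list rather than a chain; this illustrates the composition pattern, not Trusta's contracts.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Payload = Dict[str, Any]
Module = Callable[[Payload], bool]  # a pluggable verification rule


@dataclass(frozen=True)
class Schema:
    name: str
    fields: Dict[str, type]

    def validate(self, payload: Payload) -> bool:
        # The payload must have exactly the declared fields and types.
        return (payload.keys() == self.fields.keys()
                and all(isinstance(payload[key], expected)
                        for key, expected in self.fields.items()))


@dataclass
class Portal:
    schema: Schema
    modules: List[Module]
    registry: List[Payload] = field(default_factory=list)

    def attest(self, payload: Payload) -> bool:
        """Record the payload only if the schema and every module accept it."""
        if not self.schema.validate(payload):
            return False
        if not all(module(payload) for module in self.modules):
            return False
        self.registry.append(dict(payload))
        return True
```

A simple humanity claim would use a small schema with one or two modules, while a reputation score could reuse the same portal mechanics with a richer schema and more rules, which is the composability the paragraph above describes.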

5.2.3 Open Identity Data Lake, Empowering On-Chain Finance and Recommendation Systems

The Trusta Attestation Service (TAS) builds a "publicly verifiable dataset" that is not only used for identity but also supports applications such as DeFi risk control, credit lending, content recommendation, and DID login. Trusta's long-term vision is to provide a readable, trustworthy, and reconfigurable identity layer for the entire Web3 ecosystem.

Trusta.AI is building the most robust infrastructure in the realm of Web3 trusted identity and behavioral governance.

As the industry’s most technically advanced and comprehensive trust engine, Trusta.AI leverages leading machine learning and behavioral modeling technologies to pioneer continuous verification, dynamic scoring, and risk control integration for AI Agent behavior. It integrates three functional modules—on-chain claims, on-chain analytics, and human-AI recognition—and establishes a complete closed loop of “identity → behavior → trust → permissions” on this foundation.

Unlike other projects, whose functionality is fragmented and lacks dynamic feedback, Trusta.AI is one of the few in the Web3 ecosystem to have deployed an integrated trust system. It has been implemented across multiple chains (Solana, BNB Chain, Linea, etc.) and, through AgentGo, has validated its practical capabilities and commercial potential in AI Agent scenarios.

With the explosive demand for AI-native identity solutions, Trusta.AI is poised to become the trust foundation for the AI era. It is not only a “firewall” for trusted identity but also the “central brain” ensuring decision-making security, permission governance, and risk control in the intelligent agent ecosystem.

Trusta.AI is not just a "trustworthy" system but a "controllable, evolvable, and scalable" next-generation trust operating system.

As the demand for intelligent agents and identity verification continues to rise, Trusta.AI is driving the Web3 trust system into a new phase. Its integrated architecture combines on-chain claims, behavioral recognition, and AI-driven risk control, providing AI Agents with dynamic, precise, and sustainable identity verification and trust assessment mechanisms. Trusta.AI’s multi-chain compatibility, minimal hardware dependency deployment, and forward-looking machine learning architecture not only bridge the gap between traditional human identity systems and AI agent governance but also reshape the trust paradigm for on-chain interactions.

However, the AI Agent-dominated on-chain future remains full of uncertainties: Can trust mechanisms truly evolve autonomously? Will AI become a new point of centralization risk? How will decentralized governance accommodate these uncertain autonomous intelligent agents? These questions will determine the order and direction of the future on-chain society.

In this systemic competition with “trust” as the underlying logic, Trusta.AI has pioneered an integrated closed-loop framework from identity recognition and behavioral assessment to dynamic control, building the industry’s first truly AI Agent-oriented trusted execution framework. Whether in technical depth, system completeness, or practical deployment outcomes, Trusta.AI stands at the forefront of its peers, leading the new paradigm of on-chain trust mechanisms.

But this is just the beginning. With the accelerated expansion of the AI-native ecosystem, on-chain identity and trust mechanisms are entering a new cycle of rapid evolution. In the future, governance, collaboration, and even value distribution will undergo intense restructuring around “trusted intelligent agents.” Trusta.AI stands at the forefront of this paradigm shift, not merely as a participant but as a definer of its direction.