In recent months, there has been a resurgence in the usage of the term “web3”: a nebulous term that (like it or hate it) has suddenly become a banner for much of the innovation happening in the space of blockchain and distributed ledger technology. The term has been around for a while (since 2014 when Gavin Wood originally coined it), but now it seems to have, for better or worse, reached critical mass.

With this has come a large amount of discourse and criticism. The discourse has split almost perfectly in two. On one side are the “blockchain likers”, a broad group ranging from dogmatic “moon boy” traders to seasoned cryptographers like Dan Boneh who work on the core technology of the field. On the other side are the “blockchain dislikers”: people like Stephen Diehl (who himself actually works on a private blockchain), who consistently produce highly reflexive, reactionary, and dismissive critiques.

These critiques are usually unmoored from anything actually happening in the blockchain space, and unaware of its technological development and cultural vision. This bifurcation is extremely harmful to the health of the emerging technologies: these technologies are not going to go away, so if your only answer is dismissal and anger, you are just ceding the power to decide what these technologies will look like in our world. This pattern of discourse creates a negative-sum feedback loop that does a disservice to all.

Don't fight forces, use them

Buckminster Fuller

Recently, Moxie Marlinspike (of sslstrip and Signal/TextSecure fame) published a blog post titled “My first impressions of web3”. In it, he details his experience diving into some “web3” tech: building NFTs on Ethereum, and using the associated client-side RPCs to interact with the chain. Moxie raises a number of important criticisms of the current state of the Ethereum ecosystem, and of web3 more broadly. This is very productive criticism that does not fall into the traps of reflexivity previously mentioned (“it’s all a ponzi!”, etc.).

I wanted to highlight some of these criticisms, along with ways I think the community can heed them and improve on the status quo.

On Clients, Servers, Full Nodes, and User Laziness

One of the first major points Moxie makes:

People don’t want to run their own servers, and never will. The premise for web1 was that everyone on the internet would be both a publisher and consumer of content as well as a publisher and consumer of infrastructure.

We’d all have our own web server with our own web site, our own mail server for our own email, our own finger server for our own status messages, our own chargen server for our own character generation. However – and I don’t think this can be emphasized enough – that is not what people want. People do not want to run their own servers.

Even nerds do not want to run their own servers at this point. Even organizations building software full time do not want to run their own servers at this point. If there’s one thing I hope we’ve learned about the world, it’s that people do not want to run their own servers. The companies that emerged offering to do that for you instead were successful, and the companies that iterated on new functionality based on what is possible with those networks were even more successful.

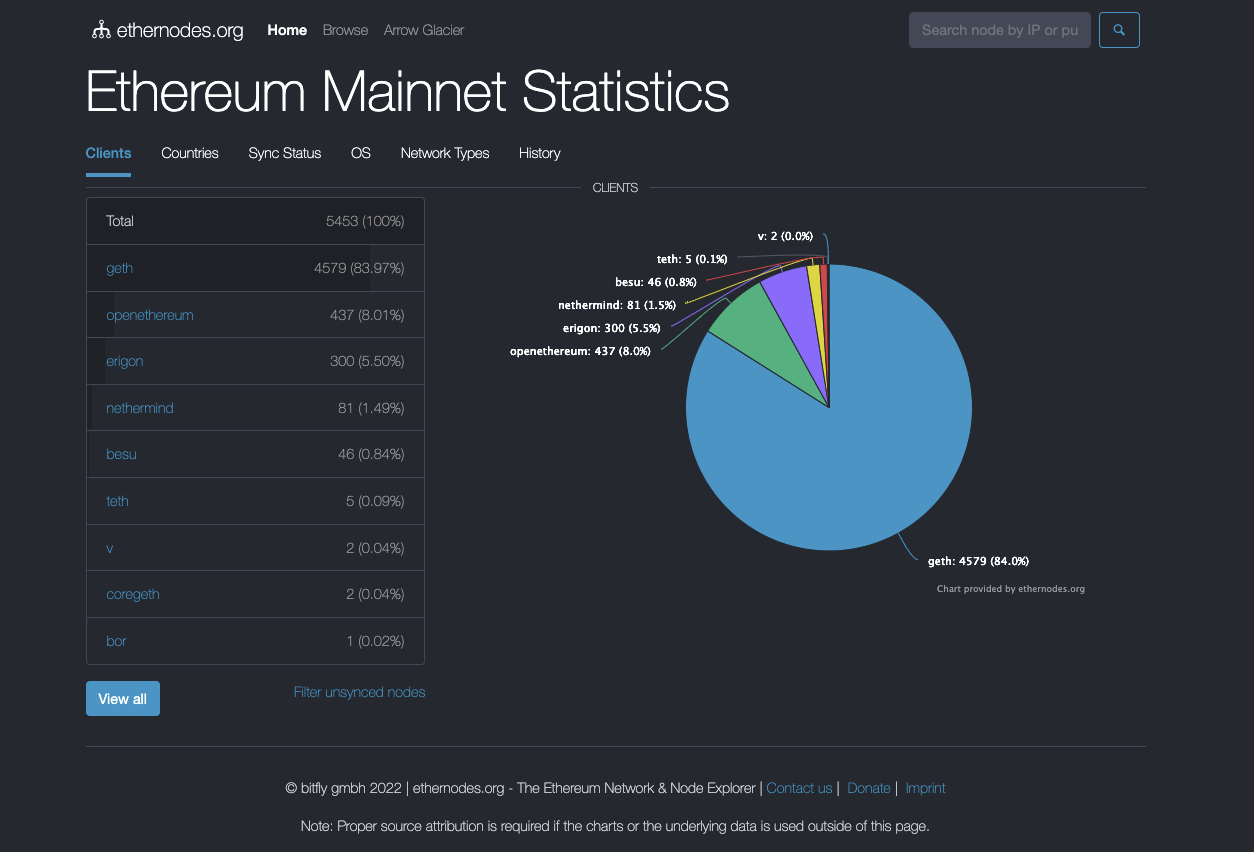

This is an important, and I think very accurate, observation. It is also borne out by the empirical data. According to ethernodes.org, the current number of Ethereum full nodes is around 5500. If even just the Ethereum nerds on Twitter each ran their own node, this number would be much higher.

This point is also well-taken within much of the blockchain community. Vitalik Buterin famously called the idea that everyone would run their own node a “weird mountain man fantasy”.

On Infrastructure Providers

Later in the piece, Moxie mentions the current state of Web3 infrastructure providers:

For example, whether it’s running on mobile or the web, a dApp like Autonomous Art or First Derivative needs to interact with the blockchain somehow – in order to modify or render state (the collectively produced work of art, the edit history for it, the NFT derivatives, etc). That’s not really possible to do from the client, though, since the blockchain can’t live on your mobile device (or in your desktop browser realistically). So the only alternative is to interact with the blockchain via a node that’s running remotely on a server somewhere.

A server! But, as we know, people don’t want to run their own servers. As it happens, companies have emerged that sell API access to an ethereum node they run as a service, along with providing analytics, enhanced APIs they’ve built on top of the default ethereum APIs, and access to historical transactions. Which sounds… familiar. At this point, there are basically two companies. Almost all dApps use either Infura or Alchemy in order to interact with the blockchain. In fact, even when you connect a wallet like MetaMask to a dApp, and the dApp interacts with the blockchain via your wallet, MetaMask is just making calls to Infura!

These client APIs are not using anything to verify blockchain state or the authenticity of responses. The results aren’t even signed. An app like Autonomous Art says “hey what’s the output of this view function on this smart contract,” Alchemy or Infura responds with a JSON blob that says “this is the output,” and the app renders it.

This was surprising to me. So much work, energy, and time has gone into creating a trustless distributed consensus mechanism, but virtually all clients that wish to access it do so by simply trusting the outputs from these two companies without any further verification. It also doesn’t seem like the best privacy situation. Imagine if every time you interacted with a website in Chrome, your request first went to Google before being routed to the destination and back. That’s the situation with ethereum today. All write traffic is obviously already public on the blockchain, but these companies also have visibility into almost all read requests from almost all users in almost all dApps.

This highlights a crucial infrastructural vulnerability in the way that current dApps are constructed, and I urge web3/crypto people to heed this part of the criticism. It feels similar to when Moxie pointed out that HTTPS connections could be downgraded to HTTP: a systemic risk that the developer community had accepted, but that was never adequately explained to users and was, in fact, unacceptable. It is very important that we address this issue, and the good news is that we already have the tools to do so.
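To make the trust problem concrete, here is a minimal sketch of the read path Moxie describes. It assumes the standard Ethereum JSON-RPC `eth_call` method; the function names, the placeholder contract address, and the calldata are hypothetical and chosen only for illustration. No network request is made: the point is that nothing in the response lets the client tell an honest answer from a forged one.

```python
import json

def build_eth_call(contract_address: str, calldata: str, request_id: int = 1) -> str:
    """Build the JSON-RPC body a dApp would POST to its infrastructure provider."""
    payload = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_call",
        # Ask for the output of a view function at the latest block.
        "params": [{"to": contract_address, "data": calldata}, "latest"],
    }
    return json.dumps(payload)

def read_result_unverified(response_body: str) -> str:
    """Return whatever the provider put in "result".

    There is no signature and no proof to check here, so the client
    simply trusts the provider's answer.
    """
    return json.loads(response_body)["result"]

# Placeholder address and calldata (hypothetical, for illustration only).
request = build_eth_call("0x" + "00" * 20, "0x12345678")

# An honest response and a forged one have exactly the same shape,
# and the client accepts both without complaint.
honest = '{"jsonrpc": "2.0", "id": 1, "result": "0x2a"}'
forged = '{"jsonrpc": "2.0", "id": 1, "result": "0xff"}'
print(read_result_unverified(honest))
print(read_result_unverified(forged))
```

Both responses are rendered as-is, which is exactly the gap that state verification is meant to close.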

On NFT Metadata and User Communication

Another criticism prominently featured in the piece is centered around NFTs. Specifically, NFTs which are intended to represent some image content (like the infamous Apes).

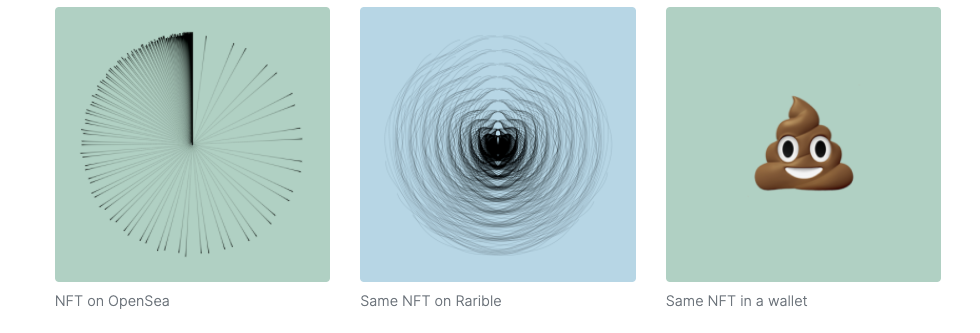

Instead of storing the data on-chain, NFTs instead contain a URL that points to the data. What surprised me about the standards was that there’s no hash commitment for the data located at the URL. Looking at many of the NFTs on popular marketplaces being sold for tens, hundreds, or millions of dollars, that URL often just points to some VPS running Apache somewhere. Anyone with access to that machine, anyone who buys that domain name in the future, or anyone who compromises that machine can change the image, title, description, etc for the NFT to whatever they’d like at any time (regardless of whether or not they “own” the token). There’s nothing in the NFT spec that tells you what the image “should” be, or even allows you to confirm whether something is the “correct” image.

So as an experiment, I made an NFT that changes based on who is looking at it, since the web server that serves the image can choose to serve different images based on the IP or User Agent of the requester. For example, it looked one way on OpenSea, another way on Rarible, but when you buy it and view it from your crypto wallet, it will always display as a large 💩 emoji. What you bid on isn’t what you get. There’s nothing unusual about this NFT, it’s how the NFT specifications are built. Many of the highest priced NFTs could turn into 💩 emoji at any time; I just made it explicit.

This is another important criticism. While it is true that this metadata field could contain a content-addressed data blob (like a Skynet link or an IPFS link), the fact is that in many cases it doesn’t. And this is bad. It represents not only a failure to build tools that ship with sane defaults (like content-addressed data), but also a failure of wallet technologies and infrastructure to communicate to users the contents of the on-chain metadata that they are controlling.
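As a rough illustration of what “communicating the metadata to users” could look like, the sketch below classifies a token’s metadata URI by its scheme. The function name, the category labels, and the set of schemes treated as content-addressed are assumptions made for this example, not part of any NFT standard or wallet API.

```python
from urllib.parse import urlparse

# Assumption: schemes treated as content-addressed for this sketch.
# A real wallet would need a vetted list and would still have to verify
# the fetched bytes against the address.
CONTENT_ADDRESSED_SCHEMES = {"ipfs"}
# data: URIs embed the content directly in the on-chain string.
EMBEDDED_SCHEMES = {"data"}

def describe_token_uri(uri: str) -> str:
    """Return a coarse, user-facing label for an NFT metadata URI."""
    scheme = urlparse(uri).scheme.lower()
    if scheme in CONTENT_ADDRESSED_SCHEMES:
        return "content-addressed"
    if scheme in EMBEDDED_SCHEMES:
        return "embedded on-chain"
    # Anything else (for example a plain https URL) can change at any time.
    return "mutable: whoever controls the server controls the content"

# Hypothetical URIs, for illustration only.
print(describe_token_uri("https://example.com/nft/1.json"))
print(describe_token_uri("ipfs://examplecid/1.json"))
```

Note that an HTTPS gateway URL pointing at IPFS content is deliberately labeled mutable here, since the gateway is trusted unless the client checks the hash itself.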

The Path Forward: Infrastructure Providers

When Moxie revealed sslstrip in 2009, it highlighted a number of key infrastructural oversights in the way that TLS was deployed. These were not protocol vulnerabilities per se; rather, it was a way that TLS was broken in practice by exploiting the user’s expectations. When an https link is downgraded to http, the typical user has absolutely no reason to think anything went wrong. Yes, it wasn’t a break in ECDH or RSA, but in practice it was a complete break of TLS regardless. HTTP Strict Transport Security was developed afterwards, and successfully addressed this situation.

I view the issues pointed out in the article similarly. While it’s true that you can run your own node, that you can inspect and mutate your data if your infrastructure provider censors you, and that you can mint NFTs securely, the commonly deployed software simply does not provide sufficient default protection for the user in the average case.

So, where does that leave us? Should we force users into the “mountain man fantasy”? As Moxie mentions, the blockchain is too large to fit on your mobile device. Should we build more infrastructure providers? What is our HTTP Strict Transport Security?

If we accept (as I believe that we should) Moxie’s point that in the average case users are not going to run nodes, then we are faced with the following extant problems with the infrastructure provider:

- Infrastructure providers can lie to the user about blockchain state, and the user’s client will have no way to discern that they have been lied to.

- Infrastructure providers can spy on their users, recording their linked addresses and their trading or NFT activity.

I’ll describe two methods that, in the near term and with minimal R&D, could safely address both of these concerns.

State Verification: Light Clients

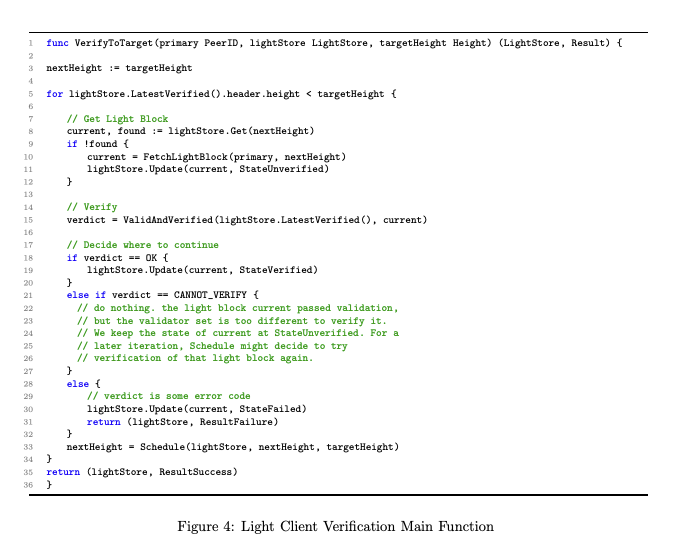

On point one, the blockchain community has known the solution for quite some time: light clients, which track block headers, verify state commitments, and verify inclusion proofs for state fragments sent by the infrastructure provider. Light clients can easily run on cheap commodity devices (like mobile phones) and require minimal storage, bandwidth, and computation.
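The inclusion-proof step can be sketched with a simple binary Merkle tree. This is a minimal illustration under stated assumptions: SHA-256 with one-byte domain-separation prefixes and duplication of the last node on odd levels are choices made for this example, and real chains use their own structures (Ethereum, for instance, commits to state with a Merkle-Patricia trie). The function names are hypothetical.

```python
import hashlib
from typing import List, Tuple

def _leaf_hash(data: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + data).digest()

def _node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()

def _next_level(level: List[bytes]) -> List[bytes]:
    return [_node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]

def merkle_root(leaves: List[bytes]) -> bytes:
    """Root commitment over a non-empty list of leaves (what a header would carry)."""
    level = [_leaf_hash(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate last node on odd-sized levels
        level = _next_level(level)
    return level[0]

def merkle_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Provider side: sibling hashes from leaf to root, with a 'sibling is left' flag."""
    level = [_leaf_hash(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof

def verify_inclusion(leaf: bytes, proof: List[Tuple[bytes, bool]], trusted_root: bytes) -> bool:
    """Light-client side: recompute the root from the leaf and the proof."""
    node = _leaf_hash(leaf)
    for sibling, sibling_is_left in proof:
        node = _node_hash(sibling, node) if sibling_is_left else _node_hash(node, sibling)
    return node == trusted_root

# The client only holds the root (taken from a verified block header).
fragments = [b"balance:alice=5", b"balance:bob=7", b"owner:nft42=alice"]
root = merkle_root(fragments)
proof = merkle_proof(fragments, 2)
print(verify_inclusion(b"owner:nft42=alice", proof, root))    # honest fragment
print(verify_inclusion(b"owner:nft42=mallory", proof, root))  # forged fragment
```

The client stores only the root, yet a provider cannot substitute a different fragment without the recomputed root failing to match.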

Light clients are a conceptually well-explored domain, dating back to “Simplified Payment Verification” in the original Bitcoin whitepaper and commonly implemented in the Bitcoin ecosystem. Mature light clients exist for Tendermint and have been proven out by their use in the IBC protocol, which transfers millions in value across chains and depends on the integrity protection and authentication that light clients provide. Similar light client efforts exist for the Ethereum ecosystem, though they are not deployed in common end-user wallets like MetaMask.

Adopting light clients in user-facing wallets, like Rainbow, MetaMask, and others, will address point number one through strong cryptography. In the light client model, the infrastructure provider can no longer lie about the blockchain state.

Privacy: Message Detection

On point two, we have a few options. One is to have the infrastructure provider use some sort of secure enclave (like SGX, as used for some features in Signal) to handle incoming user queries about the current chain state. Assuming that SGX is not broken, the user’s privacy should be maintained. However, SGX is often broken, and there are likely to be data flow problems with this approach (such as aggregate access pattern leakage).

Another approach is to use cryptography to hide the user’s state fragments inside a larger set of state fragments that are unrelated to the user’s state. One such approach, called fuzzy message detection, was recently formalized. The underlying problem is relevant in many privacy-preserving cryptocurrency networks, but the technique could be generalized to allow users to hide their state fragments from their infrastructure provider, without relying on any trusted hardware like SGX. Penumbra is an example of a chain which is planning to build light client infrastructure based on this approach.
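A back-of-the-envelope calculation shows why this hides the user. The symbols below are introduced only for this illustration: $N$ is the number of state fragments the provider scans, $k$ is the number that truly belong to the user, and $p$ is a false-positive rate chosen by the user. It assumes that every true match is detected and that each unrelated fragment matches independently with probability $p$.

$$
\mathbb{E}[\text{matches}] = k + p\,(N - k),
\qquad
\text{fraction of matches that are real} \approx \frac{k}{k + p\,(N - k)}
$$

With, say, $N = 10{,}000$, $k = 5$, and $p = 2^{-5}$, the provider sees about $5 + 9995/32 \approx 317$ matches, of which only about $1.6\%$ actually belong to the user. A larger $p$ buys more cover at the cost of more bandwidth for the client.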

With Message Detection and Light Client Verification

With light client verification, the infrastructure provider can no longer lie about the inclusion of a particular state fragment in the most recent state. With message detection, users can preserve their privacy against the infrastructure provider. Where does that leave us for infrastructure provider trust, compared to running a full node?

- The infrastructure provider can refuse to deliver state fragments to our wallet

This is a much improved situation, and the remaining problem could itself be addressed through cryptoeconomic data availability games like those used in Sia or Celestia.

The Path Forward: NFT Metadata

On the other point made by Moxie, that NFT metadata is often not actually useful for what the user expects it to be useful for, I believe that we have a few options in the near term.

First, we should have better transparency tools so that users know the metadata contents of the NFTs that they are buying or minting. Platforms like OpenSea and wallets like Rainbow and MetaMask should give visibility into the metadata of these NFTs, and there should be a standard way to verify whether a JPEG NFT actually contains a hash of its JPEG (whether that hash is an IPFS link, a Skynet link, or something else).

Second, we should educate users and developers on the importance of having some content-addressed hash in their metadata, if the NFT is intended to be an image of some kind.

Third, wallet software should be able to verify JPEG checksums in NFTs, and throw an error if the data returned by the data availability provider doesn’t match. The checksums themselves will be protected by the integrity protection granted by the light client.

Fourth, we should use cryptoeconomically-incentivized data availability providers. IPFS is a start, but it doesn’t actually provide cryptoeconomic data availability: it relies on pinning providers to optimistically pin data. Skynet and Arweave, on the other hand, use a strong cryptoeconomic game to incentivize data availability, use a content-addressing system similar to that of IPFS, and work today.
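The checksum verification described in the third point can be sketched as follows. This is a minimal illustration, assuming the on-chain metadata commits to a plain hex-encoded SHA-256 digest of the image bytes; real content-addressing schemes such as IPFS CIDs use their own encodings, and the function and exception names here are hypothetical.

```python
import hashlib

class MetadataMismatch(Exception):
    """Raised when fetched image bytes do not match the committed digest."""

def verify_image(image_bytes: bytes, committed_sha256_hex: str) -> bytes:
    """Return the image only if its SHA-256 digest matches the commitment."""
    actual = hashlib.sha256(image_bytes).hexdigest()
    if actual != committed_sha256_hex.lower():
        raise MetadataMismatch(
            f"expected {committed_sha256_hex.lower()}, got {actual}"
        )
    return image_bytes

# The digest would come from on-chain metadata (itself verified by the
# light client); the bytes would come from the data availability provider.
original = b"stand-in bytes for the real JPEG"
commitment = hashlib.sha256(original).hexdigest()

verify_image(original, commitment)  # passes silently
try:
    verify_image(b"a different image served later", commitment)
except MetadataMismatch as err:
    print("refusing to render:", err)
```

With a check like this in the wallet, an image swapped on the server after minting produces an error instead of being displayed.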

There could be interesting standards work here in specifying an “ERC721-JPEG” format which better supports this type of non-malleable NFT image encoding.

The Further Future: zk-SNARKs

zk-SNARKs have been an important pillar of blockchain-related research for the last few years. Although the underlying concepts come from academic cryptography dating back to the late 1980s, blockchain technology has revived interest in zk-SNARKs and related proof systems due to their potential applications to privacy, scalability, and more.

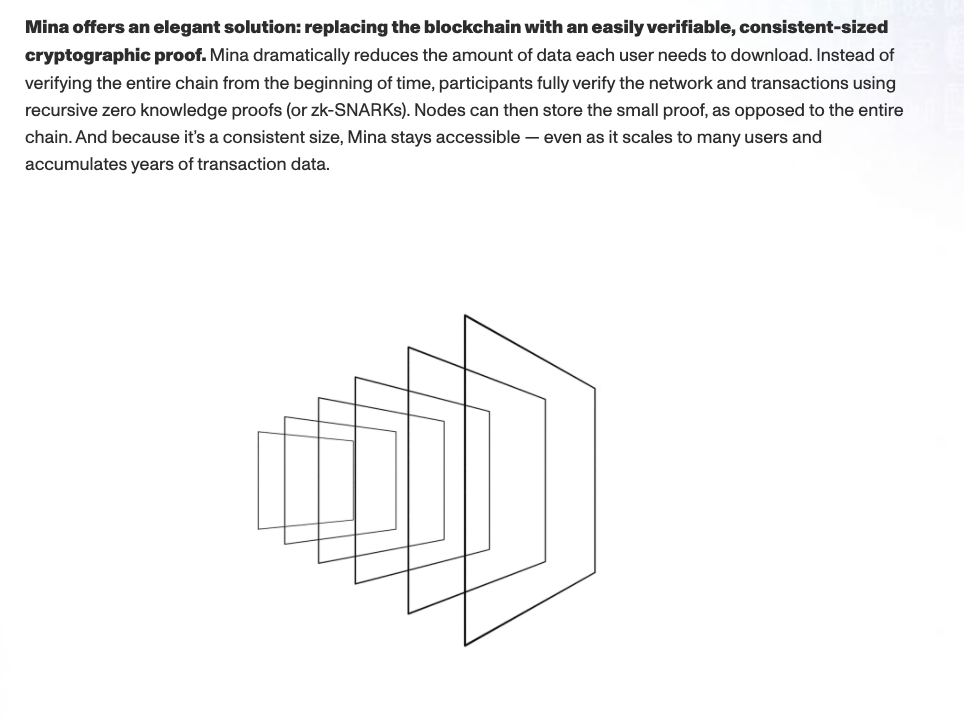

Using zk-SNARKs, it is actually possible for commodity devices to verify the entire chain state, including the semantics of every state transition. Mina uses this architecture today, and by using recursive zk-SNARKs achieves a state size of around 22 kB. An architecture like this could fill a similar security role to that of light clients, with the added benefit of allowing everyday users to run a fully verifying node on their commodity devices.

Similarly, zk-SNARKs could be used to prove facts about computation related to NFT metadata.

Overall Thoughts

Overall, I really enjoyed Moxie’s post. I think it does a great job highlighting some important issues in the infrastructure build-out of what is (perhaps begrudgingly) called “web3”. I am hopeful that the community can take the criticism constructively, and build safer, more reliable, less centralized, and less trusted infrastructure as a result. It was very refreshing to see a measured, accurate, and non-hyperbolic critique of a space that has attracted a lot of misinformed or bad-faith criticism over the years. I hope that we see more productive criticism of these technologies going forward, criticism that helps us all build a better world.