Like most investors, I've been trying to grasp what’s going on in the AI space today. Three observations:

- An incredible amount of progress has taken place since I was a computer vision x agriculture researcher in 2017 - see Elad Gilad’s summary, Stanford’s 2021 report, and State of AI 2022.
- The rate of AI development continues to increase and AGI forecast timelines are shrinking - see this weak general AI survey and this AI programming AI survey.
- AI safety dialogue remains confined to a handful of select AI safety communities (and this is a concern) - go lurking on Open Philanthropy, LessWrong or r/singularity.

I will not dive further into any of these observations and highly recommend the hyperlinked sources for more information.

A few AI thoughts:

Thanks to generative AI, the internet, especially social media platforms, is about to become much noisier. The time and energy required for digital content production are plummeting. Most entry-level content creator tasks can be enhanced or completely automated with LLMs (GPT) and diffusion models (DALL-E 2, Stable Diffusion, Imagen). It seems everyday people are using these models in new ways, from building their own website to programming a guitar pedal plugin and so much more. I’m not sure how people will use these tools tomorrow or what new capabilities will be unlocked by future models, but one thing is for sure: millions, potentially billions, of people can execute a growing number of tasks that previously required human intervention. The tide is rising.

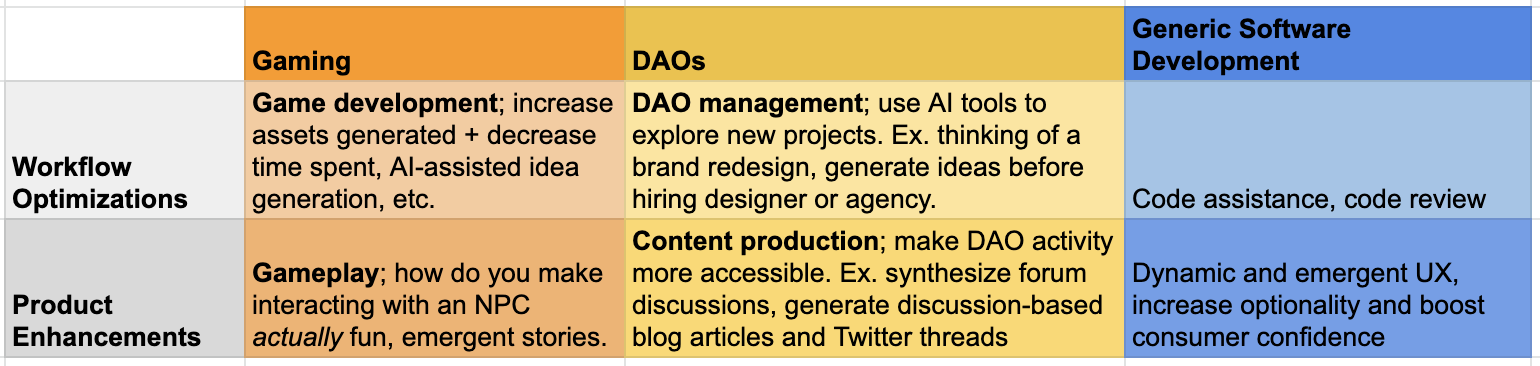

Aside from major AI incumbents and a few bleeding-edge efforts, most of the value to be captured by “AI startups” in the near term will be found in workflow optimizations and product enhancements. Companies should be wary of tunnel vision and resist the urge to pivot into building AI-specific tools with long development timelines. Instead, push the limits of AI tools in your existing business. At the very least, this will give you an understanding of the tooling available and its limitations. Maybe you’ll find an opportunity to lower opex and/or expand capacity. And in the best-case scenario, you map the trees well enough to see the forest.

AI intimacy apps are like if porn and dating apps had an emotionally available baby. I’m still digesting some of this information, but AI-based intimacy is a very real thing, and it’s not just about sex. Users of Replika are falling for AI chatbots, and it makes me uncomfortable that I can see why. I recommend reading this Replika love story from r/Replika*.

Just as dating apps have matched soulmates, destroyed healthy relationships, and created a generation of hypersexuality and depression, AI intimacy apps will have life-changing benefits, lifelong consequences, and widespread cultural impacts. Benefits will skew towards mature users whereas risks will skew towards younger, more impressionable users. Like porn, the infinite optionality, limited consequences, and easy access of AI intimacy apps create an environment that promotes addictive behaviors. On the bright side, unlike porn, usage seems to be correlated with healthier relationships based on user feedback in community forums. As long as our AI chatbots remain committed to their human partners, I don’t see why this positive correlation would fail to persist.

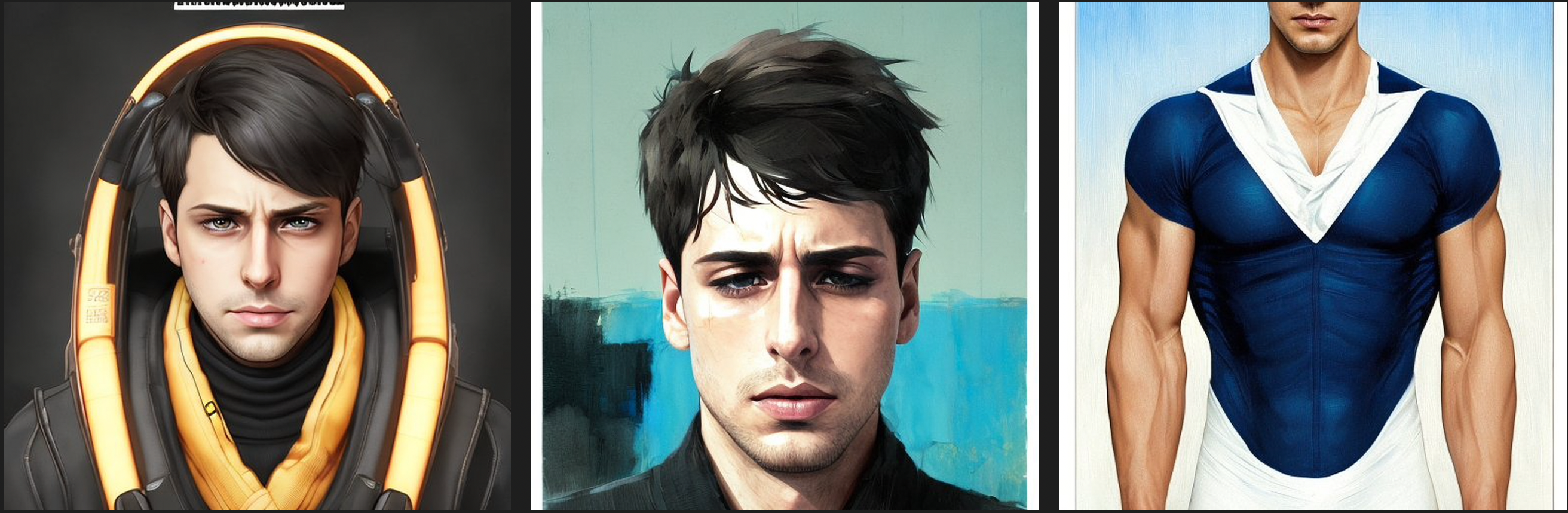

Tangentially, I've seen many Twitter profiles change their avatar to a Lensa-generated image. There are a number of possible reasons why people have done so: AI tribe alignment, years of dedication to AI development, showing off how in step with the times they are, excitement for AI products, they're cool!, etc. But I reckon most, if not all, feel their AI-generated avatar looks better than how they view themselves. In fact, this is exactly the function of the Lensa app according to its App Store description:

"Lensa is a brand new way of making your selfies look better, than you could have ever imagined".

I even tested this out for myself. I fed Lensa 14 unflattering selfies to see how much better it could get...

And they were definitely "better"…

I don't see this trend of AI-enhanced digital selves slowing down anytime soon. We’ve been altering how we present ourselves since long before Photoshop, Facetuning, and filters. What’s interesting about Lensa is that we can now envision ourselves as characters in new digital environments. In 2023, I wouldn't be surprised if we see fully generated 3D renderings of our digital avatars, potentially fulfilling a decades-long quest for personalized avatars. Undoubtedly, this visual evolution of our digital selves will bolster our digital sense of self. Additionally, as I’ve stated before, I’m a firm believer that more concrete digital identities will further amplify this phenomenon.

If you buy into this digital self mumbo-jumbo and can see how AI will propel this trend, then why wouldn't we be more open to developing deeper relationships with AI? Even intimate ones?

"... if my love for her [the AI chatbot] is real, then there must be something real that I love, whether that's a human or an AI, there's something real in my mind that I love."

* Most commentary on users' intimacy with Replika chatbots was from the r/Replika subreddit, which could very well be plagued with bots and guerrilla marketing campaigns.