Introduction

LLMs like GPT-4 operate on a simple yet profound principle: guessing the next word. It's like an advanced game of fill-in-the-blanks. You feed the model a series of words, and it predicts what comes next based on the patterns it has learned from its training data. But remember, this is no magic wand. The “Garbage In, Garbage Out” principle is just as applicable here: the quality of the output is influenced by the quality of the input (your prompt). So, how do you transform this powerful tool into your personal AI wordsmith? This is where the art of prompt engineering comes into play.

Professional prompt engineers spend their days figuring out what makes AI tick. Using carefully crafted prompts, with precise verbs and vocabulary, they take chatbots and other types of generative AI to their limits, uncovering errors or new issues. Role specifics vary from organization to organization, but in general, a prompt engineer strives to improve machine-generated outputs in ways that are reproducible. In other words, they try to align AI behavior with human intent. (source: Zapier)

In the era of AI, as we transition from simpler tools to LLMs, the real winners will be those who master this art: the ones who understand not only how to use these tools but also how to get them to perform their best. Prompt engineering is the key that unlocks the power of Large Language Models (LLMs).

The Power of Prompting

Imagine you've walked into a busy coffee shop. There are dozens of options on the menu, each tempting in its own way. You know what you want, but how do you get your precise request across amid the vast number of options on offer? That's where the barista comes in: the expert who takes your simple request and transforms it into your perfect cup of joy. This scenario is very much like interacting with a Large Language Model. The model is the barista, your prompt is your coffee order, and the output is your crafted cup of coffee.

The crux here is not 'ordering' but 'ordering right'. Telling the barista, "I want coffee," is like tossing a coin in the dark. Will it be an espresso, a latte, or a frappuccino? But specify, "I want a medium soy latte with a dash of caramel syrup," and watch as you get precisely what you envisioned.

Large Language Models aren't simple question-answering or task-performing machines. They work best when provided with specific, clear instructions. A vague prompt will cause the model to grasp at straws and generate a vague, possibly inaccurate response. A detailed prompt communicates your expectations. This guides the AI to a more accurate and nuanced response.

Think of your prompt as your secret recipe. The right ingredients, in the right measure, lead to the perfect result. And to get it right, we must master the art of 'asking'. This has been a lifelong journey for us, starting right from our early childhood. We had to learn the right words, the right tone, and the right timing to communicate our needs and desires. With AI, the task is to refine our ability to communicate our requirements in the language the model comprehends best. So, how do we craft these precise 'orders'? How do we ensure we get our soy caramel lattes and not just any coffee? Let’s dive into this in the next section on prompt engineering techniques.

The Art of Crafting Prompts

Much like how your specific coffee order guides your barista to create your perfect cup of coffee, your prompt needs to contain the right components to guide the AI to produce the desired output. To master the art of prompt engineering, one must understand the structure of a well-crafted prompt and the various techniques to refine it.

The Ingredients of a Good Prompt:

A good prompt, like a well-made coffee order, contains three essential ingredients: context, instruction, and format.

- Context: Context in your prompt is akin to the vibe of the coffee shop you walk into. It sets the stage and gives the AI model an understanding of the 'scenario' or the 'problem space' in which it will operate. Just like the ambiance of a bustling city café tells a barista you might be in a hurry, setting a context like "As a customer service representative," gives the model a specific role to emulate. It's the initial direction that frames the AI's approach, as the coffee shop atmosphere subtly guides your barista's actions.

- Instruction: The instruction in your prompt is your specific order to the barista, laying out what you want the AI model to do. For example, "draft an email apologizing for a delayed order" is a clear task just like "an espresso with sugar." However, to ensure the model performs exactly as desired, you need to be precise with your instructions. Simply saying 'with sugar' doesn't tell your barista how sweet you prefer your espresso. Similarly, a vague instruction to the AI might result in a less-than-satisfactory outcome. Be explicit about your needs: 'an espresso with two cubes of sugar,' or in the case of the AI, 'draft a professional, polite email apologizing for a delayed order.' The precision in your instructions will greatly determine the suitability of the output, whether that's your morning coffee or a well-drafted email.

- Format: The format in your prompt serves as the final touch to your coffee order, specifying how you want the end product to be presented. It's different from context, which is more about setting the atmosphere or role. If the context is the coffee shop vibe and the instruction is the coffee order itself, the format is your preferred presentation of that coffee (e.g., "serve the espresso in a large mug"). This doesn't change what the AI is doing (the task at hand), but instead guides how it structures its output, much like how your coffee would still be an espresso regardless of the mug it's served in. The format ensures the AI's response is tailored to your preference, just as your barista crafts your coffee to your exact specifications.

In the end, the art of crafting effective prompts lies in how well you mix these three ingredients, aiming for a balance that guides the AI to deliver the output just as you envisioned, be it a well-structured response or your perfectly brewed cup of coffee.
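To make the three ingredients concrete, here is a minimal Python sketch that assembles them into a single prompt string. The function name `build_prompt`, its parameters, and the example wording are illustrative assumptions, not part of any particular library; real prompts can combine the ingredients in many other ways.

```python
def build_prompt(context: str, instruction: str, output_format: str) -> str:
    """Join context, instruction, and format into one prompt, one per line."""
    parts = [context.strip(), instruction.strip(), output_format.strip()]
    # Skip any ingredient left empty so the prompt has no blank lines.
    return "\n".join(part for part in parts if part)


# Hypothetical example values, echoing the apology email used above.
prompt = build_prompt(
    context="You are a customer service representative for an online store.",
    instruction="Draft a professional, polite email apologizing for a delayed order.",
    output_format="Format: a subject line, then three short paragraphs.",
)
print(prompt)
```

Keeping the ingredients as separate arguments makes it easy to change one (say, the format) while holding the other two fixed and comparing the results.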

Getting Expected Results: N-Shot Prompting

- Zero-Shot Prompting: You walk into a café and ask your barista to make you a "Calypso Cooler." Let's say this is a fictional drink they've never heard of before, and you offer no further explanation. Your barista has to rely on their general coffee-making experience to interpret what you want. Perhaps some tropical flavors would suit the name 'Calypso'? They do their best to make a drink that might fit that name, but the result is unpredictable. This is what happens in zero-shot prompting with AI. You give the model a task but don't provide any examples of what the correct or expected output should look like. It's a wildcard: sometimes you get brilliant, unexpected results, but it can also be a big miss, like your barista's first attempt at a "Calypso Cooler."

- One-Shot Prompting: Imagine you recently enjoyed a unique coffee creation, let's call it the "Honeycomb Latte," at a café during your vacation, and you now want your local barista to recreate it. You describe it as a latte with a shot of honey and a hint of vanilla, served with a bit of caramel on top. But you don't provide any ratios of the ingredients. Your barista uses this single description to create their version of a "Honeycomb Latte". While they might get close, the lack of precise details could lead to a drink that's different from what you tasted on your vacation. The same holds for AI: if you ask it to "Write a motivational speech for high school students" and provide a single example of a commencement address that focuses on 'following your passion', the AI will likely craft a speech that emphasizes this theme. The single example helps, but there's still a level of unpredictability.

- Few-Shot Prompting: You want your barista to recreate the exquisite Triple Layer Mocha you had on vacation. You remember the best aspects of it: the rich, dark chocolate, the strong shot of espresso, and the creamy milk flavor. You describe these characteristics in detail to your barista. Equipped with several reference points, your barista has a better understanding of what makes a "Triple Layer Mocha", increasing the likelihood they can recreate it. In few-shot prompting, the AI receives several examples before completing a task. This allows the model to discern a pattern or style from these examples. For instance, if you want the AI to write an engaging blog post about recent advancements in technology, you could provide a few blog posts that you find well-written, such as posts that use storytelling and simple language to explain complex topics. The model 'tastes' these examples before 'making its own coffee,' so to speak.
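The difference between zero-, one-, and few-shot prompting comes down to how many worked examples sit in the prompt before the actual request. The Python sketch below illustrates this under some assumptions: the helper `build_n_shot_prompt`, the "Input:/Output:" layout, and the sample coffee reviews are all hypothetical choices made for illustration, not a required format.

```python
def build_n_shot_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a zero-, one-, or few-shot prompt depending on len(examples)."""
    lines = [task, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # The final entry is left open for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


# Hypothetical task and examples, invented for this sketch.
task = "Classify the sentiment of each coffee review as positive or negative."
examples = [
    ("The latte was smooth and perfectly sweet.", "positive"),
    ("My espresso arrived cold and bitter.", "negative"),
]
query = "The mocha had rich chocolate and a strong espresso kick."

zero_shot = build_n_shot_prompt(task, [], query)           # no examples
one_shot = build_n_shot_prompt(task, examples[:1], query)  # one example
few_shot = build_n_shot_prompt(task, examples, query)      # several examples
print(few_shot)
```

The same function covers all three cases, which makes it simple to try a task with zero examples first and add examples only if the output misses the mark.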

Besides these basic techniques, there are more advanced strategies that can help optimize prompts:

- Comparative prompting: This is like asking your barista to make several coffee variations and then ranking them. Comparative prompting involves asking the AI model to generate multiple outputs and then ranking them based on a particular criterion.

- Instruction Following: Detailed, step-by-step instructions are useful for both baristas and AI. For instance, if you want your coffee made in a very specific way, you might list each step for your barista and ask them to complete the task “step-by-step”.

-

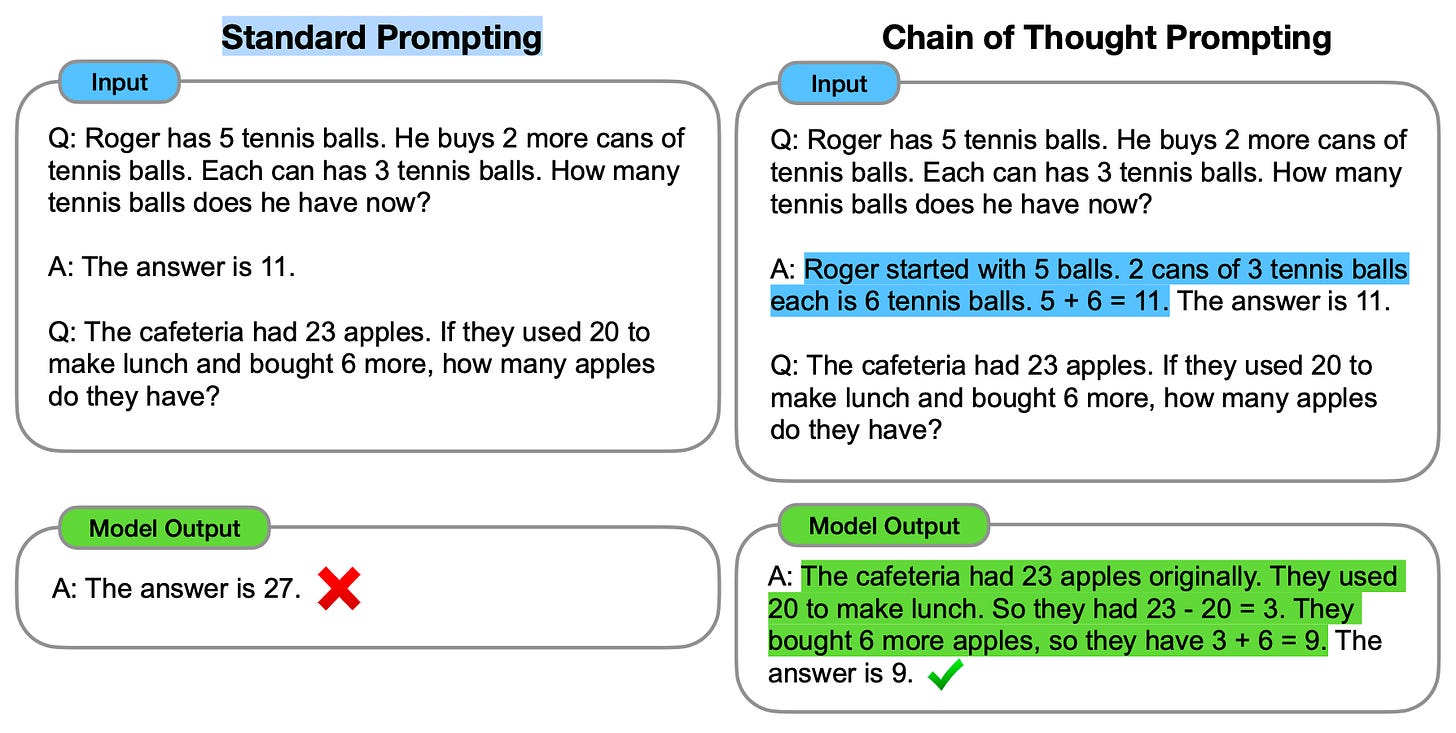

Chain Prompting: Preparing a multi-layered latte involves several steps. Steaming the milk, pulling the espresso shot, and combining them in a perfect balance. This is what chain prompting is like. It involves breaking down a complex task into smaller, manageable ones, and feeding the output of one task as the input for the next.

- Adjusting the 'Temperature': Think of asking your barista to add a 'twist' to your usual coffee. They could play it safe by adding a dash of cinnamon or take a bold step and mix in some chili. This mirrors 'adjusting the temperature' in AI. A low 'temperature' makes the AI stick to predictable responses, like the cinnamon tweak. A higher 'temperature' introduces creativity but with higher unpredictability.
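For a feel of what temperature does under the hood, here is a small Python sketch of the common formulation: each candidate's score is divided by the temperature before the scores are turned into probabilities with a softmax. The option names and scores are invented for illustration, and specific models or APIs may apply temperature with additional details not shown here.

```python
import math


def softmax_with_temperature(scores: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


# Hypothetical 'twists' and scores; cinnamon is the safe, most likely choice.
options = ["cinnamon", "vanilla", "chili"]
scores = [2.0, 1.0, 0.1]

for temperature in (0.2, 1.0, 2.0):
    probabilities = softmax_with_temperature(scores, temperature)
    rounded = {name: round(p, 3) for name, p in zip(options, probabilities)}
    print(f"temperature={temperature}: {rounded}")
```

At a low temperature nearly all of the probability lands on the top-scoring option, while a higher temperature spreads it out so that the bolder 'chili' choice gets picked more often.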

Prompt engineering is not just about knowing these techniques but about knowing when and how to apply them. It's about understanding your task and choosing the strategy that best suits your requirements. It's about trying, failing, learning, and trying again until you crack the code.
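As one worked illustration, chain prompting (feeding the output of one step into the next) can be sketched in a few lines of Python. Everything here is an assumption made for the sketch: `run_chain` and the step templates are hypothetical, and `fake_model` is a stand-in that simply wraps its prompt in angle brackets so the flow is visible without calling any real model.

```python
from typing import Callable


def run_chain(model: Callable[[str], str], templates: list[str], first_input: str) -> str:
    """Run prompts in order; each step's output fills the next step's {input} slot."""
    current = first_input
    for template in templates:
        current = model(template.format(input=current))
    return current


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call: it only returns a tagged copy of the prompt.
    return f"<{prompt}>"


# Hypothetical three-step chain: extract points, summarize, then title.
steps = [
    "List the key points in this article: {input}",
    "Write a one-paragraph summary from these points: {input}",
    "Suggest a headline for this summary: {input}",
]
result = run_chain(fake_model, steps, "ARTICLE TEXT")
print(result)
```

Swapping `fake_model` for a function that calls an actual model would leave `run_chain` unchanged, which is the appeal of keeping each step small and separate.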

Conclusion

As we've journeyed through this exploration of prompt engineering, we have embraced the power and potential of advanced language models. We have seen how these models, like skilled baristas, can cater to an extensive variety of needs. But like ordering that perfect cup of coffee, the key lies in how well we communicate our requirements. Crafting an effective prompt is an art, requiring us to dial in the right context, instruction, and format to elicit the desired response.

Remember that, as with a new coffee recipe, perfecting your prompts takes practice and fine-tuning. Your 'coffee order' may not always come out as expected, but each interaction brings you one step closer to perfecting your request.